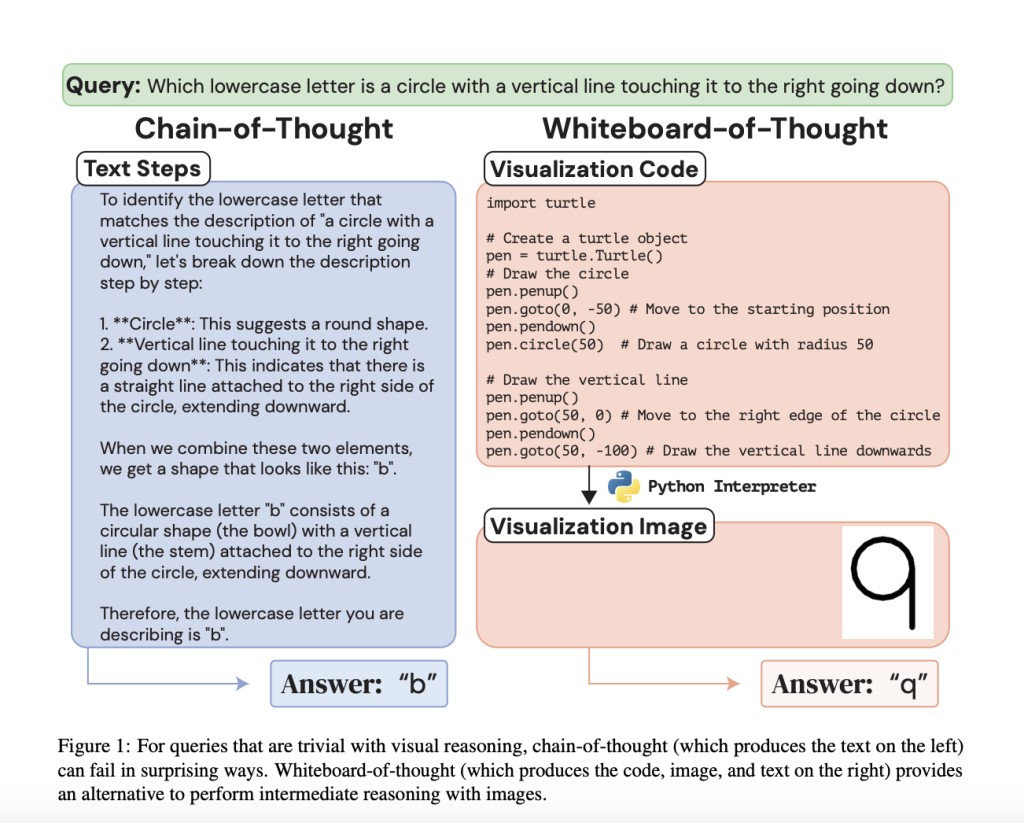

Large language models (LLMs) have transformed natural language processing (NLP), demonstrating that scaling up parameters and training data improves performance on a range of reasoning tasks. One successful method, chain-of-thought (CoT) prompting, helps language models solve complex problems, such as arithmetic and symbolic reasoning, by writing out intermediate steps as text before giving the final answer. This raises an important question: can LLMs tackle tasks that humans solve using visual thinking? Research shows that even the best LLMs perform poorly on tasks involving visual and spatial reasoning.

Before addressing these shortcomings, the paper reviews several lines of related work. The first is intermediate reasoning for language models: the success of CoT on arithmetic and symbolic reasoning tasks has attracted interest from the NLP community and beyond. The second is tool usage and code augmentation, which the paper compares to using a whiteboard: a language model is given additional computation, for example a text buffer trained on Python execution traces. The third is visual and spatial reasoning in LLMs and MLLMs, where these models have shown limited success on tasks that require such reasoning. Whether they can transfer knowledge from text to other modalities, such as vision, is still debated.

Researchers from Columbia University have proposed Whiteboard-of-Thought (WoT) prompting, a simple approach to enhance the visual reasoning abilities of multimodal large language models (MLLMs) across modalities. WoT prompting gives MLLMs a metaphorical ‘whiteboard’ on which they can draw out reasoning steps as images, which are then returned to the model for further processing. The method requires no demonstrations or specialized modules; instead, it relies on the models’ existing ability to write code with libraries such as Matplotlib and Turtle. This simple method achieves state-of-the-art results on four difficult natural language tasks that require visual and spatial reasoning.
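The draw-then-look loop described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not the authors' implementation: the callables `text_to_code` and `answer_from_image` stand in for whatever MLLM API is used, and the prompt text `DRAW_INSTRUCTION` and the `OUTPUT_PATH` convention are assumptions made for this sketch rather than the paper's actual prompt.

```python
import os
import tempfile
from typing import Callable

# Hypothetical model interfaces (not from the paper or any real SDK):
#   text_to_code(prompt) -> Python source that draws a picture and saves it to OUTPUT_PATH
#   answer_from_image(query, image_bytes) -> final answer string
TextToCode = Callable[[str], str]
AnswerFromImage = Callable[[str, bytes], str]

# Illustrative prompt wording; the paper's real prompt may differ.
DRAW_INSTRUCTION = (
    "You have a whiteboard. Write Python code (e.g. with Matplotlib or Turtle) "
    "that draws a visualization helpful for answering the query, and save the "
    "image to the path stored in the variable OUTPUT_PATH.\n\nQuery: "
)


def render_whiteboard(code: str) -> bytes:
    """Execute model-written drawing code and return the image file it saved."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "whiteboard.png")
        # WARNING: exec runs untrusted model output; sandbox this in real use.
        exec(code, {"OUTPUT_PATH": path})
        with open(path, "rb") as f:
            return f.read()


def whiteboard_of_thought(query: str, text_to_code: TextToCode,
                          answer_from_image: AnswerFromImage) -> str:
    # Step 1: the model writes drawing code instead of reasoning purely in text.
    code = text_to_code(DRAW_INSTRUCTION + query)
    # Step 2: running that code turns the text output into an actual image.
    image = render_whiteboard(code)
    # Step 3: the image is handed back to the model, which answers the query.
    return answer_from_image(query, image)
```

With a real model, `text_to_code` would return something like Matplotlib code ending in `plt.savefig(OUTPUT_PATH)`. Because `exec` runs model-generated code, a practical setup would need a sandbox; the sketch leaves that out for brevity.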

The main aim of WoT is to give MLLMs the ability to create images and process them visually to answer queries better. Current MLLMs usually cannot natively produce outputs in the visual domain, so the researchers showed how to create visuals using a model that only generates text. The images created for visual reasoning are minimal, abstract, and symbolic, and they are produced naturally through code. Moreover, the researchers found several scenarios in which GPT-4o fails badly with chain-of-thought, in some cases achieving 0% accuracy, whereas WoT reaches up to 92% accuracy in the same scenarios.
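Because a text-only model can only "draw" by emitting code, a wrapper has to pull that code out of the model's reply before running it. The sketch below assumes, purely for illustration, that the model returns its drawing code inside a Markdown code fence; the function `extract_code` and this reply format are hypothetical and not taken from the paper.

```python
import re
from typing import Optional

# Built at runtime so the three backticks do not end this example's own fence.
FENCE = "`" * 3

# Opening fence, an optional language tag up to the end of that line, then the
# body (captured lazily) up to the next closing fence.
_CODE_BLOCK = re.compile(FENCE + r"[^\n]*\n(.*?)" + FENCE, re.DOTALL)


def extract_code(reply: str) -> Optional[str]:
    """Return the body of the first fenced code block in a model reply, or None."""
    match = _CODE_BLOCK.search(reply)
    return match.group(1) if match else None
```

Only the first fenced block is used; how to handle a reply with no code (for example, by prompting again) is left open here.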

The researchers' experiments show that LLMs reasoning in text perform best in a 2D grid setting but can perform poorly in other geometries. This may be because grid settings are:

- easier to represent as coordinates in text, especially in the form of a simple square; and
- more common in online text, such as tabular data, city grids, and 2D maze coding problems.
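To make the first point concrete, here is a small illustrative sketch (not from the paper) of why square grids are convenient in text: each character of a row-per-line map corresponds directly to a `(row, col)` coordinate, and moving to an adjacent cell is a ±1 change in one coordinate. The map `GRID` and the helpers `parse_grid` and `neighbors` are hypothetical examples.

```python
from typing import Dict, Iterator, Tuple

Cell = Tuple[int, int]

# A toy maze written one row per line: S = start, G = goal, # = wall, . = open.
GRID = """\
S.#
..#
#.G"""


def parse_grid(text: str) -> Dict[Cell, str]:
    """Map each (row, col) coordinate to the character at that position."""
    return {
        (r, c): ch
        for r, line in enumerate(text.splitlines())
        for c, ch in enumerate(line)
    }


def neighbors(cell: Cell, cells: Dict[Cell, str]) -> Iterator[Cell]:
    """Yield open cells one step away; on a square grid that is +/-1 on one axis."""
    r, c = cell
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        nxt = (r + dr, c + dc)
        # Off-grid positions are treated like walls.
        if cells.get(nxt, "#") != "#":
            yield nxt
```

A non-square geometry would need its own coordinate convention before it could be written out this simply, which is one plausible reading of why text-only reasoning fares worse outside 2D grids.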

Square grids appear frequently in human-written text, and humans also rely on grid cells, neurons in the brain, to navigate physical spaces and map conceptual spaces. This raises interesting questions about how spatial understanding differs between humans and LLMs. WoT, by contrast, performs consistently across geometries, removing the dependence on 2D-grid-specific textual knowledge and highlighting the approach's general applicability.

In conclusion, researchers from Columbia University have introduced WoT, a zero-shot method that enables visual reasoning across modalities in MLLMs. WoT works by having the model generate code that creates a visual and then returning that visual to the model for further reasoning. The paper demonstrates WoT’s capabilities on multiple tasks requiring visual and spatial understanding, which have been difficult for current state-of-the-art models that rely on text-based reasoning. However, WoT depends on accurate vision systems, so future research should aim to improve state-of-the-art MLLMs’ understanding of detailed geometric figures.

Check out the Paper and Project. All credit for this research goes to the researchers of this project.

The post Whiteboard-of-Thought (WoT) Prompting: A Simple AI Approach to Enhance the Visual Reasoning Abilities of MLLMs Across Modalities appeared first on MarkTechPost.
