Large Language Models (LLMs) have revolutionized natural language processing, demonstrating exceptional performance on various benchmarks and finding real-world applications. However, the autoregressive training paradigm underlying these models presents significant challenges. Because tokens are generated one at a time, each conditioned on all previous tokens, generation is slow, which limits the models’ efficiency in high-throughput scenarios. This approach can also lead to exposure bias: the model is trained on ground-truth text but must condition on its own outputs at inference time, which can affect the quality and coherence of generated text. These limitations have prompted researchers to explore alternative approaches that maintain the capabilities of LLMs while addressing their inherent shortcomings.

Researchers have developed various techniques to overcome the sampling challenges and enhance generation speed in LLMs. Efficient implementations have been proposed to optimize model performance, while low-precision inference methods aim to reduce computational requirements. Novel architectures have been designed to improve processing efficiency, and multi-token prediction approaches seek to generate multiple tokens simultaneously. Concurrently, efforts have been made to adapt diffusion models for text generation, offering an alternative to traditional autoregressive methods. These diverse approaches reflect the ongoing quest to overcome the limitations of autoregressive LLMs and achieve faster, more efficient language generation without sacrificing quality or capabilities.

Researchers from CLAIRE examine the strengths of Score Entropy Discrete Diffusion (SEDD) as an alternative to traditional autoregressive generation in language models and identify directions for improvement. SEDD’s key advantage is its ability to flexibly trade quality for computational efficiency, which makes it particularly suitable for applications where a verifier is available. One such scenario is solving hard problems in combinatorics, where faster sampling can compensate for slightly reduced quality.
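To make the verifier argument concrete, here is a minimal sketch of a sample-and-verify loop. It is not taken from the paper: `noisy_sampler`, `verifier`, and the subset-sum problem are hypothetical stand-ins chosen only to show why a fast, imperfect generator can still be useful when wrong answers are cheap to reject.

```python
import random

def noisy_sampler(rng, items):
    """Hypothetical stand-in for a fast, imperfect generator: a random subset."""
    return [x for x in items if rng.random() < 0.5]

def verifier(candidate, target):
    """Cheap exact check: does the candidate subset sum to the target?"""
    return sum(candidate) == target

def sample_until_verified(items, target, max_tries=10_000, seed=0):
    """Draw candidates until the verifier accepts one or the budget runs out."""
    rng = random.Random(seed)
    for attempt in range(1, max_tries + 1):
        candidate = noisy_sampler(rng, items)
        if verifier(candidate, target):
            return candidate, attempt
    return None, max_tries

items = [3, 5, 8, 13, 21, 34]
solution, tries = sample_until_verified(items, target=29)
print(solution, "found after", tries, "samples")
```

In this toy setting, lowering per-sample quality only raises the expected number of tries, so a cheaper sampler can still come out ahead overall.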

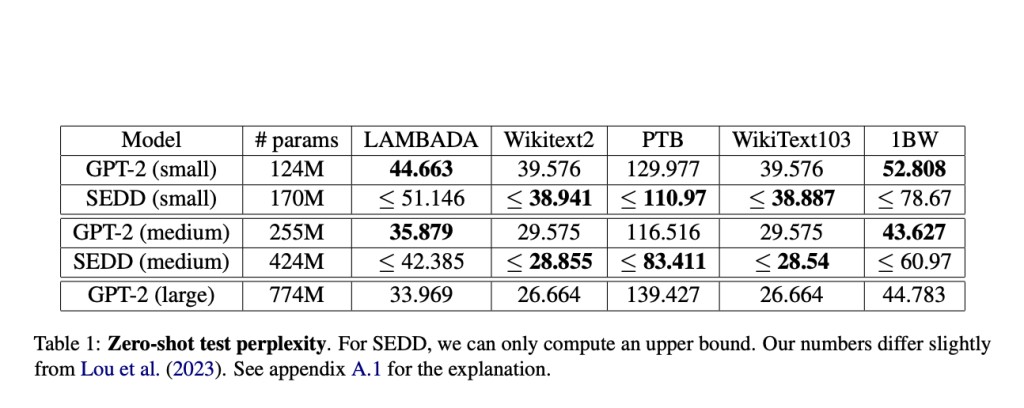

SEDD uses a transformer backbone similar to GPT-2 and is trained on the OpenWebText dataset. Comparative evaluations show that SEDD matches or exceeds GPT-2’s likelihood on several test datasets, including LAMBADA, WikiText2, PTB, WikiText103, and 1BW. SEDD’s sampling process is flexible: it can use fewer steps than the sequence length, and for 1024-token sequences, 32 sampling steps already achieve better perplexity than GPT-2 without annealing. The sampling algorithm is straightforward, making it accessible for further research. Unlike autoregressive models, SEDD generates tokens non-causally and allows a flexible definition of the forward process, which opens possibilities for tasks requiring reasoning over long sequences. Because the architecture is familiar, alternative sequence models such as state-space models could potentially be swapped in, presenting opportunities for further architectural exploration and optimization.
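The idea of using fewer sampling steps than tokens can be illustrated with a toy loop. This is not SEDD’s actual sampler: it is a simplified sketch in the spirit of a masking (absorbing-state) process, and `toy_denoiser` is a hypothetical stand-in for the transformer that proposes random tokens. The only point is that each step makes one model call and fills in many positions at once.

```python
import random

MASK = -1  # placeholder id for a position that has not been generated yet

def toy_denoiser(tokens, vocab_size, rng):
    """Hypothetical stand-in for the network: one call proposes a token
    for every position in parallel (here, simply at random)."""
    return [rng.randrange(vocab_size) for _ in tokens]

def sample(seq_len, num_steps, vocab_size=50, seed=0):
    rng = random.Random(seed)
    tokens = [MASK] * seq_len
    model_calls = 0
    for step in range(num_steps):
        masked = [i for i, t in enumerate(tokens) if t == MASK]
        if not masked:
            break
        proposals = toy_denoiser(tokens, vocab_size, rng)
        model_calls += 1
        # Unmask an even share of what is left; the final step unmasks the rest.
        remaining_steps = num_steps - step
        k = -(-len(masked) // remaining_steps)  # ceiling division
        for i in rng.sample(masked, k):
            tokens[i] = proposals[i]
    return tokens, model_calls

tokens, calls = sample(seq_len=1024, num_steps=32)
print(calls, "model calls for", len(tokens), "tokens")
```

An autoregressive sampler would need one model call per token for the same sequence, that is, 1024 sequential calls instead of 32.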

Comparative evaluations reveal that SEDD matches or surpasses GPT-2 in unconditional generation quality, achieving lower perplexity than GPT-2 without annealing and similar likelihood with 1024 sampling steps. In conditional generation, SEDD scores slightly lower on the MAUVE metric but shows comparable accuracy on downstream tasks, and it appears slightly weaker than GPT-2 when prompts are short. Diversity assessments indicate that SEDD is less diverse than GPT-2, with an unexpected increase in repetition rate and a decrease in unigram entropy as the number of sampling steps increases. These results suggest that while SEDD offers competitive performance in many areas, there is room for improvement in diversity and conditional generation, particularly with shorter prompts.
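For readers unfamiliar with the diversity measures, the sketch below shows one simple way to compute them. The article does not give the exact definitions used in the study, so both formulas here are assumptions: unigram entropy is taken as the Shannon entropy (in bits) of the token frequency distribution, and repetition rate as the fraction of tokens that duplicate an earlier token in the same text.

```python
import math
from collections import Counter

def unigram_entropy(tokens):
    """Shannon entropy in bits of the empirical token distribution."""
    if not tokens:
        raise ValueError("tokens must not be empty")
    total = len(tokens)
    counts = Counter(tokens)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

def repetition_rate(tokens):
    """Assumed definition: share of tokens that repeat an earlier token."""
    if not tokens:
        raise ValueError("tokens must not be empty")
    return 1 - len(set(tokens)) / len(tokens)

diverse = "the cat sat on a mat near the door".split()
repetitive = "the cat the cat the cat the cat the".split()

for name, text in [("diverse", diverse), ("repetitive", repetitive)]:
    print(name, round(unigram_entropy(text), 3), round(repetition_rate(text), 3))
```

Under these definitions, less diverse text shows up as lower entropy and a higher repetition rate, which is the direction of the trend reported for SEDD as sampling steps increase.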

In this study, the researchers argue that diffusion models for text are a relevant alternative to autoregressive generation. SEDD exemplifies this, offering generation quality comparable to GPT-2 with greater sampling flexibility. While SEDD demonstrates promising results, challenges remain, particularly in sampling efficiency. Matching the unconditional text quality of GPT-2 with nucleus sampling requires significantly more steps, resulting in slower generation than GPT-2 with KV-caching.
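A rough back-of-the-envelope count helps explain why more steps make diffusion sampling slower than GPT-2 with KV-caching. The model below is a deliberate simplification and an assumption of this sketch, not a measurement from the paper: it counts sequential model calls and token positions processed, and ignores attention over cached keys, batching, and hardware effects. The step counts are illustrative.

```python
def autoregressive_cost(seq_len):
    """With KV-caching, each call processes only the newest token."""
    return {"sequential_calls": seq_len, "positions_processed": seq_len}

def diffusion_cost(seq_len, num_steps):
    """Each denoising step runs the network over the full sequence."""
    return {
        "sequential_calls": num_steps,
        "positions_processed": num_steps * seq_len,
    }

seq_len = 1024
ar = autoregressive_cost(seq_len)
few_steps = diffusion_cost(seq_len, num_steps=32)
many_steps = diffusion_cost(seq_len, num_steps=1024)

print("autoregressive:", ar)
print("diffusion, 32 steps:", few_steps)
print("diffusion, 1024 steps:", many_steps)
```

In this simplified count, a small number of steps means far fewer sequential calls than autoregressive decoding, but once the step count approaches the sequence length, the diffusion sampler makes as many calls while processing the whole sequence each time.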

Check out the Paper. All credit for this research goes to the researchers of this project.

The post The Rise of Diffusion-Based Language Models: Comparing SEDD and GPT-2 appeared first on MarkTechPost.
