BigCode, a leading group developing large language models (LLMs) for code, has announced the release of BigCodeBench, a new benchmark designed to rigorously evaluate LLMs’ programming capabilities on practical and challenging tasks.

Addressing Limitations in Current Benchmarks

Existing benchmarks like HumanEval have been pivotal in evaluating LLMs on code generation tasks, but they face criticism for their simplicity and lack of real-world applicability. HumanEval focuses on compact, function-level code snippets and so fails to represent the complexity and diversity of real-world programming tasks. Additionally, data contamination and overfitting make such benchmarks less reliable for assessing how well LLMs generalize.

Introducing BigCodeBench

BigCodeBench was developed to fill this gap. It contains 1,140 function-level tasks that challenge LLMs to follow user-oriented instructions and compose multiple function calls from 139 diverse libraries. Each task is designed to mimic a real-world scenario and requires complex reasoning and problem-solving skills. Each task is validated by an average of 5.6 test cases, which together reach an average branch coverage of 99% to ensure thorough evaluation.
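To make the shape of such a task concrete, here is a minimal sketch of a function-level task that composes calls from several libraries and is checked by a handful of unit tests. It is a hypothetical example written for illustration, not an actual BigCodeBench task: the names `task_func` and `TestCases`, the task itself, and the use of standard-library modules only are all assumptions.

```python
import json
import re
import unittest
from collections import Counter


def task_func(text: str, top_n: int = 3) -> str:
    """Return a JSON string mapping the top_n most frequent words to their counts.

    Hypothetical task for illustration only. It combines three libraries:
    `re` to tokenize, `collections.Counter` to count, `json` to serialize.
    """
    if top_n < 0:
        raise ValueError("top_n must be non-negative")
    # Lowercase the text and extract runs of letters (and apostrophes) as words.
    words = re.findall(r"[a-z']+", text.lower())
    most_common = Counter(words).most_common(top_n)
    return json.dumps(dict(most_common))


class TestCases(unittest.TestCase):
    """Several small tests, each exercising a different branch or behavior."""

    def test_basic_counts(self):
        result = json.loads(task_func("a b a c a b", top_n=2))
        self.assertEqual(result, {"a": 3, "b": 2})

    def test_case_insensitive(self):
        result = json.loads(task_func("Hello hello HELLO", top_n=1))
        self.assertEqual(result, {"hello": 3})

    def test_empty_text(self):
        self.assertEqual(json.loads(task_func("")), {})

    def test_negative_top_n(self):
        with self.assertRaises(ValueError):
            task_func("a", top_n=-1)


def run_tests() -> bool:
    """Run the test cases and report whether every one passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestCases)
    return unittest.TextTestRunner(verbosity=0).run(suite).wasSuccessful()
```

A generated solution would count as correct only if every test passes, which is why the tests here cover the error branch and the empty-input case as well as the common path.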

Components and Capabilities

BigCodeBench is divided into two main components: BigCodeBench-Complete and BigCodeBench-Instruct. BigCodeBench-Complete focuses on code completion, where LLMs must finish implementing a function based on detailed docstring instructions. This tests the models’ ability to generate functional and correct code snippets from partial information.

BigCodeBench-Instruct, on the other hand, is designed to evaluate instruction-tuned LLMs that follow natural-language instructions. This component presents a more conversational approach to task descriptions, reflecting how real users might interact with these models in practical applications.
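The difference between the two components can be sketched as two ways of framing the same task for a model. The helper names, the prompt wording, and the example task below are all hypothetical; the benchmark's real prompt templates may differ.

```python
def build_complete_prompt(signature: str, docstring: str) -> str:
    """Completion style: the model sees a function header plus a detailed
    docstring and is expected to continue with the function body."""
    indented = "\n".join("    " + line for line in docstring.splitlines())
    return f'{signature}\n    """\n{indented}\n    """\n'


def build_instruct_prompt(description: str, signature: str) -> str:
    """Instruction style: the model sees a short natural-language request
    and is expected to write the whole function itself."""
    return (
        f"{description}\n"
        "Write self-contained code that starts with:\n"
        f"{signature}\n"
    )


# Hypothetical task used only to show both framings side by side.
SIGNATURE = "def task_func(text, top_n=3):"
DOCSTRING = (
    "Count word frequencies in `text` and return a JSON string\n"
    "with the `top_n` most frequent words and their counts."
)
DESCRIPTION = (
    "Count how often each word appears in a text and return the most "
    "frequent ones as a JSON string."
)

complete_prompt = build_complete_prompt(SIGNATURE, DOCSTRING)
instruct_prompt = build_instruct_prompt(DESCRIPTION, SIGNATURE)
```

In this sketch the completion prompt ends inside the function, right after the docstring, while the instruction prompt reads like a request a user might type.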

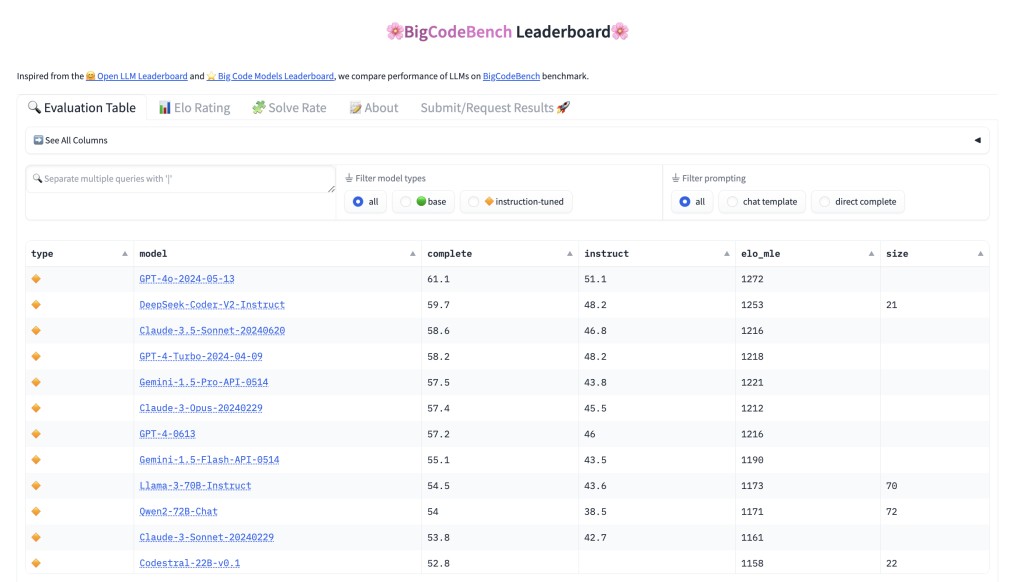

Evaluation Framework and Leaderboard

To facilitate the evaluation process, BigCode has provided a user-friendly framework accessible via PyPI, with detailed setup instructions and pre-built Docker images for code generation and execution. The performance of models on BigCodeBench is measured using calibrated Pass@1, a metric that reports the percentage of tasks solved correctly on the first attempt. This metric is complemented by an Elo rating system, similar to that used in chess, which ranks models against one another based on their performance across tasks.
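The two measures can be illustrated with a short sketch. It computes plain Pass@1 as the fraction of tasks solved on the first attempt and applies the textbook pairwise Elo update; it does not reproduce the calibration step or the exact rating procedure used by the leaderboard, which may differ. The function names and the K-factor of 32 are assumptions made for this example.

```python
from typing import Sequence, Tuple


def pass_at_1(results: Sequence[bool]) -> float:
    """Fraction of tasks whose first generated solution passed all tests."""
    if not results:
        raise ValueError("results must not be empty")
    return sum(results) / len(results)


def expected_score(rating_a: float, rating_b: float) -> float:
    """Standard Elo expectation that A beats B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def elo_update(
    rating_a: float, rating_b: float, score_a: float, k: float = 32.0
) -> Tuple[float, float]:
    """Update both ratings after one comparison.

    score_a is 1.0 if A wins, 0.5 for a draw, 0.0 if A loses.
    """
    exp_a = expected_score(rating_a, rating_b)
    new_a = rating_a + k * (score_a - exp_a)
    new_b = rating_b + k * ((1.0 - score_a) - (1.0 - exp_a))
    return new_a, new_b


def rate_models(
    results_a: Sequence[bool], results_b: Sequence[bool], start: float = 1000.0
) -> Tuple[float, float]:
    """Treat each task as one match: a model wins a task if it passes and
    the other fails; otherwise the task counts as a draw."""
    rating_a, rating_b = start, start
    for a_ok, b_ok in zip(results_a, results_b):
        score_a = 0.5 if a_ok == b_ok else (1.0 if a_ok else 0.0)
        rating_a, rating_b = elo_update(rating_a, rating_b, score_a)
    return rating_a, rating_b
```

For example, `pass_at_1([True, False, True, True])` gives 0.75, and a single win between two models rated 1000 moves them to 1016 and 984. Pass@1 scores a model in isolation, whereas the Elo view depends on which tasks a model solves that its competitors do not.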

Community Engagement and Future Developments

BigCode encourages the AI community to engage with BigCodeBench by providing feedback and contributing to its development. All artifacts related to BigCodeBench, including tasks, test cases, and the evaluation framework, are open-sourced and available on platforms like GitHub and Hugging Face. The team at BigCode plans to continually enhance BigCodeBench by addressing multilingual support, increasing the rigor of test cases, and ensuring the benchmark evolves with advancements in programming libraries and tools.

Conclusion

The release of BigCodeBench marks a significant milestone in evaluating LLMs for programming tasks. By providing a comprehensive and challenging benchmark, BigCode aims to push the boundaries of what these models can achieve and, ultimately, to advance the use of AI in software development.

Check out the HF Blog, Leaderboard, and Code. All credit for this research goes to the researchers of this project.

The post Meet BigCodeBench by BigCode: The New Gold Standard for Evaluating Large Language Models on Real-World Coding Tasks appeared first on MarkTechPost.
