LLMs like ChatGPT and Gemini demonstrate impressive reasoning and question-answering capabilities but often produce “hallucinations,” meaning they generate false or unsupported information. This problem hampers their reliability in critical fields, from law to medicine, where inaccuracies can have severe consequences. Efforts to reduce these errors through supervision or reinforcement have seen limited success. A subset of hallucinations, termed “confabulations,” involves LLMs giving arbitrary and incorrect responses to identical queries, such as varying answers to a medical question about Sotorasib. This issue is distinct from errors caused by training on faulty data or by systematic reasoning failures. Understanding and addressing these distinct error types is crucial for improving LLM reliability.

Researchers from the OATML group at the University of Oxford have developed a statistical approach to detect a specific type of error in LLMs, known as “confabulations.” These errors occur when LLMs generate arbitrary and incorrect responses, often due to subtle variations in the input or random seed. The new method leverages entropy-based uncertainty estimators, focusing on the meaning rather than the exact wording of responses. By assessing “semantic entropy” (the uncertainty over the meaning of generated answers), this technique can identify when LLMs are likely to produce unreliable outputs. The method does not require knowledge of the specific task or labeled data and is effective across different datasets and applications. It improves LLM reliability by signaling when extra caution is needed, allowing users to avoid or critically evaluate potentially confabulated answers.

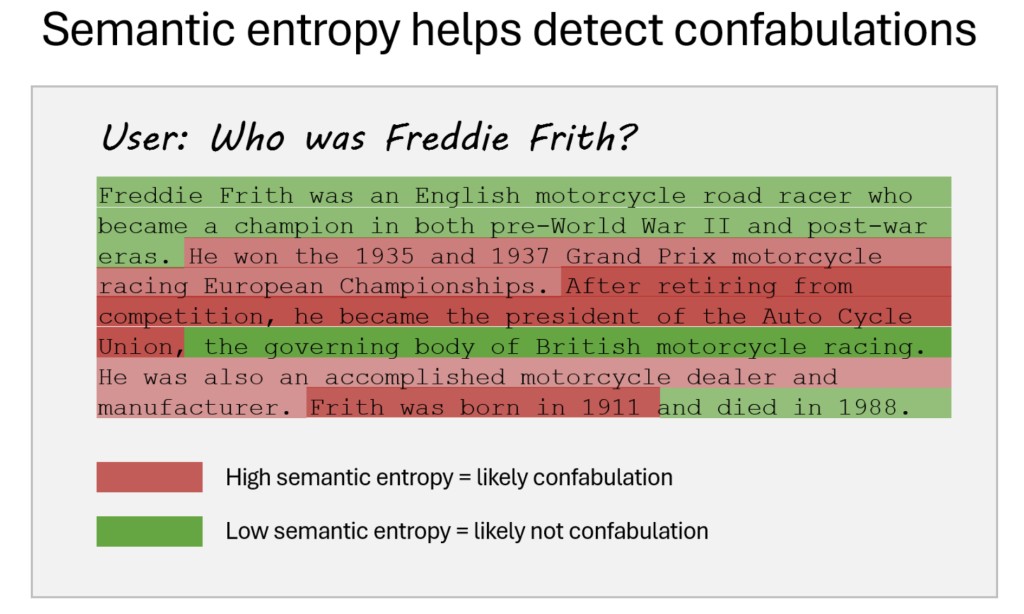

The researchers’ method works by clustering similar answers based on their meaning and measuring the entropy within these clusters. If the entropy is high, the LLM is likely generating confabulated responses. This process enhances the detection of semantic inconsistencies that naive entropy measures, which only consider lexical differences, might miss. The technique has been tested on various LLMs across multiple domains, such as trivia, general knowledge, and medical queries, demonstrating significant improvements in detecting and filtering unreliable answers. Moreover, by refusing to answer questions likely to produce high-entropy (confabulated) responses, the method can enhance the overall accuracy of LLM outputs. This innovation represents a critical advancement in ensuring the reliability of LLMs, particularly in free-form text generation where traditional supervised learning methods fall short.
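To make the contrast with naive entropy concrete, here is a minimal Python sketch. It assumes the meaning of each sampled answer is already known through a hand-written `MEANING` table, which is a hypothetical stand-in for a learned equivalence model. The refusal threshold of `0.5` is likewise an arbitrary illustrative value, not one taken from the paper.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in nats) of the empirical distribution over labels."""
    total = len(labels)
    return sum((c / total) * math.log(total / c) for c in Counter(labels).values())

# Hypothetical lookup that assigns each sampled answer a meaning label.
# A real system would infer equivalence with a model, not a fixed table.
MEANING = {
    "Paris": "paris",
    "It's Paris.": "paris",
    "The capital of France is Paris.": "paris",
    "Lyon": "lyon",
    "Marseille": "marseille",
}

def naive_entropy(answers):
    """Entropy over exact strings: every distinct wording counts as different."""
    return entropy(answers)

def semantic_entropy(answers):
    """Entropy over meaning labels: different wordings of one meaning merge."""
    return entropy([MEANING[a] for a in answers])

def answer_or_refuse(answers, threshold=0.5):
    """Return the most common meaning, or None when semantic entropy is too high."""
    if semantic_entropy(answers) > threshold:
        return None
    return Counter(MEANING[a] for a in answers).most_common(1)[0][0]

consistent = ["Paris", "It's Paris.", "The capital of France is Paris."]
scattered = ["Paris", "Lyon", "Marseille"]

print(naive_entropy(consistent), semantic_entropy(consistent))
print(answer_or_refuse(consistent), answer_or_refuse(scattered))
```

The three paraphrases in `consistent` look maximally uncertain to the naive measure because every string differs, while the semantic measure sees a single meaning. Only `scattered`, where the meanings themselves disagree, triggers a refusal.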

Semantic entropy is a method to detect confabulations in LLMs by measuring their uncertainty over the meaning of generated outputs. This technique leverages predictive entropy and clusters generated sequences by semantic equivalence using bidirectional entailment. It computes semantic entropy based on the probabilities of these clusters, indicating the model’s confidence in its answers. By sampling outputs and clustering them, semantic entropy identifies when a model’s answers are likely arbitrary. This approach helps predict model accuracy, improves reliability by flagging uncertain answers, and gives users a better confidence assessment of model outputs.
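The clustering step described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: `toy_entails` and the `FACTS` table are hypothetical stand-ins for an entailment model, the sampled answers are assumed to be distinct strings, and each answer is assumed to come with a sequence log-probability from the LLM.

```python
import math

# Hypothetical fact sets standing in for what an entailment model would infer.
FACTS = {
    "Paris": {"capital=paris"},
    "It is Paris.": {"capital=paris"},
    "Paris, a city on the Seine": {"capital=paris", "river=seine"},
    "Lyon": {"capital=lyon"},
}

def toy_entails(a, b):
    """Toy rule: a entails b when a asserts every fact that b asserts."""
    return FACTS[b] <= FACTS[a]

def cluster_by_meaning(answers, entails):
    """Group answer indices; two answers share a cluster only if each entails the other."""
    clusters = []
    for i, answer in enumerate(answers):
        for cluster in clusters:
            representative = answers[cluster[0]]
            if entails(answer, representative) and entails(representative, answer):
                cluster.append(i)
                break
        else:
            clusters.append([i])  # no equivalent cluster found, start a new one
    return clusters

def semantic_entropy(answers, log_probs, entails):
    """Entropy (in nats) over clusters, weighting each by its summed sequence probability."""
    clusters = cluster_by_meaning(answers, entails)
    masses = [sum(math.exp(log_probs[i]) for i in cluster) for cluster in clusters]
    total = sum(masses)  # renormalize over the sampled answers only
    return sum((m / total) * math.log(total / m) for m in masses)

answers = ["Paris", "It is Paris.", "Lyon"]
log_probs = [math.log(0.5), math.log(0.3), math.log(0.2)]
print(cluster_by_meaning(answers, toy_entails))
print(semantic_entropy(answers, log_probs, toy_entails))
```

Requiring entailment in both directions matters: in this toy setup, "Paris, a city on the Seine" entails "Paris" but not the reverse, so the two land in separate clusters rather than being treated as the same meaning.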

The study focuses on identifying and mitigating confabulations (erroneous or misleading outputs) generated by LLMs using a metric called “semantic entropy.” This metric evaluates the variability in meaning across different generations of model outputs, distinguishing it from traditional entropy measures that only consider lexical differences. The research shows that semantic entropy, which accounts for consistent meaning despite diverse phrasings, effectively detects when LLMs produce incorrect or misleading responses. Semantic entropy outperformed baseline methods like naive entropy and supervised embedding regression across various datasets and model sizes, including LLaMA, Falcon, and Mistral models, achieving a notable AUROC of 0.790. This suggests that semantic entropy provides a robust mechanism for identifying confabulations, even under distribution shift between training and deployment.
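For readers unfamiliar with the metric, AUROC in this setting can be read as the probability that a wrongly answered question receives a higher entropy score than a correctly answered one. The sketch below computes it directly from that definition. The scores and labels are made-up toy values chosen for illustration and are unrelated to the paper's results.

```python
def auroc(scores, labels):
    """Fraction of (positive, negative) pairs where the positive scores higher.

    labels are truthy for positives (here: the model's answer was wrong).
    Ties between a positive and a negative count as half a win.
    """
    positives = [s for s, y in zip(scores, labels) if y]
    negatives = [s for s, y in zip(scores, labels) if not y]
    if not positives or not negatives:
        raise ValueError("AUROC needs at least one positive and one negative example")
    wins = sum((p > n) + 0.5 * (p == n) for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))

# Toy data (invented): semantic entropy per question and whether the answer was wrong.
entropies = [0.0, 0.1, 0.7, 1.1, 0.2, 0.9]
is_wrong = [0, 0, 1, 1, 1, 0]

print(auroc(entropies, is_wrong))
```

A value of 0.5 would mean the entropy score is no better than chance at ranking wrong answers above right ones, and 1.0 would mean it separates them perfectly.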

Moreover, the study extends the application of semantic entropy to longer text passages, such as biographical paragraphs, by breaking them into factual claims and evaluating the consistency of these claims through rephrasing. This approach demonstrated that semantic entropy could effectively detect confabulations in extended text, outperforming simple self-check mechanisms and adapted probability-based methods. The findings imply that LLMs inherently possess the ability to recognize their knowledge gaps, but traditional evaluation methods may only partially leverage this capacity. Thus, semantic entropy offers a promising direction for improving the reliability of LLM outputs in complex and open-ended tasks, providing a way to assess and manage the uncertainty in their responses.
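A rough sketch of the claim-level idea follows. It assumes the paragraph has already been split into claims, that each claim has been probed with a few rephrased questions, and that the sampled answers have already been reduced to meaning labels. All of these inputs, the placeholder person "X", the averaging over questions, and the `0.6` threshold are illustrative assumptions rather than details confirmed by the article.

```python
import math
from collections import Counter
from statistics import mean

def discrete_entropy(labels):
    """Shannon entropy (in nats) of the empirical distribution over meaning labels."""
    total = len(labels)
    return sum((c / total) * math.log(total / c) for c in Counter(labels).values())

def claim_uncertainty(answers_per_question):
    """Average entropy across the rephrased questions that probe one claim."""
    return mean(discrete_entropy(labels) for labels in answers_per_question)

def flag_uncertain_claims(paragraph_claims, threshold=0.6):
    """Return the claims whose averaged entropy exceeds the threshold."""
    return [
        claim
        for claim, answers_per_question in paragraph_claims.items()
        if claim_uncertainty(answers_per_question) > threshold
    ]

# Invented example: each claim maps to one list of meaning labels per rephrased question.
paragraph_claims = {
    "X was born in 1950": [["1950", "1950", "1950"], ["1950", "1950", "1950"]],
    "X studied in Vienna": [["vienna", "berlin", "prague"], ["vienna", "zurich", "vienna"]],
}

print(flag_uncertain_claims(paragraph_claims))
```

Scoring each claim separately is what lets a long passage be partly trusted: the stable claim passes while the claim whose regenerated answers disagree is flagged.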

Check out the Paper, Project, and GitHub. All credit for this research goes to the researchers of this project.


The post Enhancing LLM Reliability: Detecting Confabulations with Semantic Entropy appeared first on MarkTechPost.
