Language model evaluation is a critical aspect of artificial intelligence research, focusing on assessing the capabilities and performance of models on various tasks. These evaluations help researchers understand the strengths and weaknesses of different models, guiding future development and improvements. One significant challenge in the AI community is the lack of a standardized evaluation framework for LLMs. This lack of standardization leads to inconsistent performance measurements, making it difficult to reproduce results and fairly compare different models. A common evaluation standard is needed to maintain the credibility of scientific claims about AI model performance.

Currently, several efforts such as the HELM benchmark and the Hugging Face Open LLM Leaderboard attempt to standardize evaluations. However, these efforts do not apply a consistent rationale to prompt formatting, normalization techniques, and task formulations. These inconsistencies often result in significant variations in reported performance, complicating fair comparisons.

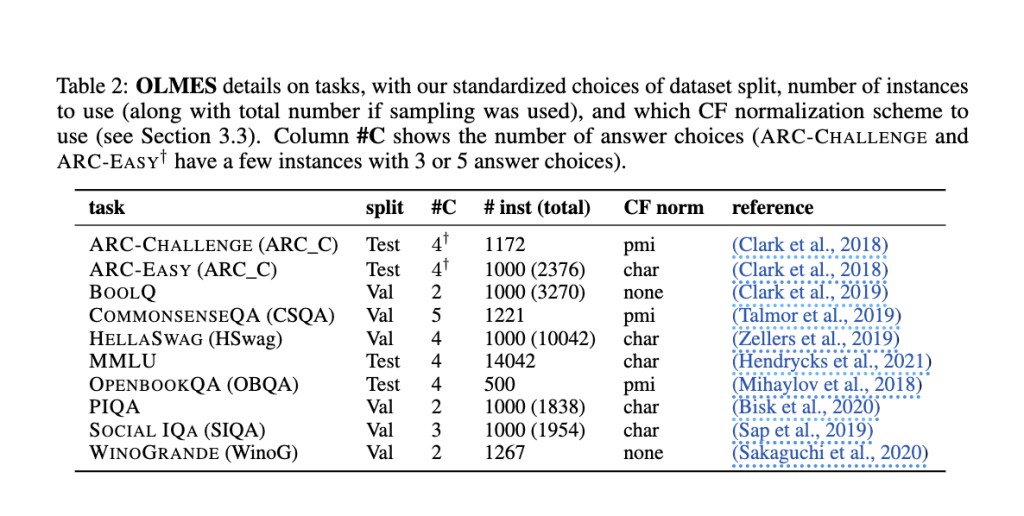

Researchers from the Allen Institute for Artificial Intelligence have introduced OLMES (Open Language Model Evaluation Standard) to address these issues. OLMES aims to provide a comprehensive, practical, and fully documented standard for reproducible LLM evaluations. This standard supports meaningful comparisons across models by removing ambiguities in the evaluation process.

OLMES standardizes the evaluation process by specifying detailed guidelines for dataset processing, prompt formatting, in-context examples, probability normalization, and task formulation. For instance, OLMES recommends using consistent prefixes and suffixes in prompts, such as “Question:” and “Answer:”, to present tasks in a natural way. The standard also involves manually curating five-shot examples for each task, ensuring high-quality, balanced examples that cover the label space effectively. Furthermore, OLMES specifies which normalization method to use for each task; for certain tasks this is pointwise mutual information (PMI) normalization, which adjusts for the inherent likelihood of the answer choices. By addressing these factors, OLMES aims to make the evaluation process transparent and reproducible.
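To make the prompt-formatting guideline concrete, here is a minimal sketch of how a five-shot prompt with “Question:”/“Answer:” framing might be assembled. The `Example` schema, the function names, the blank-line separator, and the placeholder questions are all hypothetical choices for illustration, not the official OLMES implementation or data format.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One multiple-choice item (hypothetical schema, not the official OLMES format)."""
    question: str
    choices: list[str]
    answer_idx: int


def format_item(ex: Example, include_answer: bool) -> str:
    # Every item uses the same "Question:" prefix and "Answer:" suffix.
    text = f"Question: {ex.question}\nAnswer:"
    if include_answer:
        # In-context examples show the gold answer text after "Answer:".
        text += f" {ex.choices[ex.answer_idx]}"
    return text


def build_prompt(shots: list[Example], target: Example) -> str:
    # Curated shots come first, separated by blank lines (an assumed separator);
    # the target item is left open after "Answer:" so each candidate answer
    # can be scored as a continuation.
    parts = [format_item(s, include_answer=True) for s in shots]
    parts.append(format_item(target, include_answer=False))
    return "\n\n".join(parts)


# Five placeholder shots whose gold labels alternate between the two choices,
# loosely mirroring the goal of covering the label space in a balanced way.
shots = [Example(f"Sample question {i}?", ["yes", "no"], i % 2) for i in range(5)]
target = Example("Is the sky blue on a clear day?", ["yes", "no"], 0)
prompt = build_prompt(shots, target)
print(prompt)
```

Keeping the framing identical across every item is the point: a model sees the same surface form in the shots and in the target, so differences between evaluations are less likely to come from ad hoc prompt wording.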

The research team conducted extensive experiments to validate OLMES. They compared multiple models using both the new standard and existing methods, demonstrating that OLMES provides more consistent and reproducible results. For example, Llama2-13B and Llama3-70B showed significantly improved performance when evaluated using OLMES. The experiments revealed that the normalization techniques recommended by OLMES, such as PMI for ARC-Challenge and CommonsenseQA, effectively reduced performance variations. Notably, some models scored up to 25% higher accuracy under OLMES than under other evaluation methods, highlighting the standard’s effectiveness in providing fair comparisons.
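The choice of normalization decides which answer "wins" when a model scores each candidate by log-probability. The sketch below compares four common options (no normalization, per-token, per-character, and PMI) on a single item. All log-probabilities are invented for illustration, the class and function names are hypothetical, and using log P(answer | "Answer:") as the unconditional PMI baseline is an assumption of this sketch.

```python
from dataclasses import dataclass


@dataclass
class Choice:
    text: str              # answer continuation as scored, e.g. " the sun"
    logprob_cond: float    # sum of log P(answer tokens | full prompt)
    logprob_uncond: float  # sum of log P(answer tokens | "Answer:"), assumed PMI baseline
    num_tokens: int        # tokenizer-dependent length of the continuation


def score(c: Choice, method: str) -> float:
    if method == "none":
        return c.logprob_cond                 # raw sum: tends to favor short answers
    if method == "token":
        return c.logprob_cond / c.num_tokens  # average log-prob per token
    if method == "char":
        return c.logprob_cond / len(c.text)   # average log-prob per character
    if method == "pmi":
        # log P(a | q) - log P(a): discounts answers that are likely regardless of the question
        return c.logprob_cond - c.logprob_uncond
    raise ValueError(f"unknown normalization: {method}")


def predict(choices: list[Choice], method: str) -> int:
    # Index of the highest-scoring answer choice under the chosen normalization.
    return max(range(len(choices)), key=lambda i: score(choices[i], method))


# Invented numbers: a short, generically likely answer vs. a longer, specific one.
choices = [
    Choice(" the sun", logprob_cond=-3.0, logprob_uncond=-2.0, num_tokens=2),
    Choice(" photosynthesis", logprob_cond=-4.0, logprob_uncond=-8.0, num_tokens=3),
]
for m in ("none", "token", "char", "pmi"):
    print(m, predict(choices, m))
```

With these invented numbers, the raw sum picks choice 0, while the per-token, per-character, and PMI scores all pick choice 1. This is the kind of sensitivity that motivates fixing the normalization per task rather than leaving it to each evaluator.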

To further illustrate the impact of OLMES, the researchers evaluated popular benchmark tasks such as ARC-Challenge, OpenBookQA, and MMLU. The findings showed that models evaluated using OLMES performed better and exhibited reduced discrepancies in reported performance across different references. For instance, the Llama3-70B model achieved a remarkable 93.7% accuracy on the ARC-Challenge task using the multiple-choice format, compared to only 69.0% with the cloze format. This substantial difference underscores the importance of using standardized evaluation practices to obtain reliable results.
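The 93.7% versus 69.0% gap (24.7 percentage points) comes purely from how the same question is posed. The sketch below contrasts the two formulations: in the cloze format the model scores each full answer string as a continuation, while in the multiple-choice format it sees lettered options and scores only the letter. The function names, the "A." label layout, and the sample question are assumptions made for illustration, not the exact OLMES templates.

```python
import string


def cloze_prompt(question: str) -> str:
    # Cloze formulation (CF): each answer string is scored as a continuation of this prompt.
    return f"Question: {question}\nAnswer:"


def mcf_prompt(question: str, choices: list[str]) -> str:
    # Multiple-choice formulation (MCF): the choices are listed with letter labels,
    # and the model only has to score the answer letter.
    lines = [f"Question: {question}"]
    lines += [f"{label}. {choice}" for label, choice in zip(string.ascii_uppercase, choices)]
    lines.append("Answer:")
    return "\n".join(lines)


def continuations(choices: list[str], formulation: str) -> list[str]:
    # The strings whose log-probabilities are compared after "Answer:".
    if formulation == "cf":
        return [f" {c}" for c in choices]
    if formulation == "mcf":
        return [f" {label}" for label in string.ascii_uppercase[: len(choices)]]
    raise ValueError(f"unknown formulation: {formulation}")


question = "Which gas do plants absorb from the air for photosynthesis?"
choices = ["Oxygen", "Carbon dioxide", "Nitrogen", "Helium"]
print(mcf_prompt(question, choices))
print(continuations(choices, "mcf"))
```

In the cloze format the candidate continuations differ in length and wording, so normalization matters; in the multiple-choice format every candidate is a single letter, but the model must understand the lettered-option convention to benefit from it.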

In conclusion, OLMES tackles the problem of inconsistent evaluations in AI research. The new standard offers a comprehensive solution by standardizing evaluation practices and providing detailed guidelines for all aspects of the evaluation process. Researchers from the Allen Institute for Artificial Intelligence have demonstrated that OLMES improves the reliability of performance measurements and supports meaningful comparisons across different models. By adopting OLMES, the AI community can achieve greater transparency, reproducibility, and fairness in evaluating language models. This advancement is expected to drive further progress in AI research and development, fostering innovation and collaboration among researchers and developers.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post This AI Paper by Allen Institute Researchers Introduces OLMES: Paving the Way for Fair and Reproducible Evaluations in Language Modeling appeared first on MarkTechPost.