Text embeddings (TEs) are low-dimensional vector representations of texts of varying lengths, and they are central to many natural language processing (NLP) tasks. Unlike high-dimensional, sparse representations such as TF-IDF, dense TEs can overcome the lexical mismatch problem (relevant texts that share few or no words) and make text retrieval and matching more efficient. Pre-trained language models such as BERT and GPT have been highly successful across NLP tasks. However, obtaining high-quality sentence embeddings directly from these models is difficult, because pre-training objectives such as masked language modeling produce anisotropic embedding spaces.
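To make the lexical mismatch point concrete, the sketch below compares a sparse bag-of-words vector with dense vectors for two paraphrases that share no words. The dense vectors are hypothetical toy values standing in for the output of a trained encoder; the helper names are illustrative. TF-IDF weighting is omitted because it would not change the sparse result: with no shared terms, every product in the dot product is zero.

```python
import math
from collections import Counter


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def bag_of_words(text, vocab):
    """Sparse term-count vector over a fixed vocabulary (no TF-IDF weighting)."""
    counts = Counter(text.lower().split())
    return [counts[term] for term in vocab]


query = "car repair"
doc = "automobile maintenance"
vocab = sorted(set(query.split()) | set(doc.split()))

# The two texts share no terms, so the sparse similarity is exactly zero.
sparse_score = cosine(bag_of_words(query, vocab), bag_of_words(doc, vocab))

# Hypothetical 4-d dense embeddings: a trained encoder is expected to place
# paraphrases like these close together in vector space.
dense_query = [0.8, 0.1, 0.5, 0.2]
dense_doc = [0.7, 0.2, 0.6, 0.1]
dense_score = cosine(dense_query, dense_doc)

print(f"sparse: {sparse_score:.2f}, dense: {dense_score:.2f}")
```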

TEs are usually evaluated on a small number of datasets from a single task, which says little about how well they transfer to other tasks. It is unclear whether state-of-the-art embeddings for semantic textual similarity (STS) also perform well on tasks such as clustering or reranking, which makes progress hard to track. To address this, the Massive Text Embedding Benchmark (MTEB) was introduced, covering 8 embedding tasks, 58 datasets, and 112 languages. Benchmarking 33 models on MTEB, the most comprehensive evaluation of TEs to date, showed that no single TE method performs best across all tasks. In other words, a universal TE method that achieves state-of-the-art results on every task has yet to be found.
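The "no single winner" finding amounts to a simple check over a results table. The sketch below is illustrative only: the function names are hypothetical, and the overall score is computed as an unweighted mean over datasets, which is our understanding of how the MTEB leaderboard's average column is formed.

```python
from statistics import mean


def overall_score(scores_by_dataset):
    """Unweighted mean of per-dataset scores, as in an MTEB-style overall average."""
    return mean(scores_by_dataset.values())


def best_model_per_task(results):
    """Map each task to the model with the highest score on it.

    results: {model_name: {task_name: score}}, every model scored on the same tasks.
    """
    tasks = next(iter(results.values())).keys()
    return {task: max(results, key=lambda model: results[model][task]) for task in tasks}


def has_universal_winner(results):
    """True only if one model is best on every task."""
    return len(set(best_model_per_task(results).values())) == 1
```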

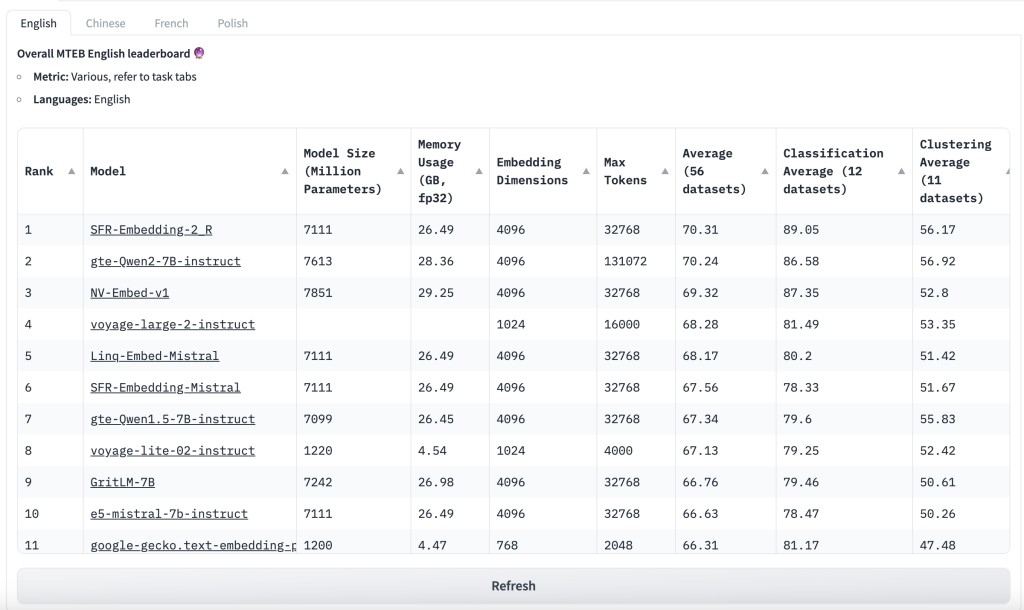

Alibaba researchers have released a new embedding model, gte-Qwen2-7B-instruct, the successor to their earlier gte-Qwen1.5-7B-instruct model. The main change is the base model: the new embedder is built on Qwen2-7B instead of Qwen1.5-7B, which highlights the improvements in Qwen2-7B. Performance rose substantially: the overall MTEB score improved from 67.34 to 70.24, and the Retrieval score (nDCG@10) on the MTEB leaderboard went from 57.91 to 60.25. With 7B parameters, the model is very large for an embedding model, and it supports a maximum sequence length of 32k input tokens. It is also integrated with Sentence Transformers, which makes it compatible with tools such as LangChain, LlamaIndex, and Haystack.
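The Sentence Transformers integration means the model can be loaded and queried in a few lines of Python. The sketch below rests on assumptions that are not from this article: the Hugging Face model ID `Alibaba-NLP/gte-Qwen2-7B-instruct`, a `query` prompt stored in the model's Sentence Transformers configuration, and an 8,192-token cap chosen to limit memory use. The helper names `embed_with_gte` and `rank_documents` are hypothetical. The heavy import sits inside the function so the ranking helper can be used without downloading the 7B weights.

```python
import math


def rank_documents(query_vec, doc_vecs):
    """Return document indices sorted by cosine similarity to the query, best first."""
    def cos(u, v):
        dot = sum(a * b for a, b in zip(u, v))
        return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

    scores = [cos(query_vec, d) for d in doc_vecs]
    return sorted(range(len(doc_vecs)), key=lambda i: scores[i], reverse=True)


def embed_with_gte(queries, documents, max_seq_length=8192):
    """Encode queries and documents with gte-Qwen2-7B-instruct (hypothetical helper).

    Assumes the model ID below and a "query" prompt defined in the model's
    Sentence Transformers config. Downloads ~7B parameters; a large GPU is
    effectively required.
    """
    # Imported lazily: sentence-transformers and the model weights are heavy.
    from sentence_transformers import SentenceTransformer

    model = SentenceTransformer("Alibaba-NLP/gte-Qwen2-7B-instruct", trust_remote_code=True)
    # The model accepts up to 32k tokens; a lower cap keeps memory manageable.
    model.max_seq_length = max_seq_length
    # Only queries receive the instruction prompt; documents are encoded as-is.
    query_emb = model.encode(queries, prompt_name="query", normalize_embeddings=True)
    doc_emb = model.encode(documents, normalize_embeddings=True)
    return query_emb.tolist(), doc_emb.tolist()
```

For example, `q, d = embed_with_gte(["what is MTEB?"], ["MTEB is a text embedding benchmark."])` followed by `rank_documents(q[0], d)` would return document indices with the best match first. Since the embeddings are normalized, cosine similarity here equals the plain dot product.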

gte-Qwen2-7B-instruct is the latest model in the General Text Embedding (gte) family. As of June 21, 2024, it ranks 2nd in both the English and Chinese evaluations of MTEB. It is trained from Qwen2-7B, an LLM from the Qwen2 series recently released by the Qwen team, and it uses the same training data and strategies as gte-Qwen1.5-7B-instruct, differing only in the updated base model. Given the improvements of the Qwen2 series over the Qwen1.5 series, consistent performance gains are expected in the embedding model as well.

gte-Qwen2-7B-instruct has several key features:

Bidirectional attention mechanisms enhance the model's contextual understanding.
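A decoder LLM such as Qwen2 normally uses a causal mask, so each token attends only to itself and earlier tokens; bidirectional attention lets every token see the whole input. The sketch below (hypothetical helper names, pure Python rather than a real model) shows how the mask changes the attention weights: under the causal mask the first token can only attend to itself.

```python
import math


def attention_mask(n, bidirectional):
    """mask[i][j] is True when token i may attend to token j."""
    return [[bidirectional or j <= i for j in range(n)] for i in range(n)]


def attend(scores, mask):
    """Row-wise softmax over raw attention scores, with masked positions set to 0."""
    weights = []
    for row, allowed in zip(scores, mask):
        # Subtract the row maximum over allowed positions for numerical stability.
        m = max(s for s, ok in zip(row, allowed) if ok)
        exps = [math.exp(s - m) if ok else 0.0 for s, ok in zip(row, allowed)]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights
```

With equal raw scores for three tokens, the causal mask gives the first token the weights `[1.0, 0.0, 0.0]`, while the bidirectional mask spreads its attention evenly over all three tokens.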

Instruction tuning is applied only on the query side, which improves efficiency.
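Applying instructions only to queries means document embeddings do not depend on the task and can be computed once and reused. The sketch below illustrates the idea; the `Instruct: ...\nQuery: ...` template follows what we believe the model card uses, and both helper names are hypothetical.

```python
def format_query(task_description, query):
    """Prepend a task instruction to a query (assumed template)."""
    return f"Instruct: {task_description}\nQuery: {query}"


def prepare_inputs(task_description, queries, documents):
    """Instructions go on the query side only.

    Documents are returned unchanged, so their embeddings can be computed once
    offline and reused across different tasks and instructions.
    """
    return [format_query(task_description, q) for q in queries], list(documents)
```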

Comprehensive training means the model is trained on a large, multilingual text corpus spanning many domains and scenarios, using both weakly supervised and supervised data, which makes it useful across many languages and a wide range of tasks.
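The article does not name the training loss. A standard choice for training embedders on (query, positive document) pairs, whether weakly supervised or labeled, is an InfoNCE contrastive loss with in-batch negatives; the sketch below assumes that objective, and the gte authors' exact loss may differ. The temperature of 0.05 is a hypothetical value.

```python
import math


def info_nce(sim, temperature=0.05):
    """In-batch contrastive (InfoNCE) loss, averaged over the batch.

    sim[i][j] is the similarity between query i and document j. Document i is
    the positive for query i; every other document in the batch is a negative.
    """
    losses = []
    for i, row in enumerate(sim):
        logits = [s / temperature for s in row]
        # Log-sum-exp with max subtraction for numerical stability.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(x - m) for x in logits))
        # Cross-entropy of picking the positive document among all candidates.
        losses.append(log_denominator - logits[i])
    return sum(losses) / len(losses)
```

The loss is small when each query is most similar to its own positive and grows when a negative scores higher, which is what pushes matching pairs together during training.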

The gte series also comprises two types of models: encoder-only models based on the BERT architecture and decoder-only models based on LLM architectures.
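One practical difference between the two families is how token vectors are pooled into a single embedding. BERT-style encoders commonly use mean pooling (or the [CLS] token), while decoder-only LLM embedders commonly take the hidden state of the last non-padding token. The sketch below shows both under those general conventions; it is not taken from the gte code, and the function names are illustrative.

```python
def mean_pool(hidden, mask):
    """Average the token vectors where mask == 1 (typical for BERT-style encoders)."""
    kept = [h for h, m in zip(hidden, mask) if m]
    dim = len(hidden[0])
    return [sum(v[d] for v in kept) / len(kept) for d in range(dim)]


def last_token_pool(hidden, mask):
    """Vector of the last non-padding token (common for decoder-only embedders).

    Works with left or right padding because it locates the last position
    where the mask is 1.
    """
    last = max(i for i, m in enumerate(mask) if m)
    return list(hidden[last])
```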

In conclusion, Alibaba researchers have released gte-Qwen2-7B-instruct, the successor to gte-Qwen1.5-7B-instruct. Built on Qwen2-7B, the new model achieves a higher overall MTEB score and better retrieval results. It supports up to 32k input tokens and integrates with Sentence Transformers, so it can be used with tools such as LangChain, LlamaIndex, and Haystack. As of June 16, 2024, it ranked first in both the English and Chinese MTEB evaluations. It uses bidirectional attention for better contextual understanding and query-side instruction tuning for efficiency. Finally, the gte series includes both encoder-only (BERT-based) and decoder-only (LLM-based) models.

The post Alibaba AI Researchers Released a New gte-Qwen2-7B-Instruct Embedding Model Based on the Qwen2-7B Model with Better Performance appeared first on MarkTechPost.
