Code intelligence focuses on creating advanced models capable of understanding and generating programming code. This interdisciplinary area leverages natural language processing and software engineering to enhance programming efficiency and accuracy. Researchers have developed models to interpret code, generate new code snippets, and debug existing code. These advancements reduce the manual effort required in coding tasks, making the development process faster and more reliable. Code intelligence models have been progressively improving, showing promise in various applications, from software development to education and beyond.

A significant challenge in code intelligence is the performance gap between open-source code models and cutting-edge closed-source models. Despite the open-source community’s considerable efforts, open-source models still lag behind their closed-source counterparts on coding and mathematical reasoning tasks. This gap is a barrier to wider adoption of open-source solutions in professional and educational settings. More powerful and accurate open-source models are crucial to democratizing access to advanced coding tools and fostering innovation in software development.

Notable existing open-source code models include StarCoder, CodeLlama, and the original DeepSeek-Coder. These models have improved steadily thanks to contributions from the open-source community, but they still trail leading closed-source models such as GPT-4 Turbo, Claude 3 Opus, and Gemini 1.5 Pro. These closed-source models benefit from extensive proprietary datasets and significant computational resources, which help them perform exceptionally well on coding and mathematical reasoning tasks. Competitive open-source alternatives are therefore still needed.

Researchers from DeepSeek AI introduced DeepSeek-Coder-V2, a new open-source code language model. Built on DeepSeek-V2, it is further pre-trained on an additional 6 trillion tokens to strengthen its code and mathematical reasoning capabilities. DeepSeek-Coder-V2 aims to close the performance gap with closed-source models, offering an open-source alternative that delivers competitive results across a range of benchmarks.

DeepSeek-Coder-V2 uses a Mixture-of-Experts (MoE) architecture, supports 338 programming languages, and extends the maximum context length from 16K to 128K tokens. It is released in two sizes, with 16 billion and 236 billion total parameters. Because an MoE model activates only a subset of its experts for each token, only about 2.4 billion and 21 billion parameters, respectively, are active per token, which keeps compute per token far below what the total parameter counts suggest. The continued pre-training corpus consists of 60% source code, 10% math corpus, and 30% natural language corpus, sourced from GitHub and CommonCrawl. This mix is intended to make the model robust and versatile across diverse coding scenarios.
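
To make the "total versus active parameters" point concrete, here is a minimal sketch of generic top-k MoE routing for a single token. It is an illustration only, assuming a plain dot-product router and toy experts that scale their input; the production DeepSeekMoE layer differs in many details (expert count, shared experts, load balancing). The names `moe_forward`, `gate_weights`, and the toy experts are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a short list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    token: Vector,
    gate_weights: Sequence[Vector],
    experts: Sequence[Expert],
    k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Route one token to its top-k experts and return the gated mix of their outputs."""
    # Router (assumed design): one score per expert, a dot product with a gate vector.
    scores = [sum(w * x for w, x in zip(gw, token)) for gw in gate_weights]
    # Sparse activation: only the k highest-scoring experts are evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Renormalise the gate weights over the chosen experts only.
    gates = softmax([scores[i] for i in chosen])
    out = [0.0] * len(token)
    for g, i in zip(gates, chosen):
        y = experts[i](token)  # unchosen experts never run, saving their compute
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, chosen


# Toy example (hypothetical values): four "experts" that just scale their input.
experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, -1.0]]
output, chosen = moe_forward([1.0, 2.0], gate_weights, experts, k=2)
print(chosen)  # [2, 1]: only experts 2 and 1 run for this token
```

In this toy, half of the experts run for each token. In the 236B model the active share is roughly 21B of 236B parameters (about 9%), which is the saving the sparse design is meant to deliver.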

The DeepSeek-Coder-V2 model comes in four distinct variants, each tailored for specific use cases and performance needs:

- **DeepSeek-Coder-V2-Instruct**: The instruction-tuned 236B model, optimized for instruction-based coding scenarios and offering robust capabilities for complex code generation and understanding.
- **DeepSeek-Coder-V2-Base**: The 236B pre-trained base model, a solid foundation for general text and code generation across a wide range of applications, and the model from which the Instruct variant is derived.
- **DeepSeek-Coder-V2-Lite-Base**: The lightweight 16B base model, focused on efficiency. It suits environments with limited computational resources while still delivering strong text generation performance.
- **DeepSeek-Coder-V2-Lite-Instruct**: The instruction-tuned version of Lite-Base, combining the efficiency of the Lite series with instruction-following capabilities for efficient yet capable code generation and understanding.

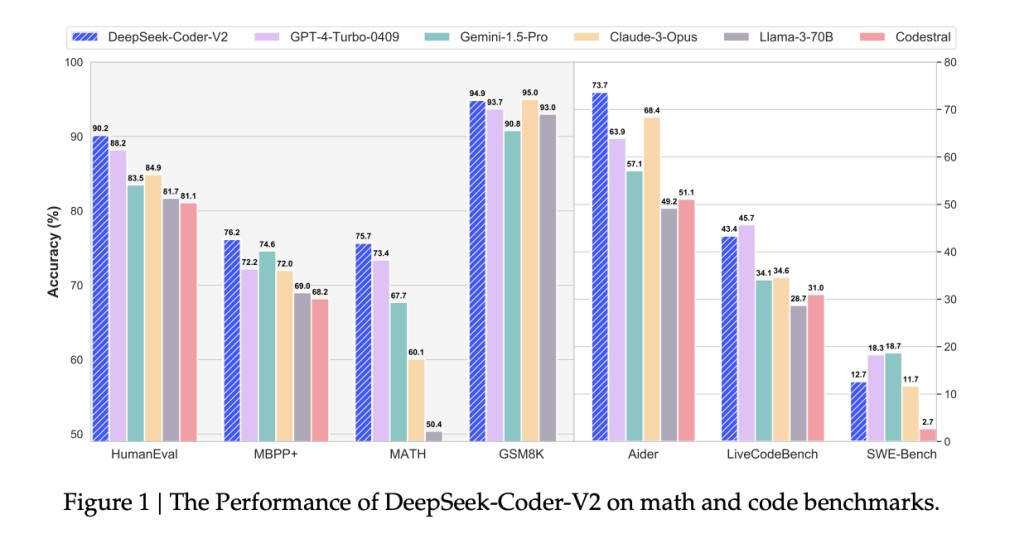

In benchmark evaluations, DeepSeek-Coder-V2 outperformed leading closed-source models on several coding and math tasks. It scored 90.2% on the HumanEval benchmark, a notable improvement over its predecessors, and 75.7% on the MATH benchmark, demonstrating stronger mathematical reasoning. These gains in accuracy over previous versions make it a formidable competitor in code intelligence, and its ability to handle complex, large-scale coding tasks marks an important milestone in the development of open-source code models.
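
The article does not say how the HumanEval figure is computed. HumanEval results are commonly reported as pass@k using the unbiased estimator introduced with the benchmark by Chen et al. (2021); the sketch below implements that estimator, assuming the 90.2% figure is a pass@1 score from a single greedy sample per problem. The function names and the sample counts in the example are hypothetical; only the 164-problem size of HumanEval is a property of the benchmark itself.

```python
from math import comb
from typing import Iterable, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n generated samples, c of which pass the tests."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every size-k subset of the samples contains at least one passing sample.
        return 1.0
    # 1 - P(all k drawn samples fail), drawing without replacement.
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(results: Iterable[Tuple[int, int]], k: int = 1) -> float:
    """Mean pass@k over problems; each result is an (n, c) pair."""
    scores = [pass_at_k(n, c, k) for n, c in results]
    return sum(scores) / len(scores)


# Hypothetical greedy run: one sample per problem on HumanEval's 164 problems,
# with 148 of them passing.
results = [(1, 1)] * 148 + [(1, 0)] * 16
print(f"{benchmark_score(results):.1%}")  # 90.2%
```

With one greedy sample per problem, pass@1 reduces to the fraction of problems solved, so under this assumption a 90.2% score corresponds to roughly 148 of the 164 problems.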

This research highlights DeepSeek-Coder-V2’s notable improvements in code intelligence, addressing existing gaps in the field. The model’s strong performance on coding and mathematical tasks positions it as a serious open-source alternative to state-of-the-art closed-source models. With expanded support for 338 programming languages and context lengths of up to 128K tokens, DeepSeek-Coder-V2 marks a significant step forward in code model development. Beyond strengthening the model itself, these advancements help democratize access to powerful coding tools, fostering innovation and collaboration in software development.

In conclusion, DeepSeek-Coder-V2, introduced by researchers at DeepSeek AI, represents a significant advancement in code intelligence. By narrowing the performance gap between open-source and closed-source models, it provides a powerful and accessible tool for coding and mathematical reasoning. Its architecture, extensive training dataset, and strong benchmark results highlight its potential to revolutionize the landscape of code intelligence. As an open-source alternative, DeepSeek-Coder-V2 improves coding efficiency and promotes innovation and collaboration within the software development community. The work underscores the importance of continued efforts to improve open-source models so that advanced coding tools are available to everyone.

The post Meet DeepSeek-Coder-V2 by DeepSeek AI: The First Open-Source AI Model to Surpass GPT4-Turbo in Coding and Math, Supporting 338 Languages and 128K Context Length appeared first on MarkTechPost.