Data-driven methods that convert offline datasets of prior experiences into policies are a key way to solve control problems in various fields. There are mainly two approaches for learning policies from offline data, imitation learning and offline reinforcement learning (RL). Imitation learning needs high-quality demonstration data, while offline reinforcement learning RL can learn effective policies even from suboptimal data, which makes offline RL theoretically more interesting. However, recent studies show that by simply collecting more expert data and fine-tuning imitation learning, it often outperforms offline reinforcement learning RL, even when offline RL has plenty of data. This raises questions about what is the main cause that affects the performance of offline RL.

Offline RL focuses on learning a policy using only previously collected data, and the main challenge in offline RL is dealing with the difference in state-action distributions between the dataset and the learned policy. This difference can lead to significant overestimation of values, which can be dangerous, so to prevent this, previous research in offline RL has proposed various methods to estimate more accurate value functions from offline data. These methods train policies to maximize the value function after its estimation using techniques like behavior-regularized policy gradient like DDPG+BC, weighted behavioral cloning like AWR, or sampling-based action selection like SfBC. However, only a few studies have aimed to analyze and understand the practical challenges in offline RL

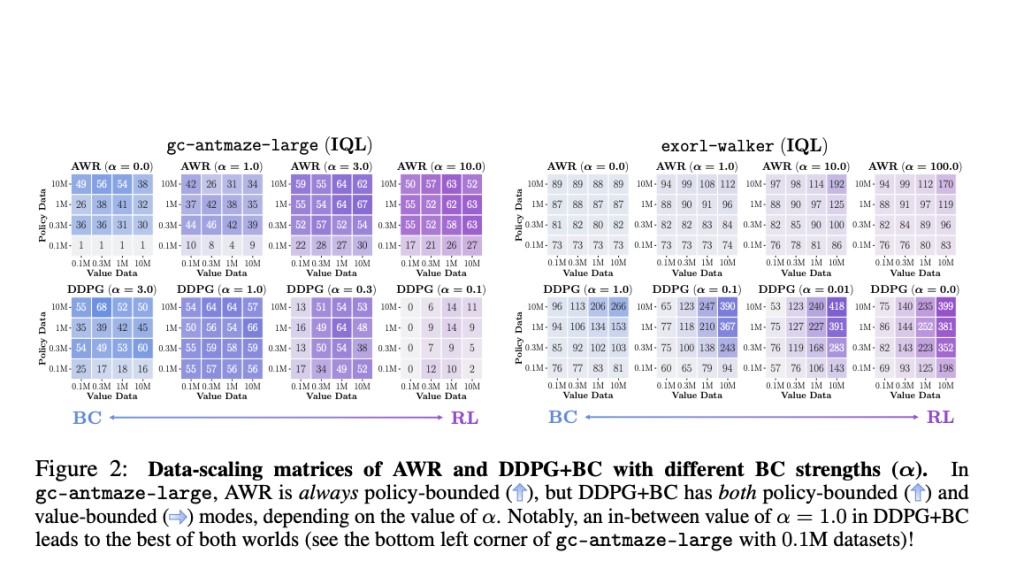

Researchers from the University of California Berkeley and Google DeepMind have made two surprising observations in offline RL, offering practical advice for domain-specific practitioners and future algorithm development. The first observation is that the choice of a policy extraction algorithm has a greater impact on performance compared to value learning algorithms. However, policy extraction is often overlooked when designing value-based offline RL algorithms. Among the different policy extraction algorithms, behavior-regularized policy gradient methods like DDPG+BC consistently perform better and scale more effectively with data than commonly used methods like value-weighted regression, such as AWR.

In the second observation, researchers noticed that offline reinforcement learning (RL) often faces challenges because the policy doesn’t perform well on test-time states instead of training states. The real issue is the policy’s accuracy on new states that the agent encounters at test time. This shifts the focus from previous concerns like pessimism and behavioral regularization to a new perspective on generalization in offline RL. To address this problem, researchers suggested two practical solutions, (a) using high-coverage datasets and, (b) using test-time policy extraction techniques.

Researchers have developed new techniques for improving policies on the fly, that refine the information from the value function into the policy during the evaluation process, leading to better performance. Among policy extraction algorithms, DDPG+BC achieves the best performance and scales well across various scenarios, followed by SfBC. However, the performance of AWR is bad compared to two extraction algorithms in multiple cases. Moreover, the data-scaling matrices of AWR always have vertical or diagonal color gradients, that utilize the value function partially. Simply selecting a policy extraction algorithm like weighted behavioral cloning can affect the use of learned value functions, limiting the performance of offline RL.

In conclusion, researchers found that the main challenge in offline RL is not just improving the quality of the value function, as previously thought. Instead, current offline RL methods often struggle with how accurately the policy is extracted from the value function and how well this policy works on new, unseen states during testing. For effective offline RL, a value function is trained on diverse data, and the policy is allowed to utilize the value function fully. For future research, this paper poses two questions in offline reinforcement learning RL, (a) What is the best way to extract a policy from the learned value function? (b) How can a policy be trained in a way that generalizes well on test-time states?

Check out the Paper and Project. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter.Â

Join our Telegram Channel and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our 44k+ ML SubReddit

The post Exploring Offline Reinforcement Learning RL: Offering Practical Advice for Domain-Specific Practitioners and Future Algorithm Development appeared first on MarkTechPost.

Source: Read MoreÂ