One of the main challenges in current multimodal language models (LMs) is their inability to utilize visual aids for reasoning processes. Unlike humans, who draw and sketch to facilitate problem-solving and reasoning, LMs rely solely on text for intermediate reasoning steps. This limitation significantly impacts their performance in tasks requiring spatial understanding and visual reasoning, such as geometry, visual perception, and complex math problems. Addressing this challenge is crucial for advancing AI research, as it would enable LMs to mimic human-like reasoning more closely and improve their applicability in real-world scenarios.

Current methods to enhance LMs’ visual reasoning capabilities include text-to-image models and various multimodal tool-use paradigms. These methods allow LMs to generate visual content from text descriptions, aiming to facilitate better reasoning. However, they fall short in several aspects. Text-to-image models, for instance, do not enable dynamic interaction with the visual content created, which is essential for tasks requiring iterative reasoning. Additionally, existing methods often have high computational complexity, making them unsuitable for real-time applications. They also lack the flexibility to incorporate specialist vision models during the reasoning process, limiting their ability to handle diverse and complex visual tasks effectively.

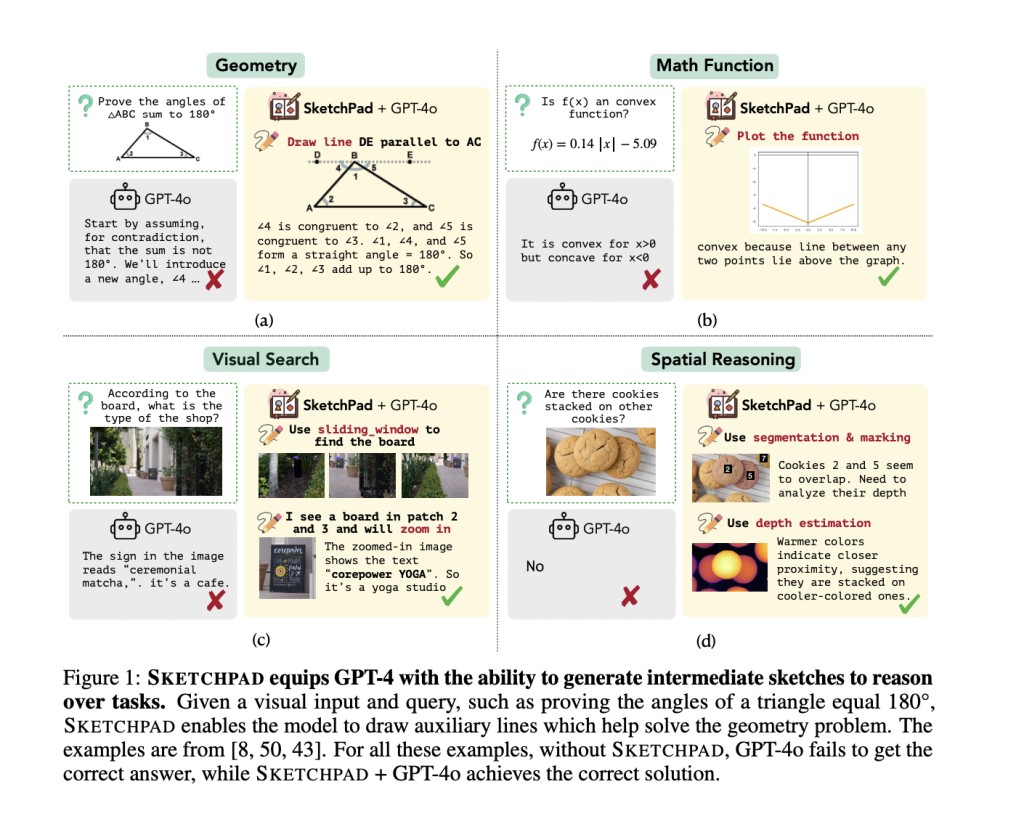

A team of researchers from the University of Washington, the Allen Institute for AI, and the University of Pennsylvania proposes SKETCHPAD, a framework that equips multimodal LMs with a visual sketchpad and the tools needed to draw on it. This approach addresses the limitations of existing methods by allowing LMs to draw lines, boxes, and marks, bringing their reasoning closer to human sketching. SKETCHPAD can also integrate specialist vision models, such as object detection and segmentation models, to further enhance visual perception and reasoning. Because the LM can both generate visual artifacts and interact with them during reasoning, its performance improves on a range of tasks. By providing a scaffold for sketch-based reasoning, SKETCHPAD offers a more efficient and accurate solution than existing methods.

The proposed method operates by synthesizing programs that generate visual sketches as intermediate reasoning steps. It uses common Python packages like Matplotlib and NetworkX for mathematical tasks and integrates specialist vision models for computer vision tasks. For instance, in geometry problems, SKETCHPAD enables the LM to draw auxiliary lines on diagrams to aid problem-solving. In tasks involving mathematical functions, it allows the LM to plot functions and analyze their properties visually. The framework requires no fine-tuning or training, making it readily applicable to existing multimodal LMs. SKETCHPAD’s ability to use specialist models for tasks like object detection and segmentation further enhances its visual reasoning capabilities.
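To make the idea of a program-generated sketch concrete, here is a minimal illustration of the kind of Matplotlib code an LM might synthesize for a geometry problem: it draws a triangle and adds an auxiliary altitude as a dashed line. This is only a sketch under stated assumptions, not SKETCHPAD's actual code or API. The function names `altitude_foot` and `sketch_triangle_with_altitude`, the choice of an altitude as the auxiliary line, and the PNG-bytes return value are all hypothetical and chosen for illustration.

```python
import io

import matplotlib

matplotlib.use("Agg")  # headless backend so no display is required
import matplotlib.pyplot as plt


def altitude_foot(a, b, c):
    """Return the foot of the perpendicular dropped from point c onto line ab."""
    ax_, ay_ = a
    bx_, by_ = b
    cx_, cy_ = c
    dx, dy = bx_ - ax_, by_ - ay_
    # Projection parameter of c onto the line through a and b.
    t = ((cx_ - ax_) * dx + (cy_ - ay_) * dy) / (dx * dx + dy * dy)
    return (ax_ + t * dx, ay_ + t * dy)


def sketch_triangle_with_altitude(a, b, c):
    """Draw triangle abc with a dashed altitude from c (hypothetical helper).

    Returns the altitude foot and the rendered sketch as PNG bytes, which
    could then be passed back to a multimodal model as an image.
    """
    foot = altitude_foot(a, b, c)
    fig, ax = plt.subplots(figsize=(4, 4))

    # Triangle outline: close the polygon by returning to the first vertex.
    ax.plot([a[0], b[0], c[0], a[0]], [a[1], b[1], c[1], a[1]], color="black")

    # Auxiliary line: the altitude from c to side ab.
    ax.plot([c[0], foot[0]], [c[1], foot[1]], linestyle="--", color="red")

    # Label the vertices and the foot of the altitude.
    for name, (x, y) in {"A": a, "B": b, "C": c, "H": foot}.items():
        ax.annotate(name, (x, y), textcoords="offset points", xytext=(5, 5))

    ax.set_aspect("equal")
    buf = io.BytesIO()
    fig.savefig(buf, format="png")
    plt.close(fig)
    return foot, buf.getvalue()


if __name__ == "__main__":
    foot, png = sketch_triangle_with_altitude((0, 0), (4, 0), (1, 3))
    print("altitude foot:", foot)
    print("PNG size in bytes:", len(png))
```

Returning the image as bytes rather than showing a window reflects the loop described above, in which the rendered sketch becomes a new visual input for the next reasoning step; how SKETCHPAD itself passes images back to the model is not specified here.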

The researchers present extensive experiments demonstrating SKETCHPAD’s effectiveness across a wide range of tasks, including geometry, graph algorithms, and complex visual reasoning tasks. On math tasks, SKETCHPAD achieves an average gain of 12.7%, and on vision tasks, it yields an average gain of 8.6%. A table from the paper showcases SKETCHPAD’s effectiveness on geometry problems, where it improves accuracy from 37.5% to 45.8% using GPT-4 Turbo, a gain of 8.3 percentage points. The table compares different methods, including the proposed approach and existing baselines, with performance metrics columns. According to the article, the improvement of the proposed method is statistically significant.

In conclusion, SKETCHPAD is a framework that enhances the reasoning capabilities of multimodal LMs by giving them visual sketching tools. It addresses key limitations of existing methods, offering a more efficient and accurate approach to visual reasoning. The results show substantial performance gains across various tasks, indicating SKETCHPAD’s potential to enable more human-like multimodal intelligence.

Check out the Paper and Project. All credit for this research goes to the researchers of this project.

The post Sketchpad: An AI Framework that Gives Multimodal Language Models LMs a Visual Sketchpad and Tools to Draw on the Sketchpad appeared first on MarkTechPost.
