Large Language Models (LLMs) have made substantial progress in the field of Natural Language Processing (NLP). By scaling up the number of model parameters, LLMs achieve higher performance on tasks such as code generation and question answering. However, most modern LLMs, like Mistral, Gemma, and Llama, are dense models, which means that every parameter is used for every token during inference. Although this dense architecture is powerful, it requires a lot of computing power, which makes it difficult to build AI that is both affordable and widely available.

Conditional computation has been studied as a way to increase efficiency. By activating only some of the model's neurons in response to each input, this technique cuts down on unnecessary computation. There are two primary ways to implement conditional computation. The first is the Mixture-of-Experts (MoE) strategy. MoE introduces conditional computation by imposing constraints on the model's structure before training, such as fixing the number of experts to activate for a given input. This expert-routing technique improves efficiency by selectively activating specific model components, so the model can hold more parameters without a proportional increase in computation.
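To make the routing idea concrete, here is a minimal NumPy sketch of top-k expert routing, the style of gating used by Mixtral-like MoE models. It is not taken from the paper: the function names (`top_k_moe`, `make_expert`), the toy single-layer ReLU experts, and the choice of a softmax over only the selected experts are illustrative assumptions. Each token is processed by just `k` experts, so the remaining experts do no work for it.

```python
import numpy as np


def make_expert(rng, d_model):
    """Hypothetical toy expert: a single ReLU layer with random weights."""
    w = rng.standard_normal((d_model, d_model)) / np.sqrt(d_model)
    return lambda h: np.maximum(h @ w, 0.0)


def top_k_moe(x, w_router, experts, k=2):
    """Send each token to its k highest-scoring experts and mix their outputs.

    x: (tokens, d_model), w_router: (d_model, n_experts),
    experts: callables mapping (n, d_model) -> (n, d_model).
    """
    logits = x @ w_router                                # (tokens, n_experts)
    top_idx = np.argsort(logits, axis=-1)[:, -k:]        # k best experts per token
    top_logits = np.take_along_axis(logits, top_idx, axis=-1)
    # Softmax over the selected experts only, so each token's weights sum to 1.
    weights = np.exp(top_logits - top_logits.max(axis=-1, keepdims=True))
    weights /= weights.sum(axis=-1, keepdims=True)

    out = np.zeros_like(x)
    for e, expert in enumerate(experts):
        # Tokens that picked expert e, and the slot (0..k-1) where they picked it.
        rows, slots = np.nonzero(top_idx == e)
        if rows.size == 0:
            continue                                     # expert e is skipped entirely
        out[rows] += weights[rows, slots][:, None] * expert(x[rows])
    return out, top_idx


rng = np.random.default_rng(0)
d_model, n_experts = 16, 8
experts = [make_expert(rng, d_model) for _ in range(n_experts)]
x = rng.standard_normal((4, d_model))
w_router = rng.standard_normal((d_model, n_experts))
y, chosen = top_k_moe(x, w_router, experts, k=2)
print(y.shape, chosen.shape)  # (4, 16) (4, 2)
```

The number of active experts `k` is fixed before training, which matches the kind of structural constraint described above: compute per token scales with `k`, not with the total number of experts.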

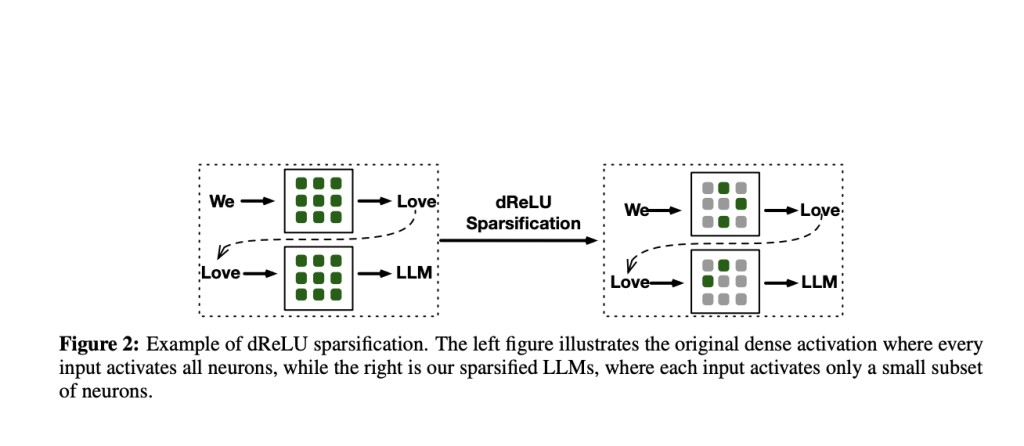

The second method exploits the intrinsic sparsity of activation functions such as ReLU. ReLU outputs zero for every non-positive input, which leaves many neurons inactive; these neurons contribute nothing to the computation. This inherent sparsity can be used to improve inference efficiency.
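The efficiency argument can be seen in a few lines. In the hypothetical sketch below (function names are ours, weights are random), an inactive neuron would add `0 * w_down[i]` to the output, so the matching rows of the down projection can be skipped without changing the result. The sketch still computes every pre-activation; a practical inference engine would also need to avoid computing inactive neurons in the first place, which this sketch does not attempt.

```python
import numpy as np


def relu_ffn_dense(x, w_up, w_down):
    """Reference ReLU feed-forward block: relu(x @ W_up) @ W_down."""
    return np.maximum(x @ w_up, 0.0) @ w_down


def relu_ffn_skip_inactive(x, w_up, w_down):
    """Same output for one token, reading only the W_down rows of active neurons.

    Returns the output and the fraction of neurons that were active.
    """
    h = np.maximum(x @ w_up, 0.0)          # (d_ff,) hidden activations
    active = np.flatnonzero(h)             # indices of neurons ReLU left nonzero
    # Each inactive neuron would add 0 * w_down[i] to the output, so skip its row.
    y = h[active] @ w_down[active]
    return y, active.size / h.size


rng = np.random.default_rng(0)
d_model, d_ff = 8, 32
x = rng.standard_normal(d_model)
w_up = rng.standard_normal((d_model, d_ff))
w_down = rng.standard_normal((d_ff, d_model))

y_sparse, frac_active = relu_ffn_skip_inactive(x, w_up, w_down)
print(np.allclose(y_sparse, relu_ffn_dense(x, w_up, w_down)))  # True
print(f"fraction of neurons active: {frac_active:.2f}")
```

The lower the active fraction, the fewer rows of `w_down` need to be read, which is why higher activation sparsity translates into faster inference.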

Many LLMs, however, use activation functions such as GELU and Swish, which produce far less sparsity and are therefore harder to accelerate with conditional computation, leaving its efficiency advantages largely untapped. ReLUfication, a technique that replaces the original activation function with ReLU during pretraining, has been proposed as a solution to this problem. However, this approach can degrade performance and often falls short of the desired level of sparsity.
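As a rough illustration of ReLUfication in a gated FFN, the sketch below swaps the SiLU (Swish) gate of a SwiGLU block for ReLU, which turns it into ReGLU, and counts exactly-zero hidden activations. The weights are random and untrained, and the pretraining step that ReLUfication relies on is not shown, so the counts only illustrate where the zeros come from, not what a trained model achieves. All function names are hypothetical.

```python
import numpy as np


def silu(z):
    """SiLU / Swish: z * sigmoid(z). Mathematically nonzero for every nonzero input."""
    return z / (1.0 + np.exp(-z))


def relu(z):
    return np.maximum(z, 0.0)


def glu_ffn(x, w_gate, w_up, w_down, act):
    """Gated FFN: (act(x @ W_gate) * (x @ W_up)) @ W_down. Returns output and hidden."""
    hidden = act(x @ w_gate) * (x @ w_up)
    return hidden @ w_down, hidden


def zero_fraction(a):
    """Share of entries that are exactly zero."""
    return float(np.mean(a == 0.0))


rng = np.random.default_rng(0)
d_model, d_ff, tokens = 64, 256, 32
x = rng.standard_normal((tokens, d_model))
w_gate = rng.standard_normal((d_model, d_ff)) / np.sqrt(d_model)
w_up = rng.standard_normal((d_model, d_ff)) / np.sqrt(d_model)
w_down = rng.standard_normal((d_ff, d_model)) / np.sqrt(d_ff)

# Same weights, two activations: the original SwiGLU and the ReLUfied ReGLU.
_, h_swiglu = glu_ffn(x, w_gate, w_up, w_down, silu)
_, h_reglu = glu_ffn(x, w_gate, w_up, w_down, relu)
print(f"SwiGLU zeros: {zero_fraction(h_swiglu):.2f}")
print(f"ReGLU zeros:  {zero_fraction(h_reglu):.2f}")
```

Only the gate branch is rectified in ReGLU, so the up projection never contributes zeros of its own; this is one way to see why a plain ReGLU swap yields limited sparsity.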

There are two primary reasons why current ReLUfication techniques fall short. First, substituting ReGLU for SwiGLU alone improves sparsity only slightly, which indicates that more substantial architectural changes are needed. Second, the model's capabilities may not fully recover because the pretraining data used is limited in both quantity and diversity.

In a recent study, a team of researchers from China proposed dReLU, a new activation function that addresses the inefficiency of negative activations in the GLU component, as a solution to these problems. Tests on small-scale LLMs pretrained with dReLU and with SwiGLU showed that models using dReLU perform on par with SwiGLU models while reaching sparsity levels approaching 90%. The team also improved the ReLUfication process by collecting diverse pretraining data from several sources, including code, web, and mathematical datasets.
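The article does not spell out dReLU's formula. Assuming, as we read the paper, that dReLU applies ReLU to both the gate and the up projection of the GLU (rather than to the gate alone, as ReGLU does), the sketch below compares the two. A hidden unit is then zero whenever either branch is non-positive, so with random symmetric weights dReLU zeroes roughly three quarters of the units versus about half for ReGLU. These random-weight figures are only illustrative; the sparsity near 90% mentioned above comes from training. The function names are hypothetical.

```python
import numpy as np


def reglu_hidden(x, w_gate, w_up):
    """ReGLU: only the gate branch is rectified."""
    return np.maximum(x @ w_gate, 0.0) * (x @ w_up)


def drelu_hidden(x, w_gate, w_up):
    """Assumed dReLU form: ReLU on both the gate and the up branch."""
    return np.maximum(x @ w_gate, 0.0) * np.maximum(x @ w_up, 0.0)


rng = np.random.default_rng(0)
d_model, d_ff, tokens = 64, 256, 32
x = rng.standard_normal((tokens, d_model))
w_gate = rng.standard_normal((d_model, d_ff)) / np.sqrt(d_model)
w_up = rng.standard_normal((d_model, d_ff)) / np.sqrt(d_model)

reglu_zeros = float(np.mean(reglu_hidden(x, w_gate, w_up) == 0.0))
drelu_zeros = float(np.mean(drelu_hidden(x, w_gate, w_up) == 0.0))
# With independent, symmetric branches, expect roughly 0.50 for ReGLU and 0.75 for dReLU.
print(f"ReGLU zeros: {reglu_zeros:.2f}  dReLU zeros: {drelu_zeros:.2f}")
```

Because every unit that ReGLU zeroes is also zeroed by this dReLU form, the dReLU count can never be lower on the same weights.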

The team also performed a sparsity analysis on MoE-based LLMs and found that the feed-forward networks of individual experts show sparse activation comparable to that of dense LLMs. This observation suggests that combining MoE routing with ReLU-induced sparsity may yield further efficiency gains.

To validate the methodology, the researchers applied it to the Mistral-7B and Mixtral-47B models, creating TurboSparse-Mistral-7B and TurboSparse-Mixtral-47B. Extensive evaluations showed that these models not only match the performance of their original versions but frequently surpass it. TurboSparse-Mistral-7B reached an average FFN sparsity of 90% while improving capabilities, and TurboSparse-Mixtral-47B raised sparsity from 75% to 97% while greatly reducing computation during inference.
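The 75% baseline for Mixtral is consistent with top-2 routing over 8 experts, which leaves three quarters of the expert FFN parameters idle for each token. Assuming that router sparsity and in-expert activation sparsity compose multiplicatively (a simplification on our part), the back-of-the-envelope calculation below estimates how sparse each active expert must be to reach 97% overall. The helper names are hypothetical.

```python
def combined_ffn_sparsity(n_experts, top_k, intra_expert_sparsity):
    """Share of expert-FFN neurons idle per token, assuming the two sparsities multiply."""
    active_expert_share = top_k / n_experts
    return 1.0 - active_expert_share * (1.0 - intra_expert_sparsity)


def required_intra_sparsity(n_experts, top_k, target_sparsity):
    """Invert the formula above: in-expert sparsity needed for a target overall sparsity."""
    return 1.0 - (1.0 - target_sparsity) * n_experts / top_k


# Mixtral-style routing: 2 of 8 experts active per token.
print(combined_ffn_sparsity(8, 2, 0.0))                # routing alone → 0.75
print(round(required_intra_sparsity(8, 2, 0.97), 2))   # → 0.88
```

Under these assumptions, each active expert would need about 88% of its neurons to be inactive, which is close to the 90% FFN sparsity reported for TurboSparse-Mistral-7B.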

Integrating these models with PowerInfer, a sparsity-aware inference engine, yielded an average 2.83× speedup on generation tasks, confirming that the proposed approach improves both efficiency and performance.

The team has summarized their primary contributions as follows.

The dReLU function is introduced to enhance activation sparsity. The technique requires only 150B tokens, about 1% of the roughly 15T tokens typically used for pretraining.

The TurboSparse-Mistral-7B and TurboSparse-Mixtral-47B models have been released. These sparsely activated models outperform their original versions.

Evaluation shows that these models deliver a 2-5× speedup in practical inference. With TurboSparse-Mixtral-47B, up to 10 tokens per second can be generated without a GPU.

Check out the Paper and Models. All credit for this research goes to the researchers of this project.




The post This AI Paper from China Proposes a Novel dReLU-based Sparsification Method that Increases Model Sparsity to 90% while Maintaining Performance, Achieving a 2-5× Speedup in Inference appeared first on MarkTechPost.
