As AI-generated data increasingly supplements, or even replaces, human-annotated data, concerns have grown about performance degradation when models are iteratively trained on synthetic data. Model collapse refers to this phenomenon: a model’s performance deteriorates significantly when it is trained on data synthesized by the model itself. The problem matters because it limits how far synthetic data can be used to scale training, including for tasks such as producing high-quality summaries from large volumes of text.

Current methods to counteract model collapse include Reinforcement Learning from Human Feedback (RLHF), data curation, and prompt engineering. RLHF uses human feedback to ensure the quality of the data used for training, so the model learns from high-quality, human-approved examples; this has proven effective at maintaining or improving model performance. However, the approach is costly and hard to scale because it relies heavily on human annotators.

Another method involves careful curation and filtering of synthesized data, for example using heuristics or predefined rules to discard low-quality or irrelevant samples before they are used for training. While this can mitigate the impact of low-quality synthesized data, maintaining the quality of the training set often requires significant effort, and filtering does not eliminate the risk of model collapse unless the criteria are robust enough. Prompt engineering, in turn, involves crafting specific prompts that guide the model toward higher-quality outputs. It is not foolproof: it is limited by the model’s own biases and weaknesses, and it often requires expert knowledge and iterative experimentation to achieve good results.
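To make the curation approach concrete, here is a minimal Python sketch of rule-based filtering for synthesized summaries. The `Sample` type, the three rules, and their thresholds are hypothetical choices made for illustration, not rules from the paper; the point is that every such rule is a hand-tuned guess that needs ongoing maintenance.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    source: str  # input document
    output: str  # model-generated summary


def passes_heuristics(sample: Sample, min_words: int = 5, max_ratio: float = 0.5,
                      min_unique: float = 0.5) -> bool:
    """Hypothetical rules; the thresholds are illustrative, not taken from the paper."""
    words = sample.output.split()
    if len(words) < min_words:
        return False  # too short to summarize anything
    if len(words) / max(len(sample.source.split()), 1) > max_ratio:
        return False  # barely shorter than the source, so not really a summary
    if len(set(words)) < min_unique * len(words):
        return False  # heavy repetition, a common degenerate-generation pattern
    return True


def curate(samples: list[Sample]) -> list[Sample]:
    """Keep only the samples that pass every heuristic."""
    return [s for s in samples if passes_heuristics(s)]


# Hypothetical usage on a 100-token placeholder article.
article = " ".join(f"token{i}" for i in range(100))
candidates = [
    Sample(article, "officials approved the new budget after a long debate"),
    Sample(article, "too short"),
    Sample(article, "budget budget budget budget budget budget"),
]
print(len(curate(candidates)))
```

Each rule catches one failure mode and passes everything else, which is why heuristic filters reduce, but do not remove, the risk of model collapse.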

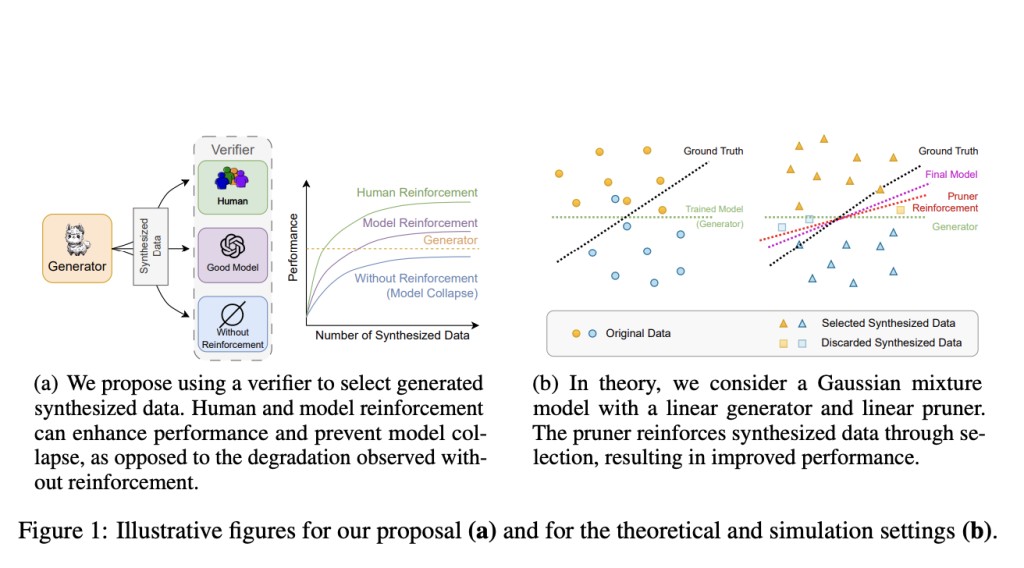

To address these limitations, a team of researchers from Meta AI, NYU, and Peking University proposes a method that incorporates feedback on synthesized data, aiming to prevent model collapse through reinforcement. The approach uses feedback to select or prune synthesized data so that only high-quality samples are used for further training. Because this feedback can be partially or fully automated, the authors position the method as a more efficient and scalable alternative to RLHF.

The core of the methodology is enhancing synthesized data through feedback, which can come from humans or from other models. The researchers provide a theoretical framework showing that a Gaussian mixture classification model can achieve optimal performance when trained on feedback-augmented synthesized data.
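The intuition behind the theory can be reproduced in a toy simulation. The sketch below is not the paper’s analysis: it assumes two one-dimensional Gaussian classes, a hypothetical flawed generator that mislabels class-0 points as class 1 with probability `flip_prob`, and a hypothetical verifier that catches each wrong label with probability `detect_prob`. A simple nearest-class-mean classifier is fit on clean, raw synthetic, and verifier-pruned labels, and its exact accuracy is computed from the normal CDF.

```python
import math
import random

MU = 2.0  # assumption: class means are -MU and +MU, both with unit variance


def normal_cdf(z: float) -> float:
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def sample_data(n: int, rng: random.Random) -> list[tuple[float, int]]:
    """Draw n points from a balanced two-class Gaussian mixture."""
    data = []
    for _ in range(n):
        y = rng.randint(0, 1)
        data.append((rng.gauss(MU if y == 1 else -MU, 1.0), y))
    return data


def synthesize_labels(data, flip_prob, rng):
    """Hypothetical flawed generator: relabels true class-0 points as 1 with prob flip_prob.
    The true label is kept alongside so a verifier can give feedback later."""
    return [(x, 1 if (y == 0 and rng.random() < flip_prob) else y, y) for x, y in data]


def verifier_prune(synth, detect_prob, rng):
    """Hypothetical imperfect verifier: each wrong label is caught (and its sample
    dropped) with prob detect_prob; correctly labeled samples are always kept."""
    return [(x, ys, y) for x, ys, y in synth if ys == y or rng.random() >= detect_prob]


def fit_threshold(samples):
    """Nearest-class-mean classifier: threshold halfway between the two labeled-class means."""
    ones = [x for x, ys, _ in samples if ys == 1]
    zeros = [x for x, ys, _ in samples if ys == 0]
    return (sum(ones) / len(ones) + sum(zeros) / len(zeros)) / 2.0


def accuracy(threshold):
    """Exact accuracy of 'predict 1 if x > threshold' on the true balanced mixture."""
    return 0.5 * normal_cdf(MU - threshold) + 0.5 * normal_cdf(threshold + MU)


rng = random.Random(0)
data = sample_data(20_000, rng)
synth = synthesize_labels(data, flip_prob=0.4, rng=rng)
acc_clean = accuracy(fit_threshold([(x, y, y) for x, y in data]))
acc_raw = accuracy(fit_threshold(synth))
acc_pruned = accuracy(fit_threshold(verifier_prune(synth, detect_prob=0.9, rng=rng)))
print(f"clean {acc_clean:.3f} | raw synthetic {acc_raw:.3f} | verifier-pruned {acc_pruned:.3f}")
```

Under these assumptions, training on raw synthetic labels shifts the decision threshold and lands measurably below the clean baseline, while the verifier-pruned labels recover nearly all of the gap even though the verifier itself is imperfect.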

Two experiments validate the theoretical predictions. The first trains transformers to compute matrix eigenvalues, a task on which models collapse when trained on purely synthesized data. Pruning incorrect predictions and selecting the best guesses from the synthesized data significantly improves performance, demonstrating the effectiveness of reinforcement through data selection. The second experiment focuses on news summarization with large language models (LLMs) such as LLaMA-2. Here, feedback-augmented data prevents performance degradation even as the volume of synthesized data increases, supporting the hypothesis that reinforcement is crucial for maintaining model performance.
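The pruning-and-selection idea from the eigenvalue experiment can be sketched on a much smaller problem. In the illustration below, a hypothetical `noisy_model` (exact answer plus Gaussian noise) stands in for the transformer, matrices are restricted to symmetric 2x2 so the exact eigenvalues have a closed form, and the oracle verifier knows the true answer. For each matrix the script draws `k` guesses, keeps the best one, and prunes the matrix if even that guess misses by more than `tol`. Only the construction of training labels is shown; retraining a model on them is out of scope.

```python
import math
import random


def true_eigenvalues(a: float, b: float, c: float) -> tuple[float, float]:
    """Exact eigenvalues of the symmetric matrix [[a, b], [b, c]], largest first."""
    mean = (a + c) / 2.0
    radius = math.hypot((a - c) / 2.0, b)
    return (mean + radius, mean - radius)


def noisy_model(a, b, c, rng, noise=0.3):
    # Hypothetical stand-in for the trained transformer: exact answer plus Gaussian noise.
    l1, l2 = true_eigenvalues(a, b, c)
    return (l1 + rng.gauss(0.0, noise), l2 + rng.gauss(0.0, noise))


def max_abs_error(pred, target):
    return max(abs(p - t) for p, t in zip(pred, target))


def build_datasets(n, k, rng, tol=0.1):
    """Return (raw, reinforced) lists of (matrix_entries, predicted_eigenvalues).

    raw:        one unchecked guess per matrix (no feedback).
    reinforced: best of k guesses per matrix according to the oracle (selection),
                with the matrix dropped if that best guess still misses by more than tol (pruning).
    """
    raw, reinforced = [], []
    for _ in range(n):
        a, b, c = (rng.uniform(-1.0, 1.0) for _ in range(3))
        target = true_eigenvalues(a, b, c)
        guesses = [noisy_model(a, b, c, rng) for _ in range(k)]
        raw.append(((a, b, c), guesses[0]))
        best = min(guesses, key=lambda g: max_abs_error(g, target))
        if max_abs_error(best, target) <= tol:
            reinforced.append(((a, b, c), best))
    return raw, reinforced


def mean_label_error(dataset):
    return sum(max_abs_error(pred, true_eigenvalues(*m)) for m, pred in dataset) / len(dataset)


rng = random.Random(0)
raw, reinforced = build_datasets(n=2000, k=8, rng=rng)
print(f"raw: {len(raw)} samples, mean label error {mean_label_error(raw):.3f}")
print(f"reinforced: {len(reinforced)} samples, mean label error {mean_label_error(reinforced):.3f}")
```

The reinforced set is smaller than the raw one, but every label in it is within `tol` of the truth, which mirrors the trade-off described above: fewer, better synthetic examples.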

The researchers generate candidate summaries by decoding from the model and evaluate them with the ROUGE-1 metric. They also use a strong verifier model, Llama-3, to select the best synthesized samples for training. The results show that the proposed method significantly outperforms the original model trained on the full dataset, even when using only 12.5% of the data. The model trained on synthesized data selected by an oracle achieves the best performance, indicating that the method effectively mitigates model collapse. The finding is significant because it suggests that, when properly reinforced, synthetic data can match and potentially exceed the quality of human-generated data.
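The scoring and selection step can be sketched as follows. `rouge1_f1` is a simplified ROUGE-1 F1 (lowercased whitespace tokens, clipped unigram overlap, no stemming), so its scores will differ somewhat from standard implementations such as the `rouge-score` package. `select_top_fraction` ranks synthesized (article, summary) pairs with any scoring function and keeps the top fraction; the default of 12.5% mirrors the figure quoted above, but how the paper chose its subset may differ. In practice `score_fn` would wrap a verifier such as Llama-3; here a reference-based oracle and the example data are hypothetical stand-ins.

```python
from collections import Counter
from typing import Callable


def rouge1_f1(candidate: str, reference: str) -> float:
    """Simplified ROUGE-1 F1: lowercase whitespace tokens, clipped unigram overlap, no stemming."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # & keeps the minimum count of each shared unigram
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def select_top_fraction(
    pairs: list[tuple[str, str]],
    score_fn: Callable[[str, str], float],
    fraction: float = 0.125,
) -> list[tuple[str, str]]:
    """Keep the highest-scoring `fraction` of (article, summary) pairs (at least one)."""
    ranked = sorted(pairs, key=lambda pair: score_fn(*pair), reverse=True)
    keep = max(1, int(len(ranked) * fraction))
    return ranked[:keep]


# Hypothetical data: article IDs mapped to human reference summaries.
references = {
    "a1": "central bank raises interest rates by half a point",
    "a2": "storm floods coastal towns and cuts power to thousands",
}
synthesized = [
    ("a1", "central bank raises rates by half a point"),
    ("a1", "stocks rise on friday"),
    ("a2", "storm cuts power to thousands in coastal towns"),
    ("a2", "a storm happened"),
]


def oracle(article: str, summary: str) -> float:
    # Oracle feedback: score a synthesized summary against the human reference.
    return rouge1_f1(summary, references[article])


kept = select_top_fraction(synthesized, oracle, fraction=0.5)
for article, summary in kept:
    print(article, round(oracle(article, summary), 3), summary)
```

Swapping `oracle` for a model-based scorer changes only the feedback source; the selection logic stays the same, which is what makes this kind of reinforcement easy to automate.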

The research offers a promising solution to model collapse in LLMs trained on synthesized data. By using feedback to improve the quality of synthetic data, the proposed method helps sustain model performance without extensive human intervention. This approach provides a scalable, cost-effective alternative to current RLHF methods, paving the way for more robust and reliable AI systems.

The post Scaling AI Models: Combating Collapse with Reinforced Synthetic Data appeared first on MarkTechPost.
