Analogical reasoning, fundamental to human abstraction and creative thinking, enables the understanding of relationships between objects. This capability is distinct from the acquisition of semantic and procedural knowledge, which contemporary connectionist approaches such as deep neural networks (DNNs) typically handle. However, these techniques often struggle to extract abstract relational rules from limited samples. Recent advances in machine learning have sought to strengthen abstract reasoning by isolating abstract relational rules from object representations, such as symbols or key-value pairs. This approach, known as the relational bottleneck, uses attention mechanisms to capture relevant correlations between objects and thereby produce relational representations.
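
The article describes the relational bottleneck only at a high level. The sketch below is a minimal illustration under stated assumptions, not LARS-VSA's or the Abstractor's actual code: attention weights come from dot products between object embeddings, and the values being mixed are fixed, object-independent symbol vectors (similar in spirit to the Abstractor's relational cross-attention). The function names and the `symbols` vectors are hypothetical. Because object features enter only through their pairwise similarities, two object sets with identical similarity structure produce identical outputs.

```python
import math

def softmax(scores):
    # Subtract the max for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def relational_bottleneck(objects, symbols):
    """Attention weights depend only on object-object inner products; the values
    are object-independent symbol vectors (hypothetical), so object features
    influence the output solely through their pairwise similarities."""
    outputs = []
    dim = len(symbols[0])
    for query in objects:
        weights = softmax([dot(query, key) for key in objects])
        # Mix the symbols, not the object features.
        outputs.append([sum(w * s[d] for w, s in zip(weights, symbols)) for d in range(dim)])
    return outputs

# Hypothetical learned symbols, one per object position.
symbols = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]

# Two object sets with different features but the same pairwise similarities.
set_a = [[1.0, 0.0], [0.0, 1.0]]
set_b = [[0.0, -1.0], [-1.0, 0.0]]
print(relational_bottleneck(set_a, symbols) == relational_bottleneck(set_b, symbols))  # True
```

The design choice being illustrated is the separation itself: a downstream layer sees "which position resembles which", never the raw features, which is what lets the relational representation generalize across objects.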

The relational bottleneck approach helps mitigate catastrophic interference between object-level and abstract-level features, a problem also referred to as the curse of compositionality. This issue arises from the overuse of shared structures and low-dimensional feature representations, which leads to inefficient generalization and increased processing requirements. Neuro-symbolic approaches have partially addressed this problem by storing relational representations in quasi-orthogonal high-dimensional vectors, which are less prone to interference. However, these approaches often rely on explicit binding and unbinding mechanisms that require prior knowledge of the abstract rules.
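
Why high-dimensional vectors interfere less can be checked numerically. The sketch below assumes random bipolar (±1) vectors, a common choice in vector symbolic architectures; it is an illustration of quasi-orthogonality, not code from the paper, and the helper names are hypothetical. It reports the largest absolute cosine similarity among 20 random vectors at a low and a high dimension.

```python
import random

def random_bipolar(dim, rng):
    return [rng.choice((-1, 1)) for _ in range(dim)]

def cosine(a, b):
    # Bipolar vectors all have norm sqrt(dim), so cosine similarity is dot / dim.
    return sum(x * y for x, y in zip(a, b)) / len(a)

def max_abs_similarity(dim, count, seed=0):
    """Largest |cosine| among `count` random bipolar vectors of length `dim`."""
    rng = random.Random(seed)
    vecs = [random_bipolar(dim, rng) for _ in range(count)]
    return max(
        abs(cosine(vecs[i], vecs[j]))
        for i in range(count)
        for j in range(i + 1, count)
    )

for dim in (16, 10_000):
    print(dim, round(max_abs_similarity(dim, count=20), 3))
```

The cosine similarity of two independent random bipolar vectors has a standard deviation of 1/sqrt(dim): 0.25 at 16 dimensions but only 0.01 at 10,000. At high dimension, unrelated vectors therefore barely overlap, which is the property that keeps stored representations from interfering.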

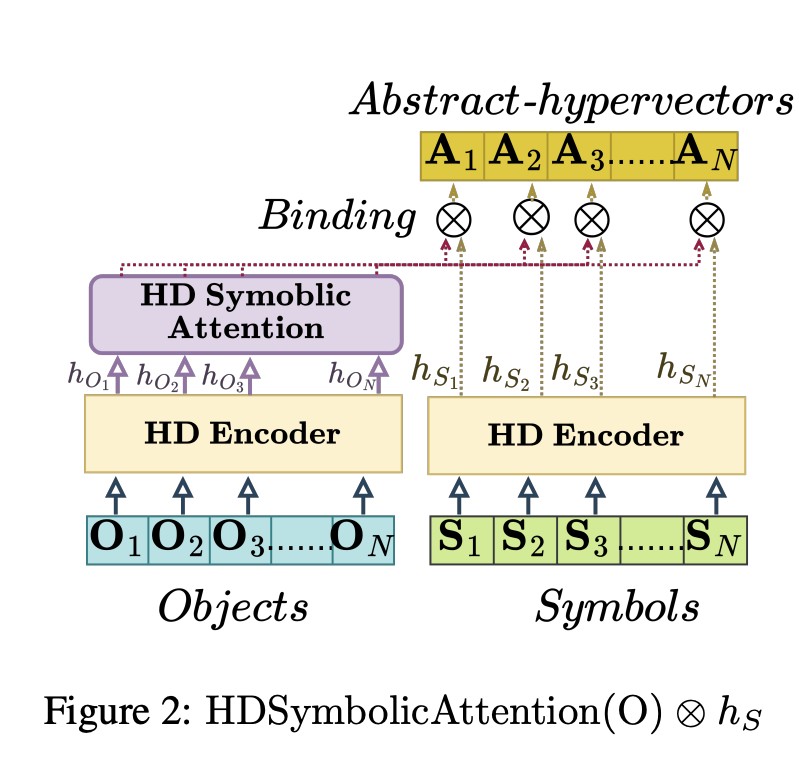

This paper from the Georgia Institute of Technology introduces LARS-VSA (Learning with Abstract RuleS) to address these limitations. The approach combines the strength of connectionist methods in capturing implicit abstract rules with the ability of neuro-symbolic architectures to manage relevant features with minimal interference. LARS-VSA uses a vector symbolic architecture (VSA) to address the relational bottleneck problem by performing explicit bindings in high-dimensional space. This captures relationships between symbolic representations of objects separately from object-level features, offering a robust way to avoid compositional interference.
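
To make "explicit binding in high-dimensional space" concrete, here is a minimal sketch using elementwise multiplication as the binding operator and majority vote as bundling. This multiply-style binding is a common VSA convention and an assumption here; the article does not say which binding operator LARS-VSA uses. The role and filler names (`left`, `right`, `relation`, `A`, `B`, `same`) are hypothetical. The example stores a small structured record in one vector and then recovers each filler by unbinding its role.

```python
import random

DIM = 10_000

def random_bipolar(rng, dim=DIM):
    return [rng.choice((-1, 1)) for _ in range(dim)]

def bind(a, b):
    # Elementwise product. A bipolar vector is its own inverse (x * x = 1),
    # so binding again with the same key unbinds.
    return [x * y for x, y in zip(a, b)]

def bundle(vectors):
    # Elementwise majority vote (sign of the sum); ties, possible only for an
    # even number of inputs, are broken toward +1.
    return [1 if sum(column) >= 0 else -1 for column in zip(*vectors)]

def similarity(a, b):
    return sum(x * y for x, y in zip(a, b)) / len(a)

def query(record, role, fillers):
    # Unbind the role, then clean up the noisy result by nearest-neighbor search.
    noisy = bind(record, role)
    return max(fillers, key=lambda name: similarity(noisy, fillers[name]))

rng = random.Random(42)
roles = {name: random_bipolar(rng) for name in ("left", "right", "relation")}
fillers = {name: random_bipolar(rng) for name in ("A", "B", "same")}

# Hypothetical record encoding "left = A, right = B, relation = same".
record = bundle([
    bind(roles["left"], fillers["A"]),
    bind(roles["right"], fillers["B"]),
    bind(roles["relation"], fillers["same"]),
])

print([query(record, roles[r], fillers) for r in ("left", "right", "relation")])
# ['A', 'B', 'same']
```

A bound pair is nearly orthogonal to both of its inputs, so the relational structure lives in directions that object-level vectors do not occupy. In a classical VSA the roles are chosen by hand; the article's point is that LARS-VSA learns such relational vectors instead of requiring them as prior knowledge.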

A key innovation of LARS-VSA is a context-based self-attention mechanism that operates directly in a bipolar high-dimensional space. This mechanism learns vectors that represent relationships between symbols, removing the need for prior knowledge of abstract rules. The system also significantly reduces computational cost by simplifying the matrix multiplications used to compute attention scores to binary operations. This offers a lightweight alternative to conventional attention mechanisms, improving efficiency and scalability.
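
The article says attention-score multiplication is reduced to binary operations but does not specify which ones. For bipolar vectors a standard identity makes this possible: if each +1 is stored as bit 1 and each -1 as bit 0, the dot product of two length-`D` vectors equals `D - 2 * popcount(a XOR b)`. The sketch below assumes that encoding (the helper names are hypothetical, and it is not the paper's code) and checks that the bitwise score matrix matches ordinary multiply-and-add; any softmax or normalization that follows is omitted.

```python
import random

def pack(vec):
    # Map +1 -> bit 1 and -1 -> bit 0, packed into a single Python int.
    bits = 0
    for i, v in enumerate(vec):
        if v == 1:
            bits |= 1 << i
    return bits

def bipolar_dot_bits(a_bits, b_bits, dim):
    # Agreeing positions contribute +1 and differing positions -1, so
    # dot = (dim - mismatches) - mismatches = dim - 2 * popcount(a XOR b).
    mismatches = bin(a_bits ^ b_bits).count("1")
    return dim - 2 * mismatches

def score_matrix_bits(queries, keys, dim):
    q_bits = [pack(q) for q in queries]
    k_bits = [pack(k) for k in keys]
    return [[bipolar_dot_bits(q, k, dim) for k in k_bits] for q in q_bits]

def score_matrix_multiply(queries, keys):
    # Reference: the ordinary multiply-and-add dot products.
    return [[sum(x * y for x, y in zip(q, k)) for k in keys] for q in queries]

rng = random.Random(7)
dim = 1024
queries = [[rng.choice((-1, 1)) for _ in range(dim)] for _ in range(4)]
keys = [[rng.choice((-1, 1)) for _ in range(dim)] for _ in range(5)]
print(score_matrix_bits(queries, keys, dim) == score_matrix_multiply(queries, keys))  # True
```

`bin(x).count("1")` is used instead of `int.bit_count()` so the sketch also runs on Python versions before 3.10. On hardware, the same identity lets a 64-dimension slice be scored with one XOR and one popcount on a 64-bit word rather than 64 multiply-adds, which is where the savings over floating-point attention come from.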

To evaluate LARS-VSA, the researchers compared its performance with the Abstractor, a standard transformer architecture, and other state-of-the-art methods on discriminative relational tasks. The results showed that LARS-VSA maintains high accuracy while remaining cost-efficient. The system was also tested on several synthetic sequence-to-sequence datasets and on complex mathematical problem-solving tasks, showcasing its potential for real-world applications.

In conclusion, LARS-VSA represents a significant advance in abstract reasoning and relational representation. By combining connectionist and neuro-symbolic approaches, it addresses the relational bottleneck problem and reduces computational costs. Its robust performance across a range of tasks highlights its potential for practical applications, while its resilience to aggressive quantization of its weights underscores its versatility. This approach paves the way for more efficient and effective machine learning models capable of sophisticated abstract reasoning.

The post This AI Paper from Georgia Institute of Technology Introduces LARS-VSA (Learning with Abstract RuleS): A Vector Symbolic Architecture For Learning with Abstract Rules appeared first on MarkTechPost.