This is a guest post co-written with Ratnesh Jamidar and Vinayak Trivedi from Sprinklr.

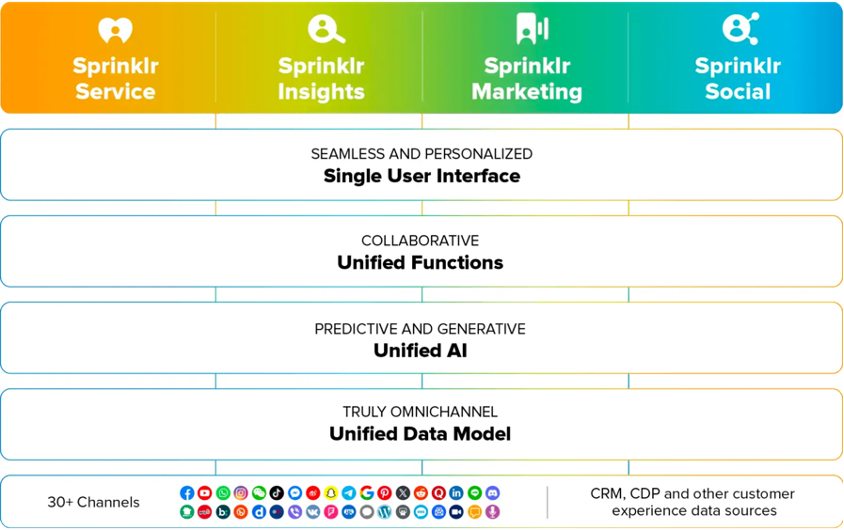

Sprinklr’s mission is to unify silos, technology, and teams across large, complex companies. To achieve this, we provide four product suites, Sprinklr Service, Sprinklr Insights, Sprinklr Marketing, and Sprinklr Social, as well as several self-serve offerings.

Each of these products is infused with artificial intelligence (AI) capabilities to deliver exceptional customer experience. Sprinklr’s specialized AI models streamline data processing, gather valuable insights, and enable workflows and analytics at scale to drive better decision-making and productivity.

In this post, we describe the scale of our AI offerings, the challenges with diverse AI workloads, and how we optimized mixed AI workload inference performance with AWS Graviton3-based c7g instances, achieving a 20% throughput improvement, a 30% latency reduction, and 25–30% cost savings.

Sprinklr’s AI scale and challenges with diverse AI workloads

Our purpose-built AI processes unstructured customer experience data from millions of sources, providing actionable insights and improving productivity for customer-facing teams to deliver exceptional experiences at scale. To understand our scaling and cost challenges, let’s look at some representative numbers. Sprinklr’s platform uses thousands of servers that fine-tune and serve over 750 pre-built AI models across over 60 verticals, and run more than 10 billion predictions per day.

To deliver a tailored user experience across these verticals, we deploy patented AI models fine-tuned for specific business applications and use nine layers of machine learning (ML) to extract meaning from data across formats: automatic speech recognition, natural language processing, computer vision, network graph analysis, anomaly detection, trends, predictive analysis, natural language generation, and similarity engine.

The diverse and rich database of models brings unique challenges for choosing the most efficient deployment infrastructure that gives the best latency and performance.

For example, in our mixed AI workloads, inference runs as part of the search engine service and has real-time latency requirements. The models in these cases are small, so the communication overhead of GPU or ML accelerator instances outweighs their compute performance benefits. Inference requests are also infrequent, so accelerators sit idle much of the time and are not cost-effective. Our existing production instances were therefore not cost-effective for these mixed AI workloads, which led us to look for new instances that offer the right balance of scale and cost-effectiveness.
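The idle-accelerator argument can be made concrete with a little arithmetic. The following is a minimal sketch, not a measurement: the function name `cost_per_million` and every price, request rate, and utilization value in it are hypothetical placeholders chosen only to show the shape of the calculation.

```python
def cost_per_million(hourly_price_usd: float, peak_rps: float, utilization: float) -> float:
    """Effective cost in USD of serving one million inference requests.

    hourly_price_usd: instance price per hour (hypothetical).
    peak_rps: requests per second the instance sustains when fully busy.
    utilization: fraction of the hour the instance actually serves traffic.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    requests_per_hour = peak_rps * utilization * 3600
    return hourly_price_usd / requests_per_hour * 1_000_000


if __name__ == "__main__":
    # Hypothetical values: an accelerator that is fast but mostly idle,
    # and a CPU instance that is slower but kept busier.
    accelerator = cost_per_million(hourly_price_usd=1.00, peak_rps=400, utilization=0.10)
    cpu = cost_per_million(hourly_price_usd=0.50, peak_rps=100, utilization=0.60)
    print(f"accelerator: ${accelerator:.2f} per million requests")
    print(f"cpu:         ${cpu:.2f} per million requests")
```

Under these assumed numbers the faster instance ends up more expensive per request, because the hourly price is paid whether or not requests arrive. With real prices and measured utilization the comparison can go either way.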

Cost-effective ML inference using AWS Graviton3

Graviton3 processors are optimized for ML workloads, including support for bfloat16, Scalable Vector Extension (SVE), twice the Single Instruction Multiple Data (SIMD) bandwidth, and 50% more memory bandwidth compared to AWS Graviton2 processors, making them an ideal choice for our mixed workloads. Our goal is to use the latest technologies for efficiency and cost savings, so when AWS released Graviton3-based Amazon Elastic Compute Cloud (Amazon EC2) instances, we were excited to try them in our mixed workloads, especially given our previous Graviton experience. For over 3 years, we have run our search infrastructure on Graviton2-based EC2 instances and our real-time and batched inference workloads on AWS Inferentia ML-accelerated instances, and in both cases we improved latency by 30% and achieved up to 40% price-performance benefits over comparable x86 instances.

To migrate our mixed AI workloads from x86-based instances to Graviton3-based c7g instances, we took a two-step approach. First, we had to experiment and benchmark in order to determine that Graviton3 was indeed the right solution for us. After that was confirmed, we had to perform the actual migration.

We started by benchmarking our workloads using the readily available Graviton Deep Learning Containers (DLCs) in a standalone environment. Because we were early adopters of Graviton for ML workloads, it was initially challenging to identify the right software versions and runtime tunings. During this journey, we collaborated closely and frequently with our AWS technical account manager and the Graviton software engineering teams, who provided optimized software packages and detailed instructions on how to tune them for optimum performance. In our test environment, we observed a 20% throughput improvement and a 30% latency reduction across multiple natural language processing models.
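A standalone benchmark of this kind typically records throughput and latency percentiles for the same inputs on each instance type. The following is a minimal sketch of such a harness using only the Python standard library. The names `benchmark`, `relative_change`, and `fake_predict` are hypothetical, and `fake_predict` is a stand-in for a real model call, so this illustrates the measurement rather than the harness used for the numbers above.

```python
import statistics
import time
from typing import Callable, Dict, Sequence


def benchmark(predict: Callable[[str], object], inputs: Sequence[str],
              warmup: int = 10) -> Dict[str, float]:
    """Run predict over inputs sequentially and report throughput and latency."""
    # Warm-up calls are excluded from timing (caches, lazy initialization).
    for text in inputs[:warmup]:
        predict(text)

    latencies_ms = []
    start = time.perf_counter()
    for text in inputs:
        t0 = time.perf_counter()
        predict(text)
        latencies_ms.append((time.perf_counter() - t0) * 1000.0)
    elapsed = time.perf_counter() - start

    # 99 cut points; "inclusive" keeps percentiles within the observed range.
    cuts = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    return {
        "throughput_rps": len(inputs) / elapsed,
        "p50_ms": statistics.median(latencies_ms),
        "p99_ms": cuts[98],
    }


def relative_change(baseline: float, candidate: float) -> float:
    """Fractional change of candidate versus baseline (0.2 means +20%)."""
    return (candidate - baseline) / baseline


def fake_predict(text: str) -> int:
    """Hypothetical stand-in for a model call; replace with real inference."""
    return sum(len(token) for token in text.split())


if __name__ == "__main__":
    sample = ["where is my order", "cancel my subscription please"] * 500
    print(benchmark(fake_predict, sample))
```

Running the same script on the baseline and candidate instances and passing the two results to `relative_change` gives the throughput and latency deltas. For latency, a negative value is an improvement.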

After we had validated that Graviton3 met our needs, we integrated the optimizations into our production software stack. The AWS account team assisted us promptly, helping us ramp up quickly to meet our deployment timelines. Overall, migration to Graviton3-based instances was smooth, and it took less than 2 months to achieve the performance improvements in our production workloads.

Results

By migrating our mixed inference/search workloads to Graviton3-based c7g instances from the comparable x86-based instances, we achieved the following:

Higher performance – We realized 20% throughput improvement and 30% latency reduction.

Reduced cost – We achieved 25–30% cost savings.

Improved customer experience – By reducing the latency and increasing throughput, we significantly improved the performance of our products and services, providing the best user experience for our customers.

Sustainable AI – Because we saw a higher throughput on the same number of instances, we were able to lower our overall carbon footprint, and we made our products appealing to environmentally conscious customers.

Better software quality and maintenance – The AWS engineering team upstreamed all the software optimizations into PyTorch and TensorFlow open source repositories. As a result, our current software upgrade process on Graviton3-based instances is seamless. For example, PyTorch (v2.0+), TensorFlow (v2.9+), and Graviton DLCs come with Graviton3 optimizations and the user guides provide best practices for runtime tuning.
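As an illustration of what such runtime tuning can look like, the following sketch sets the environment variables described in the public Graviton tuning guides before a framework is imported. The helper names `graviton_tuning_env` and `apply_env` are hypothetical, the values are illustrative, and nothing here is claimed to match any specific production configuration. Variable names and recommended values should be checked against the current guides for the framework version in use.

```python
import os
from typing import Dict


def graviton_tuning_env(framework: str, num_threads: int) -> Dict[str, str]:
    """Return environment settings commonly suggested for Graviton3 CPU inference."""
    # Usually set to the number of vCPUs available to the process.
    env = {"OMP_NUM_THREADS": str(num_threads)}
    if framework == "pytorch":
        env.update({
            "DNNL_DEFAULT_FPMATH_MODE": "BF16",  # allow bfloat16 fast-math kernels
            "THP_MEM_ALLOC_ENABLE": "1",         # transparent huge pages for allocations
            "LRU_CACHE_CAPACITY": "1024",        # larger primitive cache
        })
    elif framework == "tensorflow":
        env.update({
            "TF_ENABLE_ONEDNN_OPTS": "1",            # enable the oneDNN backend
            "ONEDNN_DEFAULT_FPMATH_MODE": "BF16",    # allow bfloat16 fast-math kernels
        })
    else:
        raise ValueError(f"unsupported framework: {framework!r}")
    return env


def apply_env(env: Dict[str, str]) -> None:
    """Apply settings without overriding values already set by the operator."""
    for key, value in env.items():
        os.environ.setdefault(key, value)


if __name__ == "__main__":
    # Must run before the framework is imported for the settings to take effect.
    apply_env(graviton_tuning_env("pytorch", num_threads=os.cpu_count() or 1))
    print({k: os.environ[k] for k in graviton_tuning_env("pytorch", 1)})
```

Using `setdefault` is a deliberate choice here: values exported in the container or service definition take precedence over the defaults in code. bfloat16 fast math trades a small amount of numerical precision for speed, so model accuracy should be validated after enabling it.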

So far, we have migrated PyTorch- and TensorFlow-based DistilRoBERTa-base, spaCy clustering, Prophet, and XLM-R models to Graviton3-based c7g instances. These models serve intent detection, text clustering, creative insights, text classification, smart budget allocation, and image download services. These services power our unified customer experience (unified-cxm) platform and conversational AI to allow brands to build more self-serve use cases for their customers. Next, we are migrating ONNX and other larger models to Graviton3-based m7g general purpose and Graviton2-based g5g GPU instances to achieve similar performance improvements and cost savings.

Conclusion

Switching to Graviton3-based instances was quick in terms of engineering time, and resulted in 20% throughput improvement, 30% latency reduction, 25–30% cost savings, improved customer experience, and a lower carbon footprint for our workloads. Based on our experience, we will continue to seek new compute from AWS that will reduce our costs and improve the customer experience.

For further reading, refer to the following:

Optimized PyTorch 2.0 Inference with AWS Graviton processors

(Beta) PyTorch Inference Performance Tuning on AWS Graviton Processors

AWS Graviton Technical Guide: Machine Learning

About the Authors

Sunita Nadampalli is a Software Development Manager at AWS. She leads Graviton software performance optimizations for Machine Learning and HPC workloads. She is passionate about open source software development and delivering high-performance and sustainable software solutions with Arm SoCs.

Gaurav Garg is a Sr. Technical Account Manager at AWS with 15 years of experience. He has a strong operations background. In his role, he works with independent software vendors to build scalable and cost-effective solutions with AWS that meet their business requirements. He is passionate about security and databases.

Ratnesh Jamidar is an AVP of Engineering at Sprinklr with 8 years of experience. He is a seasoned Machine Learning professional with expertise in designing and implementing large-scale, distributed, and highly available AI products and infrastructure.

Vinayak Trivedi is an Associate Director of Engineering at Sprinklr with 4 years of experience in Backend & AI. He is proficient in Applied Machine Learning & Data Science, with a history of building large-scale, scalable and resilient systems.
