Db2 is an IBM relational database that supports large-scale transactional and analytical workloads. Amazon Relational Database Service (Amazon RDS) for Db2 is a new RDS engine that makes it straightforward to set up, operate, and scale Db2 databases in the cloud, freeing you to focus on your applications and business.

When you’re migrating your mission-critical Db2 database from on premises or Amazon Elastic Compute Cloud (Amazon EC2) to Amazon RDS for Db2, one of the key requirements is to have near-zero downtime. The following are possible reasons for this:

Unloading tables can’t disrupt normal business hours. Shutting down access to unload databases during the workday would negatively impact operations.

Some critical tables contain billions of records, exceeding 1 TB in size. These massive datasets can’t be unloaded within the limited maintenance windows available outside business hours.

You can address these challenges using logical data replication. By capturing changes while the table is being loaded, no outage is required and there is no impact on the source database applications.

Migrating a database requires recreating all database objects first, and then for each table doing a full load, after which changes can be captured from the database transaction logs.

The Db2 Migration Tool (Db2MT) is a tool developed in collaboration with IBM and AWS which can help migrate data into RDS for Db2. Db2MT is distributed and supported on GitHub. You can open issues and feature requests directly in this repository. The tool simplifies and greatly speeds up the process of migration by using parallelism for unloading all database definitions and data, uploading the data and definitions to the cloud and loading the data directly into Amazon RDS for DB2.

Solution overview

This post demonstrates how to use IBM InfoSphere Data Replication (IIDR) Q Replication to migrate data with minimal downtime. We walk you through the process of setting up Q Replication to replicate data from the source database (on premises) to the target database (Amazon RDS for Db2). The migration happens in the background while the source system remains online. After the target database is fully loaded and kept in sync with the source by the replication process, we switch over to the new target database with only a brief outage during the cutover, which is required only to verify all data has been replicated and is consistent.

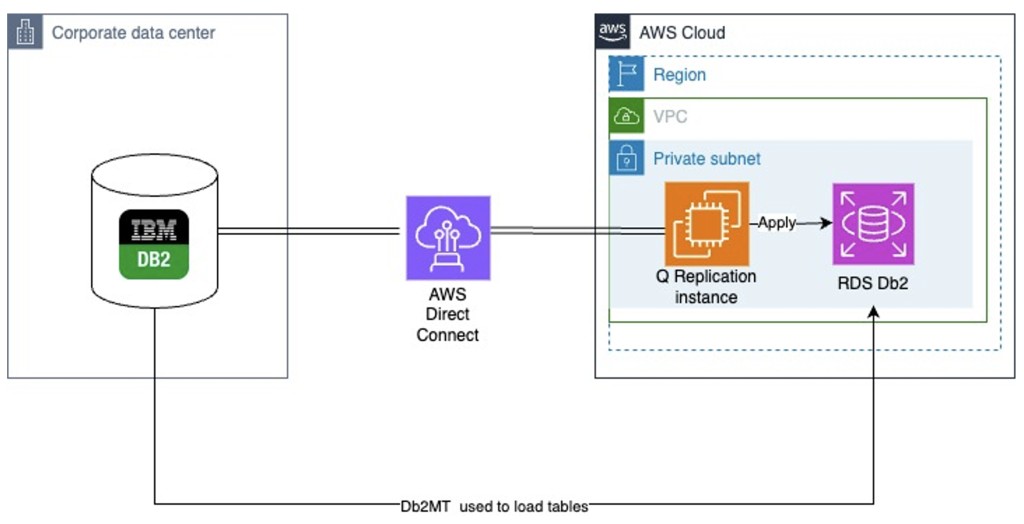

The following diagram illustrates the solution architecture.

In this diagram, we use Amazon EC2 to host a Q Replication server instance. The Q Replication instance remotely captures changes from the source database and applies those changes to the target RDS for Db2 database. The Q Replication process uses IBM MQ for staging changes extracted from the source database recovery log and a Db2 instance for holding its metadata. IBM MQ, Db2, and Q Replication executables are installed on the EC2 instance.

Prerequisites

You should have the following prerequisites:

Connectivity from on premises to the AWS Cloud using AWS Direct Connect

An RDS for Db2 instance

The Db2MT for data migration

A method to retain source Db2 recovery logs until all data is migrated

Set up the Q Replication environment

You can download IBM MQ and Db2 software images from IBM Passport Advantage with a valid account. In this post, we use IBM MQ and a Db2 enterprise trial license, which is valid for 90 days.

To set up the Q Replication environment, complete the following steps:

Follow the prerequisites to configure the EC2 instance (Linux) and install IBM MQ.

Accept MQ license and create a queue manager (for this post, we call ours QMRDS):

Start the queue manager:

Install Db2:

Create a Db2 client instance:

Set up database connections

Database names can be the same on the source and target, but the alias what we create here in our Q Replication instance must be different. In this example, the actual database name is bench10k for both instances, but we catalog the source as BENCHS and the target as BENCHT to differentiate them for replication purposes:

Create MQ resources

To create your MQ resources, complete the following steps:

Add MQ paths to your shell environment so you can invoke MQ commands:

Add the following lines in .bash_profile:

Q Replication uses IBM MQ queues for exchanging messages between the Capture and Apply programs and for keeping track of the data captured from the recovery log by the Capture program. Because both Capture and Apply are running on the same instance, all queues can be local queues. We create the following queues:

RESTARTQ – The restart queue is used by the Capture program to keep track of its progress replicating changes so it can determine where to restart if stopped. It contains the Db2 log sequence number (LSN) of the oldest inflight transaction and the maximum Commit LSN for transactions already replicated.

ADMINQ – This administrative queue is used for communication between the Q Capture and Q Apply processes.

DATAQ1 – The transactions captured by the Capture process are staged in this queue for the Apply process to replicate. We set the QDEPTH to 999999999 (default is only 5000) so that an unlimited amount of data can be staged in case the Apply program is stopped or the target database is temporarily unavailable.

We use the following code to create the queues:

Create Q replication control tables

The Q Replication metadata as well as the monitoring data and all messages it produces are stored in Db2 tables. We use a Q Replication asnclp script to create the Q Replication control tables and the replication subscriptions for the tables we want to migrate. Creating subscriptions inserts data into the control tables. An asnclp script is run as: asnclp -f filename.

For Amazon RDS for Db2, the Q Apply control tables require their own tablespaces, which need to be created manually through a stored procedure because they can’t be created directly on Amazon RDS for Db2.

Connect to the RDS for Db2 instance with the admin user name and password that were used for creating the instance and run the following commands:

You can now create the tables that Q Replication requires by running an asnclp script with the CREATE CONTROL TABLES command:

Q Replication requires creating a QMAP object, which identifies the queues used for staging and transmitting the transactions to replicate from the source database.

In our configuration, both Capture and Apply run on the same system and we can use a single local queue, therefore the source and target queue names are both QASN.DATAQ1 in the CREATE REPLMAP command:

With a QMAP defined that identifies the MQ queues, we’re ready to create replication subscriptions.

The following script creates subscriptions for all tables under one schema. The HAS LOAD PHASE N option (for no load) is specified in the CREATE QSUB command, because we will load the tables at the target using Db2MT. If instead you had specified HAS LOAD PHASE I (for an internal load), then by default Q Replication would use a load from cursor and automatically load the tables when the subscriptions are activated.

You can consider an internal load instead of using the Db2MT utility if the target database already contains all required objects. In this post, we use Db2MT and define the subscriptions with no load because it’s the fastest way for loading very large tables as well as for synchronizing the target after it has been loaded. It’s the preferred method for migrating all tables at once. With no load, you restart Capture to read all the changes that took place during the load phase for all tables, instead of letting Capture run and spill those changes to MQ while the table is being loaded. If instead you had a replication configuration that is already operational and needed to incrementally add tables without disrupting active replication subscriptions, then the automatic load would be preferable.

The instructions in this post assume the source Db2 instance is at version 11.5FP8 or later, for which the procedure is significantly simpler because a timestamp can be used for later restarting the Capture program. If the source Db2 is at a lower version, define the subscription as follows; we mask replication exceptions while catching up after the data migration by specifying OKSQLSTATE on the subscription:

Start the replication to activate the subscriptions

We first start the capture and apply program to verify all subscriptions can be activated successfully. For pre-V11.5FP8, this will also set the restart point for reading the source Db2 logs.

You can issue the following SQL query to verify the state of the subscriptions:

Set up your RDS for Db2 environment

For instructions to create and configure your RDS for Db2 cluster, refer to here. Make sure to have Q Replication instance in the same subnet as of your RDS for Db2 cluster.

Data migration using the Db2MT

Before we start migrating all the data, we must get the time at which all in-flight transactions are committed. This is because we will capture changes that take place during the data migration from the Db2 recovery logs and must guarantee that all changes will be either included in the data migration or read from the log.

To obtain the start time of the earliest not-yet committed transaction, you can run the following query to see any running transactions. If the query is empty, there are no currently not-yet committed transactions and the current time of the query run should suffice.

The open source Db2MT simplifies data migration using Db2 databases. Written in Go, Db2MT has an extensible architecture to customize the scripts it generates. These scripts allow you to validate the migration process before running it.

Db2 backups can normally only be restored within the same platform family. However, on Linux and UNIX, you can restore backups from earlier versions if the endianness matches between the backup and restore systems. Otherwise, a complex process is required involving exporting DDL, data, and recreating objects in the target database.

With Db2MT, this entire migration process is streamlined. Db2MT generates the necessary scripts to export from the source database and import into the target, handling object recreation and data loading. This makes Db2 database migration simple and efficient.

Migrate the data using Db2MT from an AIX source database to an RDS for Db2 target

When you have direct connectivity to AWS, Db2MT can be installed either direct on AIX/Windows server or a Db2 client. The Db2MT pulls high-fidelity metadata using native db2look and builds parallel paths to unload data direct from the Db2 server to Amazon Simple Storage Service (Amazon S3) using multiple paths and chunks upload. The Db2MT uses the GO SDK to upload data directly to Amazon S3 because there is no AWS Command Line Interface (AWS CLI) available on AIX. Db2MT uses IXF format to retain the maximum data fidelity to transfer the data without getting affected by the code page of the client machine. For more details on migration using Db2MT to migrate from AIX to Amazon RDS for Db2, refer to here.

Migrate the data using Db2MT from a Linux source database to an RDS for Db2 target

Db2MT will take offline and online database backups direct to Amazon S3 from a Db2 client using multiple paths depending on the size of the database. The same or another Db2 client that has connectivity to Amazon RDS for Db2 can run the RDS for Db2 stored procedure RDSADMIN_RESTORE to restore the offline or online database backup from Amazon S3. Optionally, you can apply the archive logs for online backup to synchronize changes. For more details on migration using Db2MT from Linux to Amazon RDS for Db2, refer to here.

Restart the replication to capture all changes that took place during the migration

When the data load using Db2MT is complete, you can restart the Q Replication to apply the backlog of changes to the target database (Amazon RDS for Db2).

You must make sure to restart the Capture program from before the data migration started and go back in the recovery log far enough to capture any non-yet committed (in-flight) transactions at the time the Db2MT was started. With Db2 V11.5FP8 or later, a timestamp obtained previously can be provided to Q Capture, which will map this timestamp into an LSN from where to start reading log records. For prior versions, the LSN parameter requires an LSN, which can be difficult to obtain. For pre-V11FP8, instead of providing a restart LSN, we use a configuration with OKSQLSTATES on the subscription to disregard any exception. The caveat is that if you want to continue using replication after the migration is complete, you have to change the subscription to remove this option—otherwise, replication exceptions can’t be detected.

When you restart Capture with the LSN parameter (with either a timestamp or an actual LSN), instead of letting it restart from where it was last stopped (also called a warm start), Q Replication goes into force mode, for which changes are applied with conflict resolution to restore consistency of the target database. During that catchup period, data mismatches are expected because the data was being read and copied while the source application was still running. No exceptions are reported during that catchup period. After all changes have been replicated up to the capture start time, normal processing resumes, and any data mismatch exceptions are reported.

For example, if you started Db2MT at 10:22 and the longest not-yet committed transaction had started 10 minutes earlier, then you can restart Capture to read the logs from 10:12:

You can track the progress of the replication process by querying from the monitor tables:

The END2END_LATENCY is reported in milliseconds. It’s the average elapsed time from the commit at the source and the commit time at the target for all transactions applied during the monitor interval, which by default is 30 seconds.

The OLDEST_TRANS is the current point-in-time consistency of the target RDS database; all transactions from the source have been applied up to that time. When OLDEST_TRANS approaches the current time, you know that all data is consistent with the source, and you can initiate your cutover.

Conclusion

In this post, we showed the steps involved in migrating on-premises Db2 on POWER to Amazon RDS for Db2, including the high-level solution overview and the detailed steps in configuring the Q Replication using remote Capture and Apply, creating the RDS for Db2 instances, and using Db2MT for data migration.

If you have any questions, comments, or suggestions, please leave a comment.

About the authors

Ramesh Kumar Venkatraman is a Senior Solutions Architect at AWS who is passionate about containers and databases. He works with AWS customers to design, deploy, and manage their AWS workloads and architectures. In his spare time, he loves to play with his two kids and follows cricket.

Serge Bourbonnais has worked at IBM for over 30 years, with the last 19 at the Silicon Valley Lab focusing on database replication and international assignments. Originally from Montreal, he enjoys cooking, walking, and spending time with friends and family.

Feng Cai is a Database Consultant at AWS. He has a wide background in providing database services to clients. Feng is currently a member of the AWS Global Competency Centre, providing homogeneous and heterogenous database migration support to public sector clients.

Sumit Kumar is a Senior Solutions Architect at AWS, he enjoys solving complex problems. He has been helping customers across various industries to build and design their workloads on AWS Cloud. He enjoys cooking, playing chess and spend time with his family.

Source: Read More