Natural language processing (NLP) involves using algorithms to understand and generate human language. It is a subfield of artificial intelligence that aims to bridge the gap between human communication and computer understanding. This field covers language translation, sentiment analysis, and language generation, providing essential tools for technological advancements and human-computer interaction. NLP’s ultimate goal is to enable machines to perform various language-related tasks with human-like proficiency, making it an integral part of modern AI research and applications.

There is still a critical challenge of planning tasks using large language models (LLMs). Despite significant advancements in NLP, the planning capabilities of these models need to catch up to human performance. This performance gap is critical as planning is a complex task that involves decision-making and organizing actions to achieve specific goals, which are fundamental aspects of many real-world applications. Efficient planning is essential for activities ranging from daily scheduling to strategic business decisions, highlighting the importance of improving LLMs’ planning abilities.

Currently, planning in AI is extensively studied in robotics and automated systems, using algorithms that rely on predefined languages like PDDL (Planning Domain Definition Language) and ASP (Answer Set Programming). These methods often require expert knowledge to set up and are not expressed in natural language, limiting their accessibility and applicability in real-world scenarios. Recent efforts have attempted to adapt LLMs for planning tasks, but these approaches need more realistic benchmarks and capture the complexities of real-world scenarios. Thus, there is a need for benchmarks that reflect practical planning challenges.

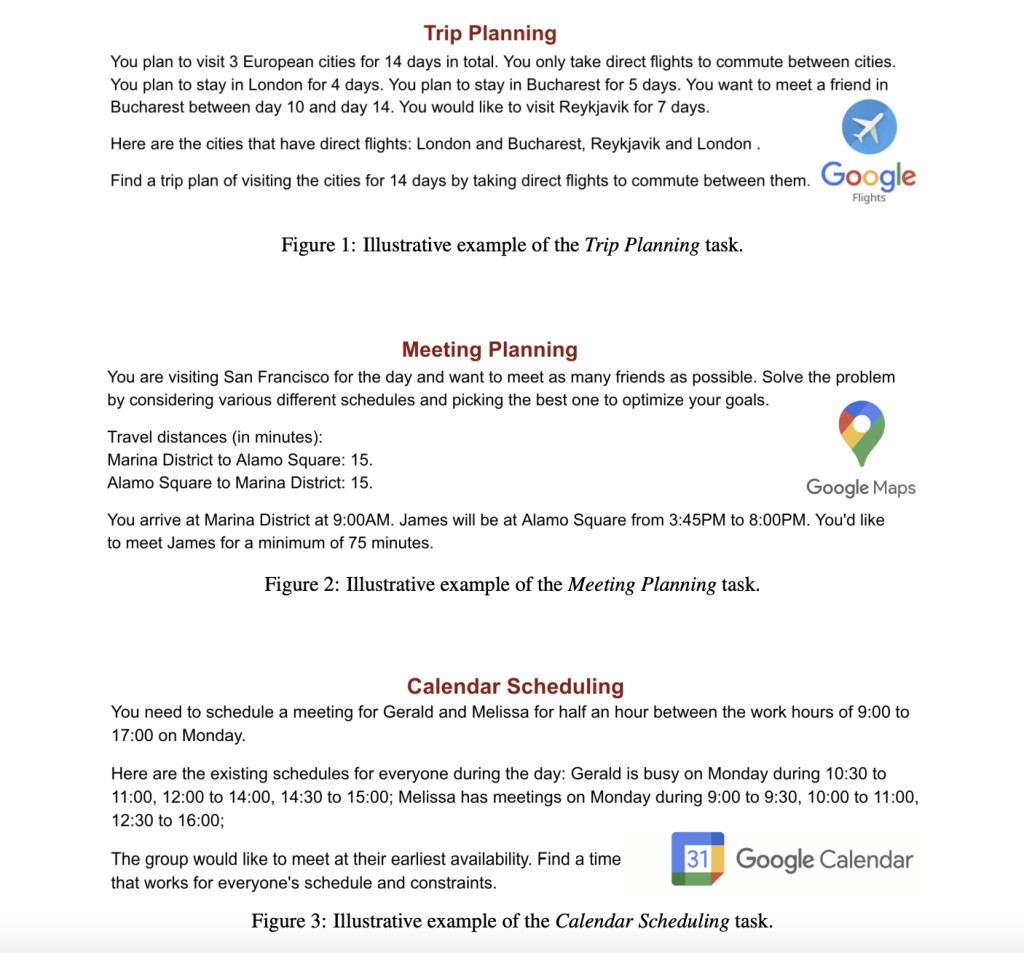

A research team from Google DeepMind has introduced NATURAL PLAN, a new benchmark designed to evaluate the planning capabilities of LLMs in natural language contexts. This benchmark focuses on three main tasks: Trip Planning, Meeting Planning, and Calendar Scheduling. The dataset provides real-world information from tools like Google Flights, Google Maps, and Google Calendar, aiming to simulate realistic planning tasks without needing a tool-use environment. NATURAL PLAN decouples tool use from the reasoning task by providing outputs from these tools as context, which helps focus the evaluation on the planning capabilities of the models.

NATURAL PLAN is meticulously designed to assess how well LLMs can handle complex planning tasks described in natural language. For Trip Planning, the task involves planning an itinerary under given constraints, such as visiting multiple cities within a set duration, using direct flights only. Meeting Planning requires scheduling meetings under various constraints, including travel times and availability of participants. Calendar Scheduling focuses on arranging work meetings based on existing schedules and constraints. The dataset construction involves synthetically creating tasks using real data from Google tools and adding constraints to ensure a single correct solution. This approach provides a robust and realistic benchmark for evaluating LLMs’ planning abilities.

The evaluation revealed that current state-of-the-art models, such as GPT-4 and Gemini 1.5 Pro, face significant challenges with NATURAL PLAN tasks. In Trip Planning, GPT-4 achieved a 31.1% success rate, while Gemini 1.5 Pro reached 34.8%. Performance significantly dropped as task complexity increased, with models performing below 5% when planning trips involving ten cities. GPT-4 achieved 47.0% accuracy for Meeting Planning, while Gemini 1.5 Pro reached 39.1%. In Calendar Scheduling, Gemini 1.5 Pro outperformed others with a 48.9% success rate. These results underscore the difficulty of planning in natural language and the need for improved methods, highlighting the significance of the research findings.

The researchers also conducted various experiments to better understand the models’ limitations and strengths. They found that model performance decreases as task complexity increases, such as with more cities, people, or meeting days involved. Furthermore, models performed worse in hard-to-easy generalization scenarios compared to easy-to-hard, indicating challenges in learning from complex examples. Self-correction experiments showed that prompting models to identify and fix their errors often led to performance drops, especially in stronger models like GPT-4 and Gemini 1.5 Pro. However, long-context capabilities experiments demonstrated promise, with Gemini 1.5 Pro showing steady improvement with more in-context examples, achieving up to 39.9% accuracy in Trip Planning with 800 shots.

In conclusion, the research underscores a significant gap in the planning capabilities of current LLMs when confronted with complex, real-world tasks. However, it also illuminates the potential of LLMs, offering a glimmer of hope for the future. NATURAL PLAN provides a valuable benchmark for evaluating and enhancing these capabilities. The findings suggest that while LLMs have room for improvement, they hold promise. Substantial advancements are needed to bridge the performance gap with human planners. These advancements could revolutionize the practical applications of LLMs in various fields, making them more effective and reliable tools for planning tasks.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter. Join our Telegram Channel, Discord Channel, and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our 44k+ ML SubReddit

The post Can Machines Plan Like Us? NATURAL PLAN Sheds Light on the Limits and Potential of Large Language Models appeared first on MarkTechPost.

Source: Read MoreÂ