Apple made a significant announcement, advocating strongly for on-device AI with its newly introduced Apple Intelligence. The approach centers on a ~3-billion-parameter large language model (LLM) that runs on devices such as the Mac, iPhone, and iPad and uses fine-tuned LoRA (low-rank adaptation) adapters to specialize in particular tasks. Apple reports that this model outperforms larger models, including several 7-billion-parameter LLMs and the 3.8-billion-parameter Phi-3-mini, marking a major step forward for on-device AI.
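To make the adapter idea concrete, here is a minimal pure-Python sketch of how LoRA works in general, not Apple's implementation. A frozen weight matrix W is shared by every task, and each task adds a small low-rank update B·A, so the output is y = Wx + scale·B(Ax). The toy matrices, the adapter names `summarize` and `proofread`, and the 3072×3072 layer with rank 16 used for the parameter count are all hypothetical values chosen for illustration.

```python
# Minimal LoRA sketch in pure Python (illustration only, not Apple's code).
# Assumptions: one frozen linear layer W (d_out x d_in) and per-task rank-r
# adapters A (r x d_in) and B (d_out x r). All sizes and values are toy examples.

Matrix = list[list[float]]
Vector = list[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix m by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def lora_forward(w: Matrix, a: Matrix, b: Matrix, x: Vector, scale: float = 1.0) -> Vector:
    """y = W x + scale * B (A x); W stays frozen, only A and B are task-specific."""
    base = matvec(w, x)
    delta = matvec(b, matvec(a, x))
    return [y + scale * d for y, d in zip(base, delta)]


def lora_param_count(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters in one adapter: B (d_out * r) plus A (r * d_in)."""
    return d_out * rank + rank * d_in


# Frozen base weights shared by every task (toy 2x3 layer).
W = [[1.0, 0.0, 2.0],
     [0.0, 1.0, -1.0]]

# Two hypothetical rank-1 adapters, stored as (A, B) pairs.
adapters = {
    "summarize": ([[1.0, 1.0, 0.0]], [[0.5], [0.0]]),
    "proofread": ([[0.0, 0.0, 1.0]], [[0.0], [2.0]]),
}

x = [1.0, 2.0, 3.0]
for task, (A, B) in adapters.items():
    # Swapping tasks only swaps the small (A, B) pair; W is never modified.
    print(task, lora_forward(W, A, B, x))

# Size comparison for a hypothetical 3072 x 3072 layer with a rank-16 adapter.
full = 3072 * 3072
lora = lora_param_count(3072, 3072, rank=16)
print(f"full layer: {full:,} params; rank-16 adapter: {lora:,} ({lora / full:.1%})")
```

Because each adapter here is roughly 1% of the layer it modifies, many task adapters can sit alongside a single copy of the base weights, which is the property that makes per-task specialization affordable on a phone.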

Technological Advancements

On-Device Model

Apple's on-device model uses grouped-query attention together with activation and embedding quantization, and it runs on the Neural Engine. With these optimizations, the iPhone 15 Pro reaches a time-to-first-token latency of about 0.6 milliseconds per prompt token and a generation rate of about 30 tokens per second. Because the fine-tuned LoRA adapters are small relative to the base model, they can be loaded dynamically, cached in memory, and swapped as needed, letting a single base model serve many different tasks.
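Apple's published latency figure is about 0.6 ms of time-to-first-token per prompt token, plus a decoding rate of about 30 tokens per second. The back-of-envelope sketch below turns those two numbers into an end-to-end estimate. It assumes prompt processing scales linearly with prompt length and decoding runs at a constant rate; both are simplifications, and the 1,000-token prompt and 150-token reply are hypothetical.

```python
# Back-of-envelope latency estimate from Apple's quoted iPhone 15 Pro figures.
# Assumptions: prefill cost is linear at ~0.6 ms per prompt token and decoding
# runs at a steady ~30 tokens/s. Real latency varies with adapter, prompt, and device state.

PREFILL_MS_PER_TOKEN = 0.6   # time-to-first-token cost per prompt token (ms)
DECODE_TOKENS_PER_S = 30.0   # generation rate (tokens per second)


def estimate_latency_s(prompt_tokens: int, output_tokens: int) -> tuple[float, float]:
    """Return (time_to_first_token_s, total_time_s) under the linear model above."""
    ttft_s = prompt_tokens * PREFILL_MS_PER_TOKEN / 1000.0
    decode_s = output_tokens / DECODE_TOKENS_PER_S
    return ttft_s, ttft_s + decode_s


# Hypothetical request: summarize a 1,000-token message into a 150-token reply.
ttft, total = estimate_latency_s(prompt_tokens=1000, output_tokens=150)
print(f"TTFT ~ {ttft:.2f} s, total ~ {total:.2f} s")
```

Under these assumptions decoding, not prompt processing, dominates the wait for a reply of this length, which is why the tokens-per-second figure matters as much as time-to-first-token.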

Server Model

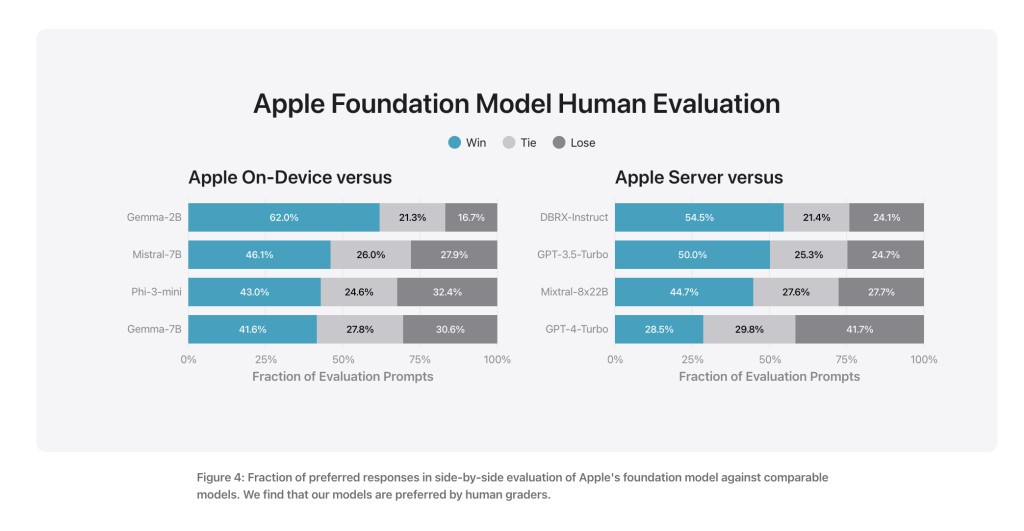

Apple has not disclosed the server model's size, but it uses a larger vocabulary of 100,000 tokens, compared with 49,000 tokens for the on-device model. Apple reports that the server model's performance is comparable to GPT-4-Turbo, suggesting it can compete with some of the most advanced AI systems currently available.
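Vocabulary size matters on a phone because the token embedding table grows linearly with it: one row of d_model weights per token. The sketch below estimates embedding-table size for both vocabularies. The hidden size of 3072 is a hypothetical placeholder (neither model's width is given here), applied to both models only to isolate the effect of vocabulary size, and the 16-bit and 4-bit widths are illustrative.

```python
# Rough embedding-table sizing for the 49,000- and 100,000-token vocabularies.
# Assumptions (hypothetical): the same hidden size D_MODEL for both models,
# a single embedding matrix per model, and illustrative 16-bit / 4-bit weights.

D_MODEL = 3072  # hypothetical hidden size, for illustration only


def embedding_params(vocab_size: int, d_model: int) -> int:
    """Parameters in one vocab_size x d_model embedding matrix."""
    return vocab_size * d_model


def megabytes(params: int, bits_per_weight: int) -> float:
    """Storage in megabytes (10^6 bytes) at the given bit width."""
    return params * bits_per_weight / 8 / 1e6


for name, vocab in [("on-device", 49_000), ("server", 100_000)]:
    p = embedding_params(vocab, D_MODEL)
    print(f"{name}: {p:,} params, ~{megabytes(p, 16):.0f} MB at 16-bit, "
          f"~{megabytes(p, 4):.0f} MB at 4-bit")
```

Doubling the vocabulary roughly doubles this table, which is one reason a smaller vocabulary and quantized embeddings suit the memory-constrained on-device model better than the server model.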

Training and Optimization

Apple trains these models on TPUs and GPUs with AXLearn, its open-source framework built on JAX and XLA, using parallelism techniques such as Fully Sharded Data Parallel (FSDP). Post-training combines a rejection sampling fine-tuning algorithm with reinforcement learning from human feedback (RLHF) that uses mirror descent policy optimization and a leave-one-out advantage estimator. Apple credits this combination with making the models capable, efficient, and robust in real-world use.
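Two of the post-training ideas named above can be illustrated in a few lines. The sketch below shows best-of-k selection, the simplest form of rejection sampling, and a leave-one-out advantage estimator, where each sampled response is scored against the mean reward of the other responses to the same prompt. The rewards are made up, the reward model is not shown, and this is a generic illustration, not Apple's training code; in a policy-gradient update, these advantages would weight each sample's log-probability gradient.

```python
# Generic sketch of best-of-k rejection sampling and leave-one-out advantages.
# Assumptions: rewards come from some reward model (not shown) and there are
# k >= 2 sampled responses per prompt. The samples and rewards are made up.


def best_of_k(samples: list[str], rewards: list[float]) -> str:
    """Simplest rejection sampling: keep only the highest-reward sample."""
    best_index = max(range(len(samples)), key=lambda i: rewards[i])
    return samples[best_index]


def leave_one_out_advantages(rewards: list[float]) -> list[float]:
    """Advantage of sample i = its reward minus the mean reward of the other k - 1 samples."""
    k = len(rewards)
    if k < 2:
        raise ValueError("need at least two samples per prompt")
    total = sum(rewards)
    return [r - (total - r) / (k - 1) for r in rewards]


samples = ["draft A", "draft B", "draft C", "draft D"]
rewards = [1.0, 3.0, 2.0, 2.0]
print(best_of_k(samples, rewards))
print(leave_one_out_advantages(rewards))
```

The leave-one-out baseline needs no separately trained value function: each sample's baseline comes from its siblings, and the advantages for a prompt always sum to zero, so above-average responses are reinforced and below-average ones discouraged.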

Synthetic Data and Evaluation

Apple uses synthetic data generation to augment training for tasks such as summarization, with the aim of improving accuracy. Evaluation is extensive: Apple uses 750 samples for each production use case to test the models' performance.
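One way to see what 750 samples per use case buys is the worst-case sampling error of a measured rate, such as the fraction of outputs graders rate as good. Assuming independent samples and the usual normal approximation (our assumption, not a stated part of Apple's method), the standard error is largest at p = 0.5:

```latex
\mathrm{SE}(\hat{p}) = \sqrt{\frac{\hat{p}\,(1-\hat{p})}{n}}
  \le \sqrt{\frac{0.25}{750}} \approx 0.0183,
\qquad
1.96 \times 0.0183 \approx 0.036
```

So any rate measured on 750 samples carries a 95% margin of error of at most about ±3.6 percentage points, which is tight enough to separate models whose scores differ by more than a few points.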

Privacy and Security

Privacy is a cornerstone of Apple's AI strategy. Running models on-device keeps user data on the device, where it stays private and secure. Fine-tuned adapters also let Apple address specific user needs without modifying the shared base model or compromising user privacy.

Performance and User Experience

Together, Apple's on-device and server models aim to deliver a seamless user experience. In Apple's summarization evaluations, the on-device model outperforms competitors such as Phi-3-mini, while the server model performs comparably to GPT-4-Turbo. Apple also reports low violation rates on adversarial prompts, underscoring the models' robustness and safety.

Conclusion

Apple's move into on-device AI with Apple Intelligence represents a major technological leap. By combining fine-tuned LoRA adapters with a focus on privacy and efficiency, Apple is setting new standards in the AI landscape. Integrating these models across iPhone, iPad, and Mac promises to enhance everyday tasks and make AI a more integral part of Apple's ecosystem.

The post Apple Intelligence: Leading the Way in On-Device AI with Advanced Fine-Tuned Models and Privacy appeared first on MarkTechPost.