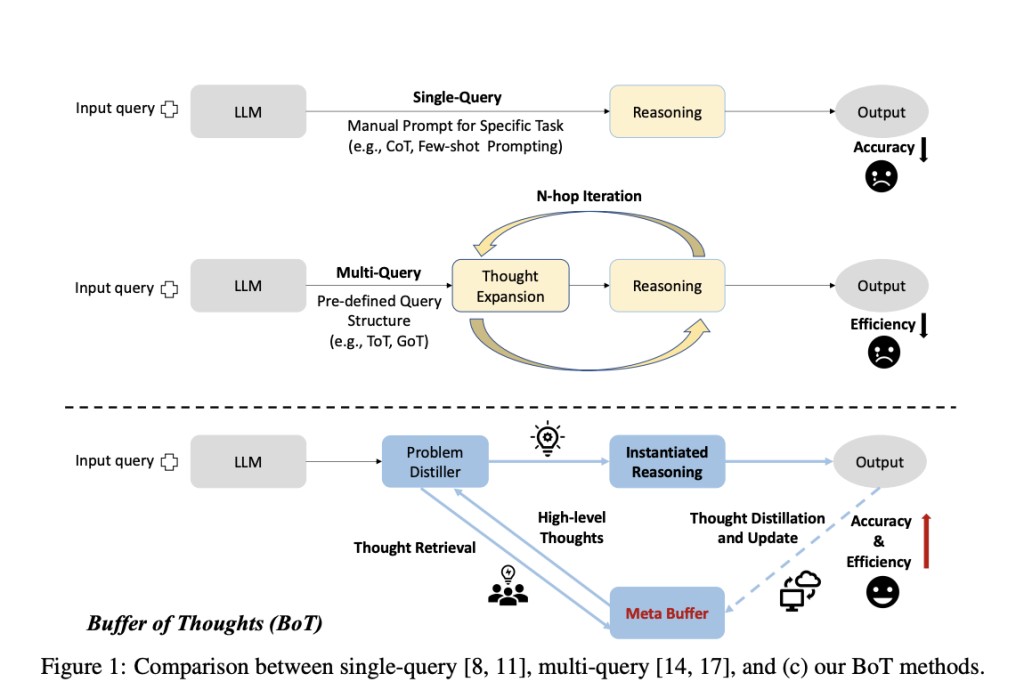

Several Large Language Models (LLMs), such as GPT-4, PaLM, and LLaMA, have demonstrated remarkable performance on a range of reasoning tasks. Two levers further improve their reasoning performance: more effective prompting methods and larger model sizes. Prompting approaches fall into two classes: (i) single-query methods, which complete the reasoning process in one query and center on prompt engineering; and (ii) multi-query methods, which issue multiple LLM queries to produce different plausible reasoning paths and break complex problems into smaller ones. Examples of the latter include Least-to-Most, Tree of Thoughts (ToT), and Graph of Thoughts (GoT).

Nevertheless, there are limitations to both types of methods:

Single-query reasoning systems typically rely on prior assumptions or relevant exemplars of the reasoning process, which makes it impractical to design them manually task by task.

Multi-query reasoning systems are computationally intensive because they recursively expand reasoning paths to find a unique intrinsic structure for each task.

Both single-query and multi-query reasoning systems are limited by their reasoning structures and exemplars. They fail to derive general and high-level guidelines or thoughts from previously completed tasks, which could be useful for improving efficiency and accuracy when solving similar problems.
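To get a feel for why recursive expansion is expensive, the toy count below compares one query against a fully expanded reasoning tree. It is only an illustrative sketch: the function name `tree_search_calls`, the branching factor, and the depth are assumptions made here, and real multi-query methods prune paths and also spend calls on evaluating thoughts, so actual costs differ.

```python
def tree_search_calls(branching: int, depth: int) -> int:
    """Count LLM calls needed to generate every node of a full tree,
    assuming one call per generated thought (a simplifying assumption)."""
    return sum(branching ** level for level in range(1, depth + 1))


# Hypothetical setting: 3 candidate thoughts per step, 3 reasoning steps.
single_query_calls = 1
multi_query_calls = tree_search_calls(branching=3, depth=3)  # 3 + 9 + 27

print(single_query_calls, multi_query_calls)
```

Even at this small scale the call count grows geometrically with depth, which is the cost that reusing a stored reasoning structure is meant to avoid.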

To address these limitations, a team of researchers from Peking University, UC Berkeley, and Stanford University has developed Buffer of Thoughts (BoT), a flexible framework for thought-augmented reasoning designed to improve the accuracy, efficiency, and robustness of LLMs across a wide range of tasks. A key component of BoT is the meta-buffer, a lightweight library that stores generalizable, high-level thoughts (thought-templates) distilled from various problem-solving processes. These thought-templates can be reused for other tasks: a relevant template is retrieved and instantiated with a task-specific reasoning structure, which enables thought-augmented reasoning without building that structure from scratch.
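The retrieve-then-instantiate idea can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation: the class names `ThoughtTemplate` and `MetaBuffer`, the `instantiate` helper, the example templates, and the 0.2 threshold are all hypothetical, and token-overlap (Jaccard) similarity is swapped in for whatever similarity measure a real system would use.

```python
from dataclasses import dataclass, field


@dataclass
class ThoughtTemplate:
    description: str  # what kind of problem the template addresses
    steps: str        # high-level, task-agnostic solution outline


def jaccard(a: str, b: str) -> float:
    """Token-overlap similarity; a simple stand-in for a learned similarity."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    return len(ta & tb) / len(ta | tb) if ta | tb else 0.0


@dataclass
class MetaBuffer:
    templates: list[ThoughtTemplate] = field(default_factory=list)

    def retrieve(self, problem: str, threshold: float = 0.2):
        """Return the most similar template, or None if nothing is close enough."""
        if not self.templates:
            return None
        best = max(self.templates, key=lambda t: jaccard(problem, t.description))
        return best if jaccard(problem, best.description) >= threshold else None


def instantiate(template: ThoughtTemplate, problem: str) -> str:
    """Build one prompt that applies the stored outline to a concrete problem."""
    return f"Problem: {problem}\nFollow this outline:\n{template.steps}"


# Hypothetical templates, written here purely for illustration.
buffer = MetaBuffer([
    ThoughtTemplate(
        "combine four numbers with arithmetic to reach a target number",
        "1. Enumerate operator orderings. 2. Evaluate each. 3. Return a match.",
    ),
    ThoughtTemplate(
        "find a chess move that delivers checkmate",
        "1. List legal moves. 2. Keep moves that give check. 3. Test for mate.",
    ),
])

hit = buffer.retrieve("use the numbers 4 7 8 8 with arithmetic to reach 24")
if hit is not None:
    print(instantiate(hit, "4 7 8 8 -> 24"))
```

The point of the design is that the instantiated prompt is sent in a single query, while the structure it carries was worked out once and stored.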

BoT is designed to be stable and scalable, so the team included a buffer manager that updates the meta-buffer dynamically. As a result, the meta-buffer’s capacity grows as more tasks are solved. The three main benefits of this approach are:

Enhanced Accuracy: Shared thought-templates let BoT instantiate high-level thoughts adaptively for different tasks. This removes the need to build reasoning structures from scratch and improves reasoning accuracy.

Streamlined Reasoning: By directly reusing informative historical reasoning structures, thought-augmented reasoning can streamline the reasoning process and avoid cumbersome multi-query procedures.

Improved Robustness: BoT’s procedure of retrieving and instantiating thoughts resembles the human thought process, which helps LLMs solve similar problems in a consistent way. Experimental results across various tasks show that BoT significantly improves accuracy, efficiency, and robustness. These practical benefits make BoT a promising tool for improving LLM performance in real-world applications.

The researchers build a buffer manager that distills thought-templates from different solutions, so the meta-buffer’s capacity grows as more tasks are solved. They run comprehensive experiments on ten challenging, reasoning-intensive tasks. At an average of only 12% of the cost of multi-query prompting approaches, BoT outperforms prior state-of-the-art (SOTA) methods by 51% on Checkmate-in-One, 11% on Game of 24, and 20% on Geometric Shapes.
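One way to picture the buffer manager's dynamic update is a novelty check: a newly distilled template is stored only if it is not too similar to one already in the buffer. The sketch below assumes that rule for illustration; `update_meta_buffer`, the 0.5 threshold, and the token-overlap similarity are hypothetical choices made here, not details confirmed by the article.

```python
def jaccard(a: str, b: str) -> float:
    """Token-overlap similarity; a simple stand-in for a learned similarity."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    return len(ta & tb) / len(ta | tb) if ta | tb else 0.0


def update_meta_buffer(templates: list[str], candidate: str,
                       threshold: float = 0.5) -> bool:
    """Append `candidate` unless a stored template is at least `threshold`
    similar to it. Returns True when the buffer grew."""
    if any(jaccard(candidate, stored) >= threshold for stored in templates):
        return False  # near-duplicate: keep the buffer compact
    templates.append(candidate)
    return True


# Hypothetical templates distilled from solved tasks.
meta_buffer = ["sort the items then scan once for adjacent duplicates"]
added_duplicate = update_meta_buffer(
    meta_buffer, "sort the items then scan once for adjacent duplicates")
added_new = update_meta_buffer(
    meta_buffer, "enumerate operator orderings and prune impossible branches")

print(added_duplicate, added_new, len(meta_buffer))
```

Filtering near-duplicates is what lets the buffer grow with genuinely new kinds of problems rather than with every solved instance.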

The proposed approach greatly improves accuracy while keeping reasoning efficient and robust. However, for problems that require human-like creativity, the method has little to offer because such problems often lack a precise thought-template. Moreover, if BoT uses a weaker model to initialize the meta-buffer, the resulting thought-templates may be of lower quality, because the weaker model has limited reasoning and instruction-following capabilities. Taken together, BoT points to two directions for future work: (1) building an open-domain system, such as an agent model, by combining BoT with external resources; and (2) optimizing the distillation of thought-templates, which could greatly improve their capability as templates for increasingly complex tasks.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

The post Buffer of Thoughts (BoT): A Novel Thought-Augmented Reasoning AI Approach for Enhancing Accuracy, Efficiency, and Robustness of LLMs appeared first on MarkTechPost.
