Chain-of-Thought (CoT) reasoning enhances the capabilities of LLMs, allowing them to perform more complex reasoning tasks. Despite being primarily trained for next-token prediction, LLMs can generate detailed steps in their responses when prompted to explain their thought process. This ability, which resembles logical reasoning, is paradoxical since LLMs are not explicitly designed for reasoning. Studies have shown that while LLMs struggle with solving problems through single-token predictions, they excel when they can generate a sequence of tokens, effectively using these sequences as a form of computational tape to handle more intricate problems.

Researchers from FAIR, Meta AI, Datashape, INRIA, and other institutions explore how CoT reasoning arises in transformers. They introduce “iteration heads,†specialized attention mechanisms crucial for iterative reasoning, and track their development and function within the network. The study reveals how these heads allow transformers to solve complex problems through multi-step reasoning by focusing on simple, controlled tasks, such as copying and polynomial iterations. Experiments show these skills transfer well between tasks, suggesting that transformers can develop internal circuits for reasoning influenced by their training data, which explains the strong CoT capabilities observed in larger models.

The study focuses on understanding how transformers, particularly in the context of language models, can learn and execute iterative algorithms, which involve sequential processing steps. By examining controlled tasks like the copying problem and polynomial iteration, the researchers aim to elucidate how transformers employ CoT reasoning to solve such tasks effectively. Through algorithmic representations and synthetic data, they investigate the emergence of CoT reasoning mechanisms, such as “iteration heads,†within transformer architectures. This allows for a detailed analysis of how transformers tackle iterative tasks, shedding light on their reasoning capabilities beyond simple token prediction.

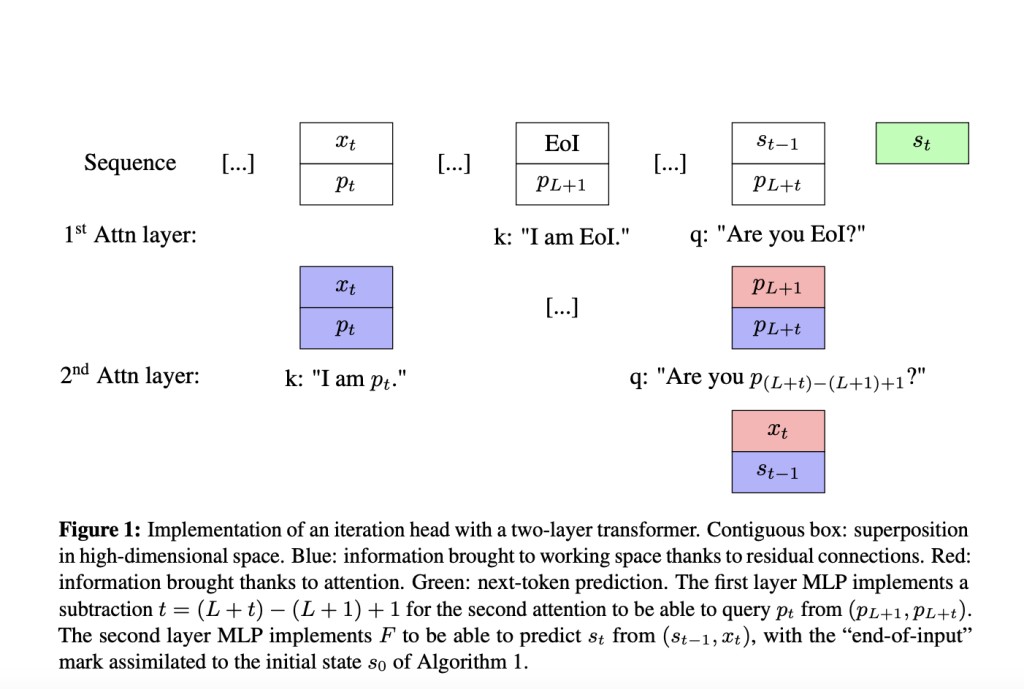

The researchers delve into how transformers, particularly in language models, can implement iterative algorithms effectively through CoT reasoning. It describes a theoretical framework, termed an “iteration head,†which enables a two-layer transformer to efficiently execute iterative tasks by employing attention mechanisms. Experimental results confirm the emergence of this theoretical circuit during training, highlighting its robustness across different tasks and model architectures. Additionally, ablation studies explore variations in the learned circuits and their impact on performance, shedding light on the mechanisms underlying CoT reasoning in transformers.

The study explores how strategic data curation can facilitate skill transfer and improve learning efficiency in language models. The model can leverage previously acquired knowledge by pretraining on a simpler task and then fine-tuning on a more complex one, resulting in faster convergence and improved performance. For instance, training on a polynomial iteration task before switching to the parity problem significantly reduces the training time required to learn the parity task. This controlled setup demonstrates the importance of data selection and the role of inductive biases in shaping learning dynamics and model performance.

In conclusion, the study delves into the emergence of CoT reasoning in LLMs by investigating their ability to handle iterative algorithms. It demonstrates how transformers, trained primarily on next-token prediction tasks, can efficiently tackle iterative tasks using CoT reasoning. Data curation is key in shaping model behavior, guiding them toward specific problem-solving circuits. While the study focuses on controlled scenarios, it suggests that transformers likely develop internal multistep reasoning circuits applicable across tasks. Additionally, it points out a limitation of transformers in maintaining internal states, which could impact their applicability to complex algorithms and language modeling.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter. Join our Telegram Channel, Discord Channel, and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our 44k+ ML SubReddit

The post Unveiling Chain-of-Thought Reasoning: Exploring Iterative Algorithms in Language Models appeared first on MarkTechPost.

Source: Read MoreÂ