Large Language Models (LLMs) have advanced significantly in recent years. Models like ChatGPT and GPT-4 let users interact with them and elicit natural language responses. To improve both human-machine interaction and the accuracy of LLMs, it is essential to have a method for evaluating these interactions dynamically. While LLMs have shown remarkable capabilities in generating text, conventional evaluation methods fail to capture their performance in interactive settings. Current evaluation relies on static pairs of inputs and outputs, which limits the understanding of language model capabilities.

Researchers from the University of Cambridge, the University of Oxford, and the Massachusetts Institute of Technology have introduced CheckMate to address challenges in evaluating large language models (LLMs), particularly in their use as problem-solving assistants. In domains like mathematics, where correctness is crucial, static evaluation limits what can be learned about the accuracy and helpfulness of LLMs. The proposed method aims to bridge this gap by enabling humans to interact with LLMs and evaluate their performance in real-time problem-solving scenarios, specifically focusing on undergraduate-level mathematics theorem proving.

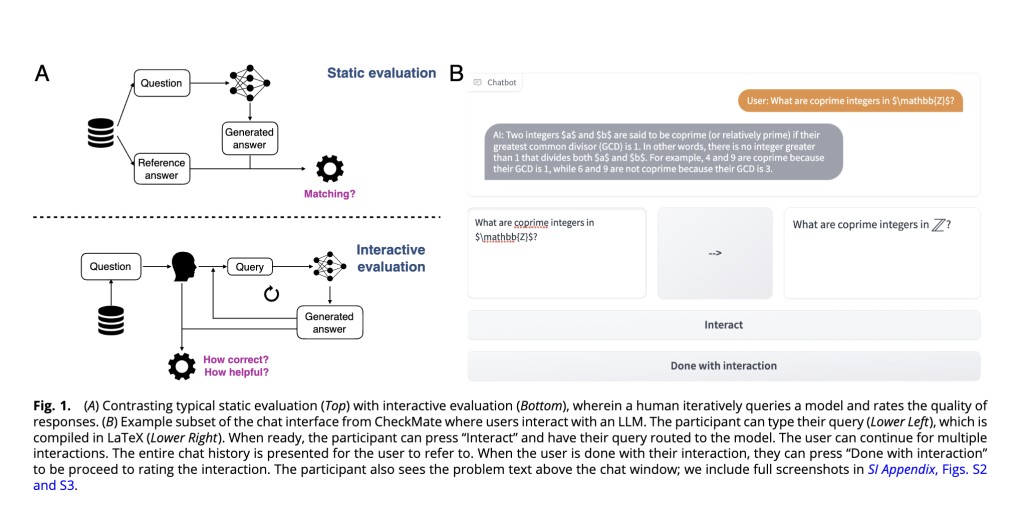

Current evaluation methods for LLMs rely predominantly on static assessments with predefined input-output pairs, which are insufficient for understanding performance in interactive settings. In contrast, the proposed CheckMate platform supports dynamic, interactive evaluation by allowing humans to engage with LLMs in problem-solving tasks. CheckMate is designed to assess LLMs’ performance in theorem proving by enabling users to interact with models like InstructGPT, ChatGPT, and GPT-4. By collecting real-time interactions and evaluations from human participants, the platform provides a more nuanced understanding of LLM capabilities, particularly in mathematics.

CheckMate’s methodology revolves around two key evaluation approaches: structured multistep interactive ratings and free-form instance-based evaluation. The platform collects data on user interactions with LLMs, capturing the correctness and perceived helpfulness of the generated responses. Through a mixed-cohort study involving participants ranging from undergraduate students to mathematics professors, CheckMate generates insights into how humans utilize LLMs for problem-solving and identifies patterns in user behavior. Additionally, domain experts conduct case studies to delve deeper into the strengths and weaknesses of LLMs in mathematical reasoning. The results obtained from CheckMate’s evaluations and case studies contribute to developing a taxonomy of user behaviors and provide actionable insights for ML practitioners and mathematicians.
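To make the per-step rating idea concrete, here is a minimal Python sketch of how such interaction data might be recorded and averaged per model. It is not CheckMate's actual schema or code: the class names, field names, the `summarize` helper, and the 0 to 6 rating range are all assumptions chosen for illustration, since the article does not specify them.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Dict, List

# Assumed rating bounds; the article does not state the scales used.
MIN_RATING, MAX_RATING = 0, 6


@dataclass
class StepRating:
    """One rated model response within an interaction (hypothetical schema)."""
    query: str
    response: str
    correctness: int   # participant's rating of mathematical correctness
    helpfulness: int   # participant's rating of perceived helpfulness

    def __post_init__(self) -> None:
        # Reject ratings outside the assumed scale.
        for name in ("correctness", "helpfulness"):
            value = getattr(self, name)
            if not MIN_RATING <= value <= MAX_RATING:
                raise ValueError(f"{name} must be in [{MIN_RATING}, {MAX_RATING}]")


@dataclass
class Interaction:
    """A multistep session between one participant and one model."""
    model: str
    problem: str
    steps: List[StepRating] = field(default_factory=list)


def summarize(interactions: List[Interaction]) -> Dict[str, Dict[str, float]]:
    """Return mean correctness and helpfulness per model over all rated steps."""
    steps_by_model: Dict[str, List[StepRating]] = {}
    for interaction in interactions:
        for step in interaction.steps:
            steps_by_model.setdefault(interaction.model, []).append(step)
    return {
        model: {
            "correctness": mean(s.correctness for s in steps),
            "helpfulness": mean(s.helpfulness for s in steps),
        }
        for model, steps in steps_by_model.items()
    }
```

Keeping correctness and helpfulness as separate fields reflects the article's point that the platform captures both; a response can be rated helpful without being fully correct, and a single combined score would hide that.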

In conclusion, the study addresses a gap in evaluating how LLMs respond in interactions with humans by introducing CheckMate. The interactive evaluation platform enables real-time assessment of LLM performance in problem-solving tasks. By incorporating human feedback and interaction, CheckMate offers a more comprehensive understanding of LLM capabilities, particularly in domains like mathematics. The proposed method highlights the importance of dynamic evaluation and the need for collaboration between ML practitioners and domain experts. CheckMate’s approach can inform the development and deployment of LLMs as problem-solving assistants, emphasizing the importance of calibrated uncertainty communication, reasoning, and conciseness in model responses.

The post CheckMate: An Adaptable AI Platform for Evaluating Language Models by Their Interactions with Human Users appeared first on MarkTechPost.