The development of large language models (LLMs) has been a focal point in advancing NLP capabilities. However, training these models poses substantial challenges due to the immense computational resources and costs involved. Researchers continuously explore more efficient methods to manage these demands while maintaining high performance.

A critical issue in LLM development is the extensive resources needed for training dense models. Dense models activate all parameters for each input token, leading to significant inefficiencies. This approach makes it difficult to scale up without incurring prohibitive costs. Consequently, there is a pressing need for more resource-efficient training methods that can still deliver competitive performance. The primary goal is to balance computational feasibility and the ability to handle complex NLP tasks effectively.

Traditionally, LLM training has relied on dense, resource-intensive models despite their high performance. These models require the activation of every parameter for each token, leading to a substantial computational load. Sparse models, such as Mixture-of-Experts (MoE), have emerged as a promising alternative. MoE models distribute computational tasks across several specialized sub-models or “experts.†This approach can match or surpass dense models’ performance using a fraction of the resources. The efficiency of MoE models lies in their ability to selectively activate only a subset of the experts for each token, thus optimizing resource usage.

The Skywork Team, Kunlun Inc. research team introduced Skywork-MoE, a high-performance MoE large language model with 146 billion parameters and 16 experts. This model builds on the foundational architecture of their previously developed Skywork-13B model, utilizing its dense checkpoints as the initial setup. The Skywork-MoE incorporates two novel training techniques: gating logit normalization and adaptive auxiliary loss coefficients. These innovations are designed to enhance the model’s efficiency and performance. By leveraging dense checkpoints, the model benefits from pre-existing data, which aids in the initial setup and subsequent training phases.

Skywork-MoE was trained using dense checkpoints from the Skywork-13B model, initialized from dense models pre-trained for 3.2 trillion tokens, and further trained on an additional 2 trillion tokens. The gating logit normalization technique ensures a distinct gate output distribution, which enhances export diversification. This method involves normalizing the gating layer outputs before applying the softmax function, which helps achieve a sharper and more focused distribution. The adaptive auxiliary loss coefficients allow for layer-specific adjustment, maintaining a balanced load across experts and preventing any single expert from becoming overloaded. These adjustments are based on monitoring the token drop rate and adapting the coefficients accordingly.

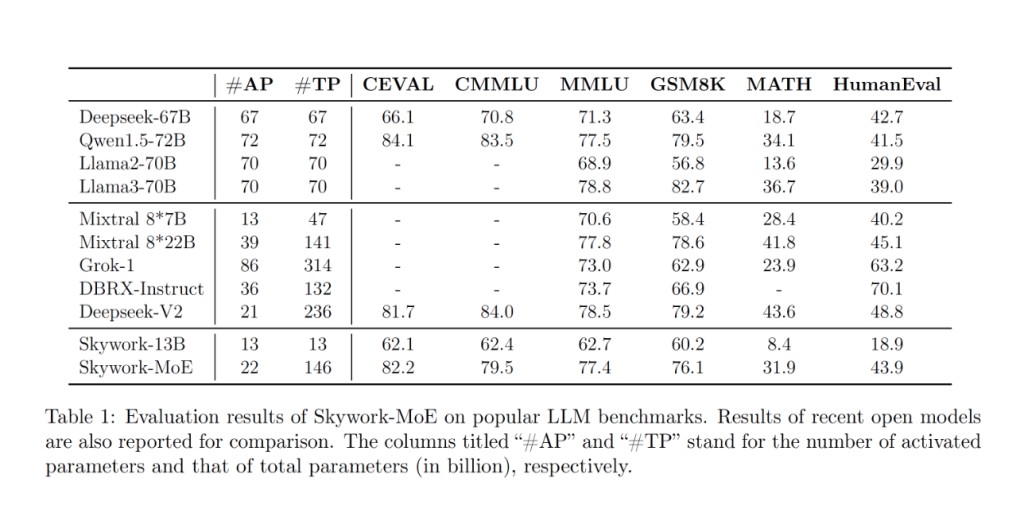

The performance of Skywork-MoE was evaluated across a variety of benchmarks. The model scored 82.2 on the CEVAL benchmark and 79.5 on the CMMLU benchmark, surpassing the Deepseek-67B model. The MMLU benchmark scored 77.4, which is competitive compared to higher-capacity models like Qwen1.5-72B. For mathematical reasoning tasks, Skywork-MoE scored 76.1 on GSM8K and 31.9 on MATH, comfortably outperforming models like Llama2-70B and Mixtral 8*7B. Skywork-MoE demonstrated robust performance in code synthesis tasks with a score of 43.9 on the HumanEval benchmark, exceeding all dense models in the comparison and slightly trailing behind the Deepseek-V2 model. These results highlight the model’s ability to effectively handle complex quantitative and logical reasoning tasks.

In conclusion, the research team from the Skywork team successfully addressed the issue of resource-intensive LLM training by developing Skywork-MoE, which leverages innovative techniques to enhance performance while reducing computational demands. Skywork-MoE, with its 146 billion parameters and advanced training methodologies, stands as a significant advancement in the field of NLP. The model’s strong performance across various benchmarks underscores the effectiveness of the gating logit normalization and adaptive auxiliary loss coefficients techniques. This research competes well with existing models and sets a new benchmark for the efficiency and efficacy of MoE models in large-scale language processing tasks.

The post Skywork Team Introduces Skywork-MoE: A High-Performance Mixture-of-Experts (MoE) Model with 146B Parameters, 16 Experts, and 22B Activated Parameters appeared first on MarkTechPost.

Source: Read MoreÂ