Sparse neural networks aim to improve computational efficiency by reducing the number of active (nonzero) weights in a model. This matters because the computational cost of training and inference in deep learning keeps rising. By dispensing with dense connections, sparse networks can deliver strong performance while using fewer computational resources and less energy.

The main problem this research addresses is how to train sparse neural networks more effectively. Sparse models suffer from impaired signal propagation because a large fraction of their weights is set to zero. This complicates training and makes it difficult to reach performance comparable to dense models. Moreover, tuning hyperparameters for sparse models is costly and time-consuming because the hyperparameters that are optimal for dense networks are not optimal for sparse ones. This mismatch leads to inefficient training and increased computational overhead.
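The signal-propagation problem can be seen in a small simulation. The following is a minimal sketch, not code from the paper: it assumes a single linear layer with a random unstructured mask, unit-variance Gaussian inputs, and a typical dense initialization with standard deviation 1/sqrt(fan_in). The function name `preactivation_std` and all sizes are hypothetical choices for illustration.

```python
import math
import random

def preactivation_std(fan_in, fan_out, density, weight_std, seed=0):
    """Root-mean-square of y = (W * mask) @ x for one random input x."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(fan_in)]
    sum_sq = 0.0
    for _ in range(fan_out):
        y = 0.0
        for xi in x:
            # Each weight is kept (nonzero) with probability `density`.
            if rng.random() < density:
                y += rng.gauss(0.0, weight_std) * xi
        sum_sq += y * y
    return math.sqrt(sum_sq / fan_out)

fan_in, fan_out = 512, 512
dense_std = 1.0 / math.sqrt(fan_in)  # initialization tuned for a dense layer

# Reuse the dense initialization at decreasing densities.
stds = {
    density: preactivation_std(fan_in, fan_out, density, dense_std)
    for density in (1.0, 0.25, 0.0625)
}
for density, value in stds.items():
    print(f"density={density:<7} pre-activation scale={value:.3f}")
```

Under these assumptions the output scale shrinks roughly with the square root of the density, so a layer that keeps 1/16 of its weights passes on about a quarter of the signal magnitude when the dense initialization is reused unchanged.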

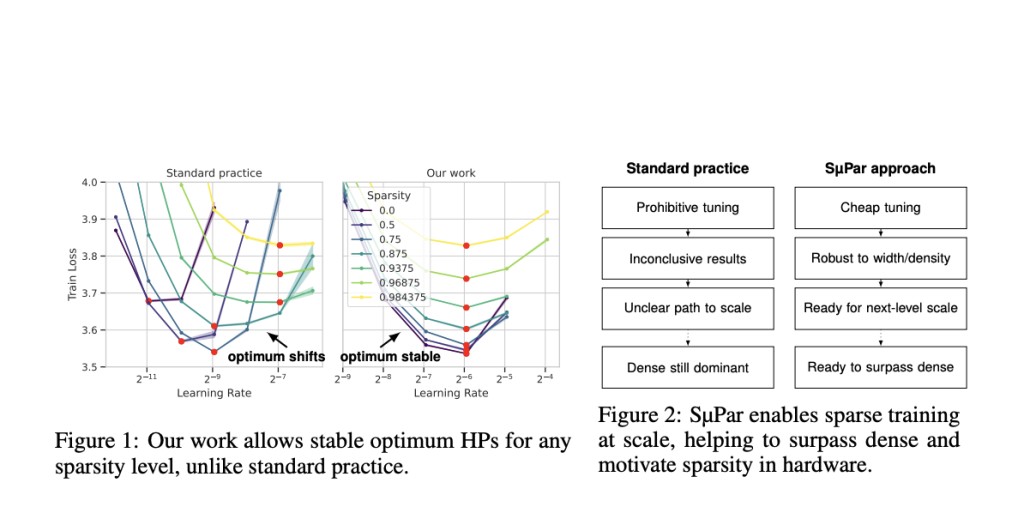

Existing methods for sparse neural network training often reuse hyperparameters optimized for dense networks, which works poorly because sparse networks have different optimal hyperparameters. Introducing new, sparsity-specific hyperparameters further complicates tuning. The resulting tuning costs can become prohibitive, undermining the primary goal of reducing computation. In addition, the lack of established training recipes for sparse models makes it difficult to train them effectively at scale.

Researchers at Cerebras Systems have introduced a novel approach called sparse maximal update parameterization (SμPar). This method aims to stabilize the training dynamics of sparse neural networks by ensuring that activations, gradients, and weight updates scale independently of sparsity levels. SμPar reparameterizes hyperparameters, enabling the same values to be optimal across varying sparsity levels and model widths. This approach significantly reduces tuning costs by allowing hyperparameters tuned on small dense models to be effectively transferred to large sparse models.

SμPar adjusts weight initialization and learning rates to maintain stable training dynamics across different sparsity levels and model widths. It ensures that the scales of activations, gradients, and weight updates are controlled, avoiding issues like exploding or vanishing signals. This method allows hyperparameters to remain optimal regardless of sparsity and model width changes, facilitating efficient and scalable training of sparse neural networks.
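To make the idea concrete, here is a minimal sketch of sparsity-aware scaling. It is an illustration under stated assumptions, not the authors' implementation: it assumes that hidden-layer initialization variance and learning rate (for an Adam-style optimizer) are both divided by the product of a width multiplier and a density multiplier, which is our reading of the approach; the paper is the authoritative source for the exact rules. The helper names, base values, and layer sizes are hypothetical.

```python
import math
import random

def scaled_hyperparams(base_std, base_lr, width_mult, density_mult):
    """Assumed rule: init variance and learning rate scale as 1 / (width_mult * density_mult)."""
    scale = width_mult * density_mult
    return base_std / math.sqrt(scale), base_lr / scale

def masked_preactivation_std(fan_in, fan_out, density, weight_std, seed=0):
    """Root-mean-square output of a randomly masked linear layer on one Gaussian input."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(fan_in)]
    sum_sq = 0.0
    for _ in range(fan_out):
        # Keep each weight with probability `density`; dropped weights contribute zero.
        y = sum(rng.gauss(0.0, weight_std) * xi for xi in x if rng.random() < density)
        sum_sq += y * y
    return math.sqrt(sum_sq / fan_out)

# Hypothetical small dense proxy model on which hyperparameters were tuned.
base_width, base_density = 128, 1.0
base_std = 1.0 / math.sqrt(base_width)
base_lr = 1e-2

results = {}
for width, density in [(128, 1.0), (128, 0.25), (512, 0.25)]:
    std, lr = scaled_hyperparams(
        base_std, base_lr, width / base_width, density / base_density
    )
    out_scale = masked_preactivation_std(width, 256, density, std)
    results[(width, density)] = (std, lr, out_scale)
    print(f"width={width:<4} density={density:<5} init_std={std:.4f} "
          f"lr={lr:.4f} pre-activation scale={out_scale:.3f}")
```

In this sketch the pre-activation scale stays near 1 for every configuration, whereas reusing the base initialization at lower density would shrink it. Note that a layer four times wider at one quarter density ends up with the same initialization and learning rate as the dense base layer, since each unit still has the same expected number of nonzero incoming weights.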

SμPar has been shown to outperform standard practice. In large-scale language modeling, it improved training loss by up to 8.2% compared with the common approach of training sparse models with the dense model's standard parameterization. The improvement held across sparsity levels, with SμPar forming the Pareto frontier for loss, which indicates its robustness and efficiency. According to the Chinchilla scaling law, these improvements translate to 4.1× and 1.5× gains in compute efficiency. Such results highlight the effectiveness of SμPar in enhancing the performance and efficiency of sparse neural networks.

In conclusion, the research addresses the inefficiencies in current sparse training methods and introduces SμPar as a comprehensive solution. By stabilizing training dynamics and reducing hyperparameter tuning costs, SμPar enables more efficient and scalable training of sparse neural networks. This advancement holds promise for improving the computational efficiency of deep learning models and accelerating the adoption of sparsity in hardware design. The novel approach of reparameterizing hyperparameters to ensure stability across varying sparsity levels and model widths marks a significant step forward in neural network optimization.

The post Sparse Maximal Update Parameterization (SμPar): Optimizing Sparse Neural Networks for Superior Training Dynamics and Efficiency appeared first on MarkTechPost.
