In Part 1 of this series, we demonstrated how to configure interval partitioning in an Amazon Aurora PostgreSQL-Compatible Edition database using PostgreSQL extensions such as pg_partman and pg_cron. The monitoring job was external to the database, thereby allowing a centralized monitoring solution. In this post, we demonstrate how you can monitor and send alerts using PostgreSQL extensions like pg_cron, aws_lambda, and services like AWS Lambda and Amazon Simple Notification Service (Amazon SNS), thereby controlling the monitoring solution from within the database.

Solution overview

We showcase the monitoring of a PostgreSQL partition maintenance job using pg_cron, and then use Lambda and Amazon SNS to send notifications when there are errors.

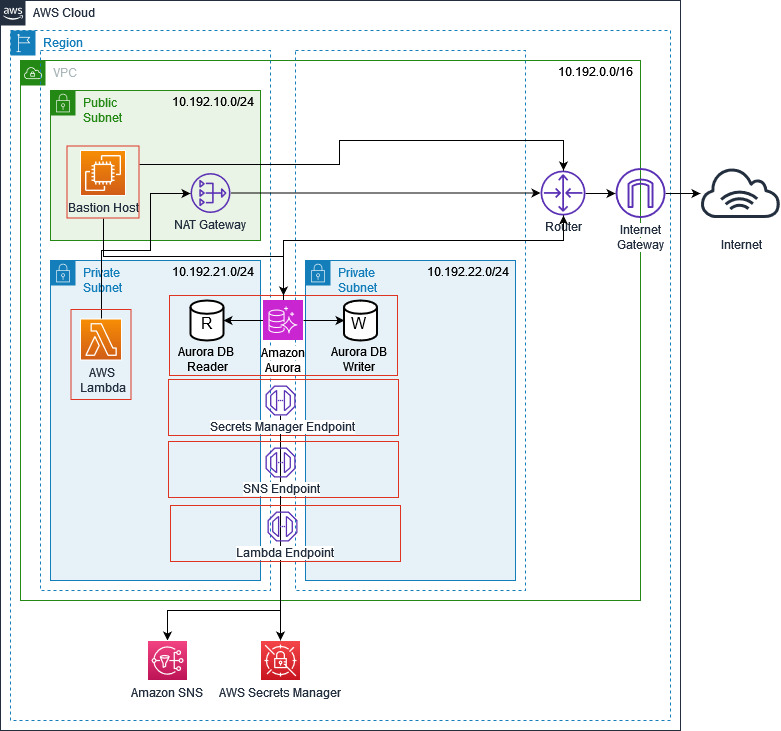

The following diagram illustrates the architecture used in this post.

The architecture consists of the following components:

An Aurora PostgreSQL database with aws_lambda, pg_cron, and pg_partman extensions. We use pg_cron to schedule the pg_partman function that performs the partition maintenance operations of adding new partitions, and we use the aws_lambda extension to invoke the Lambda function.

The Lambda function is invoked by a monitoring job scheduled in the PostgreSQL database using pg_cron that checks the status of the partition maintenance job. The job verifies the cron.job_run_details table for any job failures and invokes the Lambda function to send notifications to the SNS topic.

We use AWS Identity and Access Management (IAM) to provide necessary permission to Lambda so that it can access different AWS services.

We use Amazon SNS to send alerts when failures occur in the partition maintenance job running in the PostgreSQL database.

In the following sections, we walk you through the steps to set up aws_lambda and deploy the necessary resources using AWS Serverless Application Model (AWS SAM).

Prerequisites

To implement this solution, you must have an Aurora PostgreSQL instance with the latest minor version available for 12 and above, or an Amazon Relational Database Service (Amazon RDS) for PostgreSQL instance with the latest minor version available for 12 and above.

Furthermore, set up pg_partman and pg_cron as described in Part 1.

When creating partitions using pg_partman.create_parent with pg_partman version 5.0.0 or later, make sure that you use the range value for the p_type argument instead of native. Additionally, for partition intervals, specify interval formats as 1 day, 1 month, 3 months, or 1 year as needed. You need to use a supported interval time value from core PostgreSQL.

You also need an Amazon Elastic Compute Cloud (Amazon EC2) host or bastion server with an IAM role attached with permissions to create an IAM role, the parameter store, Lambda functions, interface VPC endpoints, security groups, Amazon EventBridge rule, and SNS topic.

Set up aws_lambda and the PostgreSQL procedure

The PostgreSQL procedure queries the cron.job_run_details table to check the status of the partition maintenance job. It uses the aws_lambda extension to invoke the Lambda function if the procedure finds any failures in the maintenance job.

Run the following steps to complete the setup:

Connect to your Aurora PostgreSQL DB instance as a user with privileges to create the schema and deploy the objects. The default postgres user is shown in the following example:

Install the aws_lambda extension in the postgres database:

Create a procedure in the postgres database that invokes the Lambda function to send an SNS notification in case of any failures in the partition maintenance job:

AWS SAM

In this section, we describe the AWS services that are deployed using the AWS SAM. To deploy the AWS services mentioned in this post, choose an Amazon EC2 host or bastion server with an IAM role attached with permissions to create an IAM role, Lambda functions, interface VPC endpoint, security groups, and SNS topic, and run Amazon RDS CLI commands.

Log in to the Amazon EC2 host and install the AWS SAM CLI.

Download the source code and deploy by running the following command:

Provide the following parameters:

Stack Name – Name for the AWS CloudFormation stack.

AWS Region – AWS Region where the stack is being deployed.

pNotificationEmail – Email ID that is configured to receive alerts.

pVpc – VPC ID where the Lambda function is deployed.

pPrivateSubnet1 – Private subnet 1 where the Lambda function is deployed.

pPrivateSubnet2 – Private subnet 2 where the Lambda function is deployed.

The following is the sample output when you run sam deploy –guided with the default setting arguments:

In the following sections, we describe the AWS services deployed with AWS SAM.

IAM role

AWS SAM creates an IAM policy with the permissions detailed in the following code. It also attaches an AWS managed IAM policy called AWSLambdaVPCAccessExecutionRole to the IAM role. This is required to create Lambda functions within the VPC.

This creates an IAM role called <rds-lambda-role-name> that grants permission to the PostgreSQL database cluster to invoke the Lambda function. The IAM policy looks like the following code:

Lambda function

AWS SAM also deploys the Lambda function. It uses Python 3.12 and is deployed in the same VPC as the PostgreSQL database. The PostgreSQL function calls the Lambda function, which sends SNS notifications in case of failures.

When failures occur in the partition maintenance job, the function sends notifications like in the following screenshot.

Interface VPC endpoints and security group

AWS SAM creates Amazon SNS and Lambda interface VPC endpoints. Interface VPC endpoints are used to allow communication between resources within a VPC without requiring internet access. This makes sure that the communication is private and secure. These are required to send notifications to the email ID configured in the SNS topic.

It also creates a self-referencing security group that is attached to the Amazon SNS interface endpoint and Lambda, thereby allowing connections between them.

SNS topic

AWS SAM configures an SNS topic with the email ID entered. Amazon SNS sends an email to the ID for verification. Choosing Confirm subscription completes the process and the email ID starts receiving notifications whenever Lambda sends one.

After the email ID is confirmed, the SNS topic looks like the following screenshot.

Configure the PostgreSQL cluster

Attach the IAM role <rds-lambda-role-name> (which you created during the AWS SAM deployment) to the PostgreSQL database cluster using the AWS Command Line Interface (AWS CLI) command:

Amazon Relational Database Service (Amazon RDS) sends the SNS notification in case of partition maintenance job failures using the Lambda function. Attach the security group to the RDS cluster to allow connectivity between the RDS instance and the Lambda function:

Configure the monitoring job

Configure a monitoring job that checks the status of the partition maintenance job and invokes the Lambda function to send an SNS notification in case of any failures. To do so, log in to the postgres database in the Aurora cluster and run the following command after replacing the highlighted section (the monitoring job is scheduled at 1:15 AM, which is after the partition maintenance job scheduled at 1:00 AM, as described in Part 1):

Design Considerations

As of this writing, Lambda has the capability to identify failures occurring when the partition maintenance job, managed by pg_cron within Amazon Aurora PostgreSQL, encounters any issues. However, if the scheduling frequency of pg_cron is low, there’s a likelihood of data being inserted into the default partition until the maintenance job executes and confronts a problem. In such scenarios, if data has been inadvertently directed to the default partition and the subsequent maintenance job fails due to the presence of this data, it’s typically expected that the data in the default partition would be relocated to the appropriate partitions before retrying the maintenance job.

Test the solution

To test the solution, you can use the provided CloudFormation template vpc_ec2_db.yml from the downloaded repository, which deploys a VPC, EC2 instance, and Aurora PostgreSQL database with the extension and tables provided in this post. It also creates and attaches an IAM role that grants permission to the EC2 instance to create an IAM role, Lambda function, interface VPC endpoints, security group, and SNS topic. As a security best practice, follow the principle of least privilege when granting permissions to the IAM role.

For the full instructions for deploying the template, refer to Creating a stack on the AWS CloudFormation console.

Download the source code from the GitHub repo.

Upload the source code to an Amazon Simple Storage Service (Amazon S3) bucket.

On the CloudFormation console, deploy the template vpc_ec2_db.yml (this file is within the .zip file downloaded from GitHub).

Modify the following parameters:

pEC2KeyPair – The Amazon EC2 key pair in the AWS account.

pEc2InstanceProfile – The EC2 instance profile that has the permission to create AWS services deployed by the CloudFormation template.

pS3CodeLocation – The path of the source code .zip file that is uploaded to the S3 bucket.

pSourceIp – The IP subnet of the system or laptop that is used to connect to Amazon EC2.

After you deploy the template, log in to Amazon EC2 using the key pair and run the following command to deploy the Lambda function:

Follow the steps mentioned earlier to configure the Aurora PostgreSQL cluster and schedule the monitoring job.

Clean up

To clean up the resources used in this post, complete the following steps:

Remove the security group and IAM role attached to the Aurora PostgreSQL cluster during setup:

Delete the CloudFormation stack:

Confirm that the stack has been deleted:

If you created the VPC, EC2 instance, and Aurora PostgreSQL database with the template provided in the previous section, delete that CloudFormation stack:

Conclusion

In this post, we showed the process to automate the creation of interval partitioning within an Aurora PostgreSQL and RDS for PostgreSQL database using PostgreSQL extensions like pg_cron and pg_partman. By using PostgreSQL extensions alongside Lambda and Amazon SNS, you can seamlessly integrate event monitoring and notifications, achieving proactive management of database operations and performance. As a best practice, we recommend thoroughly testing this solution in lower environments before deploying it into production.

Leave any thoughts or questions in the comments section.

About the Authors

Bhanu Ganesh Gudivada is a Database Consultant on the AWS Professional Services team at AWS and specializes in database migrations. He helps customers build highly available, cost-effective database solutions and migrate their commercial engines to the AWS Cloud. He is curious to learn and implement new technologies around databases and orchestrate migrations through automation.

Santhosh Kumar Adapa is a Sr. Database Consultant with the AWS Professional Services team at AWS. He works as a database migration specialist to help Amazon customers design and implement scalable, secure, performant, and robust database solutions in the cloud.

Suratwala, Mansi is a Database Consultant working at Amazon Web Services. She closely works with companies from different domains to provide scalable and secure database solutions in the AWS Cloud. She is passionate about collaborating with customers to achieve their cloud adoption goals.

Jeevan Shetty is a Consultant with Verisk. He has been supporting and enabling customers to migrate their database from on-premises data centers to the AWS Cloud and also migrate from commercial database engines to open source databases.

Source: Read More