Amazon Relational Database Service (Amazon RDS) for Db2 makes it easy to set up, operate, and scale Db2 deployments in the cloud. Db2 is an IBM relational database that supports large-scale transactional and analytical workloads. Amazon RDS for Db2 handles time-consuming database administrative tasks, such as hardware provisioning, software patching, and backup management, freeing you to focus on your applications and business.

When you’re migrating a self-managed Db2 database to Amazon RDS for Db2 one of the key requirements is to make sure that the performance is on par with that of their existing environments. This is often accomplished by provisioning the appropriate compute and storage configurations on Amazon RDS for Db2.

Typically, to assess performance, you run load tests using custom scripts or programs on RDS for Db2 databases. However, these tests often overlook certain real-world scenarios encountered in live production environments. For example, they might repeatedly test with just 100 customers from a table containing 1 billion rows. This post delves into capturing real workloads from the source DB2 LUW database and replaying them on Amazon RDS for DB2, providing appropriate sizing to meet performance requirements.

Solution overview

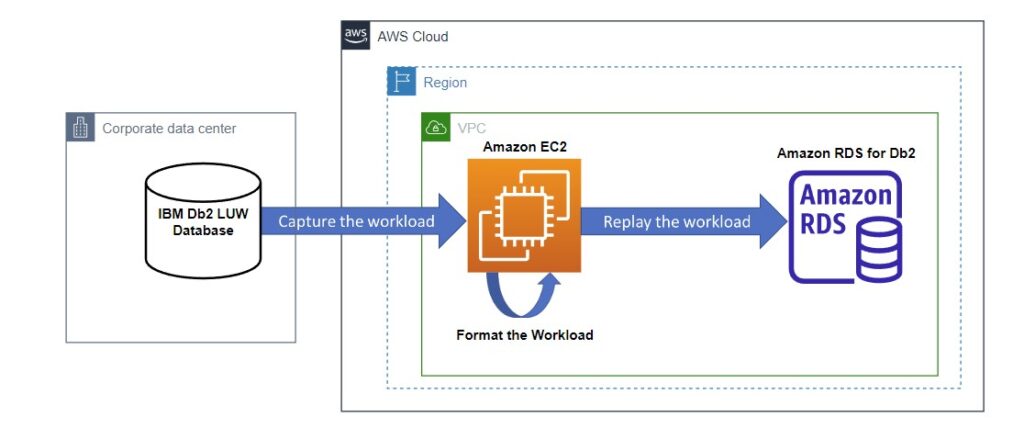

The following diagram illustrates the solution architecture.

The subsequent steps illustrate the process of capturing and replaying a production workload:

The first step is to capture a Db2 LUW production workload from the source system as described by IBM’s tutorial on Db2 workload management. This workload will be used to stress test the configuration of the target RDS for Db2 database.

In the second step, the workload consisting of SQL statements and the corresponding predicate values will be formatted via custom Python code. This is required as the output of the Db2 workload management capture is not compatible with IBM’s db2batch benchmarking tool.

After the SQL statements have been formatted, they will be replayed on the target Amazon RDS for Db2 database using IBM’s db2batch benchmarking tool.

Finally, this workload can also be used to stress test the RDS for Db2 database by scaling up the workload. This will simulate peaks in the source system’s workload caused by business cycles.

Prerequisites

You need to set up the following as prerequisites:

A self-managed Db2 LUW instance, either on premises or Amazon Elastic Compute Cloud (Amazon EC2)

An Amazon RDS for Db2 database instance, containing a database with pre-created objects and populated data.

An Amazon Linux EC2 instance with a Db2 client installed that can access the RDS for Db2 instance.

A database user account on both the source (self-managed Db2 database) and target (RDS for Db2 database) with admin privileges to perform activities like create tables, tablespaces, and event monitors

Capture the Db2 LUW workload

When migrating from self-managed Db2 databases to Amazon RDS for Db2, it is recommended to capture the workload from the source database to encompass all real-time scenarios to replay on Amazon RDS for Db2. To capture the workload, you can use Db2 workload management. Db2 workload management assists in efficiently managing SQL queries within the operational environment. Incoming SQL queries are defined into different workloads with an application name, connection ID, client address, and so on. A service class defines an environment in which work can run, and each workload is assigned to a service class. A default user workload (SYSDEFAULTUSERWORKLOAD) and a default user service class (SYSDEFAULTUSERCLASS) are always created for each database. You can use these default objects to take advantage of the Db2 workload management monitoring features without having to create any user-defined workloads or service classes. If no user-defined workloads and service classes are created, all user activities are associated with these default objects. Service classes allow you to monitor statistics and activities such as statement text, values for host variables, and more.

In this section, we capture the workload in the default user service class, which means capturing all queries running in the source database. This procedure may place additional strain on the database. To reduce the performance impact, you can employ the following standard practices:

Sampling – Collect data for a short period of time and stop. For example, turn on the capturing for 1 minute and turn it off for 9 minutes, then repeat the process.

Targeting a subset of activities – Define the workload into different services, and collect one service class at a time. The following is the sample DDL to create service classes and workloads on the source(self-managed) database:

Prepare to capture the workload

Run the following SQL statements in the source database to create a tablespace called TS_STMT and to gather workload information. Additionally, we need to create an event monitor named WLM_EVMON for capturing database activities, SQL statements, and host variables, and saving the results into separate tables.

Capture the workload

Run the following SQL statements in the source database to configure a service class to collect detailed activity data and activate the event monitor to gather the database workload information:

Stop capturing workload

Run the following SQL statements in the source database to disable workload capture immediately after capturing the workload is completed to avoid unintended database slowdown:

Extract data from the captured workload

The following statement joins the activity, statement, and host variable monitoring tables, and exports the result into a CSV file. Since not all SQL queries have host variables, LEFT OUTER JOIN is implemented. We use the character ^ as a delimiter (for example, modified by Codel^) in the export script. We use #$ to indicate the end of each line to assist the formatting script, which we will run later.

Format the Db2 LUW workload

After capturing the workload, the next step is to format the output to feed into db2batch for replaying the workload in the RDS for Db2 database. db2batch is a benchmarking tool that reads SQL and XQuery statements from either a flat file or standard input, dynamically prepares and describes the statements, and returns an answer set. Literals for the host variables are set in a parameter file. This is crucial because within a relational database management system such as Amazon RDS for Db2, using host variables instead of literals can result in different access paths for the same SQL statements. For example, if you want to select residents who are older than a certain age, the access paths and run costs of these three queries can be very different, as demonstrated below.

The following SQL statement with host variables determines how many rows will qualify:

In the following SQL statement with literals, very few rows will qualify as an age greater than 100 is uncommon:

In the following SQL statement with literals, most rows will qualify:

Using db2batch with a parameter file makes Db2 compile the SQL statement’s access path with host variables as most applications do, while providing literals to run the query.

The following is an example of a SQL statement file:

In the parameters file (parm.txt), parameters for one SQL statement are on a single line, separated by a space. If the SQL statement has no host variable, an empty line is used. The parameter file for the preceding three SQL statements contains three lines, with the second line empty because the second query has no host variables.

Automate the formatting of large captured workloads

As described in the previous step, the db2batch benchmark tool needs a SQL statement and parameter file. The challenge is that the data exported from the extraction step could contain thousands of SQL statements and the literals for their respective host variables.

For example, the SQL statement

is captured and exported as two lines in the CSV file:

A typical captured workload could contain many SQL statements. To automate the separation of the SQL statement and the host variables, we have created a Python program to generate the files required by db2batch. You can run this Python code on a Linux EC2 instance. The complete source code is available in the GitHub repo. This Python script processes SQL statements and their associated parameters from a raw data file. You can find more details of this script in README.md.

The input file sql-raw-data.txt contains data related to the captured workload.

Run the script using the following command with Python installed on it, For example, on EC2:

The successful run of the script will yield the following output.

The Python script generates two output files:

./sql-statement.txt – Contains the parsed SQL statements

./sql-parms.txt – Contains the corresponding parameters for each SQL statement

This data was transformed by the script. The first SQL statement does not contain any variables, which corresponds to an empty line in the sql-parms.txt file:

The second SQL statement from the input file has two lines. The first line contains the value of the first host variable, salary, and the second line contains the value of the second host variable, bonus. The script reduced these two lines to one SQL statement and placed the host variable values in the sql-parms.txt file:

Replay the Db2 LUW workload in Amazon RDS for Db2

In this step, we replay the workload we captured to assess the performance of Amazon RDS for Db2.

To replay the workload, you first need to set up the runtime environment. Go to the Amazon RDS console, navigate to your RDS for Db2 database and retrieve the endpoint and port number.

Catalog the RDS database on the EC2 instance where the Db2 client installed:

Now you can use db2batch to run the SQL statements with parameters. In the following command, we use db2batch to connect to the RDS for Db2 database (benck) remotely, and run the SQL statement in sql-statement.txt, along with literals for the host variable from sql-parms.txt:

The file sql-statement.out contains the output and runtime for each SQL statement. Due to its size, we display only the latter part of the code in the following screenshot.

Scale up the workload in RDS for Db2

You can also scale up the workload in Amazon RDS for Db2 to simulate what-if scenarios using db2batch with the following methods.

Run queries repeatedly by specifying a block number

The following example groups two SELECT queries together as a block, and runs the queries three times sequentially. We use -g on to group the performance information for each SQL run. If you want individual SQL statement performance information, you can use the -g off option.

The modified sql-statement.txt file is as follows:

The modified sql-parm.txt file contains different literals for each run:

The output shows the total time, the maximum and minimum time for each run, and the arithmetic mean and geometric mean times for the cycle.

Run multiple workloads in parallel

This script runs db2batch in the background, and starts the next run without waiting for the previous one to complete. In the following example, it runs the sql-statement.txt file 10 times simultaneously:

During the workload replay in Amazon RDS for Db2, you can gather performance metrics such as CPU usage, memory utilization, and IOPS from Amazon CloudWatch. You can then use these metrics to optimize the sizing of your RDS for Db2 database, making sure it meets performance criteria effectively. For more information, refer to Monitoring Amazon RDS metrics with Amazon CloudWatch.

Clean up

When you’re done testing this solution, delete any RDS instances and EC2 instances that you created.

Conclusion

In this post, we showcased the process of capturing workload data from a self-managed Db2 LUW database, formatting the output, and then replaying it in Amazon RDS for Db2. This method assists in accurately provisioning the appropriate size of RDS for Db2 instance, considering both compute and storage resources. Additionally, we provided examples of scaling up the captured workload for stress testing, making sure that Amazon RDS for Db2 can effectively manage the additional workload demands.

About the Authors

Feng Cai is a Database Consultant at AWS. He has a wide background in providing database services to different clients. Feng is currently a member of the AWS Global Competency Center, providing homogeneous and heterogeneous database migration support to customers.

Dean Capps is a Database Consultant at AWS and has experience providing database support on legacy, open systems, and cloud platforms. Dean is currently a member of the AWS Global Competency Center, providing homogeneous and heterogeneous database migration support to commercial and public sector clients.

Javeed Mohammed is a Database Specialist Solutions Architect with Amazon Web Services. He works with the Amazon RDS team, focusing on commercial database engines like Oracle and Db2. He enjoys working with customers to help design, deploy, and optimize relational database workloads on the AWS Cloud.

Source: Read More