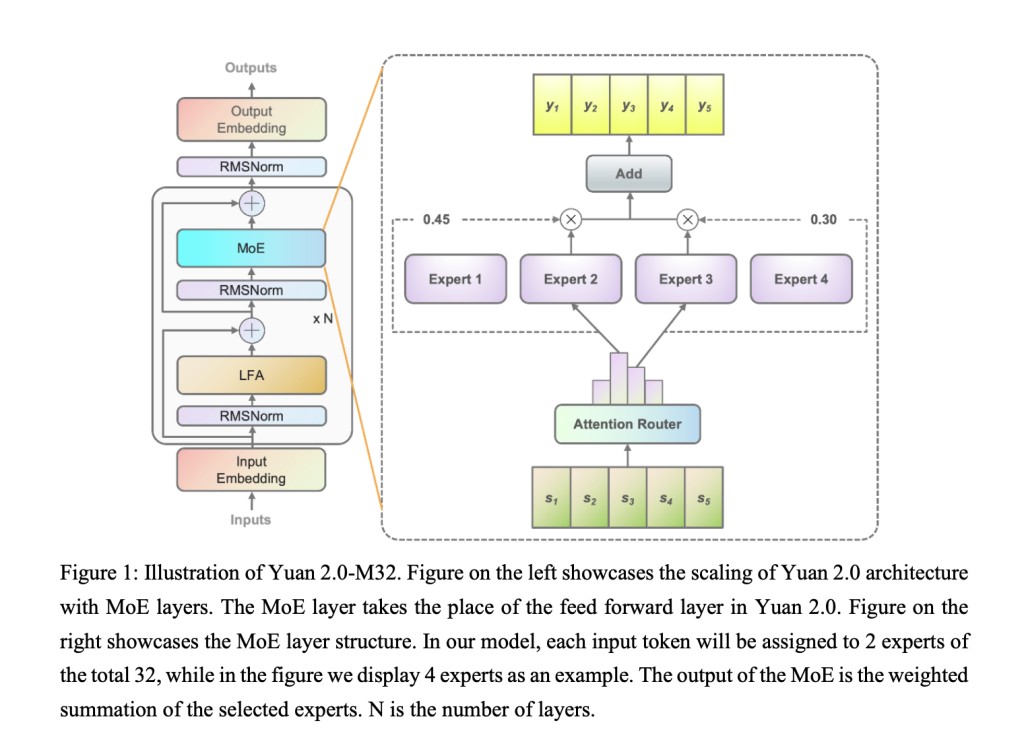

Researchers at IEIT Systems have developed Yuan 2.0-M32, a language model built on the Mixture of Experts (MoE) architecture. It shares its base design with Yuan-2.0 2B but uses 32 experts. Only two of these experts are active for any given token, which keeps the model's computation efficient.
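To make the "two of 32 experts" idea concrete, here is a minimal sketch of top-2 sparse routing in plain Python. It is not the Yuan 2.0-M32 implementation: the toy experts, the random router scores, and the choice to renormalize the two selected weights are all assumptions made for illustration. Only the expert count and the number of active experts come from the article.

```python
import math
import random

NUM_EXPERTS = 32  # from the article
TOP_K = 2         # experts active per token, from the article


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k=TOP_K):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Renormalizing the selected weights so they sum to 1 is an assumption.
    """
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


def moe_forward(token, experts, router_logits):
    """Mix the outputs of the selected experts; the others are never called."""
    out = [0.0] * len(token)
    for idx, weight in top_k_route(router_logits):
        expert_out = experts[idx](token)
        out = [o + weight * v for o, v in zip(out, expert_out)]
    return out


# Hypothetical toy experts: expert i simply scales its input by (i + 1).
experts = [lambda x, s=i + 1: [s * v for v in x] for i in range(NUM_EXPERTS)]

rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]  # stand-in router scores

routes = top_k_route(logits)
output = moe_forward([1.0, 2.0], experts, logits)
```

Because only the two selected experts run, the per-token cost depends on the active experts rather than on all 32.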

Instead of a conventional router network, the model introduces an Attention Router network that improves expert selection and overall accuracy. Yuan 2.0-M32 was trained from scratch on 2000 billion tokens. Even with this much data, training consumed only 9.25% of the computation required by a dense model of similar parameter scale.

Yuan 2.0-M32 performs strongly in several areas, including mathematics and coding. It uses just 3.7 billion active parameters out of a total of 40 billion, and 7.4 GFLOPs of forward computation per token. Both figures are only about 1/19 of what the Llama3-70B model requires.
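The figures above can be sanity-checked with a few lines of arithmetic. The sketch below assumes the common rule of thumb of roughly 2 forward FLOPs per active parameter per token, which the article does not state, and treats Llama3-70B as having 70 billion parameters.

```python
ACTIVE_PARAMS = 3.7e9   # active parameters, from the article
TOTAL_PARAMS = 40e9     # total parameters, from the article
LLAMA3_PARAMS = 70e9    # assumed from the model name "Llama3-70B"
FLOPS_PER_PARAM = 2     # assumption: one multiply and one add per weight

# Estimated forward computation per token, in FLOPs.
forward_flops = FLOPS_PER_PARAM * ACTIVE_PARAMS

# How many times larger Llama3-70B is than the active parameter set.
param_ratio = LLAMA3_PARAMS / ACTIVE_PARAMS

print(f"forward FLOPs per token: {forward_flops / 1e9:.1f} GFLOPs")
print(f"Llama3-70B / active parameters: {param_ratio:.1f}x")
```

Under these assumptions the estimate lands on the quoted 7.4 GFLOPs, and the parameter ratio comes out close to 19.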

On benchmarks, Yuan 2.0-M32 surpasses Llama3-70B on MATH and ARC-Challenge, scoring 55.89 and 95.8 respectively, despite its smaller active parameter set and smaller computational footprint.

The Attention Router is a key contribution of Yuan 2.0-M32. This routing mechanism selects the experts most relevant to each token, which improves the model's accuracy. Compared with traditional routing techniques, it points to the potential for better accuracy and efficiency in MoE models.
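The article does not describe the router's internals, so the following is only one plausible way to sketch an attention-style router, not the authors' implementation. It assumes the token vector is projected into three length-N vectors (one entry per expert), that attention over those entries mixes the per-expert scores, and that the top two scores pick the experts. The names `attention_router`, `Wq`, `Wk`, and `Wv` are hypothetical.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def attention_router(x, Wq, Wk, Wv, k=2):
    """Score experts with attention across expert slots, then keep the top k.

    q, key and v each hold one value per expert. Row i of softmax(q key^T)
    weights every expert's value, so expert i's score depends on the others.
    """
    q, key, v = matvec(Wq, x), matvec(Wk, x), matvec(Wv, x)
    scores = []
    for qi in q:
        attn = softmax([qi * kj for kj in key])
        scores.append(sum(a * vj for a, vj in zip(attn, v)))
    top = sorted(range(len(scores)), key=scores.__getitem__, reverse=True)[:k]
    return top, scores


NUM_EXPERTS, DIM = 32, 8  # 32 experts from the article; DIM is a toy size
rng = random.Random(0)


def rand_matrix():
    return [[rng.gauss(0.0, 0.5) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]


token = [rng.gauss(0.0, 1.0) for _ in range(DIM)]
top, scores = attention_router(token, rand_matrix(), rand_matrix(), rand_matrix())
```

A conventional router would instead compute a single projection of the token and score each expert independently. The attention step is what lets one expert's score depend on the others in this sketch.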

The team has summarized their primary contributions as follows.

The team has proposed the Attention Router, which takes the correlation between experts into account. Compared with conventional routing techniques, this method yields a notable gain in accuracy.

The team has developed and released the Yuan 2.0-M32 model, which has 40 billion total parameters, 3.7 billion of which are active. The model uses a structure of 32 experts, with only two experts active for each token.

Training Yuan 2.0-M32 is highly efficient, requiring only 1/16 of the computation needed for a dense model with a comparable number of parameters. The computing cost at inference is comparable to that of a dense model with 3.7 billion parameters. This keeps the model efficient and cost-effective both during training and in real-world use.
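The article quotes two different training-cost figures, 9.25% and 1/16, without saying how either was derived. A quick check shows that each coincides with a simple ratio taken from the model's stated sizes. This is only an observation about the numbers, not a claim about the authors' method.

```python
ACTIVE_PARAMS = 3.7e9   # from the article
TOTAL_PARAMS = 40e9     # from the article
ACTIVE_EXPERTS = 2      # from the article
NUM_EXPERTS = 32        # from the article

# Share of all parameters that are active for a token.
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS

# Share of experts that are active for a token.
expert_fraction = ACTIVE_EXPERTS / NUM_EXPERTS

print(f"active parameter share: {active_fraction:.2%}")
print(f"active expert share:    {expert_fraction:.2%} (1/{NUM_EXPERTS // ACTIVE_EXPERTS})")
```

The active parameter share matches the 9.25% figure, while the active expert share equals 1/16, or 6.25%.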

All credit for this research goes to the researchers of this project.

The post IEIT SYSTEMS Releases Yuan 2.0-M32: A Bilingual Mixture of Experts MoE Language Model based on Yuan 2.0. Attention Router appeared first on MarkTechPost.
