The advent of deep neural networks (DNNs) has led to remarkable improvements in controlling artificial agents through optimization with reinforcement learning or evolutionary algorithms. However, most neural networks are structurally rigid: their architectures are bound to specific input and output spaces. This inflexibility is the main obstacle to optimizing a single neural network across domains with different input and output dimensions. The main challenge in achieving this flexibility is the Symmetry Dilemma: sharing parameters across units makes a network flexible but induces symmetry among those units, so the benefits of parameter sharing must be balanced against the need for units to develop distinct representations.
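
To make the dilemma concrete, here is a toy illustration (not the SFNN itself). Units that share one hypothetical parameter set `(w, b)` can be reused in a layer of any size, which is the flexibility; but when they receive identical inputs they compute identical outputs, which is the symmetry. Only differences in what each unit sees or stores break that symmetry.

```python
import math


def shared_unit(x, w, b):
    """A tanh unit; every unit in the layer uses the same (w, b)."""
    return math.tanh(sum(wi * xi for wi, xi in zip(w, x)) + b)


def layer(inputs_per_unit, w, b):
    # The same shared parameters are applied to however many units there are.
    return [shared_unit(x, w, b) for x in inputs_per_unit]


w, b = [0.5, -0.3], 0.1  # hypothetical shared parameters

# Flexibility: the same (w, b) drive a 3-unit and a 5-unit layer unchanged.
small = layer([[1.0, 2.0]] * 3, w, b)
large = layer([[1.0, 2.0]] * 5, w, b)

# Symmetry: with identical inputs, every unit computes exactly the same value.
print(len(set(small)), len(set(large)))  # 1 1

# Breaking symmetry: distinct per-unit inputs (or states) give distinct outputs.
varied = layer([[1.0, 2.0], [0.0, 1.0], [-1.0, 0.5]], w, b)
print(len(set(varied)))  # 3
```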

Various methods are being explored to overcome the structural rigidity of DNNs. One existing approach, Indirect Encoding, optimizes plasticity mechanisms instead of optimizing weights directly; for example, synaptic weight values can be updated by Gated Recurrent Units (GRUs) with shared parameters. Another existing approach builds on Graph Neural Networks (GNNs), a type of network developed for analyzing data represented as graphs. In networks of this kind, each synapse carries its own hidden state vector, which is updated using local information and a global reward signal, an update scheme that works similarly to a GNN.
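
The idea of a shared plasticity rule can be sketched as one GRU cell whose parameters every synapse reuses, while each synapse keeps its own hidden state. This is a minimal sketch under stated assumptions: the local inputs `[pre, post, reward]`, the hidden size, the omitted biases, and reading the weight from the first hidden-state entry (`weight`) are all hypothetical choices, not the published method.

```python
import math
import random


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def matvec(m, v):
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]


class SharedGRURule:
    """One GRU cell whose parameters are shared by every synapse (hypothetical sizes)."""

    def __init__(self, in_dim, hid_dim, rng):
        def mat(rows, cols):
            return [[rng.gauss(0, 0.5) for _ in range(cols)] for _ in range(rows)]

        self.Wz, self.Uz = mat(hid_dim, in_dim), mat(hid_dim, hid_dim)
        self.Wr, self.Ur = mat(hid_dim, in_dim), mat(hid_dim, hid_dim)
        self.Wh, self.Uh = mat(hid_dim, in_dim), mat(hid_dim, hid_dim)

    def step(self, x, h):
        # Update gate z and reset gate r (biases omitted for brevity).
        z = [sigmoid(a + b) for a, b in zip(matvec(self.Wz, x), matvec(self.Uz, h))]
        r = [sigmoid(a + b) for a, b in zip(matvec(self.Wr, x), matvec(self.Ur, h))]
        rh = [ri * hi for ri, hi in zip(r, h)]
        cand = [math.tanh(a + b) for a, b in zip(matvec(self.Wh, x), matvec(self.Uh, rh))]
        # Interpolate between the old state and the candidate state.
        return [(1 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, cand)]


def update_synapses(rule, states, pre, post, reward):
    """Update every synapse state (i -> j) from local activity and a global reward."""
    new_states = {}
    for (i, j), h in states.items():
        x = [pre[i], post[j], reward]  # local information plus the global signal
        new_states[(i, j)] = rule.step(x, h)
    return new_states


def weight(h):
    # Assumption: the synaptic weight is read out as the first hidden-state entry.
    return h[0]


rng = random.Random(0)
rule = SharedGRURule(in_dim=3, hid_dim=4, rng=rng)
# Two pre- and two post-synaptic neurons; all four synapses share the same `rule`.
states = {(i, j): [rng.gauss(0, 0.1) for _ in range(4)] for i in range(2) for j in range(2)}
states = update_synapses(rule, states, pre=[0.3, -0.8], post=[0.5, 0.1], reward=1.0)
print({k: round(weight(h), 3) for k, h in states.items()})
```

Because only the hidden states differ between synapses, the number of evolved parameters stays the same no matter how many synapses the network has.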

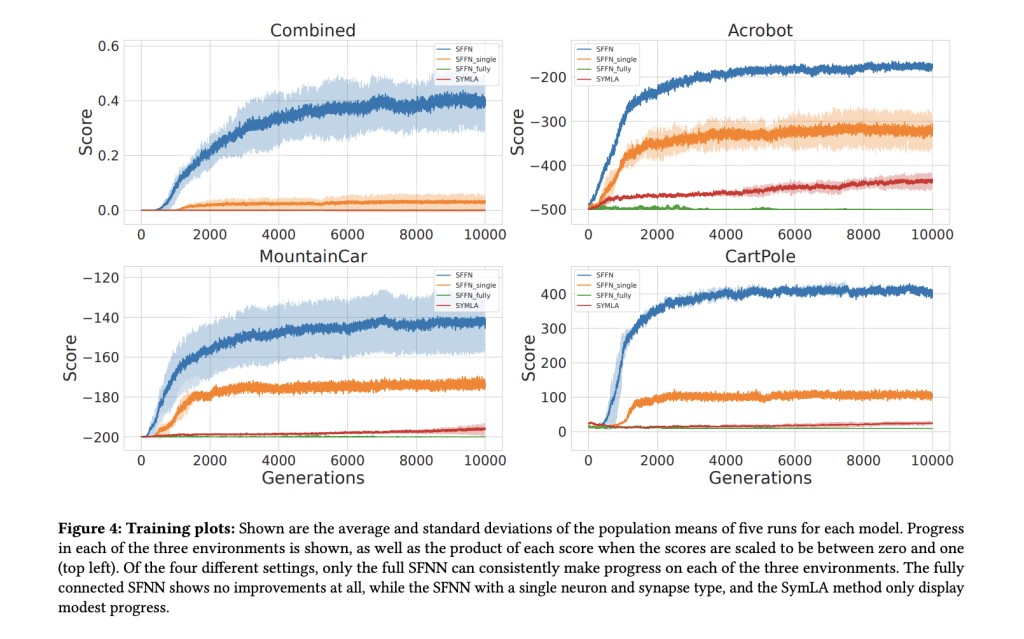

Researchers from the IT University of Copenhagen, Denmark, introduced Structurally Flexible Neural Networks (SFNNs), which consist of connected gated recurrent units (GRUs) acting as synaptic plasticity rules and linear layers acting as neurons. SFNNs address a major challenge, the symmetry dilemma: optimizing units that share parameters while still allowing them to develop different representations during deployment. The proposed method also uses several different sets of parameterized building blocks, which gives it an advantage over previous methods. A single SFNN learns to solve three classic control environments, demonstrating its ability to handle multiple environments simultaneously.
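
The building-block idea can be sketched as a small library of shared neuron and synapse types that are randomly assigned to a sparsely wired network of whatever size an environment needs. Everything here is hypothetical and simplified: `PARAMS`, `build`, `forward`, the two-layer wiring, the connection probability, and the use of static scalar weights in place of plastic GRU synapses.

```python
import math
import random

# Hypothetical shared building blocks: a few neuron types and synapse types.
# Simplification: each synapse type is a fixed scalar weight and each neuron type a
# scalar linear map (scale, bias); in an SFNN the synapses are plastic GRUs instead.
PARAMS = {
    "synapse_types": [0.8, -0.5, 0.3, -1.1],
    "neuron_types": [(1.0, 0.0), (0.5, 0.1), (-0.7, 0.2)],
}


def build(n_in, n_hidden, n_out, params, p_connect, rng):
    """Wire a random sparse network of any size and assign building blocks to it."""
    n_total = n_in + n_hidden + n_out
    neuron_type = [rng.randrange(len(params["neuron_types"])) for _ in range(n_total)]
    hidden = list(range(n_in, n_in + n_hidden))
    outputs = list(range(n_in + n_hidden, n_total))
    synapses = {}  # (source, target) -> synapse type index
    for src, dst in ((range(n_in), hidden), (hidden, outputs)):
        for i in src:
            for j in dst:
                if rng.random() < p_connect:
                    synapses[(i, j)] = rng.randrange(len(params["synapse_types"]))
    return neuron_type, synapses, hidden, outputs


def forward(obs, net, params):
    """Propagate an observation input -> hidden -> output through the wired network."""
    neuron_type, synapses, hidden, outputs = net
    act = {i: x for i, x in enumerate(obs)}  # input neurons hold the observation
    for layer in (hidden, outputs):
        for j in layer:
            total = sum(params["synapse_types"][t] * act[i]
                        for (i, k), t in synapses.items() if k == j)
            scale, bias = params["neuron_types"][neuron_type[j]]
            act[j] = math.tanh(scale * total + bias)
    return [act[j] for j in outputs]


rng = random.Random(42)
results = {}
for n_in, n_out in ((4, 2), (6, 3)):  # hypothetical observation/action sizes
    net = build(n_in, 8, n_out, PARAMS, p_connect=0.5, rng=rng)
    results[(n_in, n_out)] = forward([0.1] * n_in, net, PARAMS)
print(results)
```

The same `PARAMS` drive both networks; only the wiring and the assignment of types change with the environment's dimensions.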

The researchers' experiments show that a single parameter set can solve tasks with different input and output sizes. The plastic synapses and neurons evolve and organize themselves to perform better in the given environment. The underlying idea is to develop a versatile learning algorithm built on structural flexibility, so that the model is no longer tied to specific input and output sizes. The researchers' main aim in this experiment is to develop a set of building blocks that can form a network and adapt quickly across environments, regardless of their dimensionality and of permutations of their inputs and outputs.
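
A quick parameter count shows why shared building blocks remove the dependency on input and output sizes. A conventional fixed-shape MLP needs a different number of weights for every `(n_in, n_out)`, whereas a fixed library of shared blocks does not. The sizes below (16 hidden units, 4 synapse types, 3 neuron types, GRU input size 3, hidden size 4, one bias per gate, neuron types as square linear layers) are illustrative assumptions, not values from the paper; note that PyTorch's `torch.nn.GRU` keeps two bias vectors per gate, which would change the GRU count.

```python
def mlp_param_count(n_in, n_hidden, n_out):
    """Weights plus biases of a conventional fixed-shape two-layer MLP."""
    return n_in * n_hidden + n_hidden + n_hidden * n_out + n_out


def gru_param_count(in_dim, hid_dim):
    """Three gates, each with input weights, recurrent weights and one bias vector."""
    return 3 * (hid_dim * in_dim + hid_dim * hid_dim + hid_dim)


def block_param_count(n_synapse_types, n_neuron_types, in_dim, hid_dim):
    """Evolved parameters of a fixed library of shared building blocks.

    Assumption: each neuron type is a hid_dim x hid_dim linear layer with bias.
    Note that n_in and n_out do not appear anywhere in this formula.
    """
    neuron_params = hid_dim * hid_dim + hid_dim
    return n_synapse_types * gru_param_count(in_dim, hid_dim) + n_neuron_types * neuron_params


print(mlp_param_count(4, 16, 2))      # 114
print(mlp_param_count(6, 16, 3))      # 163
print(block_param_count(4, 3, 3, 4))  # 444, for any input/output size
```

Because the block count never depends on `n_in` or `n_out`, the same evolved vector can, in principle, be deployed on any of these shapes; the price is that many units share identical parameters, which is exactly where the symmetry dilemma arises.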

The results include evaluations of SFNN in three settings, shown in the first three columns: (a) the same setting as during training, (b) a setting in which the same adjacency matrix is used in all lifetimes, and (c) a setting in which a random permutation of the inputs and outputs is selected at the start of each lifetime. In all three cases, episode scores improve in the later stages of the agent's life, indicating that the network organizes itself into a functional network within a short time. Moreover, Fixed_SFNN obtains high scores only when the same adjacency matrix is used, whereas the standard SFNN is robust to input and output permutations.
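
Setting (c) can be sketched as drawing fresh input and output permutations at the start of every lifetime, so the network cannot rely on a fixed meaning for any input or output neuron. The helper names and the list-based representation are hypothetical; in setting (b), by contrast, a single adjacency matrix would be sampled once and reused for every lifetime.

```python
import random


def sample_permutations(n_in, n_out, rng):
    """Draw a fresh input and output permutation at the start of a lifetime."""
    perm_in = list(range(n_in))
    perm_out = list(range(n_out))
    rng.shuffle(perm_in)
    rng.shuffle(perm_out)
    return perm_in, perm_out


def permute_obs(obs, perm_in):
    # Input neuron k receives observation entry perm_in[k].
    return [obs[p] for p in perm_in]


def unpermute_action(net_out, perm_out):
    # Output neuron k drives action dimension perm_out[k].
    action = [0.0] * len(net_out)
    for k, p in enumerate(perm_out):
        action[p] = net_out[k]
    return action


rng = random.Random(7)
obs = [0.1, -0.2, 0.3, 0.4]  # hypothetical 4-dimensional observation
for lifetime in range(3):
    perm_in, perm_out = sample_permutations(len(obs), 2, rng)
    net_in = permute_obs(obs, perm_in)
    # A real agent would run the network on net_in here; we use a placeholder output.
    action = unpermute_action([0.9, -0.9], perm_out)
    print(lifetime, perm_in, perm_out, net_in, action)
```

A network with a fixed wiring and fixed input/output roles would have to be retrained for each new permutation, whereas a network that self-organizes within a lifetime can, in principle, discover the current mapping from experience.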

In conclusion, the researchers proposed SFNNs, which consist of connected gated recurrent units (GRUs) acting as synaptic plasticity rules and linear layers acting as neurons. SFNN is designed so that its parameters can be optimized across environments with different input and output shapes, and it allows the agent it controls to improve rapidly during its lifetime. In the comparisons, the SymLA method, with its limited set of plastic parameters, cannot form networks that handle multiple tasks, while SFNN_single is potentially under-parameterized in terms of its evolved parameters. A limitation of this work is that as the input and output sizes of the environments increase, the symmetry dilemma is magnified and becomes harder to overcome.

The post Structurally Flexible Neural Networks: An AI Approach to Solve a Symmetric Dilemma for Optimizing Units and Shared Parameters appeared first on MarkTechPost.