Retrieval-augmented generation (RAG) is a potent strategy that improves the capabilities of Large Language Models (LLMs) by integrating outside knowledge. However, RAG is prone to a particular type of attack known as retrieval corruption. In these types of attacks, malicious actors introduce destructive sections into the collection of retrieved documents, which leads to the model producing replies that are either erroneous or deceptive. This vulnerability seriously threatens the dependability of systems that use RAG.

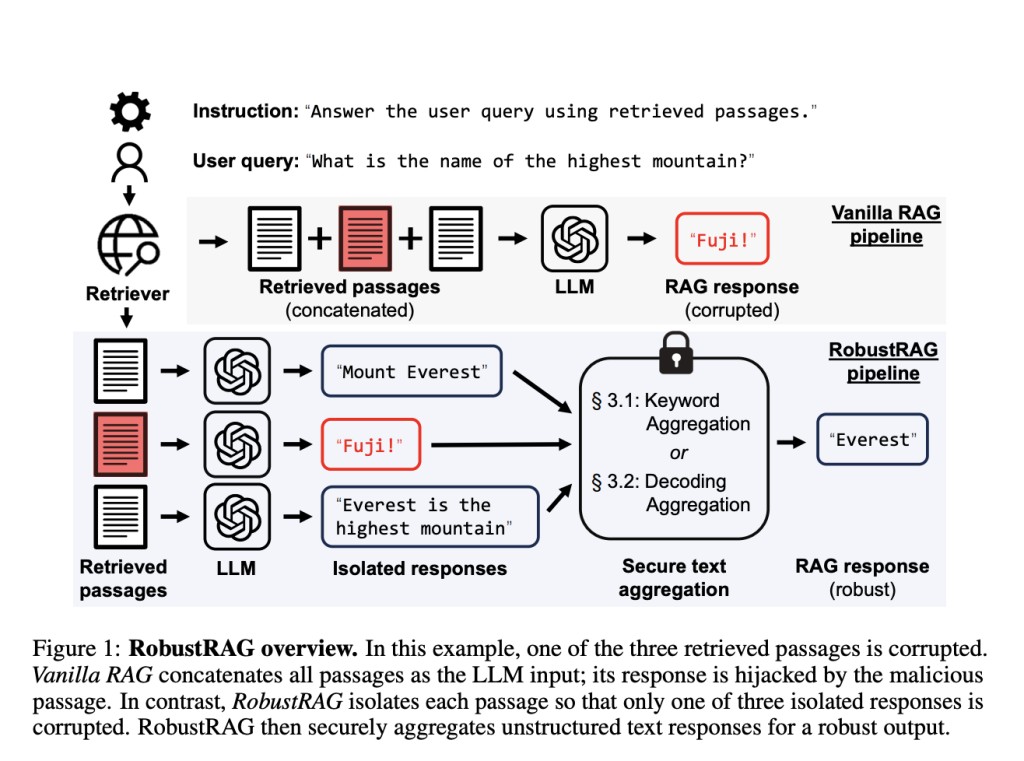

In recent research from Princeton University and UC Berkeley, RobustRAG, a unique defense framework, has been presented to counter these threats. The first of its type, RobustRAG, has been made specially to guard against retrieval corruption. RobustRAG’s primary tactic is an isolate-then-aggregate methodology. This indicates that in order to provide distinct responses, the model first analyses each retrieved text separately. The final solution is then created by safely combining these discrete responses.

Keyword-based and decoding-based algorithms have been devised to secure aggregate unstructured text answers and achieve RobustRAG. These algorithms guarantee that the influence of tainted passages can be limited and lessened during the aggregation process, even in the event that some are recovered.

RobustRAG’s capacity to achieve certifiable robustness is one of its key strengths. This means that for specific query types, it can be demonstrated using formal means that RobustRAG will always generate accurate results, even in the event that an attacker knows every detail about the defense measures and is able to introduce a finite number of harmful passages. This formal evidence offers a high degree of security regarding the dependability of the system in the event of an attack.

Thorough studies on a range of datasets, including open-domain question answering (QA) and long-form text production, have proven RobustRAG’s effectiveness and versatility. These tests have demonstrated that RobustRAG not only provides strong protection against retrieval corruption but also performs well in terms of generalization across various workloads and datasets. Because of this, RobustRAG is a strong option for enhancing retrieval-augmented generation systems’ security and dependability.

The team has summarized their primary contributions as follows.

RobustRAG is the first defense architecture created especially to oppose retrieval corruption attacks in retrieval-augmented generation systems.

Secure Text Aggregation Techniques: For RobustRAG, the team has created two robust text aggregation techniques: decoding-based and keyword-based algorithms. These methods have official certification that they will continue to be accurate and dependable even in the presence of certain threat scenarios involving retrieval corruption.

Verification of RobustRAG’s Performance: The team has conducted thorough testing to verify RobustRAG’s robustness and generalizability. Three distinct LLMs – Misttral, Llama, and GPT, as well as three different datasets – RealtimeQA, NQ, and Bio, have been evaluated. This illustrates how RobustRAG is widely applicable and efficient in a variety of settings and jobs.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter. Join our Telegram Channel, Discord Channel, and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our 43k+ ML SubReddit | Also, check out our AI Events Platform

The post RobustRAG: A Unique Defense Framework Developed for Opposing Retrieval Corruption Attacks in Retrieval-Augmented Generation (RAG) Systems appeared first on MarkTechPost.

Source: Read MoreÂ