Despite advances in artificial intelligence for medicine, most medical AI systems have limited applicability: each is built for a narrowly defined task, which leaves a gap between the solutions being developed and the needs of clinical practice. Researchers from Harvard Medical School, USA; Jawaharlal Institute of Postgraduate Medical Education and Research, India; and Scripps Research Translational Institute, USA, proposed MedVersa to address the challenges that have hindered the widespread adoption of medical AI systems in clinical practice. The key issue is the task-specific design of existing models, which prevents them from adapting to the diverse and complex needs of healthcare settings. MedVersa, a generalist learner capable of multifaceted medical image interpretation, is designed to overcome these challenges.

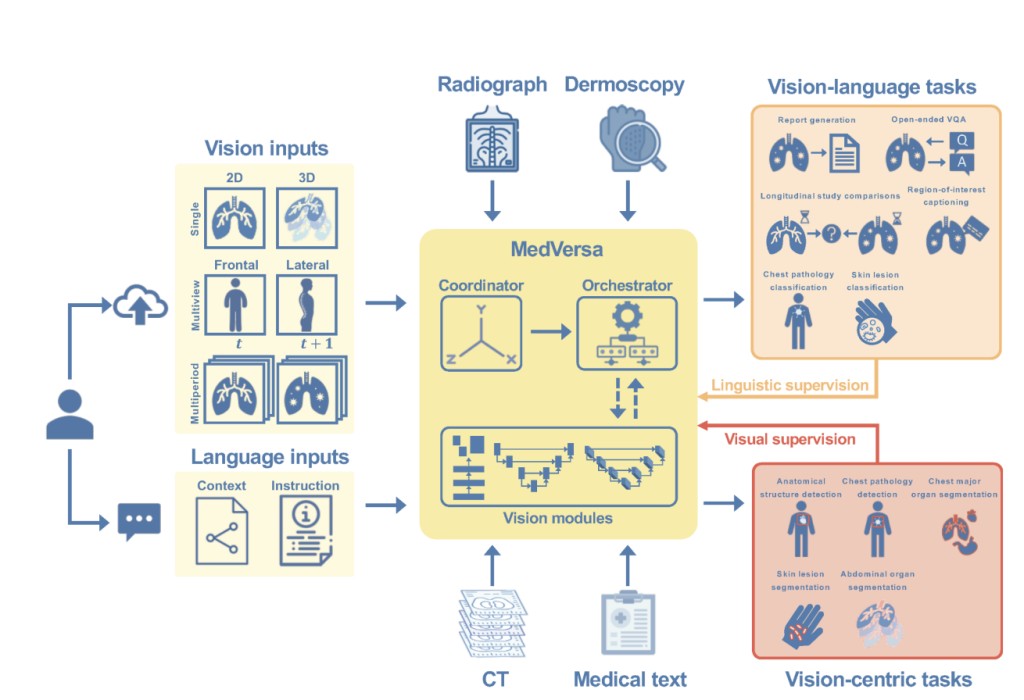

Current medical AI systems are predominantly designed for specific tasks, such as identifying chest pathologies or classifying skin diseases. However, these task-specific approaches limit their adaptability and usability in real-world clinical scenarios. In contrast, MedVersa, the proposed solution, is a generalist learner that leverages a large language model as a learnable orchestrator. The unique architecture of MedVersa enables it to learn from both visual and linguistic supervision, supporting multimodal inputs and real-time task specification. Unlike previous generalist medical AI models that focus solely on natural language supervision, MedVersa integrates vision-centric capabilities, allowing it to perform tasks such as detection and segmentation crucial for medical image interpretation.

MedVersa's method involves three key components: a multimodal input coordinator, a learnable orchestrator based on a large language model (LLM), and a set of learnable vision modules. The multimodal input coordinator processes both visual and textual inputs, while the LLM orchestrates the execution of tasks using the language and vision modules. This architecture enables MedVersa to excel both in vision-language tasks, such as generating radiology reports, and in vision-centric tasks, such as detecting anatomical structures and segmenting medical images. To train the model, the researchers combined more than 10 publicly available medical datasets covering various tasks, including MIMIC-CXR, Chest ImaGenome, and Medical-Diff-VQA, into a single multimodal dataset called MedInterp.
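The division of labor between the orchestrator and the vision modules can be illustrated with a toy dispatcher. This is a hypothetical sketch, not MedVersa's implementation: the `Request` type, the `detect` and `segment` stubs, and the keyword-matching router are all stand-ins. In MedVersa the routing decision is made by the learned LLM orchestrator, not by string matching, and the vision modules are trainable networks rather than stubs.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Request:
    """A multimodal request: image identifiers plus a free-text task instruction."""
    images: List[str]
    instruction: str


# Hypothetical vision-module stubs; in MedVersa these are learnable networks.
def detect(images: List[str]) -> str:
    return f"detection boxes for {len(images)} image(s)"


def segment(images: List[str]) -> str:
    return f"segmentation masks for {len(images)} image(s)"


VISION_MODULES: Dict[str, Callable[[List[str]], str]] = {
    "detect": detect,
    "segment": segment,
}


def orchestrate(req: Request) -> str:
    """Stand-in for the LLM orchestrator: route the task by keyword.

    A real orchestrator would be a fine-tuned LLM that decides, from the
    instruction, whether to answer in text or to invoke a vision module.
    """
    text = req.instruction.lower()
    for keyword, module in VISION_MODULES.items():
        if keyword in text:
            return module(req.images)
    # No vision module requested: fall back to a (stubbed) text answer.
    return f"text answer to: {req.instruction}"
```

For example, `orchestrate(Request(["cxr.png"], "Segment the left lung"))` returns `"segmentation masks for 1 image(s)"`, while an instruction such as "Write a radiology report" falls through to the text branch. Keeping the modules in a dictionary means a new vision capability can be added without changing the routing loop.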

MedVersa coordinates multimodal inputs using separate vision encoders and an orchestrator optimized for medical tasks. For the 2D vision encoder, the researchers used the base version of the Swin Transformer pre-trained on ImageNet; for the 3D vision encoder, they used the encoder architecture from 3D UNet. Images were cropped to between 50% and 100% of the original image, resized to 224 × 224 pixels with three channels, and further augmented in task-specific ways. The system also uses two separate linear projectors for 2D and 3D data. The orchestrator is trained with Low-Rank Adaptation (LoRA), which uses low-rank matrix decomposition to approximate the update to a large weight matrix in a neural network layer. With both the LoRA rank and alpha set to 16, training stays efficient because only a small fraction of the model's parameters is modified.
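The LoRA update described above can be sketched in plain Python. This is a minimal illustration of the standard LoRA formulation, in which a frozen weight W0 receives a trainable low-rank update B·A scaled by alpha/r, and not MedVersa's training code. The class and function names, the zero initialization of B, and the 4096 × 4096 layer size used in the usage note are assumptions for illustration; only the rank and alpha of 16 come from the article.

```python
import random
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product: rows of a times columns of b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


class LoRALinear:
    """Frozen weight W0 (d_out x d_in) plus a trainable low-rank update B @ A.

    Effective weight: W = W0 + (alpha / r) * B @ A,
    with B of shape d_out x r and A of shape r x d_in.
    """

    def __init__(self, w0: Matrix, r: int = 16, alpha: float = 16.0, seed: int = 0):
        rng = random.Random(seed)
        d_out, d_in = len(w0), len(w0[0])
        self.w0 = w0                        # frozen pretrained weight
        self.r, self.scale = r, alpha / r   # alpha = r = 16 gives scale 1.0
        # A starts small and random, B starts at zero, so initially W == W0.
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.b = [[0.0] * r for _ in range(d_out)]

    def effective_weight(self) -> Matrix:
        delta = matmul(self.b, self.a)
        return [[w + self.scale * d for w, d in zip(w_row, d_row)]
                for w_row, d_row in zip(self.w0, delta)]

    def forward(self, x: List[float]) -> List[float]:
        w = self.effective_weight()
        return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def trainable_fraction(d_out: int, d_in: int, r: int) -> float:
    """Share of a layer's parameters that LoRA trains: r*(d_out+d_in) / (d_out*d_in)."""
    return r * (d_out + d_in) / (d_out * d_in)
```

Only `a` and `b` would be updated during training. For a hypothetical 4096 × 4096 projection, `trainable_fraction(4096, 4096, 16)` gives 0.0078125, so roughly 0.78% of that layer's parameters are trained, which is the sense in which LoRA modifies only a fraction of the model.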

MedVersa outperforms existing state-of-the-art models on multiple tasks, including radiology report generation and chest pathology classification. It adapts to impromptu task specifications and performs consistently across external cohorts, indicating robustness and good generalization. In chest pathology classification, MedVersa achieves an average F1 score of 0.615, notably higher than DAM's 0.580. In detection tasks, MedVersa surpasses YOLOv5 on a variety of anatomical structures, achieving higher IoU scores on several of them, particularly the lung zones. Combining vision-centric training with vision-language training yielded an average improvement of 4.1% over models trained solely on vision-language data.
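The two metrics quoted above can be made concrete with short definitions. This sketch assumes axis-aligned bounding boxes in `(x_min, y_min, x_max, y_max)` form for IoU and a single-class F1 computed from true positives, false positives, and false negatives; the article does not say how the paper represents detections or how it averages F1 across pathologies, so those details are assumptions.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))  # overlap width
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))  # overlap height
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def f1(tp: int, fp: int, fn: int) -> float:
    """F1, the harmonic mean of precision and recall: 2TP / (2TP + FP + FN)."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0
```

For instance, two 2 × 2 boxes offset by one unit in each direction overlap in a 1 × 1 region, so `iou((0, 0, 2, 2), (1, 1, 3, 3))` is 1/7, about 0.143, and `f1(6, 2, 2)` is 0.75.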

In conclusion, the study presents MedVersa, a state-of-the-art generalist medical AI (GMAI) model that supports multimodal inputs and outputs as well as on-the-fly task specification. By integrating visual and linguistic supervision during training, MedVersa demonstrates superior performance across a wide range of tasks and modalities. Its adaptability and versatility make it a valuable resource in medical AI, paving the way for more thorough and efficient AI-assisted clinical decision-making.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post MedVersa: A Generalist Learner that Enables Flexible Learning and Tasking for Medical Image Interpretation appeared first on MarkTechPost.