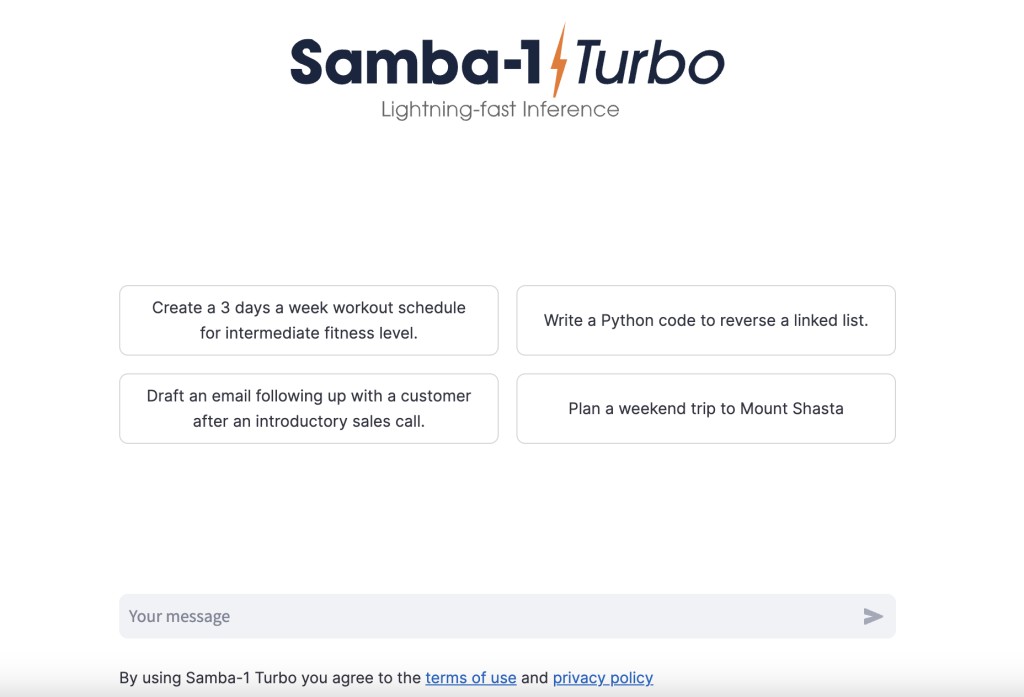

In an era where the demand for rapid and efficient AI model processing is skyrocketing, SambaNova Systems has shattered records with the release of Samba-1-Turbo. This technology achieves a world record of processing 1,000 tokens per second at 16-bit precision, powered by the SN40L chip and running the Llama-3 Instruct (8B) model. At the center of Samba-1-Turbo’s performance is the Reconfigurable Dataflow Unit (RDU), the technology that sets it apart from traditional GPU-based systems.

GPUs are often hampered by their limited on-chip memory capacity, which necessitates frequent data transfers between GPU and system memory. This back-and-forth data movement leads to significant underutilization of the GPU’s compute units, especially when dealing with large models that fit only partially on-chip. SambaNova’s RDU, however, provides a large pool of distributed on-chip memory through its Pattern Memory Units (PMUs). Positioned close to the compute units, these PMUs minimize the need for data movement, thus improving efficiency.
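As a rough illustration of why data movement matters at this speed, the following back-of-envelope sketch estimates how many bytes of model weights would have to be read per second. It rests on assumptions that are not from the article: that the model has roughly 8 billion parameters, that every parameter is read once per generated token, and that a single sequence is decoded without batching. The function name is hypothetical.

```python
def weight_traffic_bytes_per_second(num_params: int,
                                    bytes_per_param: int,
                                    tokens_per_second: int) -> int:
    """Bytes of weights read per second, assuming each parameter
    is read exactly once for every generated token."""
    return num_params * bytes_per_param * tokens_per_second


# Assumed figures: ~8 billion parameters, 16-bit (2-byte) weights,
# and the 1,000 tokens per second quoted in the article.
params = 8_000_000_000
traffic = weight_traffic_bytes_per_second(params, 2, 1_000)

print(f"{traffic / 1e12:.1f} TB/s")  # prints "16.0 TB/s"
```

Under these simplified assumptions the weights alone account for about 16 TB of reads per second, which suggests why keeping data close to the compute units is central to the design.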

Traditional GPUs execute neural network models in a kernel-by-kernel fashion. Each layer’s kernel is loaded and executed, and its results are returned to memory before moving on to the next layer. This constant context switching and data shuffling increase latency and result in underutilization. In contrast, the SambaFlow compiler maps the entire neural network model as a dataflow graph onto the RDU fabric, enabling pipelined dataflow execution. This means activations can flow seamlessly through layers without excessive memory accesses, greatly enhancing performance.
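The contrast between the two execution styles can be sketched with a toy model. This is only an illustration, not SambaFlow or any GPU runtime: the `CountingMemory` class and both `run_*` functions are hypothetical, and each "layer" is a plain Python function on a single number. The point is to count how often an intermediate result has to pass through memory.

```python
from typing import Callable, List

Layer = Callable[[float], float]


class CountingMemory:
    """Hypothetical stand-in for off-chip memory that counts accesses."""

    def __init__(self) -> None:
        self.reads = 0
        self.writes = 0
        self._store = {}

    def write(self, key: str, value: float) -> None:
        self.writes += 1
        self._store[key] = value

    def read(self, key: str) -> float:
        self.reads += 1
        return self._store[key]


def run_kernel_by_kernel(layers: List[Layer], x: float, mem: CountingMemory) -> float:
    """Each layer reads its input from memory and writes its result back."""
    mem.write("act", x)
    for layer in layers:
        activation = mem.read("act")
        mem.write("act", layer(activation))
    return mem.read("act")


def run_pipelined(layers: List[Layer], x: float, mem: CountingMemory) -> float:
    """Activations flow from layer to layer; memory is touched only
    for the initial input and the final output."""
    mem.write("act", x)
    activation = mem.read("act")
    for layer in layers:
        activation = layer(activation)  # stays "on-chip" in this toy model
    mem.write("out", activation)
    return mem.read("out")


layers = [lambda v: v * 2, lambda v: v + 1, lambda v: v * v]

gpu_mem, rdu_mem = CountingMemory(), CountingMemory()
gpu_result = run_kernel_by_kernel(layers, 3.0, gpu_mem)
rdu_result = run_pipelined(layers, 3.0, rdu_mem)

print(gpu_result, gpu_mem.reads, gpu_mem.writes)  # 49.0 4 4
print(rdu_result, rdu_mem.reads, rdu_mem.writes)  # 49.0 2 2
```

Both versions compute the same result, but in the kernel-by-kernel version the number of memory accesses grows with the number of layers, while in the pipelined version it stays constant.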

Handling large models on GPUs often requires complex model parallelism, in which the model is partitioned across multiple GPUs. This process is not only intricate but also demands specialized frameworks and code. SambaNova’s RDU architecture automates data and model parallelism when mapping a model onto multiple RDUs in a system, eliminating manual intervention. This automation simplifies the process and helps ensure good performance.
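To give a sense of the bookkeeping that manual model parallelism involves, here is a minimal sketch of one simple strategy: splitting a stack of layers into contiguous blocks, one per device. The function `partition_layers` is hypothetical and is not taken from any GPU framework or from SambaNova's software; real systems must also handle communication, memory limits, and load balancing.

```python
from typing import List


def partition_layers(num_layers: int, num_devices: int) -> List[range]:
    """Assign contiguous blocks of layer indices to devices,
    spreading any remainder over the first devices."""
    if num_devices <= 0:
        raise ValueError("num_devices must be positive")
    base, extra = divmod(num_layers, num_devices)
    assignments = []
    start = 0
    for device in range(num_devices):
        size = base + (1 if device < extra else 0)
        assignments.append(range(start, start + size))
        start += size
    return assignments


# Example: a 32-layer model split across 4 devices (illustrative numbers).
for device, block in enumerate(partition_layers(32, 4)):
    print(f"device {device}: layers {block.start}..{block.stop - 1}")
```

Even this simplest scheme has to be written and maintained by hand on the application side, which is the kind of manual step the article says the RDU toolchain automates.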

Samba-1-Turbo’s speed and efficiency are powered by the Meta-Llama-3-8B-Instruct model, one of a series of offerings that also includes Mistral-T5-7B-v1, v1olet_merged_dpo_7B, WestLake-7B-v2-laser-truthy-dpo, and DonutLM-v1. Furthermore, SambaNova’s SambaLingo suite supports multiple languages, including Arabic, Bulgarian, Hungarian, Russian, Serbian (Cyrillic), Slovenian, Thai, Turkish, and Japanese, showcasing the system’s versatility and global applicability.

The tight integration of hardware and software in Samba-1-Turbo is the key to its success. This innovation makes generative AI more accessible and efficient for enterprises and is poised to drive significant advancements in AI applications, from natural language processing to complex data analysis.

In conclusion, SambaNova Systems has set a new benchmark with Samba-1-Turbo and paved the way for the future of AI. The world record-breaking speed, combined with the efficiency and automation of the RDU architecture, positions Samba-1-Turbo as a game-changer in the industry. Enterprises looking to leverage the full potential of generative AI now have a powerful new tool at their disposal, capable of unlocking unprecedented levels of performance and productivity.

Sources

https://fast.snova.ai/

https://x.com/IntuitMachine/status/1795570166706720909

https://x.com/SambaNovaAI/status/1795554540814565838

The post SambaNova Systems Breaks Records with Samba-1-Turbo: Transforming AI Processing with Unmatched Speed and Innovation appeared first on MarkTechPost.
