An AI's ability to comprehend and simulate the physical environment rests on its world model (WM), an abstract representation of that environment. The model covers objects, scenes, agents, physical laws, spatiotemporal information, and dynamic interactions. Specifically, it predicts how the state of the world changes in response to actions. A general world model can therefore help with interactive content development, such as creating realistic virtual scenes for movies and games, building VR and AR experiences, and producing training and instructional simulations.

Modern LLMs can generate natural-sounding human language and act as world models of a sort in specific reasoning tasks. However, some aspects of the world, including intuitive physics (such as predicting fluid flow from its viscosity), are not amenable to, or efficiently described by, words alone. LLMs also depend on patterns in textual data without grasping the underlying realities the text portrays, so they lack a strong grasp of physical and temporal dynamics in the actual world.

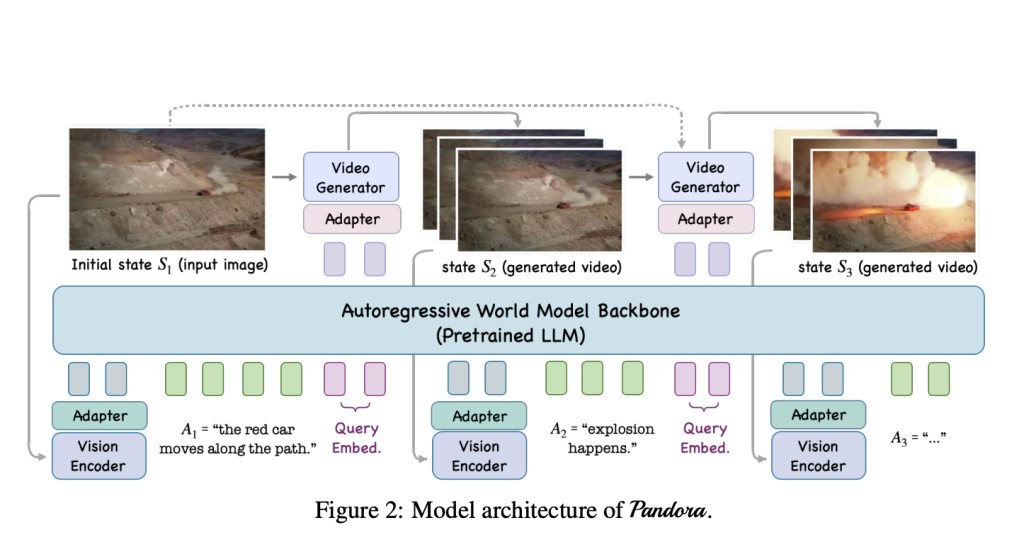

A study by Maitrix.org introduces Pandora, a first step towards a general world model. Pandora uses video generation to simulate world states in different domains and permits real-time control through arbitrary actions expressed in natural language. Pandora is an autoregressive model that takes free-form text and previous video states as inputs and produces new video states as outputs.

Pandora is trained with a staged approach that involves two main steps: large-scale pretraining on massive video and text data, to learn a domain-general understanding of the world and how to produce consistent video simulations; and instruction tuning on high-quality text-video sequential data, to learn text control at any point during video generation. Notably, the pretraining stage allows the video and text models to be trained separately. Since existing pretrained LLMs and (text-to-)video generation models have already attained domain generality and video consistency, they can simply be reused. All that remains is to combine the language and video models, add any needed extra modules, and perform some lightweight tuning. In this work, the Vicuna-7B-v1.5 language model and the DynamiCrafter text-to-video model serve as the two backbones: Vicuna-7B-v1.5 provides the language backbone of the world model, while DynamiCrafter generates videos conditioned on the text inputs.

Looking ahead, the researchers anticipate that larger and more advanced pretrained models, like GPT-4 and Sora, will produce even better results. For the instruction tuning stage, they build a large, heterogeneous set of action-state sequential data by re-captioning general-domain videos and by synthesizing data from simulators for robotics, indoor/outdoor activities, driving, 2D games, and more.

The researchers demonstrate Pandora's wide range of outputs across several domains. The model displays several desirable properties not seen in earlier models, and the results also suggest considerable room for improvement through future training at a larger scale.

Pandora can generate videos in many general domains, including indoor/outdoor, natural/urban, human/robot, 2D/3D, and more. This domain generality is largely due to the large-scale video pretraining.

Pandora accepts natural-language actions as inputs during video generation to steer the future states of the simulated world. Crucially, this differs from earlier text-to-video models, which accept a text prompt only at the beginning of the video. This on-the-fly control is what lets a world model deliver on its promise of supporting interactive content development and more robust reasoning and planning. It is made possible by the model's autoregressive architecture, which allows text inputs at any moment; the pretrained LLM backbone, which understands arbitrary text expressions; and the instruction tuning stage, which significantly improves control effectiveness.
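The idea of accepting an action at any step of an autoregressive rollout can be illustrated with a deliberately tiny toy. This is only a sketch and not Pandora's implementation: the "video state" is reduced to a single integer position, and the names `VELOCITY`, `step`, and `rollout` are hypothetical, introduced here for illustration.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical toy stand-in for a world model: the "video state" is one
# integer position and an action is a free-text string. None of these
# names come from Pandora.
VELOCITY = {"move left": -1, "move right": 1, "stop": 0}


def step(position: int, velocity: int, action: Optional[str]) -> Tuple[int, int]:
    """Predict the next state from the current state and an optional action."""
    if action is not None:
        velocity = VELOCITY[action]  # a new action changes the dynamics
    return position + velocity, velocity


def rollout(actions: Dict[int, str], n_steps: int) -> List[int]:
    """Autoregressive rollout; `actions` maps a step index to a text action."""
    position, velocity = 0, 0
    states = [position]
    for t in range(n_steps):
        # The action for step t may be absent, in which case the world
        # simply keeps evolving from its previous state.
        position, velocity = step(position, velocity, actions.get(t))
        states.append(position)
    return states


print(rollout({0: "move right", 3: "move left"}, n_steps=6))
# prints [0, 1, 2, 3, 2, 1, 0]
```

A prompt-at-start model corresponds to supplying only the action at step 0; here the second action at step 3 redirects the rollout midway.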

Instruction tuning on high-quality data makes it possible to learn effective action control and transfer it to various unseen domains. The team shows that actions learned in one domain can be readily applied to states in other, completely different domains.

Current video generation methods based on diffusion architectures usually produce videos of a fixed duration (say, 2 seconds). By combining the pretrained video model with the autoregressive LLM backbone, Pandora can automatically extend the video length indefinitely.
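A minimal sketch of how a fixed-length generator can be chained into a longer sequence, assuming each new chunk is conditioned on the last frame produced so far. Frames are plain integers here, and `CHUNK`, `generate_chunk`, and `extend` are hypothetical names for illustration, not part of Pandora.

```python
from typing import List

CHUNK = 4  # frames produced per call; a stand-in for a fixed clip length


def generate_chunk(last_frame: int) -> List[int]:
    """Toy fixed-length generator: continues counting from the last frame."""
    return [last_frame + i for i in range(1, CHUNK + 1)]


def extend(first_frame: int, n_chunks: int) -> List[int]:
    """Chain fixed-length chunks, conditioning each on the previous output."""
    video = [first_frame]
    for _ in range(n_chunks):
        video.extend(generate_chunk(video[-1]))
    return video


video = extend(first_frame=0, n_chunks=3)
print(len(video))  # prints 13
```

The loop has no built-in upper bound on `n_chunks`, which is the sense in which an autoregressive wrapper removes the fixed-duration limit of the underlying generator.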

The researchers stress that Pandora is only an early step towards a general world model (GWM). While it shows promising results, it also has limitations. For instance, it struggles with understanding physical laws and common sense, generating consistent videos, and simulating complicated scenarios. These areas require further research and development.

Nevertheless, the team believes that larger-scale training with more powerful backbone models (such as GPT-4 and Sora) will result in better domain generality, video consistency, and action controllability. They are also enthusiastic about extending the model to more modalities, such as audio, to better measure and simulate the world.


The post Pandora: A Hybrid Autoregressive-Diffusion Model that Simulates World States by Generating Videos and Allows Real-Time Control with Free-Text Actions appeared first on MarkTechPost.
