The InternLM research team develops large language models (LLMs) specialized for mathematical reasoning and problem-solving. These models aim to strengthen artificial intelligence’s ability to handle intricate mathematical tasks, from formal proofs to informal problem-solving.

Researchers have noted that current AI models often fall short of the depth and precision required for complex mathematical computations and logical proofs. Improving AI’s mathematical reasoning is therefore crucial, as existing models struggle to match the accuracy and efficiency that more sophisticated tasks demand.

Traditional methods for training these models rely on extensive datasets of mathematical problems and solutions. Techniques such as chain-of-thought and program-of-thought reasoning mimic the step-by-step process humans follow when solving mathematical problems: chain-of-thought writes out intermediate reasoning in natural language, while program-of-thought expresses the solution as a program that is executed to obtain the answer. However, these approaches often lack the efficiency and precision needed for more complex mathematical tasks, underscoring the need for new solutions.
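To make the program-of-thought idea concrete, here is a minimal Python sketch of its execution step: instead of doing arithmetic in prose, the model emits a short program, and the interpreter computes the final answer. The generated program below is a hard-coded, hypothetical stand-in for model output, and the convention of storing the result in a variable named `answer` is an assumption for illustration, not InternLM's actual prompt format.

```python
# Hypothetical stand-in for a program an LLM might generate for a word problem.
generated_program = """
# Problem: A store sells pencils at 3 for $0.75. How much do 10 pencils cost?
price_per_pencil = 0.75 / 3
answer = round(price_per_pencil * 10, 2)
"""


def run_program_of_thought(program: str):
    """Execute a generated program and return the value it stores in `answer`.

    Assumes (for illustration) that the program assigns its final result to a
    variable named `answer`. exec() is NOT a sandbox; real systems isolate
    this step because model-generated code is untrusted.
    """
    namespace: dict = {}
    # Top-level assignments in the program land in `namespace`.
    exec(program, {}, namespace)
    return namespace["answer"]


print(run_program_of_thought(generated_program))
```

The division of labor is the point of the technique: the language model decomposes the problem, and Python performs the exact computation.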

Researchers from Shanghai AI Laboratory, Tsinghua University, Fudan University, University of Southern California, and Shanghai Jiao Tong University have introduced InternLM2-Math-Plus. The series includes variants with 1.8B, 7B, 20B, and 8x22B parameters, tailored to improve informal and formal mathematical reasoning through enhanced training techniques and datasets. The models aim to close the gap in performance and efficiency on complex mathematical tasks.

The four variants of InternLM2-Math-Plus introduced by the research team:

InternLM2-Math-Plus 1.8B: This variant focuses on providing a balance between performance and efficiency. It has been pre-trained and fine-tuned to handle informal and formal mathematical reasoning, achieving scores of 37.0 on MATH, 41.5 on MATH-Python, and 58.8 on GSM8K, outperforming other models in its size category.

InternLM2-Math-Plus 7B: Designed for more complex problem-solving tasks, this model improves significantly on previous state-of-the-art open-source models. It achieves 53.0 on MATH, 59.7 on MATH-Python, and 85.8 on GSM8K, demonstrating enhanced informal and formal mathematical reasoning capabilities.

InternLM2-Math-Plus 20B: This variant pushes the boundaries of performance further, making it suitable for highly demanding mathematical computations. It achieves scores of 53.8 on MATH, 61.8 on MATH-Python, and 87.7 on GSM8K, indicating its robust performance across various benchmarks.

InternLM2-Math-Plus Mixtral8x22B: The largest variant in the series, Mixtral8x22B, delivers the highest accuracy. It scores 68.5 on MATH and 91.8 on GSM8K, making it the strongest option in the series for the most challenging mathematical tasks.

The InternLM2-Math-Plus models incorporate advanced techniques such as chain-of-thought reasoning, reward modeling, and a code interpreter. The models are pre-trained on diverse, high-quality mathematical data, including synthetic data for numerical operations and domain-specific datasets. Further fine-tuning through supervised learning on curated datasets enhances their problem-solving and verification abilities.
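A common way to use a reward model is to rerank several sampled solutions and keep the highest-scoring one (best-of-N selection). The Python sketch below shows only that selection step. It is a generic illustration, not necessarily how InternLM2-Math-Plus applies its reward model, and the scores are made-up placeholders standing in for a trained reward model's outputs.

```python
from typing import Callable, Sequence


def best_of_n(candidates: Sequence[str], reward_fn: Callable[[str], float]) -> str:
    """Pick the sampled solution that the reward function scores highest."""
    if not candidates:
        raise ValueError("best_of_n needs at least one candidate")
    # max() returns the first candidate among ties.
    return max(candidates, key=reward_fn)


# Three sampled solutions to "What is 17 * 24?" (the first has an arithmetic slip).
candidates = [
    "17 * 24 = 340 + 68 = 398. The answer is 398.",
    "17 * 24 = 425 - 17 = 408. The answer is 408.",
    "17 * 24 is about 400. The answer is 400.",
]

# Hypothetical reward-model scores; a real system would compute these by
# running a trained reward model on each (question, solution) pair.
hypothetical_scores = dict(zip(candidates, [0.21, 0.93, 0.08]))

best = best_of_n(candidates, hypothetical_scores.__getitem__)
print(best)
```

Passing the scorer as a function keeps the selection logic independent of how the reward is produced, whether by an outcome-level model, a step-level model, or a simple rule-based checker.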

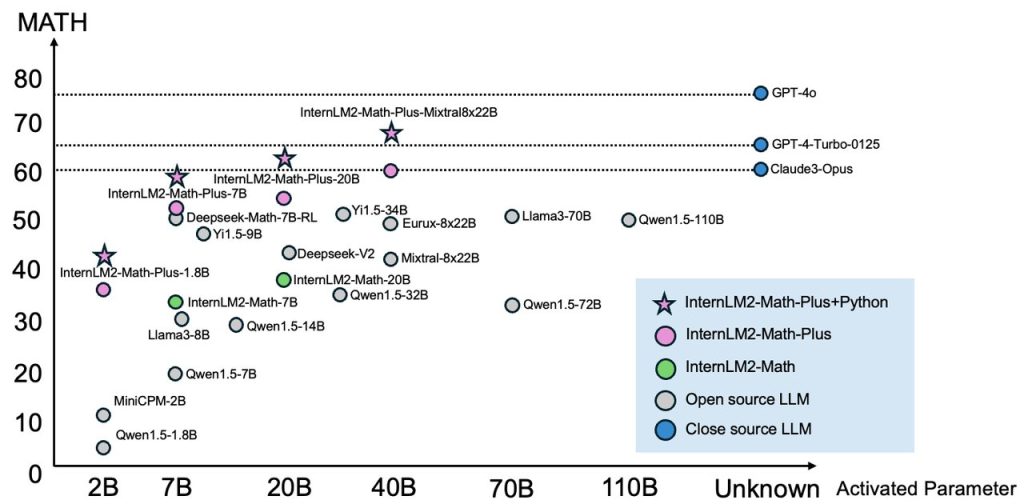

Regarding performance, the InternLM2-Math-Plus models show significant improvements over existing models. In the smallest size category, for example, the 1.8B model outperforms MiniCPM-2B. Similarly, the 7B model surpasses DeepSeek-Math-7B-RL, previously the state-of-the-art open-source math reasoning model. Notably, the largest model, Mixtral8x22B, achieves the series’ highest scores on MATH and GSM8K, indicating the strongest problem-solving capability of the four.

The InternLM2-Math-Plus 1.8B model shows notable performance improvements with scores of 37.0 on MATH, 41.5 on MATH-Python, and 58.8 on GSM8K. The 7B variant enhances these results further, achieving 53.0 on MATH, 59.7 on MATH-Python, and 85.8 on GSM8K. The 20B model also performs impressively, scoring 53.8 on MATH, 61.8 on MATH-Python, and 87.7 on GSM8K. The largest model, Mixtral8x22B, achieves 68.5 on MATH and 91.8 on GSM8K.
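Because the article reports both MATH and MATH-Python scores for three of the variants, a quick calculation shows how many points each gains when it can use a Python interpreter. This sketch assumes both settings are measured on the same problem set; Mixtral8x22B is reported as "n/a" because no MATH-Python score is given for it.

```python
# Scores as reported in this article (no MATH-Python score for Mixtral8x22B).
scores = {
    "1.8B": {"MATH": 37.0, "MATH-Python": 41.5, "GSM8K": 58.8},
    "7B": {"MATH": 53.0, "MATH-Python": 59.7, "GSM8K": 85.8},
    "20B": {"MATH": 53.8, "MATH-Python": 61.8, "GSM8K": 87.7},
    "Mixtral8x22B": {"MATH": 68.5, "GSM8K": 91.8},
}


def code_interpreter_gain(model_scores):
    """Return MATH-Python minus MATH in points, or None if either is missing."""
    if "MATH" not in model_scores or "MATH-Python" not in model_scores:
        return None
    # Round to one decimal to hide floating-point noise from the subtraction.
    return round(model_scores["MATH-Python"] - model_scores["MATH"], 1)


for name, s in scores.items():
    gain = code_interpreter_gain(s)
    print(f"{name:>13}: " + ("n/a" if gain is None else f"+{gain:.1f} points"))
```

On the reported numbers, the gain from tool use grows with model size, from 4.5 points at 1.8B to 8.0 points at 20B.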

Each variant of InternLM2-Math-Plus is designed to address specific needs in mathematical reasoning. The 1.8B model balances performance and efficiency, which suits applications that need a robust yet compact model. The 7B model provides enhanced capabilities for more complex problem-solving tasks. The 20B model pushes performance further, making it suitable for highly demanding mathematical computations. The Mixtral8x22B model, the largest in the series, delivers the highest accuracy, making it the strongest choice for the most challenging mathematical tasks.

In conclusion, the research on InternLM2-Math-Plus signifies a substantial advancement in the mathematical reasoning capabilities of LLMs. The models effectively address key challenges by integrating sophisticated training techniques and leveraging extensive datasets, enhancing performance on various mathematical benchmarks.

The post InternLM Research Group Releases InternLM2-Math-Plus: A Series of Math-Focused LLMs in Sizes 1.8B, 7B, 20B, and 8x22B with Enhanced Chain-of-Thought, Code Interpretation, and LEAN 4 Reasoning appeared first on MarkTechPost.
