In graph analysis, the need for labeled data is a significant hurdle for traditional supervised learning methods, particularly in academic, social, and biological networks. To overcome this limitation, Graph Self-supervised Pre-training (GSP) techniques have emerged. They leverage the intrinsic structure and properties of graph data to extract meaningful representations without labeled examples. GSP methods are broadly classified into two categories: contrastive and generative.

Contrastive methods, like GraphCL and SimGRACE, create multiple graph views through augmentation and learn representations by contrasting positive and negative samples. Generative methods like GraphMAE and MaskGAE focus on learning node representations via a reconstruction objective. Notably, generative GSP approaches are often simpler and more effective than their contrastive counterparts, which rely on meticulously designed augmentation and sampling strategies.

Current generative Graph Masked AutoEncoder (GMAE) models primarily concentrate on reconstructing node features, and therefore capture predominantly node-level information. This single-scale approach fails to address the multi-scale nature inherent in many graphs, such as social networks, recommendation systems, and molecular structures. These graphs contain both node-level details and subgraph-level information, exemplified by functional groups in molecular graphs. The inability of current GMAE models to learn this complex, higher-level structural information results in diminished performance.
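
As a rough illustration of the node-level objective described above, the sketch below computes a reconstruction error over masked nodes only. The function name, the toy data, and the choice of mean squared error are assumptions made for illustration; individual GMAE models define their own loss functions.

```python
def masked_reconstruction_loss(features, reconstructed, mask):
    """Mean squared error computed only over masked nodes.

    features / reconstructed: one feature vector (list of floats) per node.
    mask: one boolean per node, True where the node's input was hidden.
    """
    total, count = 0.0, 0
    for x, x_hat, is_masked in zip(features, reconstructed, mask):
        if is_masked:
            total += sum((a - b) ** 2 for a, b in zip(x, x_hat))
            count += len(x)
    return total / count if count else 0.0


# Toy example: three nodes with two features each; nodes 0 and 2 are masked.
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
reconstructed = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
mask = [True, False, True]

loss = masked_reconstruction_loss(features, reconstructed, mask)
print(loss)  # 0.5
```

Because the loss only looks at individual nodes, nothing in it rewards the model for getting a whole subgraph right, which is the gap the multi-scale approach targets.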

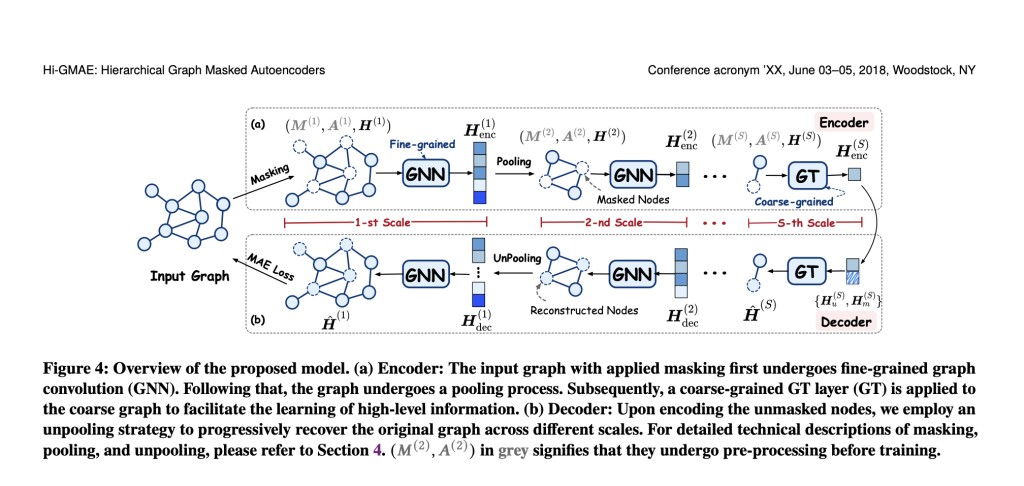

To address these limitations, a team of researchers from various institutions, including Wuhan University, introduced the Hierarchical Graph Masked AutoEncoders (Hi-GMAE) framework. Hi-GMAE comprises three main components designed to capture hierarchical information in graphs. The first component, multi-scale coarsening, constructs coarse graphs at multiple scales using graph pooling methods that cluster nodes into super-nodes progressively.
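
The coarsening step can be pictured with a minimal sketch. It assumes the cluster assignments are already given; in practice a graph pooling method would produce them, and the `coarsen` helper and the toy graph here are hypothetical, not taken from the Hi-GMAE code.

```python
def coarsen(edges, assignment):
    """Merge nodes that share a cluster id into super-nodes.

    edges: set of (u, v) pairs of an undirected graph.
    assignment: assignment[node] is the id of the node's super-node.
    Returns the edge set of the coarse graph, without self-loops.
    """
    coarse_edges = set()
    for u, v in edges:
        cu, cv = assignment[u], assignment[v]
        if cu != cv:
            coarse_edges.add((min(cu, cv), max(cu, cv)))
    return coarse_edges


# Toy graph: two triangles (nodes 0-2 and 3-5) joined by the edge (2, 3).
edges = {(0, 1), (1, 2), (0, 2), (2, 3), (3, 4), (4, 5), (3, 5)}

# Hypothetical assignments, one per scale (stand-ins for a pooling method).
assignments = [
    [0, 0, 1, 2, 3, 3],  # scale 0 -> 1: six nodes into four super-nodes
    [0, 0, 1, 1],        # scale 1 -> 2: four super-nodes into two
]

graphs = [edges]
for assignment in assignments:
    graphs.append(coarsen(graphs[-1], assignment))

print(sorted(graphs[1]))  # [(0, 1), (1, 2), (2, 3)]
print(sorted(graphs[2]))  # [(0, 1)]
```

Each pass shrinks the graph, so the list `graphs` holds the original graph followed by progressively coarser views of it.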

The second component, Coarse-to-Fine (CoFi) masking with recovery, introduces a novel masking strategy that ensures the consistency of masked subgraphs across all scales. This strategy starts with random masking of the coarsest graph, then back-projects the mask to finer scales using an unpooling operation. A gradual recovery process selectively unmasks certain nodes to aid learning from initially fully masked subgraphs.
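
A minimal sketch of the coarse-to-fine idea follows. It makes several assumptions that the description above does not specify: recovery is applied once per scale with a fixed ratio, the mask is a plain list of booleans, and all function and parameter names are hypothetical.

```python
import random


def unpool_mask(coarse_mask, assignment):
    """Back-project a mask: a fine node inherits the mask of its super-node."""
    return [coarse_mask[c] for c in assignment]


def recover(mask, ratio, rng):
    """Unmask a fraction `ratio` of the currently masked nodes (in place)."""
    masked = [i for i, m in enumerate(mask) if m]
    for i in rng.sample(masked, int(len(masked) * ratio)):
        mask[i] = False
    return mask


def cofi_mask(assignments, mask_ratio, recovery_ratio, seed=0):
    """Build one mask per scale, ordered from finest to coarsest.

    assignments[k][i] is the super-node of node i when going from
    scale k to scale k + 1.
    """
    rng = random.Random(seed)
    n_coarsest = max(assignments[-1]) + 1
    n_masked = max(1, int(n_coarsest * mask_ratio))
    masked_ids = set(rng.sample(range(n_coarsest), n_masked))
    masks = [[i in masked_ids for i in range(n_coarsest)]]
    # Walk from the coarsest scale down to the original graph.
    for assignment in reversed(assignments):
        fine_mask = unpool_mask(masks[0], assignment)
        masks.insert(0, recover(fine_mask, recovery_ratio, rng))
    return masks


# Two coarsening steps: six nodes -> four super-nodes -> two super-nodes.
assignments = [[0, 0, 1, 2, 3, 3], [0, 0, 1, 1]]
for scale, mask in enumerate(cofi_mask(assignments, 0.5, 0.5, seed=1)):
    print(scale, mask)
```

In this sketch a node can only be masked if its super-node is masked at the next coarser scale, which is one way to read the consistency requirement; the recovery step then leaves some nodes of each masked subgraph visible.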

The third key component of Hi-GMAE is the Fine- and Coarse-Grained (Fi-Co) encoder and decoder. The hierarchical encoder integrates fine-grained graph convolution modules to capture local information at lower graph scales and coarse-grained graph transformer (GT) modules to focus on global information at higher graph scales. The corresponding lightweight decoder gradually reconstructs and projects the learned representations to the original graph scale, ensuring comprehensive capture and representation of multi-level structural information.
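
The data flow through such a hierarchical encoder and decoder can be sketched without any learned weights. Everything below is a simplified stand-in and an assumption for illustration: neighbour averaging replaces graph convolution, unweighted dot-product attention replaces the graph transformer, the decoder is a single copy-back step, and every cluster is assumed to be non-empty.

```python
import math


def local_mix(features, edges):
    """Fine-grained step: average each node with its direct neighbours."""
    neighbours = [[i] for i in range(len(features))]
    for u, v in edges:
        neighbours[u].append(v)
        neighbours[v].append(u)
    dim = len(features[0])
    return [[sum(features[j][d] for j in nbrs) / len(nbrs) for d in range(dim)]
            for nbrs in neighbours]


def pool(features, assignment):
    """Average node features inside each super-node."""
    n_clusters, dim = max(assignment) + 1, len(features[0])
    sums = [[0.0] * dim for _ in range(n_clusters)]
    counts = [0] * n_clusters
    for x, c in zip(features, assignment):
        counts[c] += 1
        for d in range(dim):
            sums[c][d] += x[d]
    return [[s / counts[c] for s in sums[c]] for c in range(n_clusters)]


def global_mix(features):
    """Coarse-grained step: every super-node attends to all super-nodes."""
    dim = len(features[0])
    out = []
    for q in features:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim)
                  for k in features]
        top = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        out.append([sum(w * k[d] for w, k in zip(weights, features)) / total
                    for d in range(dim)])
    return out


def unpool(coarse_features, assignment):
    """Decoder stand-in: copy each super-node's vector back to its members."""
    return [list(coarse_features[c]) for c in assignment]


def encode_decode(features, edges, assignment):
    fine = local_mix(features, edges)            # local information
    coarse = global_mix(pool(fine, assignment))  # global information
    return unpool(coarse, assignment)            # back to the original scale


# Toy graph: two triangles joined by one edge, two features per node.
edges = {(0, 1), (1, 2), (0, 2), (2, 3), (3, 4), (4, 5), (3, 5)}
features = [[1.0, 0.0], [1.0, 0.0], [1.0, 0.0],
            [0.0, 1.0], [0.0, 1.0], [0.0, 1.0]]
assignment = [0, 0, 0, 1, 1, 1]

output = encode_decode(features, edges, assignment)
print(len(output), len(output[0]))  # 6 2
```

The output has one vector per original node, but each vector now mixes information from the node's neighbourhood and from every super-node in the coarse graph.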

To validate Hi-GMAE, the researchers ran extensive experiments on a range of widely used datasets, covering both unsupervised and transfer learning tasks. The results show that Hi-GMAE outperforms existing state-of-the-art contrastive and generative pre-training models. These findings underscore the advantage of the multi-scale GMAE approach over single-scale models in capturing and exploiting hierarchical graph information.

In conclusion, Hi-GMAE represents a significant advancement in self-supervised graph pre-training. By integrating multi-scale coarsening, an innovative masking strategy, and a hierarchical encoder-decoder architecture, Hi-GMAE effectively captures the complexities of graph structures at various levels. The framework’s superior performance in experimental evaluations solidifies its potential as a powerful tool for graph learning tasks, setting a new benchmark in graph analysis.

The post Hierarchical Graph Masked AutoEncoders (Hi-GMAE): A Novel Multi-Scale GMAE Framework Designed to Handle the Hierarchical Structures within Graph appeared first on MarkTechPost.