AWS has introduced a new feature in AWS Database Migration Service (AWS DMS) that simplifies the migration of data from IBM Db2 databases with the Database Partitioning Feature (DPF) databases to Amazon Simple Storage Service (Amazon S3), a highly scalable and durable object storage service. With this new capability, you can now migrate your data from IBM Db2 DPF databases to Amazon S3, paving the way for building robust data lakes in the cloud.

This new feature streamlines the migration process, provides data integrity, and minimizes the risk of data loss or corruption, even when dealing with large volumes of data distributed across multiple partitions and databases of varying sizes.

In this post, we delve into the intricacies of this new AWS DMS feature and demonstrate how to implement it. We explore best practices for orchestrating data flows and optimizing the migration process, achieving a smooth transition from on-premises IBM Db2 DPF databases to a cloud-based data lake on Amazon S3.

IBM Db2 DPF architecture overview

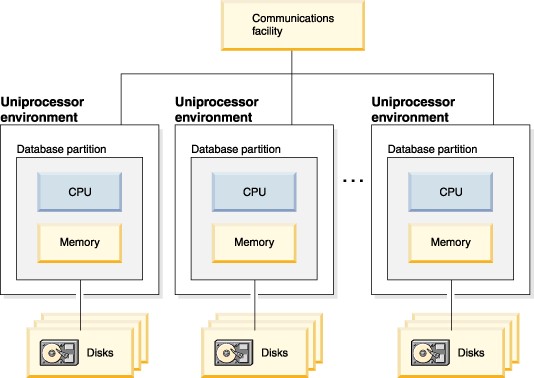

IBM DB2 DPF enables you to partition the database across multiple servers or within a large SMP server. For large data warehouses or datasets, DPF enables scalability (more CPU, memory, disk, and network). As new machines are added, you can spread your data across all the servers in the configuration.

There are many ways to configure DPF logical, such as multiple partitions on a on a single server or partitions across multiple servers etc. For more information, see Database partition and processor environments. For this post, we assume a physical multi-server multi-database partition DPF setup like the following diagram.

In this setup, data for a given table is spread across multiple servers, so in order to get all the data, AWS DMS needs to either connect to each one or use the Communications facility endpoint, which communicates with all the partition servers.

Now let’s turn our focus to the AWS DMS setup for IBM DB2 DPF source. The following diagram depicts how we need to setup DMS for the different styles of data movement tasks DMS supports.

AWS DMS full load

For an AWS DMS full-load task, the most straightforward approach is to connect the Communication facility corresponding to the catalog partition node, which coordinates tasks across logical nodes and pulls the data across to your target. For small and medium databases (less than 10 TB), this approach will work just fine. However, for larger DB2 DPF systems (over 10 TB), this may not be performant enough because you’re running through a single node. Therefore, you can use the parallelism of DPF partitions and unload the data in parallel. For this parallel unload for larger datasets, you can consider two approaches: IBM High Performance Unload or setting up multiple AWS DMS tasks to connect to each DB2 DPF partition endpoint directly. This engages all the parallelism of the DPF configuration and should enable faster bulk data transfer. This AWS DMS setup is similar to the change data capture (CDC) setup described in the next section.

AWS DMS ongoing replication

AWS DMS CDC (ongoing replication) requires a connection to each database partition in the IBM DB2 DPF cluster. The reason for this is AWS DMS doesn’t support coordination of transactions across nodes in a DB2 DPF environment when using the IBM DB2READLOG API. In DPF, a transaction may span multiple nodes, depending upon how the data is partitioned, and AWS DMS may not be fully aware of how that transaction is run. Therefore, there are some limitations to be aware of, but with the approach shown in this post, AWS DMS can capture local transactions for each DPF server.

AWS DMS needs a connection endpoint to each DPF server so it can retrieve local transactions from each individual node in the DPF cluster. This is done by using the AWS DMS extra connection attribute (ECA) connectNode (connectNode=0, connectNode=1). This should be configured on multiple AWS DMS source endpoints based on the number of logical nodes that exist in the database server db2nodes.cfg configuration file $DB2InstHome/sqllib/db2nodes.cfg. This driver configuration ODBC option specifies the database partition server to which a connection is to be made.

Considerations

There are several important considerations and limitations to be aware of during the implementation. For a complete list, see Limitations when using Db2 LUW as a source for AWS DMS.

Solution overview

A typical AWS DMS migration involves the following steps:

Create the source endpoint.

Create the target endpoint.

Create a replication instance.

Create migration tasks.

This post only discusses the creation of the source endpoint, which is specific to the extraction of the data from the IBM Db2 DPF database. For instructions on the remaining steps, see Migrate from self-managed Db2 to Amazon RDS for Db2 using AWS DMS.

Create the source endpoints

Complete the following steps to set up your DB2 source endpoints for each DPF node:

Get the existing DPF logical node numbers from DB2 database server db2nodes.cfg configuration file ($DB2InstHome/sqllib/db2nodes.cfg), as shown in the following screenshot.

On the AWS DMS console, choose Endpoints in the navigation pane.

Choose Create endpoint.

For Endpoint type, select Source endpoint.

Enter a name for your endpoint and an optional Amazon Resource Name (ARN).

For Source engine, choose IBM Db2 LUW.

For Access to endpoint database, select your preferred method of authentication to the Db2 LUW source: AWS Secrets Manager or manual authentication. For this post, we select Provide access information manually.

Enter the connection details, including the server name, port, user name, password.

For Extra connection attributes, enter the value for connectNode (reflecting a DPF node number) and StartFromContext (reflecting LRI, NOW, or Timestamp).

For ongoing replication, if you plan to start replication from a specific timestamp, you must set the StartFromContext connection attribute to the required timestamp.

Choose Create endpoint to finalize the setup of the source endpoint.

Repeat these steps to create an endpoint for each node.

Best practices

In this section, we share some best practices, frequently asked questions, and associated recommendations by the developer community.

How to check the current LSN for all logical nodes at once

Use the following code to check the current log sequence number (LSN) for all logical nodes at once (for this example, we use the database DB_DPF):

db2_all “; db2pd -logs -db DB_DPF”

How to check the current LSN for a separate node or database member

Use the following command to check the current LSN for a separate node or database member (the following example checks node 2):

db2pd -logs -db DB_DPF -member 2

How to get the LRI or LSN range of a log file

Each DPF node has its own translation logs and different path, so to get the log record identifier (LRI) or LSN range of a log file, complete the following steps (for this example, we use log file 13 for database member 2):

Get the main log path from the database config using the following command:

db2 get db cfg for DB_DPF |grep -i “Path to log files”

After you get the path, change the node number in the path. For example:

For node 0, use /home/db2inst1/db2inst1/NODE0000/SQL00001/LOGSTREAM0000/.

For node 2, use /home/db2inst1/db2inst1/NODE0002/SQL00001/LOGSTREAM0000/.

Get the LRI range of file 13 using the command that is used to get the file from the node 2 path:

db2flsn -db DB_DPF -lrirange 13 -logpath /home/db2inst1/db2inst1/NODE0002/SQL00001/LOGSTREAM0002

How to get the LRI or LSN range for multiple log files

Use the following command to get the LRI or LSN range of multiple log files (for this example, log files 9–13 for database member 2):

db2flsn -db DB_DPF -lrirange -startlog 9 -endlog 13 -logpath /home/db2inst1/db2inst1/NODE0002/SQL00001/LOGSTREAM0002

How to get the map estimate LRI to estimate timestamps included

Listing the transaction log files on the Linux file system using the command ls -l would show the write timestamp, which would give insight around the transactions timestamps written around that time. With this information, it’s possible to determine the LRI to be used to start ongoing replication from a specific node.

For example, we can use the preceding output to replicate the transactions starting from January 16 after 14:02 using the LRI. To do so, we have to get the LRI range of log file 7 S0000007.LOG using the following command:

db2flsn -db DB_DPF -lrirange 7 -logpath /home/db2inst1/db2inst1/NODE0002/SQL00001/LOGSTREAM0002

Use the lowest LRI to be included on the source ECA as follows:

StartFromContext=010000000000008D2600000000001A4591

Source latency tuning

If high source latency is persistent, using the source ECA MaxKBytesPerRead can help alleviate the latency. This ECA increases the size of the fetch buffer for db2ReadLog calls, thereby increasing the number of events read from the DB2 transaction log in a single call. Using this ECA will be more memory consumptive on the individual source DB2 nodes. For more details, see Endpoint settings when using Db2 LUW as a source for AWS DMS.

Amazon S3 target considerations

With multiple task and endpoints and the way Amazon S3 processes data, it’s recommended to have a separate folder for each task. This avoids the possibility of multiple AWS DMS tasks contending for the same file name in the target. Amazon S3 doesn’t have native multi-version concurrency control (MVCC) due to it being an object store.

For example, having a three-partition source writing 16 million records in under 5 minutes to the same S3 folder resulted in only 15,950,067 records landing at the target. Splitting out the target file paths amongst the individual nodes resulted in consistent data migration during the same CDC source load.

At a high level, when using DB2 DPF as a source and Amazon S3 as a target, make sure to use separate folders per node to achieve data consistency during full load or CDC.

Security in AWS DMS

Security is of paramount importance in any organization. Organizations need to make sure that strong security measures are in place while migrating the data from on premises to the cloud.

AWS DMS provides several security features, including encryption of data in transit using TLS, encryption of data at rest using AWS Key Management Service (AWS KMS), and secure connections using VPCs and security groups. It also integrates with AWS Identity and Access Management (IAM) for access control and auditing, and supports database activity streams for monitoring and auditing database activities.

Security is a shared responsibility between AWS and the customer. The shared responsibility model describes this as security of the cloud and security in the cloud. For more information about how to apply the shared responsibility model when using AWS DMS, see Security in AWS Database Migration Service.

Conclusion

In this post, we discussed how you can use AWS DMS to copy data from your IBM DB2 DPF databases to Amazon S3 for building a data lake. With the steps and best practices provided, you can set up your data copy and ongoing replication from your important IBM DB2 DPF sources and enable further analytic and generative artificial intelligence (AI) use cases.

Try this approach for yourself, and leave any comments in the comments section.

About the Authors

Yadukishore Tatavarthi is a Senior Partner Solutions Architect supporting Healthcare and life science customers at Amazon Web Services. He has been helping the customers over the last 20 years in building the enterprise data strategies, advising customers on Generative AI, cloud implementations, migrations, reference architecture creation, data modeling best practices, data lake/warehouses architectures.

David Gardner is a Principal Specialist Solution Architect who leverages his years of database and analytic experience assisting customers migrate, modernize and operate their databases & workload in AWS Cloud.

Ahmed Darwish  is a Database Engineer in Database Migration Service at AWS specialized in Oracle and DB2. His expertise contributes to the advanced technical aspects of database migration and management within AWS’s extensive service. He works with our customers to provide guidance and technical assistance on database migration projects, helping them improve the value of their solutions when using AWS.

Michael Newlin is a Cloud Support DBE with Amazon Web Services and Subject Matter Expert for AWS Database Migration Service. At AWS he works with Customers and Internal teams to ensure smooth and fast transitions of database workloads to AWS.

Source: Read More