Large language models (LLMs) are moving into clinical and medical settings as they grow in capability and versatility. These models offer a number of potential benefits, including the capacity to supplement or even replace some of the work that doctors typically do. This includes providing medical information, keeping track of patient information, and holding consultations with patients.

In medicine, one of the main advantages of LLMs is their capacity to produce long-form text, which is necessary for giving thorough responses to patient inquiries. Accurate and informative responses are essential, particularly in medical situations where false information can have harmful effects. For instance, when a patient asks what causes a white tongue, the LLM must answer truthfully about possible causes, including bacterial accumulation, without spreading myths, such as the idea that the condition is invariably dangerous and irreversible.

Many scenarios in the medical domain call for comprehensive, extended answers. This is particularly important when answering patient inquiries, where the details given must be factual. To ensure the accuracy and consistency of these answers, an automated process for assessing the claims made by LLMs is required.

To address this, a team of researchers has in a recent study produced MedLFQA, a specialized benchmark dataset reconstructed from existing long-form question-answering datasets in the biomedical domain. The goal of MedLFQA is to make it easier to automatically assess the factual accuracy of long-form responses produced by LLMs.

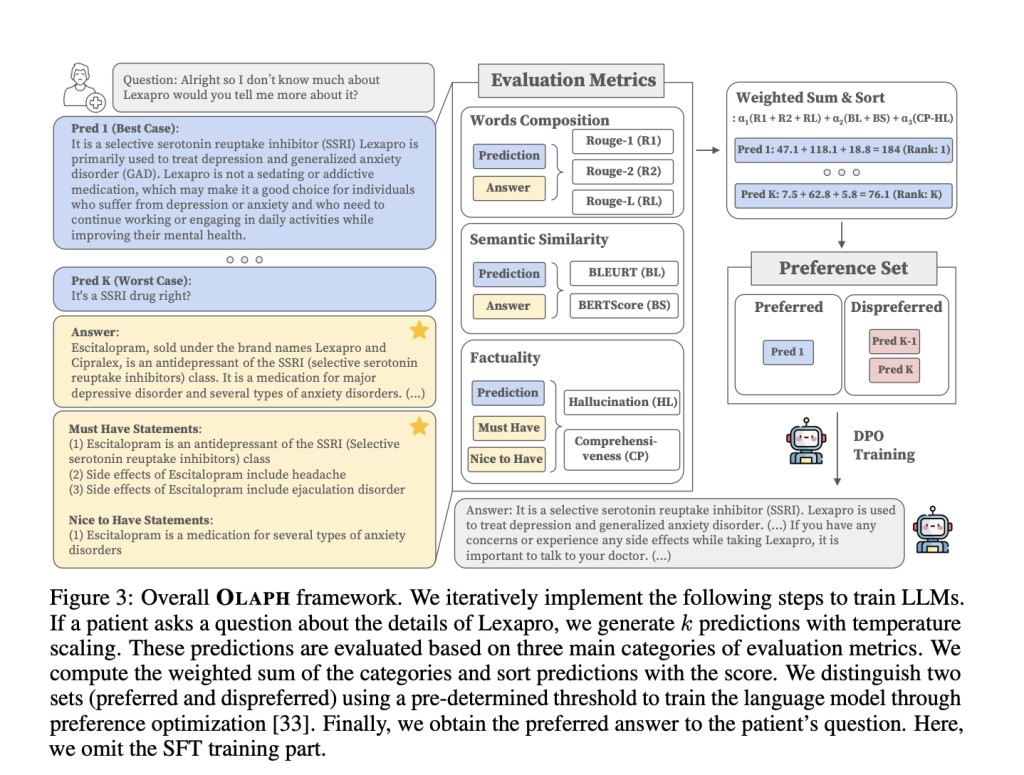

The team has also introduced a framework called OLAPH (Optimizing Large language models’ Answers with Preferences of reducing Hallucination). OLAPH uses a series of automated assessments to improve the factual accuracy of LLMs. The methodology uses an iterative training process to teach the LLM to favor the responses that score highest on factuality and the other evaluation metrics.

For each question, the OLAPH framework generates several response samples. Then, using predetermined assessment criteria, the response with the highest score is chosen. The LLM is then further trained on this preferred response, bringing its subsequent responses closer to the correct and preferred answers. This iterative approach helps to limit hallucinations, that is, cases where the model would otherwise produce false information.
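The sample-and-select step described above can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: `generate` and `score` are hypothetical callables standing in for the LLM sampler and the automatic evaluation metrics, and `num_samples` is an assumed parameter name.

```python
from typing import Callable, List, Tuple


def select_preferred(
    question: str,
    generate: Callable[[str], str],      # hypothetical: samples one answer from the LLM
    score: Callable[[str, str], float],  # hypothetical: automatic evaluation of (question, answer)
    num_samples: int = 4,
) -> Tuple[str, float]:
    """Sample several candidate answers and return the highest-scoring one with its score."""
    if num_samples < 1:
        raise ValueError("num_samples must be at least 1")
    candidates = [generate(question) for _ in range(num_samples)]
    scored = [(score(question, answer), answer) for answer in candidates]
    # Compare on the score only, so ties keep the earliest sampled answer.
    best_score, best_answer = max(scored, key=lambda pair: pair[0])
    return best_answer, best_score


def build_training_pairs(
    questions: List[str],
    generate: Callable[[str], str],
    score: Callable[[str, str], float],
    num_samples: int = 4,
) -> List[Tuple[str, str]]:
    """Collect (question, preferred answer) pairs for one further-training round."""
    return [
        (q, select_preferred(q, generate, score, num_samples)[0])
        for q in questions
    ]
```

In an iterative setup, the pairs returned by `build_training_pairs` would be used to further train the model, and the updated model would then serve as `generate` in the next round. The training step itself is omitted here because the article does not specify it.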

The results show considerable improvements in factual accuracy for LLMs trained with the OLAPH framework, even on evaluation metrics that were not used during training. A 7-billion parameter LLM trained with OLAPH produced long-form responses on par with professional medical responses in terms of quality.

The team has summarized their primary contributions as follows.

The team has released MedLFQA, a reconstructed benchmark dataset for automated assessment of long-form text generated by LLMs in the biomedical field.

To evaluate the veracity of medical claims made in long-form responses, the team has developed two distinct types of statements that together offer a comprehensive picture of an LLM’s capacity to produce accurate answers.

The OLAPH framework has been introduced, which improves LLM responses through iterative learning and automatic evaluation.

It has been demonstrated that LLMs with 7 billion parameters, when trained using the OLAPH framework, can produce long-form answers that are comparable in factual accuracy to those provided by medical experts.

In conclusion, this study proposes the OLAPH framework to improve long-form medical responses through iterative training, and it introduces MedLFQA as a benchmark for assessing the factual accuracy of such responses. The findings show that OLAPH has the potential to greatly improve the dependability of LLMs in producing accurate medical information, which could be crucial for a number of medical applications.

The post OLAPH: A Simple and Novel AI Framework that Enables the Improvement of Factuality through Automatic Evaluations appeared first on MarkTechPost.