Google Cloud AI researchers have introduced LANISTR to address the challenge of handling unstructured and structured data effectively and efficiently within a single framework. Handling multimodal data (language, images, and structured data) is increasingly important in machine learning. A key challenge is missing modalities in large-scale, unlabeled multimodal datasets that include structured data such as tables and time series. Traditional methods often struggle when one or more types of data are absent, which leads to suboptimal model performance.

Current methods for multimodal pre-training typically assume that all modalities are available during both training and inference, which is often not feasible in real-world scenarios. These methods include various forms of early and late fusion, in which data from different modalities is combined either at the feature level or at the decision level. However, these approaches are poorly suited to situations where some modalities are missing entirely or are incomplete.
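To make the distinction between the two fusion styles concrete, here is a minimal Python sketch of each, together with one common way each copes with a missing modality. The modality names, feature sizes, zero-filling, and weight renormalization are illustrative assumptions, not details taken from LANISTR or from any specific baseline.

```python
from typing import Dict, List, Optional

# Hypothetical modalities and per-modality feature sizes, for illustration only.
MODALITIES = ("text", "image", "tabular")
DIMS = {"text": 3, "image": 2, "tabular": 2}


def early_fusion(features: Dict[str, Optional[List[float]]]) -> List[float]:
    """Feature-level fusion: concatenate per-modality features into one vector.

    A missing modality (None or absent key) is zero-filled so the vector keeps
    its fixed size. This common workaround hands the downstream model an input
    pattern it may never have seen during training.
    """
    fused: List[float] = []
    for name in MODALITIES:
        vec = features.get(name)
        fused.extend(vec if vec is not None else [0.0] * DIMS[name])
    return fused


def late_fusion(scores: Dict[str, Optional[float]], weights: Dict[str, float]) -> float:
    """Decision-level fusion: weighted average of per-modality predictions.

    Missing modalities (None) are skipped and the remaining weights are
    renormalized, so the decision silently rests on fewer sources of evidence.
    """
    present = {name: s for name, s in scores.items() if s is not None}
    if not present:
        raise ValueError("no modality available")
    total_weight = sum(weights[name] for name in present)
    return sum(weights[name] * s for name, s in present.items()) / total_weight


if __name__ == "__main__":
    sample = {"text": [0.1, 0.2, 0.3], "image": None, "tabular": [1.0, 2.0]}
    print(early_fusion(sample))  # the two image slots become 0.0
    weights = {"text": 0.5, "image": 0.3, "tabular": 0.2}
    print(late_fusion({"text": 0.9, "image": None, "tabular": 0.3}, weights))
```

Neither workaround teaches the model anything about how the modalities relate to each other when one is absent, which is the gap the masking-based pre-training described below is meant to close.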

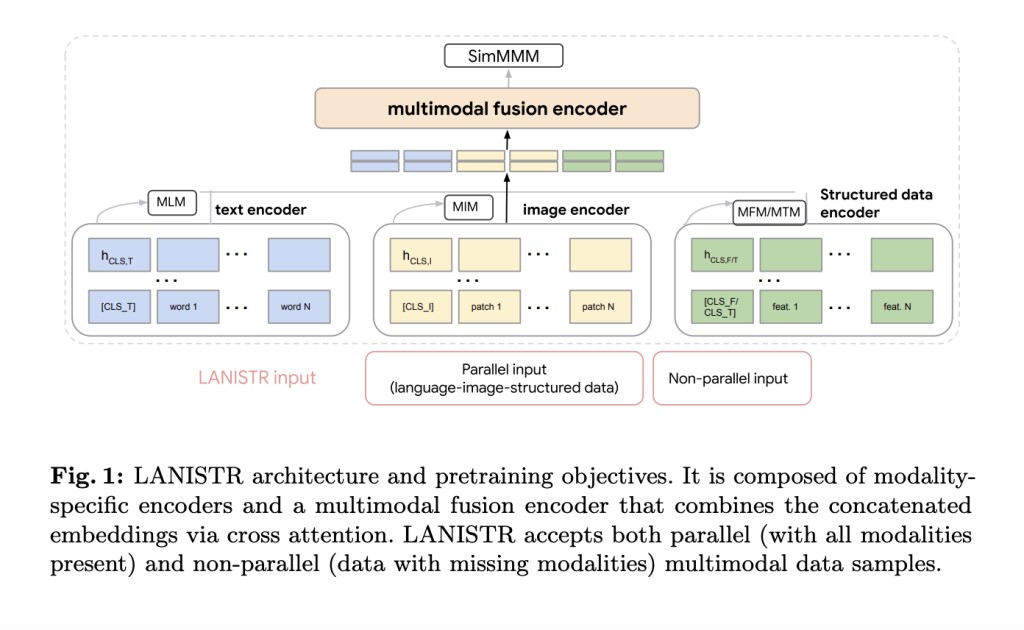

Google's LANISTR (Language, Image, and Structured Data Transformer) is a novel pre-training framework that combines unimodal and multimodal masking strategies into a robust pre-training objective designed to handle missing modalities. At its core is a similarity-based multimodal masking objective, which lets the model learn from the available data while making educated guesses about the missing modalities. The goal is to improve the adaptability and generalizability of multimodal models, particularly in scenarios with limited labeled data.

The LANISTR framework employs unimodal masking, in which parts of the data within each modality are masked during training. This forces the model to learn contextual relationships within the modality. For example, in text data, certain words might be masked, and the model learns to predict them from the surrounding words. In images, certain patches might be masked, and the model learns to infer them from the visible parts.
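The masking step itself can be sketched as follows. This is a generic illustration in the spirit of BERT-style masked language modeling and masked image modeling, not LANISTR's code: the `[MASK]` placeholder, the masking rates, the patch size, and the helper names `random_mask` and `to_patches` are all hypothetical choices.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")

MASK_TOKEN = "[MASK]"  # hypothetical placeholder token for masked words


def random_mask(items: Sequence[T], rate: float, fill: T,
                rng: random.Random) -> Tuple[List[T], List[int]]:
    """Replace about `rate` of the items (at least one) with `fill`.

    Returns the masked copy and the masked positions; a model would be trained
    to reconstruct the original items at those positions.
    Assumes `items` is non-empty and 0 < rate <= 1.
    """
    n_masked = max(1, round(rate * len(items)))
    positions = sorted(rng.sample(range(len(items)), n_masked))
    masked = list(items)
    for i in positions:
        masked[i] = fill
    return masked, positions


def to_patches(image: List[List[float]], size: int) -> List[List[float]]:
    """Cut an H x W image (H and W divisible by `size`) into flattened
    size x size patches, in row-major order."""
    height, width = len(image), len(image[0])
    return [
        [image[r][c] for r in range(top, top + size) for c in range(left, left + size)]
        for top in range(0, height, size)
        for left in range(0, width, size)
    ]


if __name__ == "__main__":
    rng = random.Random(0)
    words = "the model learns to predict masked words from context".split()
    print(random_mask(words, 0.25, MASK_TOKEN, rng))

    image = [[float(4 * r + c) for c in range(4)] for r in range(4)]
    patches = to_patches(image, 2)  # four 2 x 2 patches
    print(random_mask(patches, 0.5, [0.0] * 4, rng))
```

The same `random_mask` helper serves both modalities because, once an image is cut into patches, it is just another sequence of units to hide.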

Multimodal masking extends this concept by masking entire modalities. For instance, in a dataset containing text, images, and structured data, one or two modalities might be masked entirely at random during training, and the model is then trained to predict the masked modalities from the available ones. This is where the similarity-based objective comes into play: a similarity measure guides the model so that the representations it produces for the missing modalities remain coherent with the available data. The efficacy of LANISTR was evaluated on two real-world datasets: the Amazon Product Review dataset from the retail sector and the MIMIC-IV dataset from the healthcare sector.
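The two ingredients of multimodal masking, hiding whole modalities and scoring agreement with a similarity measure, can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: each modality is already encoded into a vector, the fusion step is a plain mean (a hypothetical stand-in for LANISTR's attention-based fusion), and the similarity measure is cosine similarity between the fused masked and unmasked views. None of the function names come from the LANISTR code.

```python
import math
import random
from typing import Dict, List, Set

MODALITIES = ("text", "image", "structured")


def sample_modality_mask(rng: random.Random, max_masked: int = 2) -> Set[str]:
    """Choose one to `max_masked` whole modalities to hide for this example.
    With three modalities and max_masked=2, at least one always stays visible."""
    k = rng.randint(1, max_masked)
    return set(rng.sample(MODALITIES, k))


def fuse(embeddings: Dict[str, List[float]], hidden: Set[str]) -> List[float]:
    """Toy stand-in for a multimodal fusion encoder: element-wise mean of the
    visible modality embeddings (hypothetical, not LANISTR's fusion module)."""
    visible = [vec for name, vec in embeddings.items() if name not in hidden]
    return [sum(col) / len(visible) for col in zip(*visible)]


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)


def similarity_loss(embeddings: Dict[str, List[float]], hidden: Set[str]) -> float:
    """1 - cos(fused view with `hidden` modalities masked, fused view of all modalities).

    Minimizing this pushes the representation built from partial input toward
    the one built from the full input. Siamese-style setups often treat the
    full view as a fixed target (stop-gradient); this toy has no gradients.
    """
    target = fuse(embeddings, set())
    prediction = fuse(embeddings, hidden)
    return 1.0 - cosine(prediction, target)


if __name__ == "__main__":
    rng = random.Random(42)
    embeddings = {
        "text": [0.9, 0.1, 0.0],
        "image": [0.7, 0.3, 0.1],
        "structured": [0.2, 0.8, 0.4],
    }
    hidden = sample_modality_mask(rng)
    print(sorted(hidden), round(similarity_loss(embeddings, hidden), 4))
```

Capping `max_masked` at 2 mirrors the "one or two modalities" described above and guarantees there is always some input from which to predict the hidden ones. In a trained model, the learned fusion encoder (not a fixed mean) is what the loss would shape.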

LANISTR also proved effective in out-of-distribution scenarios, in which the model encountered data distributions not seen during training. This robustness is crucial in real-world applications, where data variability is a common challenge. LANISTR achieved significant gains in accuracy and generalization even when only limited labeled data was available.

In conclusion, LANISTR addresses a critical problem in multimodal machine learning: missing modalities in large-scale unlabeled datasets. By combining unimodal and multimodal masking strategies with a similarity-based multimodal masking objective, LANISTR enables robust and efficient pre-training. The evaluation experiments demonstrate that LANISTR can learn effectively from incomplete data and generalize well to new, unseen data distributions, making it a valuable tool for advancing multimodal learning.


The post Google AI Propose LANISTR: An Attention-based Machine Learning Framework to Learn from Language, Image, and Structured Data appeared first on MarkTechPost.
