Language models are fundamental to natural language processing (NLP), focusing on generating and comprehending human language. These models are integral to applications such as machine translation, text summarization, and conversational agents, where the aim is to develop technology capable of understanding and producing human-like text. Despite their significance, the effective evaluation of these models remains an open challenge within the NLP community.

Researchers often encounter methodological challenges while evaluating language models, such as models’ sensitivity to different evaluation setups, difficulties in making proper comparisons across methods, and the lack of reproducibility and transparency. These issues can hinder scientific progress and lead to biased or unreliable findings in language model research, potentially affecting the adoption of new methods and the direction of future research.

Existing evaluation methods for language models often rely on benchmark tasks and automated metrics such as BLEU and ROUGE. These metrics offer advantages like reproducibility and lower costs compared to manual human evaluations. However, they also have notable limitations. For instance, while automated metrics can measure the overlap between a generated response and a reference text, they may not fully capture the nuances of human language or the correctness of the responses generated by the models.
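To make the limitation concrete, here is a minimal sketch of an overlap-based score. It is a simplified unigram precision, not the full BLEU or ROUGE definition, and the function name and example sentences are made up for illustration.

```python
from collections import Counter


def unigram_precision(candidate: str, reference: str) -> float:
    """Fraction of candidate words that also appear in the reference.

    Simplified stand-in for overlap metrics; real BLEU/ROUGE add
    higher-order n-grams, brevity penalties, and other details.
    """
    cand_tokens = candidate.lower().split()
    if not cand_tokens:
        return 0.0
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(cand_tokens)
    # Clip each candidate word count by how often it occurs in the reference.
    overlap = sum(min(count, ref_counts[tok]) for tok, count in cand_counts.items())
    return overlap / len(cand_tokens)


# Hypothetical example: a wrong answer that copies the reference wording
# versus a correct answer phrased differently.
reference = "the capital of france is paris"
wrong_but_similar = "the capital of france is lyon"
right_but_reworded = "paris serves as the french capital"

wrong_score = unigram_precision(wrong_but_similar, reference)
right_score = unigram_precision(right_but_reworded, reference)
print(f"wrong but similar:  {wrong_score:.2f}")
print(f"right but reworded: {right_score:.2f}")
```

In this toy case the factually wrong sentence scores higher than the correct paraphrase, which is the kind of gap between overlap and correctness described above.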

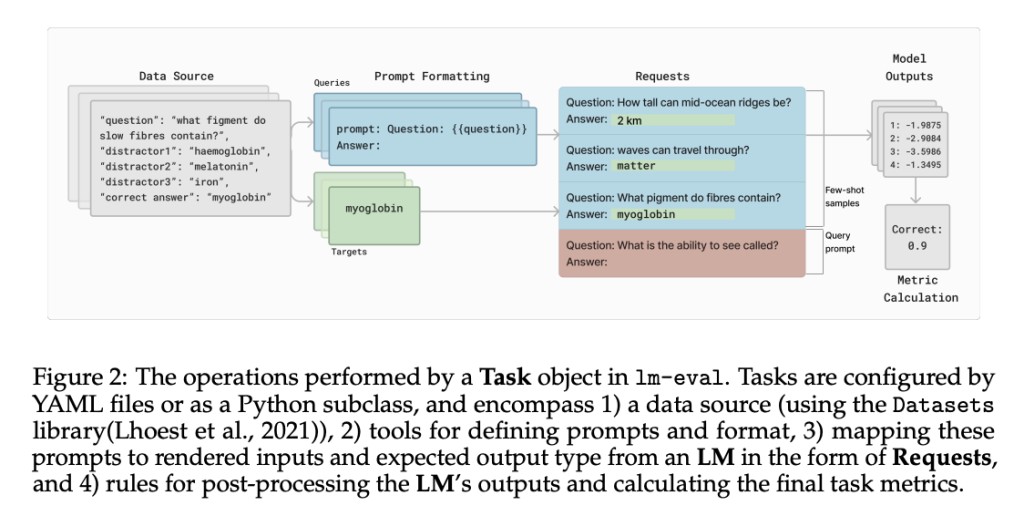

Researchers from EleutherAI and Stability AI, in collaboration with other institutions, introduced the Language Model Evaluation Harness (lm-eval), an open-source library designed to enhance the evaluation process. lm-eval aims to provide a standardized and flexible framework for evaluating language models. This tool facilitates reproducible and rigorous evaluations across various benchmarks and models, significantly improving the reliability and transparency of language model assessments.

The lm-eval tool integrates several key features to streamline the evaluation process. It allows for the modular implementation of evaluation tasks, enabling researchers to share and reproduce results more efficiently. The library supports multiple types of evaluation requests, such as conditional loglikelihoods, perplexities, and text generation, so that different aspects of a model’s capabilities can be assessed. For example, lm-eval can calculate the probability of given output strings based on provided inputs or measure the average loglikelihood of producing tokens in a dataset. These features make lm-eval a versatile tool for evaluating language models in different contexts.
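The two loglikelihood-based quantities mentioned above can be sketched in a few lines. This is not lm-eval's API: the bigram table below is a hypothetical toy model that conditions only on the previous token, and the function names are invented for illustration.

```python
import math

# Hypothetical toy "model": next-token probabilities keyed by the previous
# token. A real language model would condition on the full context.
TOY_BIGRAM_PROBS = {
    "the": {"cat": 0.5, "dog": 0.5},
    "cat": {"sat": 0.8, "ran": 0.2},
    "dog": {"sat": 0.4, "ran": 0.6},
}


def token_logprob(prev_token: str, token: str) -> float:
    """log P(token | prev_token) under the toy model."""
    return math.log(TOY_BIGRAM_PROBS[prev_token][token])


def conditional_loglikelihood(context: list[str], continuation: list[str]) -> float:
    """Sum of token log-probabilities over the continuation, given the context."""
    total = 0.0
    prev = context[-1]
    for tok in continuation:
        total += token_logprob(prev, tok)
        prev = tok
    return total


def perplexity(tokens: list[str]) -> float:
    """exp of the negative average loglikelihood per predicted token.

    The first token has no context in this toy setup, so it is not scored.
    """
    loglik = conditional_loglikelihood(tokens[:1], tokens[1:])
    return math.exp(-loglik / (len(tokens) - 1))


ll = conditional_loglikelihood(["the"], ["cat", "sat"])
ppl = perplexity(["the", "cat", "sat"])
print(f"loglikelihood of 'cat sat' given 'the': {ll:.4f}")
print(f"perplexity of 'the cat sat': {ppl:.4f}")
```

A multiple-choice task can be scored by computing the conditional loglikelihood of each answer option given the question and picking the highest, while perplexity summarizes how well the model predicts a whole text.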

Performance results from using lm-eval demonstrate its effectiveness in addressing common challenges in language model evaluation. The tool helps identify issues such as the dependence on minor implementation details, which can significantly impact the validity of evaluations. By providing a standardized framework, lm-eval ensures that researchers can perform evaluations consistently, regardless of the specific models or benchmarks used. This consistency is crucial for fair comparisons across different methods and models, ultimately leading to more reliable and accurate research outcomes.

lm-eval includes features supporting qualitative analysis and statistical testing, which are essential for thorough model evaluations. The library allows for qualitative checks of evaluation scores and outputs, helping researchers identify and correct errors early in the evaluation process. It also reports standard errors for most supported metrics, enabling researchers to perform statistical significance testing and assess the reliability of their results.
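As a rough illustration of why standard errors matter, the sketch below computes the standard error of a mean accuracy and applies a simple two-sided z-test to compare two models. This is one common textbook approach and may differ from how lm-eval computes its standard errors; the per-example scores are invented, and the test assumes the two score lists are independent samples.

```python
import math


def mean_and_stderr(scores: list[float]) -> tuple[float, float]:
    """Mean of per-example scores and the standard error of that mean."""
    n = len(scores)
    mean = sum(scores) / n
    # Unbiased sample variance (divides by n - 1).
    variance = sum((s - mean) ** 2 for s in scores) / (n - 1)
    return mean, math.sqrt(variance / n)


def difference_is_significant(mean_a: float, se_a: float,
                              mean_b: float, se_b: float,
                              z_threshold: float = 1.96) -> bool:
    """Approximate two-sided z-test at the 5% level for independent samples."""
    combined_se = math.sqrt(se_a ** 2 + se_b ** 2)
    return abs(mean_a - mean_b) > z_threshold * combined_se


# Hypothetical per-example correctness (1 = correct) for two models.
scores_a = [1, 1, 0, 1, 0, 1, 1, 1, 0, 1]
scores_b = [1, 0, 0, 1, 0, 0, 1, 0, 0, 1]

mean_a, se_a = mean_and_stderr(scores_a)
mean_b, se_b = mean_and_stderr(scores_b)
significant = difference_is_significant(mean_a, se_a, mean_b, se_b)
print(f"model A: {mean_a:.2f} +/- {se_a:.2f}")
print(f"model B: {mean_b:.2f} +/- {se_b:.2f}")
print(f"difference significant at 5%: {significant}")
```

With only ten examples per model, even a 30-point accuracy gap does not pass this test, which shows why a score reported without its standard error can be misleading.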

In conclusion, the key highlights of the research are:

Researchers face significant challenges in evaluating LLMs, including issues with models’ sensitivity to evaluation setups, difficulties in making proper comparisons across methods, and a lack of reproducibility and transparency in results.

The research draws on three years of experience evaluating language models to provide guidance and lessons for researchers. It highlights common challenges and best practices to improve the rigor and communication of results in the language modeling community.

Lastly, it introduces lm-eval, an open-source library designed to enable independent, reproducible, and extensible evaluation of language models. It addresses the identified challenges and improves the overall evaluation process.

The post EleutherAI Presents Language Model Evaluation Harness (lm-eval) for Reproducible and Rigorous NLP Assessments, Enhancing Language Model Evaluation appeared first on MarkTechPost.
