Transformers have reshaped natural language processing and driven progress across many applications. Despite their widespread use, their internal workings are still an active research topic. One less explored aspect is how close to linear the embedding transformations between intermediate layers are, a property with significant implications for further advances in the field.

Researchers from AIRI, Skoltech, SberAI, HSE University, and Lomonosov Moscow State University report a linear property of transformer decoders, observed across models such as GPT, LLaMA, OPT, and BLOOM. They identify a nearly perfect linear relationship in the embedding transformations between sequential layers, which challenges the conventional understanding of these models. Removing or linearly approximating the most linear blocks affects model performance only minimally, which prompted the development of depth-pruning algorithms and novel distillation techniques. In addition, a cosine-similarity-based regularization applied during pretraining improves benchmark performance and reduces layer linearity. Together, these results offer insights into more efficient transformer architectures that do not compromise effectiveness, a significant challenge in deployment.

Sparsity-based model pruning is a significant research focus in machine learning. Previous studies have explored methods such as backpropagation and fine-tuning to understand sparsity in convolutional neural networks, and techniques such as SquareHead distillation and WANDA have been developed to address the challenges of sparse fine-tuning for LLMs. Understanding the inner structure of transformer models has led to insights into their linear complexity. Building on this, the study investigates pruning techniques for LLMs that specifically leverage the linearity of decoder layers, with the aim of reducing model size efficiently while maintaining high performance on benchmark tasks.
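To make the idea of leveraging layer linearity more concrete, here is a minimal sketch of swapping a near-linear block for a fitted linear map. It is an illustration under stated assumptions, not the researchers' algorithm: `toy_block` is a hypothetical stand-in for a decoder layer, `fit_linear_replacement` is an illustrative helper name, and ordinary least squares on calibration inputs is assumed as the fitting method.

```python
import numpy as np

def fit_linear_replacement(layer_in, layer_out):
    """Least-squares fit of layer_out ~= layer_in @ weight + bias."""
    ones = np.ones((layer_in.shape[0], 1))
    design = np.hstack([layer_in, ones])  # append a bias column
    coef, *_ = np.linalg.lstsq(design, layer_out, rcond=None)
    return coef[:-1], coef[-1]  # weight (d_in, d_out), bias (d_out,)

def toy_block(x, w1, w2):
    """Hypothetical residual block: identity plus a small nonlinear update."""
    return x + 0.05 * np.tanh(x @ w1) @ w2

rng = np.random.default_rng(0)
dim = 8
w1 = rng.normal(size=(dim, dim))
w2 = rng.normal(size=(dim, dim))

# Fit the linear map on calibration inputs and the block's outputs.
calib = rng.normal(size=(2000, dim))
weight, bias = fit_linear_replacement(calib, toy_block(calib, w1, w2))

# Check how well the linear map reproduces the block on unseen inputs.
held_out = rng.normal(size=(500, dim))
target = toy_block(held_out, w1, w2)
approx = held_out @ weight + bias
rel_error = np.linalg.norm(approx - target) / np.linalg.norm(target)
print(f"relative error of the linear replacement: {rel_error:.4f}")
```

In this toy setting the block is dominated by its residual connection, so a single matrix and bias reproduce it closely. Whether the same holds for a real layer would have to be measured on that model's own activations.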

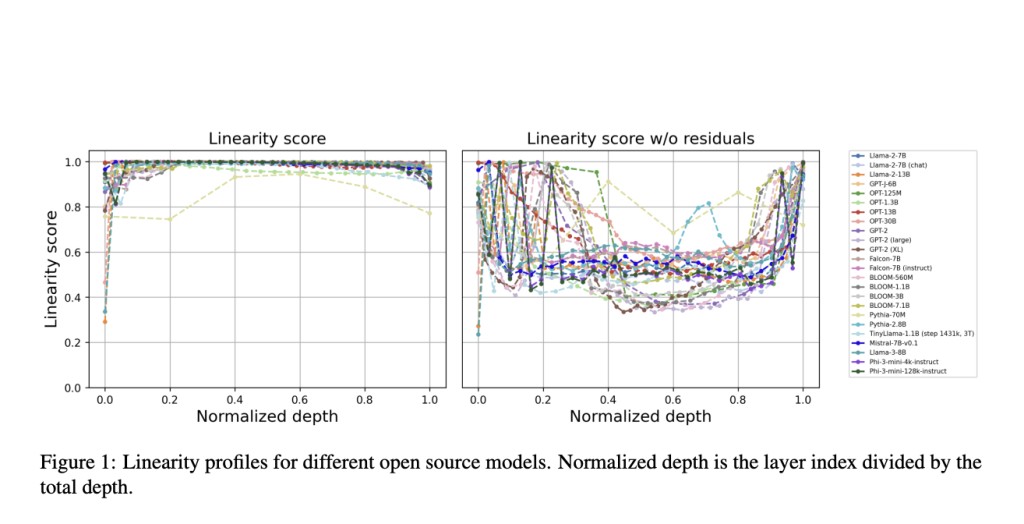

The researchers investigated the linearity and smoothness of transformations between sequential layers in transformer decoders. Using a metric derived from Procrustes similarity, they measured the degree of linear dependence between the sets of embeddings at consecutive layers. Surprisingly, all tested transformer decoders exhibited high linearity scores, indicating that the embedding transformations are strongly linear. The linearity dynamics differed between training stages, however: pretraining tended to decrease linearity, while fine-tuning for specific tasks increased it. This pattern held across diverse tasks and benchmarks, suggesting that task-specific fine-tuning reinforces and amplifies the linear characteristics of transformer models.
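The article does not spell out the metric, so the following is only a sketch of one Procrustes-style linearity score. It assumes that both embedding sets are centered and scaled to unit Frobenius norm, and that the score is one minus the smallest squared residual of a linear map from one set to the other. The function names and the synthetic data are illustrative.

```python
import numpy as np

def normalize(emb):
    """Center the embeddings and scale them to unit Frobenius norm."""
    centered = emb - emb.mean(axis=0, keepdims=True)
    return centered / np.linalg.norm(centered)

def linearity_score(x, y):
    """Return 1 - min_A ||X A - Y||_F^2 for normalized X and Y.

    A score of 1.0 means Y is an exact linear function of X.
    """
    x_n, y_n = normalize(x), normalize(y)
    a, *_ = np.linalg.lstsq(x_n, y_n, rcond=None)  # best linear map X -> Y
    residual = np.linalg.norm(x_n @ a - y_n) ** 2
    return 1.0 - residual

rng = np.random.default_rng(0)
x = rng.normal(size=(512, 16))   # stand-in for embeddings at one layer
w = rng.normal(size=(16, 16))

score_linear = linearity_score(x, x @ w)              # purely linear "layer"
score_nonlinear = linearity_score(x, np.tanh(x @ w))  # saturating "layer"
print(f"linear: {score_linear:.4f}, nonlinear: {score_nonlinear:.4f}")
```

Under these assumptions the score lies between 0 and 1, and the purely linear map scores higher than the saturating one. Applied to a real model, `x` and `y` would be the embeddings entering and leaving a given decoder layer.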

To understand and leverage this linearity, the researchers ran pretraining experiments with the Mistral architecture on carefully selected datasets. They introduced regularization terms that adjust the relationships between embeddings within transformer layers and observed significant improvements with a cosine-based term. This term encourages embeddings from sequential layers to converge, and it resulted in higher model performance. They also explored a pruning strategy that sequentially removes the most linear layers, replaces them with linear approximations, and adds a distillation loss to minimize performance degradation. This strategy reduces model size without significant loss in performance, particularly when the replacements are fine-tuned to mimic the original layers’ function.
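As a rough illustration of a cosine-based term of this kind, the sketch below penalizes the angle between embeddings of the same token at adjacent layers. The exact form used in the study is not given in the article, so the formula (a sum over layer pairs of the mean of one minus the cosine similarity), the `weight` parameter, and the function name are all assumptions.

```python
import numpy as np

def cosine_regularizer(hidden_states, weight=1.0, eps=1e-8):
    """Sum over adjacent layers of the per-token mean of (1 - cosine similarity).

    hidden_states: list of arrays, one per layer, each of shape (tokens, dim).
    """
    penalty = 0.0
    for prev, curr in zip(hidden_states[:-1], hidden_states[1:]):
        dot = np.sum(prev * curr, axis=-1)
        norms = np.linalg.norm(prev, axis=-1) * np.linalg.norm(curr, axis=-1)
        cos = dot / (norms + eps)        # cosine similarity per token
        penalty += np.mean(1.0 - cos)    # 0 when aligned, 2 when opposite
    return weight * float(penalty)

rng = np.random.default_rng(0)
h = rng.normal(size=(4, 16))

aligned = cosine_regularizer([h, 2.0 * h, 0.5 * h])  # same directions
opposed = cosine_regularizer([h, -h])                # flipped directions
print(f"aligned: {aligned:.6f}, opposed: {opposed:.6f}")
```

In a training loop, a term like this would be added to the language-modeling loss with a small weight. It depends only on directions, so rescaling a layer's embeddings leaves it unchanged.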

In conclusion, the study provides a comprehensive investigation of linearity in transformer decoders and reveals near-linear behavior across a range of models. The researchers observe a paradoxical effect: pretraining increases nonlinearity, while fine-tuning for specific tasks can reduce it. With the new pruning and distillation techniques, they show that transformer models can be made smaller without sacrificing performance. The cosine-based regularization applied during pretraining additionally improves model efficiency and performance on benchmarks. The study is limited to transformer decoders, however; encoder-only and encoder-decoder architectures, as well as the scalability of the proposed techniques to other models and domains, still require further exploration.

The post Unveiling the Hidden Linearity in Transformer Decoders: New Insights for Efficient Pruning and Enhanced Performance appeared first on MarkTechPost.
