Advances in AI have produced highly capable systems whose decisions are hard to explain, raising concerns about deploying untrustworthy AI in daily life and the economy. Understanding neural networks is vital for building trust, for addressing ethical concerns such as algorithmic bias, and for scientific applications that require model validation. Multilayer perceptrons (MLPs) are widely used but are less interpretable than attention layers. Model renovation aims to enhance interpretability by using specially designed components. Kolmogorov-Arnold Networks (KANs), which are based on the Kolmogorov-Arnold representation theorem, offer improved interpretability and accuracy. Recent work extends KANs to arbitrary widths and depths using B-splines, an approach known as Spl-KAN.

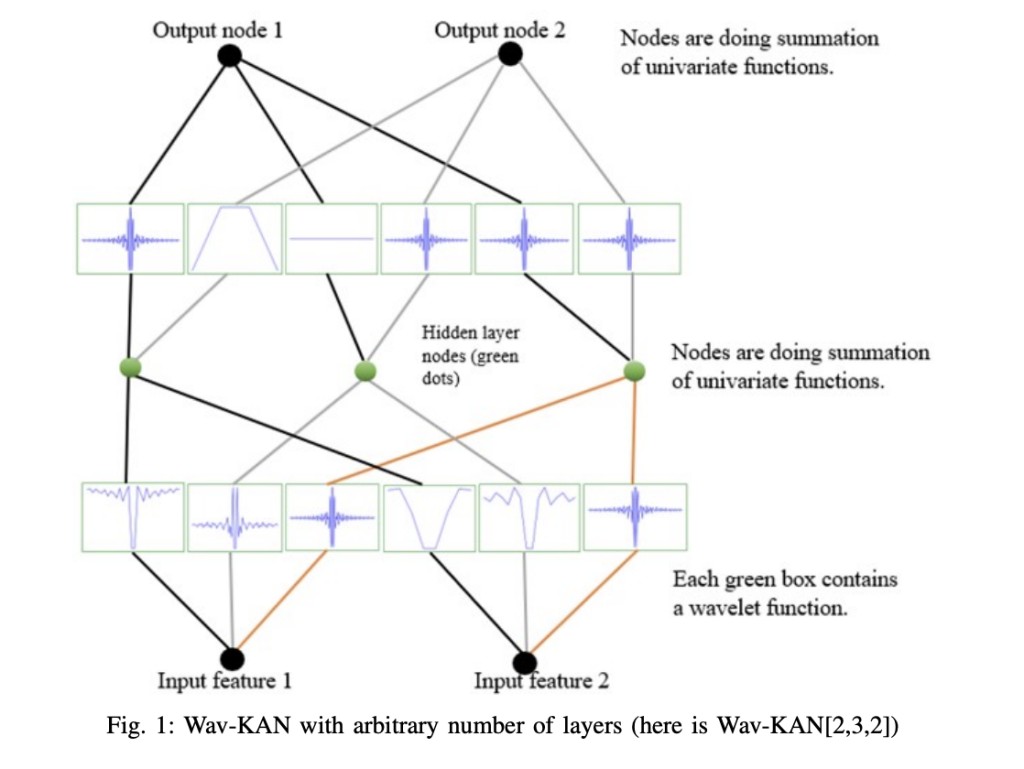

Researchers from Boise State University have developed Wav-KAN, a neural network architecture that enhances interpretability and performance by using wavelet functions within the KAN framework. Unlike traditional MLPs and Spl-KAN, Wav-KAN efficiently captures high- and low-frequency data components, improving training speed, accuracy, robustness, and computational efficiency. By adapting to the data structure, Wav-KAN avoids overfitting and enhances performance. This work demonstrates Wav-KAN’s potential as a powerful, interpretable neural network tool with applications across various fields and implementations in frameworks like PyTorch and TensorFlow.

Wavelets and B-splines are key methods for function approximation, each with distinct benefits and drawbacks in neural networks. B-splines offer smooth, locally controlled approximations but struggle with high-dimensional data. Wavelets excel at multi-resolution analysis and handle both high- and low-frequency content, which makes them well suited to feature extraction and efficient neural network architectures. According to the authors, Wav-KAN outperforms Spl-KAN and MLPs in training speed, accuracy, and robustness by using wavelets to capture the structure of the data without overfitting. Wav-KAN’s parameter efficiency and its independence from grid spaces make it a strong fit for complex tasks, and batch normalization is used to improve performance further.
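To make the multi-resolution idea concrete, here is a minimal sketch in plain Python. It uses the standard continuous-wavelet form, a mother wavelet that is scaled and translated, with the Mexican hat as the mother wavelet. The names `wavelet_atom`, `scale`, and `translation` are illustrative assumptions, not taken from the paper, and the 1/sqrt(scale) normalization is the textbook convention rather than something the article confirms Wav-KAN uses.

```python
import math


def mexican_hat(t):
    """Mexican hat (Ricker) mother wavelet with unit width.

    Sign conventions vary between references; this is the common
    (1 - t^2) form.
    """
    norm = 2.0 / (math.sqrt(3.0) * math.pi ** 0.25)
    return norm * (1.0 - t * t) * math.exp(-0.5 * t * t)


def wavelet_atom(x, scale, translation):
    """Scaled and shifted copy of the mother wavelet.

    A small scale gives a narrow atom that reacts to fine, high-frequency
    detail near `translation`; a large scale gives a wide atom that
    follows slow, low-frequency trends.
    """
    if scale <= 0:
        raise ValueError("scale must be positive")
    return mexican_hat((x - translation) / scale) / math.sqrt(scale)


if __name__ == "__main__":
    # Evaluate a narrow and a wide atom at the same point, 0.5 away
    # from their shared center.
    x = 0.5
    print("narrow atom:", wavelet_atom(x, scale=0.1, translation=0.0))
    print("wide atom:  ", wavelet_atom(x, scale=2.0, translation=0.0))
```

Half a unit from its center, the narrow atom has already decayed to almost nothing while the wide atom is still close to its peak. That difference in reach is what lets a set of atoms at several scales cover both sharp and smooth features of the same input.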

KANs are inspired by the Kolmogorov-Arnold representation theorem, which states that any continuous multivariate function on a bounded domain can be written as a finite sum of continuous univariate functions, each applied to a sum of univariate functions of the individual inputs. In KANs, instead of traditional weights and fixed activation functions, each “weight” is a learnable univariate function. This allows KANs to transform inputs through adaptable functions, which can yield more precise function approximation with fewer parameters. During training, these functions are optimized to minimize the loss function, improving the model’s accuracy and interpretability by learning the relationships in the data directly. KANs thus offer a flexible and efficient alternative to traditional neural networks.
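The idea of a learnable function on every edge can be sketched as a single layer's forward pass. The sketch below is a simplified illustration in plain Python, not the authors' implementation. The class name `WaveletKANLayer`, the per-edge parameters (`weight`, `scale`, `translation`), and their initial values are assumptions made for the example. Training, that is, updating these parameters by gradient descent, is left out.

```python
import math
import random


def mexican_hat(t):
    """Mexican hat (Ricker) mother wavelet, common (1 - t^2) form."""
    norm = 2.0 / (math.sqrt(3.0) * math.pi ** 0.25)
    return norm * (1.0 - t * t) * math.exp(-0.5 * t * t)


class WaveletKANLayer:
    """One KAN-style layer: each input-output edge has its own function.

    Edge (j, i) maps input i to a contribution to output j via
    weight * psi((x - translation) / scale). All three per-edge values
    would be learned in a real model; here they are only initialized
    (hypothetical defaults: random weight, scale 1, translation 0).
    """

    def __init__(self, n_in, n_out, seed=0):
        rng = random.Random(seed)
        self.n_in = n_in
        self.n_out = n_out
        self.weight = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)]
                       for _ in range(n_out)]
        self.scale = [[1.0] * n_in for _ in range(n_out)]
        self.translation = [[0.0] * n_in for _ in range(n_out)]

    def edge(self, j, i, x):
        """Univariate function attached to the edge from input i to output j."""
        t = (x - self.translation[j][i]) / self.scale[j][i]
        return self.weight[j][i] * mexican_hat(t)

    def forward(self, xs):
        """Each output is the plain sum of its incoming edge functions."""
        if len(xs) != self.n_in:
            raise ValueError("expected %d inputs" % self.n_in)
        return [sum(self.edge(j, i, xs[i]) for i in range(self.n_in))
                for j in range(self.n_out)]


if __name__ == "__main__":
    layer = WaveletKANLayer(n_in=3, n_out=2)
    print(layer.forward([0.1, -0.4, 0.7]))
```

Unlike an MLP layer, there is no shared activation applied after a weighted sum. The nonlinearity sits on the edges and the nodes only add, so each edge function can be inspected on its own as a one-dimensional curve.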

Experiments with the KAN model on the MNIST dataset using various wavelet transformations showed promising results. The study used 60,000 training and 10,000 test images, with wavelet types including Mexican hat, Morlet, Derivative of Gaussian (DOG), and Shannon. Wav-KAN and Spl-KAN both employed batch normalization and had a structure of [28*28,32,10] nodes. The models were trained with the AdamW optimizer and cross-entropy loss for 50 epochs, and each experiment was repeated over five trials. The results indicated that wavelets such as DOG and Mexican hat outperformed Spl-KAN by capturing essential features effectively while remaining robust to noise, which underlines how much the choice of wavelet matters.
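For reference, the four wavelet families named above can be written as short Python functions. These are common textbook forms and are an assumption here: the article does not give the formulas, and the paper's versions may differ in sign, normalization, or windowing. The Morlet center frequency `omega0 = 5.0` is likewise a conventional choice, not a value confirmed by the article.

```python
import math


def mexican_hat(t):
    """Mexican hat (Ricker): normalized second derivative of a Gaussian."""
    norm = 2.0 / (math.sqrt(3.0) * math.pi ** 0.25)
    return norm * (1.0 - t * t) * math.exp(-0.5 * t * t)


def morlet(t, omega0=5.0):
    """Real-valued Morlet: a cosine under a Gaussian envelope."""
    return math.cos(omega0 * t) * math.exp(-0.5 * t * t)


def dog(t):
    """First derivative of the Gaussian exp(-t^2 / 2)."""
    return -t * math.exp(-0.5 * t * t)


def _sinc(x):
    """Normalized sinc, sin(pi x) / (pi x), with sinc(0) = 1."""
    if x == 0.0:
        return 1.0
    return math.sin(math.pi * x) / (math.pi * x)


def shannon(t):
    """One common real Shannon wavelet: 2 sinc(2t) - sinc(t)."""
    return 2.0 * _sinc(2.0 * t) - _sinc(t)


if __name__ == "__main__":
    # Print each wavelet at a few sample points to compare their shapes.
    for name, psi in [("mexican_hat", mexican_hat), ("morlet", morlet),
                      ("dog", dog), ("shannon", shannon)]:
        print(name, [round(psi(t), 4) for t in (-1.0, 0.0, 0.5, 1.0)])
```

The shapes differ in ways that plausibly matter for the reported results: the Mexican hat and DOG decay quickly and have few oscillations, while the Morlet oscillates under its envelope and the Shannon wavelet decays slowly.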

In conclusion, Wav-KAN, a new neural network architecture, integrates wavelet functions into KAN to improve interpretability and performance. Wav-KAN captures complex data patterns using wavelets’ multiresolution analysis more effectively than traditional MLPs and Spl-KANs. Experiments show that Wav-KAN achieves higher accuracy and faster training speeds due to its unique combination of wavelet transforms and the Kolmogorov-Arnold representation theorem. This structure enhances parameter efficiency and model interpretability, making Wav-KAN a valuable tool for diverse applications. Future work will optimize the architecture further and expand its implementation in machine learning frameworks like PyTorch and TensorFlow.

Check out the Paper. All credit for this research goes to the researchers of this project.


The post Enhancing Neural Network Interpretability and Performance with Wavelet-Integrated Kolmogorov-Arnold Networks (Wav-KAN) appeared first on MarkTechPost.
