Change data capture (CDC) is the process of capturing changes to data from a database and publishing them to an event stream, making the changes available for other systems to consume. Amazon DynamoDB CDC offers a powerful mechanism for capturing, processing, and reacting to data changes in near real time. Whether you’re building event-driven applications, integrating with other services, implementing data analytics or machine learning (ML) models, or providing data consistency and compliance, CDC can be a valuable tool in your DynamoDB toolkit.

In DynamoDB, CDC is implemented using a streaming model, which allows applications to capture item-level changes in a DynamoDB table in near real time as a stream of data records. The CDC stream of data records enables applications to efficiently process and respond to the data modifications in the DynamoDB table. DynamoDB offers two streaming models for CDC: Amazon DynamoDB Streams and Amazon Kinesis Data Streams for DynamoDB.

In this post, we discuss both DynamoDB Streams and Kinesis Data Streams for DynamoDB. We start with an overview of DynamoDB Streams. Then we discuss why and when DynamoDB Streams can help you build event-driven applications and integrate with other services to derive actionable insights. We also provide an overview of Kinesis Data Streams for DynamoDB, with some scenarios where it might be the better choice. Finally, we conclude with a comparative summary of both options.

DynamoDB Streams

DynamoDB Streams captures a deduplicated, time-ordered sequence of item-level modifications in a table and stores this information in a log for up to 24 hours. Based on your DynamoDB Streams configuration, you can view the data items as they appear before and after they were modified. You can build applications that consume these stream events and invoke workflows based on the contents of the event stream.
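To make the "before and after" view concrete, the following is a minimal sketch of comparing the old and new item images in a single stream record. It assumes the stream is configured to include both images (the NEW_AND_OLD_IMAGES view type); the `changed_attributes` helper and the sample attribute names (`user_id`, `plan`) are hypothetical and only for illustration.

```python
from typing import Any, Dict


def changed_attributes(record: Dict[str, Any]) -> Dict[str, Dict[str, Any]]:
    """Return {attribute: {"old": ..., "new": ...}} for attributes that differ."""
    data = record.get("dynamodb", {})
    old_image = data.get("OldImage", {})  # not present for INSERT events
    new_image = data.get("NewImage", {})  # not present for REMOVE events
    changes: Dict[str, Dict[str, Any]] = {}
    for name in sorted(set(old_image) | set(new_image)):
        old_value = old_image.get(name)
        new_value = new_image.get(name)
        if old_value != new_value:
            changes[name] = {"old": old_value, "new": new_value}
    return changes


# Hypothetical MODIFY record in the DynamoDB stream record shape.
sample_record = {
    "eventName": "MODIFY",
    "dynamodb": {
        "Keys": {"user_id": {"S": "u-1"}},
        "OldImage": {"user_id": {"S": "u-1"}, "plan": {"S": "free"}},
        "NewImage": {"user_id": {"S": "u-1"}, "plan": {"S": "pro"}},
    },
}

print(changed_attributes(sample_record))
```

A consumer could use a diff like this to decide whether a change is worth acting on before invoking a downstream workflow.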

DynamoDB Streams is useful when you want to do the following:

Respond to data changes with triggers, using the native integration with AWS Lambda. Read requests made by Lambda-based consumers of DynamoDB Streams are available at no additional cost. Additionally, you can save costs on Lambda using event filtering with DynamoDB.

Track and analyze customer interactions, or monitor application performance in near real time. This can also benefit data warehousing or analytics use cases.

Capture ordered sequences of events, which benefits use cases in troubleshooting, debugging, or compliance mechanisms for supporting an audit trail. This is critical in many industries, such as ecommerce, financial services, and healthcare, to name a few.

Improve application resiliency through replicating item-level transactional data. This additionally provides mitigations for data availability concerns, in the event of Regional outages, deployment problems, or operational issues.

Example use case: Send a welcome email when a new user registers an account

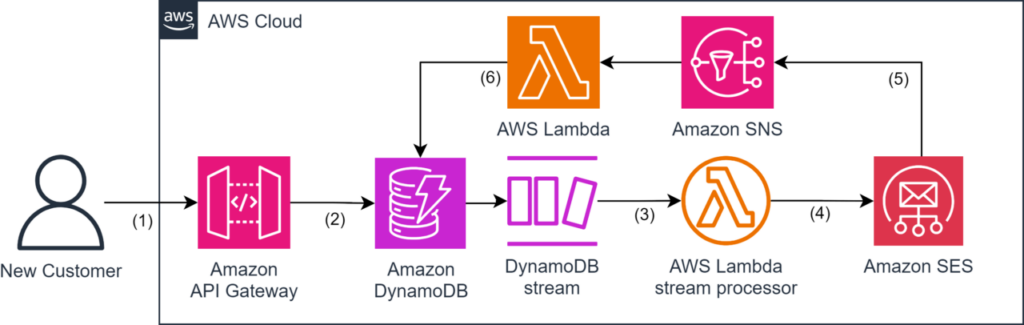

Let’s illustrate the first and second functions from the previous section with an example use case: you’re creating a web application, and you want to enable new users to register an account on your web application. After registering, the system should automatically invoke a welcome email to be sent to the new customer, and report the status of that welcome email delivery back to the database. The following diagram shows one way this could be built using AWS.

The workflow includes the following steps:

A new user signs up for a new account by providing their email address.

The PUT/POST request creates a new item in DynamoDB, generating a DynamoDB stream record.

A Lambda function filters the new user event from the DynamoDB stream for processing.

The Lambda function sends an email using Amazon Simple Email Service (Amazon SES) to the new user with welcome information.

Amazon SES reports the status of email delivery to Amazon Simple Notification Service (Amazon SNS), indicating a delivery success or failure.

A second Lambda function processes the message from the SNS topic and updates the delivery status for the newly registered user in the DynamoDB table.
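Steps 3 and 4 of this workflow can be sketched as follows. This is an illustration under stated assumptions, not the implementation behind the diagram: the `email` attribute name, the `process_new_users` function, and the injected `send_email` callable are all hypothetical. In a real Lambda function, the entry point would take `(event, context)` and `send_email` would wrap a call to Amazon SES; here we use an in-memory stand-in so the sketch runs on its own.

```python
from typing import Any, Callable, Dict, List

# Hypothetical attribute name; the post does not specify the table schema.
EMAIL_ATTRIBUTE = "email"


def process_new_users(
    event: Dict[str, Any],
    send_email: Callable[[str, str, str], None],
) -> List[str]:
    """Send a welcome email for each INSERT record; return the addresses used."""
    welcomed: List[str] = []
    for record in event.get("Records", []):
        # Only newly created items represent new registrations.
        if record.get("eventName") != "INSERT":
            continue
        new_image = record.get("dynamodb", {}).get("NewImage", {})
        email = new_image.get(EMAIL_ATTRIBUTE, {}).get("S")
        if not email:
            continue
        send_email(email, "Welcome!", "Thanks for registering.")
        welcomed.append(email)
    return welcomed


# In-memory stand-in for the email service, used only for this demo.
outbox: List[tuple] = []


def fake_send(to: str, subject: str, body: str) -> None:
    outbox.append((to, subject))


sample_event = {
    "Records": [
        {"eventName": "INSERT",
         "dynamodb": {"NewImage": {"email": {"S": "ana@example.com"}}}},
        {"eventName": "MODIFY",
         "dynamodb": {"NewImage": {"email": {"S": "bo@example.com"}}}},
    ]
}

welcomed = process_new_users(sample_event, fake_send)
print(welcomed)
```

With Lambda event filtering, the `eventName` check could instead be expressed as a filter pattern on the event source mapping, so that non-INSERT records never invoke the function.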

To extend this solution to use DynamoDB Streams for anomaly and fraud detection, refer to Anomaly detection on Amazon DynamoDB Streams using the Amazon SageMaker Random Cut Forest algorithm. For an example of how another customer built a fraud detection system with DynamoDB, see How Getir build a comprehensive fraud detection system using Amazon Neptune and Amazon DynamoDB.

Example use case: Worldwide competitive gaming application

For the third and fourth functions discussed earlier, let’s walk through a different example, which demonstrates replicating item-level changes across AWS Regions and capturing and processing item-level data changes in near real time. Consider a popular gaming application in which players compete worldwide on leaderboards with real-time player statistics and achievements, and where “first completion” breaks a tie between two players with the same score. For a sample data model for a gaming application, refer to Data modeling example. The following diagram shows how this can be built in AWS.

The workflow consists of the following steps:

Game players complete quests and add statistics about their game states through Amazon CloudFront and Amazon API Gateway, to their corresponding Regional gameplay endpoint.

A Lambda function handles and processes the API requests from API Gateway.

The Lambda function creates an item in DynamoDB, representing the player’s game state (game realm, Region, time of completion, and related quest statistics).

For each item created, updated, or deleted, a DynamoDB stream record is generated to represent the change in state.

The DynamoDB stream record is pushed to Amazon EventBridge Pipes as the source event.

The source event in EventBridge Pipes is published to an Amazon Simple Queue Service (Amazon SQS) queue target in a separate Region. This Region centralizes the global game state, worldwide quest completion order, and scoring leaderboards.

Another Lambda function processes the SQS messages received from all gameplay Regions.

The Lambda function also creates an item in DynamoDB, representing the Regional game state of the player (similar information to Step 3, but with additional metadata).

The Regional and global Lambda functions filter stream records based on noteworthy statistics and publish them to SNS topics. The SNS topics can be further integrated with website applications or in-game displays.

Another Lambda function publishes global player statistics and analytics to monitor and track in-game progression and performance.

This workflow illustrates an example use case where DynamoDB Streams can help scale a complex worldwide competitive gaming application. Each game session is persisted Regionally, but replicated globally; completion order can be tracked across all Regions, and an updated global leaderboard can notify players worldwide whenever a new record is set.
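The tie-breaking rule described for this game (higher score first, then earlier completion) can be sketched as a simple sort. The `Completion` type, its field names, and the sample players are hypothetical; the post does not define the global leaderboard schema.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Completion:
    player: str
    score: int
    completed_at: float  # completion time as epoch seconds (assumed unit)


def rank(completions: List[Completion]) -> List[Completion]:
    """Order by score (highest first); break ties by earliest completion."""
    return sorted(completions, key=lambda c: (-c.score, c.completed_at))


entries = [
    Completion("kai", 900, 1_700_000_300.0),
    Completion("lee", 950, 1_700_000_200.0),
    Completion("mia", 950, 1_700_000_100.0),  # same score as lee, finished first
]

for position, entry in enumerate(rank(entries), start=1):
    print(position, entry.player, entry.score)
```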

With DynamoDB Streams, the app can reliably capture stateful gameplay updates while maintaining the following:

Strict ordering of events via sequence numbers, accurately reflecting completion order across users Regionally and globally.

Automatic deduplication of stream records sent during brief connectivity issues, preventing incorrect ordering and inflated statistics. If the stream record consumer (in our example, a Lambda function) fails to report the event consumption, another consumer (in our example, another Lambda function) may pick up and consume the same event. For more information, refer to How do I make my Lambda function idempotent?

Near real-time replication, allowing leaderboards and profiles to notify and update new achievements.

High availability inherited from DynamoDB, providing uninterrupted updates during peak hours.

Seamless shard scaling to handle increasing volumes, with parallel record processing across auto-provisioned shards.
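Because a stream record can be delivered to a consumer more than once (for example, after a failed invocation is retried), the consumer itself should be idempotent. The following is a minimal sketch that skips records it has already applied, keyed on the record's `eventID`, and applies a batch in `SequenceNumber` order. The `IdempotentConsumer` class is hypothetical, and the in-memory set is an assumption made to keep the example self-contained; a real consumer would need durable storage for the IDs it has processed.

```python
from typing import Any, Dict, List, Set


class IdempotentConsumer:
    """Applies each stream record at most once, in sequence-number order."""

    def __init__(self) -> None:
        self._seen: Set[str] = set()  # stand-in for a durable store

    def process(self, records: List[Dict[str, Any]]) -> List[str]:
        """Apply unseen records; return the event IDs applied by this call."""
        applied: List[str] = []
        # Sequence numbers are numeric strings, so compare them as integers.
        ordered = sorted(records, key=lambda r: int(r["dynamodb"]["SequenceNumber"]))
        for record in ordered:
            event_id = record["eventID"]
            if event_id in self._seen:
                continue  # already handled, possibly by an earlier attempt
            self._seen.add(event_id)
            applied.append(event_id)  # the real side effect would go here
        return applied


batch = [
    {"eventID": "a", "dynamodb": {"SequenceNumber": "300"}},
    {"eventID": "b", "dynamodb": {"SequenceNumber": "100"}},
    {"eventID": "a", "dynamodb": {"SequenceNumber": "300"}},  # redelivery
]

consumer = IdempotentConsumer()
first_pass = consumer.process(batch)
second_pass = consumer.process(batch)  # simulates a retried batch
print(first_pass, second_pass)
```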

By using DynamoDB Streams, the gaming app delivers a consistently responsive, real-time leaderboard experience as Regional and worldwide popularity grows, without operational overhead, on both mobile and desktop gaming devices.

Additional use case: Cross-service data replication and integration using DynamoDB Streams

DynamoDB Streams can also help you integrate with other AWS services, such as Amazon Managed Streaming for Apache Kafka (Amazon MSK) or Amazon Neptune, or with other integration targets through EventBridge Pipes for advanced processing, such as AWS Step Functions or Amazon SageMaker. This enables an additional use case for DynamoDB Streams: complex queries, analytics, and searches over petabyte-scale datasets. The following diagram shows a visualization of this workflow.

The workflow includes the following steps:

Various types of data are published as items into DynamoDB. Each new change is captured as an event in the DynamoDB stream.

The DynamoDB stream event invokes the Lambda function.

Alternatively, the DynamoDB stream event can act as a source when integrating with EventBridge Pipes.

The Lambda function translates the stream record into the destination target format and publishes the change to the intended service target.

Alternatively, the stream event can be transmitted through EventBridge Pipes to an integration target, such as Step Functions or SageMaker, for complex processing.
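The translation step in this workflow usually starts by converting DynamoDB's typed attribute values (such as `{"S": "..."}` or `{"N": "..."}`) into plain values that the target service understands. The following is a minimal sketch of that conversion. The `unmarshal` and `to_target_document` helpers and the output shape are hypothetical, and binary types are deliberately left out to keep the example short.

```python
from decimal import Decimal
from typing import Any, Dict


def unmarshal(attribute_value: Dict[str, Any]) -> Any:
    """Convert one DynamoDB-typed attribute value into a plain Python value."""
    (type_tag, value), = attribute_value.items()
    if type_tag == "S":
        return value
    if type_tag == "N":
        return Decimal(value)  # numbers arrive as strings
    if type_tag == "BOOL":
        return value
    if type_tag == "NULL":
        return None
    if type_tag == "L":
        return [unmarshal(item) for item in value]
    if type_tag == "M":
        return {key: unmarshal(item) for key, item in value.items()}
    if type_tag == "SS":
        return set(value)
    if type_tag == "NS":
        return {Decimal(item) for item in value}
    raise ValueError(f"Unsupported attribute type in this sketch: {type_tag}")


def to_target_document(record: Dict[str, Any]) -> Dict[str, Any]:
    """Build a hypothetical target-format document from a stream record."""
    data = record["dynamodb"]
    return {
        "operation": record["eventName"],
        "key": {k: unmarshal(v) for k, v in data["Keys"].items()},
        "document": {k: unmarshal(v) for k, v in data.get("NewImage", {}).items()},
    }


sample_record = {
    "eventName": "INSERT",
    "dynamodb": {
        "Keys": {"id": {"S": "item-1"}},
        "NewImage": {
            "id": {"S": "item-1"},
            "views": {"N": "42"},
            "tags": {"L": [{"S": "new"}, {"S": "featured"}]},
        },
    },
}

print(to_target_document(sample_record))
```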

Using DynamoDB Streams to build big data analytics pipelines that enable cross-service data replication and integration using your existing data infrastructure can simplify your information architecture. This can enhance your ability to handle complex workloads, create scalable applications, and process analytics, queries, or searches across massive datasets that contain large files.

Kinesis Data Streams for Amazon DynamoDB

In the previous section, we introduced examples of when using DynamoDB Streams can help you. You should use Kinesis Data Streams for DynamoDB in the following use cases:

You want to integrate with the broader Kinesis ecosystem (such as the Kinesis Client Library, Amazon Managed Service for Apache Flink, or Amazon Data Firehose) for aggregating changelog events from other services

You require longer data retention and replayability (up to 365 days)

You require customized shard management

You require enhanced fanout for downstream consumption and streaming analytics, or for lowest latency with dedicated read throughput for all shards

When using Kinesis Data Streams for DynamoDB with Firehose, you can ingest DynamoDB data into a variety of external applications, such as Amazon Simple Storage Service (Amazon S3), Amazon Redshift, or Amazon OpenSearch Service. For more information about possible destinations, see Destination Settings.

Unlike DynamoDB Streams, Kinesis Data Streams for DynamoDB provides neither record ordering nor deduplication. Client applications must implement both, using the ApproximateCreationDateTime field in the item-level record. In exchange, Kinesis Data Streams for DynamoDB offers flexibility: you can provision and monitor shards manually, and up to 20 consumers can process stream events concurrently, because stream records are available to be consumed immediately after they are written.
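As a rough illustration of what client-side ordering and deduplication might look like, the following sketch drops records that share the same item key, event name, and ApproximateCreationDateTime, then sorts what remains by that timestamp. This heuristic is an assumption, not a prescribed method: two distinct changes to the same item within the timestamp's precision would be treated as duplicates, so adapt the fingerprint to your data. The `order_and_dedupe` function name is hypothetical.

```python
import json
from typing import Any, Dict, List, Set, Tuple


def order_and_dedupe(records: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Drop likely duplicates, then order by ApproximateCreationDateTime."""
    seen: Set[Tuple[str, Any, str]] = set()
    unique: List[Dict[str, Any]] = []
    for record in records:
        data = record["dynamodb"]
        fingerprint = (
            json.dumps(data["Keys"], sort_keys=True),  # which item changed
            data["ApproximateCreationDateTime"],       # when it changed
            record["eventName"],                       # how it changed
        )
        if fingerprint in seen:
            continue
        seen.add(fingerprint)
        unique.append(record)
    return sorted(unique, key=lambda r: r["dynamodb"]["ApproximateCreationDateTime"])


key = {"id": {"S": "order-1"}}
modify = {"eventName": "MODIFY",
          "dynamodb": {"Keys": key, "ApproximateCreationDateTime": 2000}}
insert = {"eventName": "INSERT",
          "dynamodb": {"Keys": key, "ApproximateCreationDateTime": 1000}}

# Records arrive out of order, and the MODIFY is delivered twice.
cleaned = order_and_dedupe([modify, insert, dict(modify)])
print([r["eventName"] for r in cleaned])
```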

Example use case: Process and analyze analytics data using Kinesis Data Streams for DynamoDB

We can demonstrate this with an example customer use case: an ecommerce platform needs to process and analyze clickstream data from various sources (web and mobile applications, IoT devices) in real time to gain insights into user behaviors, detect anomalies, and perform targeted marketing campaigns.

The following diagram demonstrates an example workflow for delivering analytics data from DynamoDB to a stream processor using Kinesis Data Streams, where it is aggregated and processed, then delivered to analytics storage and made available for big data querying and further analysis.

The workflow includes the following steps:

Clickstream data, customer behavior and session data, and order data are ingested using any ingestion proxy for DynamoDB (such as API Gateway). If the clickstream data schema is the same, multiple inputs can feed into the same DynamoDB table.

Item-level changes are published from the DynamoDB table into the Kinesis data stream.

Kinesis Data Streams sends the records to a stream processor (Firehose, Amazon MSK, Managed Service for Apache Flink) for storage.

The stream processor aggregates and stores the processed data into a data lake for further business intelligence (BI) and additional analysis.
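To give a feel for the aggregation step, the following sketch decodes a batch of Kinesis records in the shape a Lambda consumer receives them (base64-encoded `data` under each record's `kinesis` key) and counts changes by table and event type. The managed stream processors named above do this work for you; this is only an illustration. The `count_changes` and `encode` helpers and the `Clickstream` table name are hypothetical, and the `tableName` and `eventName` fields reflect our understanding of the change record payload, so verify them against your own stream.

```python
import base64
import json
from collections import Counter
from typing import Any, Dict


def count_changes(event: Dict[str, Any]) -> Counter:
    """Count change records per (table name, event name) in one batch."""
    counts: Counter = Counter()
    for record in event.get("Records", []):
        payload = base64.b64decode(record["kinesis"]["data"])
        change = json.loads(payload)
        counts[(change["tableName"], change["eventName"])] += 1
    return counts


def encode(change: Dict[str, Any]) -> str:
    """Demo helper: encode a change record the way Kinesis delivers it."""
    return base64.b64encode(json.dumps(change).encode("utf-8")).decode("ascii")


sample_event = {
    "Records": [
        {"kinesis": {"data": encode({"tableName": "Clickstream", "eventName": "INSERT"})}},
        {"kinesis": {"data": encode({"tableName": "Clickstream", "eventName": "INSERT"})}},
        {"kinesis": {"data": encode({"tableName": "Clickstream", "eventName": "MODIFY"})}},
    ]
}

counts = count_changes(sample_event)
print(dict(counts))
```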

This workflow illustrates an example use case where Kinesis Data Streams for DynamoDB can help manage the processing of multiple different types of customer behavior data (clickstream, session, and order information) using the broader Kinesis analytics ecosystem to analyze large amounts of real-time customer data at scale. To learn more about how to stream DynamoDB data into a centralized data lake using Kinesis Data Streams, see Streaming Amazon DynamoDB data into a centralized data lake.

Summary of streaming options for change data capture

As discussed in this post, DynamoDB offers two streaming models for change data capture: DynamoDB Streams and Kinesis Data Streams for DynamoDB. The following table shows a comparative summary between the streaming models.

| Properties | DynamoDB Streams | Kinesis Data Streams for DynamoDB |
| --- | --- | --- |
| Data retention | 24 hours. | 24 hours (by default), up to 1 year. |
| Number of consumers | Up to two simultaneous consumers per shard. | Up to 5 shared-throughput consumers per shard, or up to 20 simultaneous consumers per shard with enhanced fan-out. |
| Throughput quotas | Subject to throughput quotas by DynamoDB table and Region. | Unlimited. |
| Stream processing options | Process stream records using Lambda or DynamoDB Streams Kinesis adapter. | Process stream records using Lambda, Managed Service for Apache Flink, Firehose, or AWS Glue streaming ETL. |
| Kinesis Client Library (KCL) support | Supports KCL version 1.X. | Supports KCL versions 1.X and 2.X. |
| Record delivery model | Pull model over HTTP using GetRecords. | Pull model over HTTP using GetRecords. With enhanced fan-out, Kinesis Data Streams pushes the records over HTTP/2 by using SubscribeToShard. |
| Ordering of records | For each item that is modified in a DynamoDB table, the stream records appear in the same sequence as the actual modifications to the item. | The client must write application code to compare the ApproximateCreationDateTime timestamp attribute on each stream record to identify the actual order in which changes occurred in the DynamoDB table. |
| Duplicate records | No duplicate records appear in the stream. | Duplicate records might occasionally appear in the stream. If needed, clients must write application logic for filtering. |
| Shard management | Automated, not user-configurable. | Two options: provisioned (self-managed) or on-demand (automatically managed). |
| Available metrics | ReplicationLatency (global tables), ReturnedBytes, ReturnedRecordsCount, SuccessfulRequestLatency, SystemErrors, UserErrors | IncomingBytes, IncomingRecords, OutgoingBytes, OutgoingRecords, WriteProvisionedThroughputExceeded, ReadProvisionedThroughputExceeded, AgeOfOldestUnreplicatedRecord, FailedToReplicateRecordCount, ThrottledPutRecordCount |
| Cost | Every month, the first 2.5 million read request units are free; $0.02 per 100,000 read request units thereafter. These costs do not apply when using Lambda as the consumer. | $0.10 per million change data capture units (for each write, up to 1 KB), in addition to Kinesis Data Streams charges. |
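The cost figures above can be turned into a back-of-the-envelope estimate. The following sketch uses only the prices quoted in this post, which may differ by Region or change over time. It assumes that a write larger than 1 KB consumes one change data capture unit per 1 KB (rounded up), and it ignores the separate Kinesis Data Streams charges. All function and constant names are hypothetical.

```python
import math

# Prices as quoted in this post; check current pricing for your Region.
FREE_READ_REQUEST_UNITS = 2_500_000
PRICE_PER_100K_READ_REQUEST_UNITS = 0.02
PRICE_PER_MILLION_CDC_UNITS = 0.10


def streams_read_cost(read_request_units: int, lambda_consumer: bool = False) -> float:
    """Monthly DynamoDB Streams read cost in USD."""
    if lambda_consumer:
        return 0.0  # reads by Lambda-based consumers are not charged
    billable = max(0, read_request_units - FREE_READ_REQUEST_UNITS)
    return billable / 100_000 * PRICE_PER_100K_READ_REQUEST_UNITS


def cdc_units_for_write(item_size_bytes: int) -> int:
    """Change data capture units for one write (assumed: 1 unit per 1 KB)."""
    return max(1, math.ceil(item_size_bytes / 1024))


def kinesis_cdc_cost(cdc_units: int) -> float:
    """Monthly change data capture cost in USD, excluding Kinesis charges."""
    return cdc_units / 1_000_000 * PRICE_PER_MILLION_CDC_UNITS


# Example: 10 million stream reads, and 50 million writes of 2.5 KB items.
reads_cost = streams_read_cost(10_000_000)
units = 50_000_000 * cdc_units_for_write(2560)
capture_cost = kinesis_cdc_cost(units)
print(f"DynamoDB Streams reads: ${reads_cost:.2f}")
print(f"Change data capture units: {units:,} -> ${capture_cost:.2f}")
```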

Conclusion

In this post, we discussed CDC in DynamoDB and explored the two streaming models it offers: DynamoDB Streams and Kinesis Data Streams for DynamoDB. We also examined their different capabilities and illustrated the optimal use cases for each. We briefly discussed integration patterns between DynamoDB Streams and other AWS services like Amazon MSK and Neptune, and using Kinesis Data Streams to tap into the broader Kinesis analytics ecosystem.

Both options offer rich capabilities to track item-level changes, process and analyze streaming data to derive actionable business insights, and power next-generation applications. Select the option that best aligns with your application requirements for deduplication, ordering, and downstream consumption and integrations.

To learn more, refer to Change data capture for DynamoDB Streams and Using Kinesis Data Streams to capture changes to DynamoDB.

About the Author

Michael Shao is the Senior Developer Advocate for Amazon DynamoDB. Michael spent the last 8 years as a Software Engineer at Amazon Retail, with a background in designing and building scalable, resilient software systems. His expertise in exploratory, iterative development helps drive his passion for helping AWS customers understand complex technical topics. With a strong technical foundation in system design and building software from the ground up, Michael’s goal is to empower and accelerate the success of the next generation of developers.
