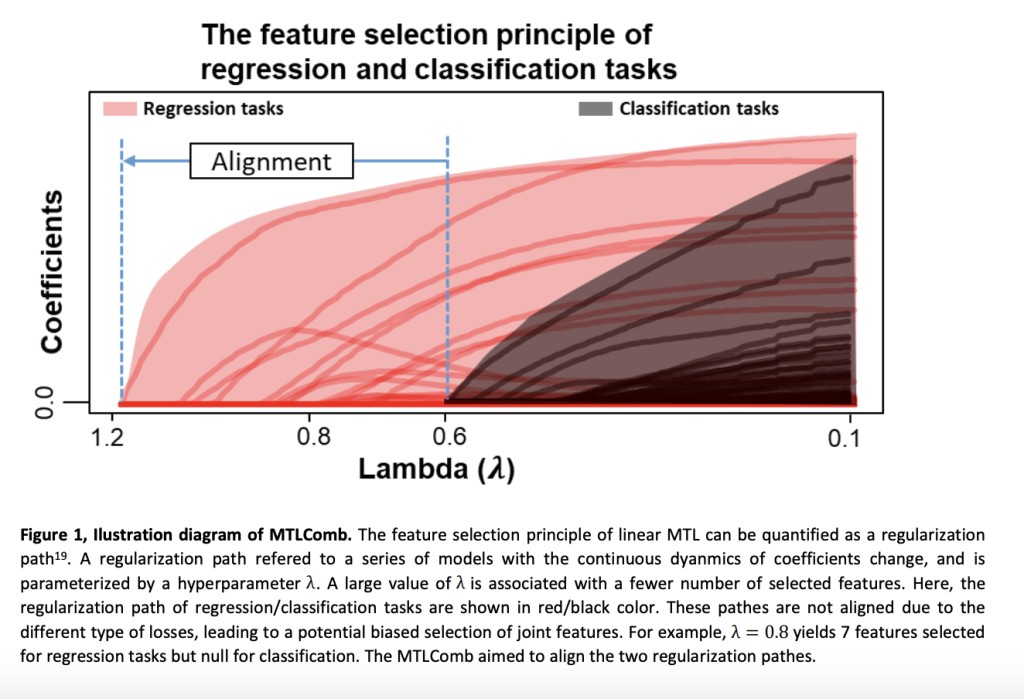

In machine learning, multi-task learning (MTL) has emerged as a powerful paradigm that enables concurrent training of multiple interrelated algorithms. By exploiting the connections between tasks, MTL learns a shared representation, which can improve a model’s generalizability. MTL has been applied successfully in domains such as biomedicine, computer vision, natural language processing, and internet engineering. However, incorporating mixed types of tasks, such as regression and classification, into a unified MTL framework poses significant challenges. One of the primary hurdles is the misalignment of the regularization paths of regression and classification tasks. A regularization path describes which features a task selects as the regularization strength changes, so when the paths are misaligned, joint feature selection becomes biased and performance suffers.

This misalignment arises due to the divergent magnitudes of losses associated with different task types. As illustrated in Figure 1, when the regularization parameter λ is varied, the subsets of selected features for regression and classification tasks can differ substantially, leading to biased joint feature selection. For instance, in the figure, when λ = 0.8, seven features are selected for regression tasks, while none are selected for classification tasks.

To tackle this challenge, researchers from Heidelberg University have introduced MTLComb, a novel MTL algorithm for joint feature selection across mixed regression and classification tasks. At its core, MTLComb employs a provable loss weighting scheme that analytically determines the optimal weights for balancing regression and classification tasks, mitigating the otherwise biased feature selection.

The intuition underlying MTLComb is deceptively simple. Consider the least-squares loss of a regression problem weighted by $\alpha$, $\min_w \alpha \lVert Y - Xw \rVert_2^2$, for which the authors give the solution as $w = \alpha (X^\top X)^{-1} X^\top Y$. This implies that the magnitude of $w$ can be adjusted by $\alpha$, leading to a movable regularization path. Extending this intuition to multiple types of losses, MTLComb finds weights for the different losses that align their feature selection principles.
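The "movable regularization path" can be illustrated numerically. The sketch below is not the authors' code: it assumes a single regression task, a 1/n normalization of the loss, and a plain L1 penalty as a stand-in for the sparsity term. The function name `lam_max_weighted_lasso` is hypothetical. Under these assumptions, the gradient of the weighted loss scales with the weight, so the largest useful λ (the point where all coefficients become zero) scales with it too.

```python
import numpy as np

def lam_max_weighted_lasso(X, y, alpha):
    """Smallest lambda for which w = 0 minimizes
    alpha * (1/n) * ||y - X w||_2^2 + lambda * ||w||_1.

    w = 0 is optimal exactly when the largest absolute entry of the
    loss gradient at zero does not exceed lambda.
    """
    n = X.shape[0]
    grad_at_zero = -2.0 * alpha / n * (X.T @ y)
    return float(np.max(np.abs(grad_at_zero)))

# Synthetic data, for illustration only.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 10))
y = 2.0 * X[:, 0] + rng.normal(scale=0.1, size=50)

base = lam_max_weighted_lasso(X, y, alpha=1.0)
halved = lam_max_weighted_lasso(X, y, alpha=0.5)
print(f"lam_max with weight 1.0: {base:.4f}")
print(f"lam_max with weight 0.5: {halved:.4f}")
```

Halving the loss weight halves the λ at which features start to enter the model, which is the sense in which a loss weight shifts the whole path along the λ axis.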

The researchers proved in Proposition 1 that the constant weights used in MTLComb are optimal. The formulation of MTLComb is shown in equation (1):

$$\min_W \; 2 \times Z(W) + 0.5 \times R(W) + \lambda \lVert W \rVert_{2,1} + \alpha \lVert WG \rVert_2^2 + \beta \lVert W \rVert_2^2 \tag{1}$$

where $Z(W)$ is the logit loss to fit the classification tasks, and $R(W)$ is the least-squares loss to fit the regression tasks. The term $\lVert W \rVert_{2,1}$ is a sparse penalty term to promote joint feature selection, $\lVert WG \rVert_2^2$ is the mean-regularized term to promote the selection of features with similar cross-task coefficients, and $\lVert W \rVert_2^2$ aims to select correlated features and stabilize numerical solutions.
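To make the roles of the five terms concrete, here is a minimal sketch of how the objective in equation (1) could be evaluated. Several details are assumptions rather than facts from the article: W is stored as a features-by-tasks matrix with classification tasks first, class labels are coded as -1/+1, each loss is averaged over its task's samples, and G is taken to be the centering matrix I - (1/T)·11ᵀ, so that WG measures each coefficient's deviation from its cross-task mean. The function name `mtlcomb_objective` is hypothetical.

```python
import numpy as np

def mtlcomb_objective(W, X_cls, Y_cls, X_reg, Y_reg, lam, alpha, beta):
    """Evaluate a sketch of equation (1).

    W      : (p, T) coefficient matrix, classification columns first.
    X_cls  : list of (n_t, p) design matrices for classification tasks.
    Y_cls  : list of label vectors in {-1, +1} (assumed coding).
    X_reg  : list of (n_t, p) design matrices for regression tasks.
    Y_reg  : list of real-valued target vectors.
    """
    p, T = W.shape
    n_cls = len(X_cls)

    # Z(W): logit loss, summed over classification tasks.
    Z = 0.0
    for t in range(n_cls):
        margins = Y_cls[t] * (X_cls[t] @ W[:, t])
        Z += np.mean(np.logaddexp(0.0, -margins))  # log(1 + exp(-margin))

    # R(W): least-squares loss, summed over regression tasks.
    R = 0.0
    for j in range(len(X_reg)):
        residual = Y_reg[j] - X_reg[j] @ W[:, n_cls + j]
        R += np.mean(residual ** 2)

    # Assumed form of G: removes the cross-task mean of each feature.
    G = np.eye(T) - np.ones((T, T)) / T

    l21 = np.sum(np.linalg.norm(W, axis=1))  # sum of row-wise L2 norms
    mean_reg = np.sum((W @ G) ** 2)          # squared Frobenius norm of WG
    ridge = np.sum(W ** 2)                   # squared Frobenius norm of W

    return float(2.0 * Z + 0.5 * R + lam * l21 + alpha * mean_reg + beta * ridge)
```

Because the L2,1 term sums the norms of whole rows of W, shrinking it to zero removes a feature from every task at once, which is what makes the selection joint.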

The researchers adopted the accelerated proximal gradient descent method to solve the objective function in equation (1). This method achieves a convergence rate of $O(1/k^2)$, where $k$ is the number of iterations. Accurately determining the sequence of λ (a spectrum of sparsity levels) is crucial for capturing the highest likelihood while avoiding unnecessary explorations. Inspired by the glmnet algorithm, the researchers estimated the λ sequence from the data in three steps: estimating the largest λ (lam_max) leading to nearly zero coefficients, calculating the smallest λ using lam_max, and interpolating the entire sequence on the log scale.
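Two building blocks from this paragraph can be sketched briefly: the proximal step that a proximal gradient method would apply for the L2,1 penalty, and the three-step λ sequence. This is an illustration under assumptions, not the MTLComb implementation. The names `prox_l21` and `lambda_sequence` are hypothetical, and the defaults `n_lambda=50` and `ratio=0.01` are placeholder values, not settings reported in the article. The sketch takes lam_max to be the largest row norm of the smooth loss gradient at W = 0, which is the standard condition under which an L2,1 penalty keeps every row at zero.

```python
import numpy as np

def prox_l21(W, threshold):
    """Proximal operator of threshold * ||W||_{2,1}.

    Each row is shrunk toward zero by `threshold` in L2 norm; rows whose
    norm is below the threshold become exactly zero (feature dropped
    for all tasks).
    """
    row_norms = np.linalg.norm(W, axis=1, keepdims=True)
    scale = np.maximum(0.0, 1.0 - threshold / np.maximum(row_norms, 1e-12))
    return W * scale

def lambda_sequence(grad_at_zero, n_lambda=50, ratio=0.01):
    """Build a decreasing lambda sequence in three steps.

    grad_at_zero : (p, T) gradient of the weighted smooth loss at W = 0.
    """
    # Step 1: largest lambda, at which all rows of W are zero.
    lam_max = float(np.max(np.linalg.norm(grad_at_zero, axis=1)))
    # Step 2: smallest lambda as a fixed fraction of lam_max.
    lam_min = ratio * lam_max
    # Step 3: interpolate on the log scale.
    return np.exp(np.linspace(np.log(lam_max), np.log(lam_min), n_lambda))
```

A solver would typically sweep this sequence from largest to smallest λ, reusing each solution as the starting point for the next value.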

Proposition 1 demonstrates that a consistent lam_max for both classification and regression tasks can be determined by weighting the regression and classification losses, as shown in formulation (1).
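One way to see why weights of 2 and 0.5 could produce a consistent lam_max is to compare the gradients of the two losses at zero coefficients, since lam_max is determined by that gradient. The check below is a sketch under assumptions not stated in the article: labels are coded as -1/+1, and both losses are averaged over n samples. With those conventions the logit gradient at zero is -XᵀY/(2n) and the least-squares gradient is -2XᵀY/n, so the two weights bring both to the common form -XᵀY/n. The paper's Proposition 1 remains the authoritative statement; the function names here are hypothetical.

```python
import numpy as np

def logit_loss(w, X, y):
    """Mean logit loss with labels y in {-1, +1} (assumed coding)."""
    return float(np.mean(np.logaddexp(0.0, -y * (X @ w))))

def grad_logit_at_zero(X, y):
    """Gradient of the mean logit loss at w = 0: -X^T y / (2n)."""
    n = X.shape[0]
    return -(X.T @ y) / (2.0 * n)

def grad_least_squares_at_zero(X, y):
    """Gradient of (1/n) * ||y - X w||_2^2 at w = 0: -2 X^T y / n."""
    n = X.shape[0]
    return -2.0 * (X.T @ y) / n

# The same synthetic X and y are fed to both losses purely to compare
# the functional form of the gradients; real tasks have their own data.
rng = np.random.default_rng(1)
X = rng.normal(size=(40, 6))
y = np.where(rng.normal(size=40) > 0, 1.0, -1.0)

weighted_cls = 2.0 * grad_logit_at_zero(X, y)
weighted_reg = 0.5 * grad_least_squares_at_zero(X, y)
print(np.allclose(weighted_cls, weighted_reg))
```

Without the weights, the two gradients differ by a factor of four under these conventions, which is consistent with the article's point that unweighted losses of different types start selecting features at different values of λ.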

For evaluation, the researchers conducted a comprehensive simulation analysis to compare various approaches in the context of mixed regression and classification tasks. The results, illustrated in Figure 2, showcase the superior prediction performance and joint feature selection accuracy of MTLComb, especially in high-dimensional settings.

In the real-data analysis, MTLComb was applied to two biomedical case studies: sepsis and schizophrenia. For sepsis prediction, MTLComb exhibited competitive prediction performance, increased model stability, higher marker selection reproducibility, and greater biological interpretability compared to other methods. The selected features, such as SAPS II, SOFA total score, SIRS average λ, and SOFA cardiovascular score, align with the current understanding of sepsis risk factors and organ dysfunction.

In the schizophrenia analysis, MTLComb successfully captured homogeneous gene markers predictive of both age and diagnosis, validated in an independent cohort. The identified pathways, including voltage-gated channel activity, chemical synaptic transmission, and transsynaptic signaling, have previously been associated with schizophrenia and aging, potentially due to their relevance for synaptic plasticity.

While MTLComb has demonstrated promising results, it is important to acknowledge its limitations. As a regularization approach based on the linear model, MTLComb may have limited improvements in low-dimensional scenarios. Additionally, although MTLComb harmonizes the feature selection principle of different task types, differences in the magnitude of coefficients may persist, requiring further research and potential improvements. Future work may extend MTLComb by incorporating additional types of losses, broadening its application scope. For instance, adding a Poisson regression model in the sepsis analysis could support the prediction of count data, such as length of ICU stay.

In conclusion, MTLComb represents a significant advancement in multi-task learning. It enables the joint learning of regression and classification tasks and facilitates unbiased joint feature selection through a provable loss weighting scheme. Its potential applications span various fields, such as comorbidity analysis and the simultaneous prediction of multiple clinical outcomes of diverse types. By addressing the challenges of incorporating mixed task types into a unified MTL framework, MTLComb opens new avenues for leveraging the synergies between related tasks, enhancing model generalizability, and unlocking novel insights from heterogeneous datasets.

All credit for this research goes to the researchers of this project.

The post Multi-Task Learning with Regression and Classification Tasks: MTLComb appeared first on MarkTechPost.
