LLM watermarking embeds subtle, detectable signals in AI-generated text so that its origin can be identified, addressing misuse concerns such as impersonation, ghostwriting, and fake news. Although watermarking promises to distinguish human-written from AI-generated text and to curb misinformation, the field faces challenges: the algorithms are numerous and complex, and evaluation methods vary widely, which makes it difficult for researchers and the public to experiment with and understand these technologies. Consensus and shared tooling are crucial for advancing LLM watermarking, so that AI-generated content can be identified reliably and the integrity of digital communication maintained.

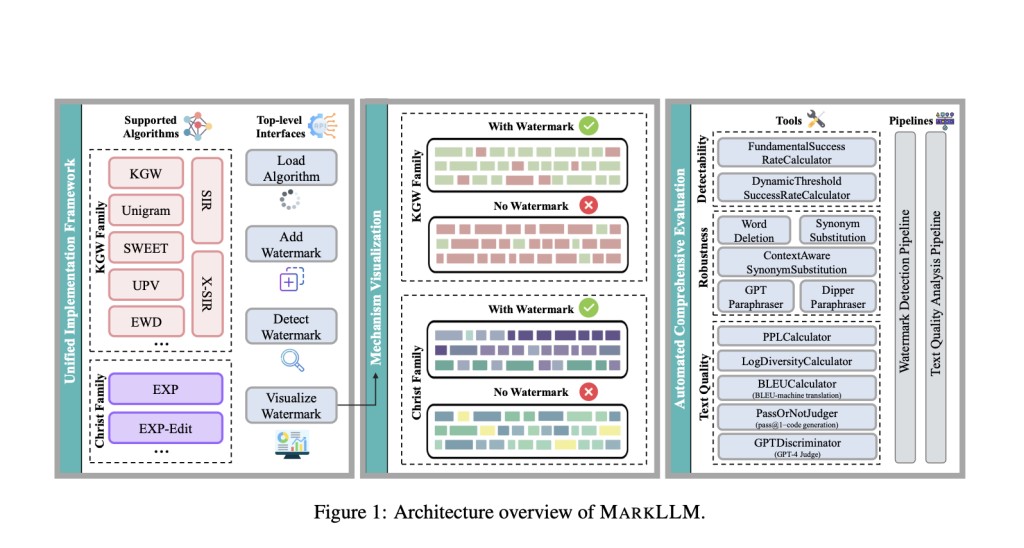

Researchers from Tsinghua University, Shanghai Jiao Tong University, The University of Sydney, UC Santa Barbara, CUHK, and HKUST have developed MARKLLM, an open-source toolkit for LLM watermarking. MARKLLM provides a unified, extensible framework for implementing watermarking algorithms and supports nine methods from the two major algorithm families. It offers user-friendly interfaces for loading algorithms, watermarking text, detecting watermarks, and visualizing results. The toolkit includes 12 evaluation tools and two automated pipelines for assessing detectability, robustness, and impact on text quality. MARKLLM’s modular design makes it scalable and flexible, giving researchers and the general public alike a practical resource for advancing LLM watermarking technology.

LLM watermarking algorithms fall into two main categories: the KGW Family and the Christ Family. The KGW method modifies the LLM’s logits to favor a pseudo-randomly chosen set of tokens, so watermarked text can be identified when a statistic computed over those tokens exceeds a threshold. Variants of this method improve detection performance, reduce the impact on text quality, increase watermark capacity, resist removal attacks, or enable public detection. The Christ Family instead uses pseudo-random sequences to guide token sampling; methods such as EXP-sampling detect the watermark by measuring the correlation between the text and these sequences. Watermarking algorithms are evaluated on detectability, robustness against tampering, and impact on text quality, the last measured with metrics such as perplexity and diversity.
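
The KGW idea of biasing logits and then testing a statistic against a threshold can be illustrated with a small toy. The sketch below is not MarkLLM’s code: a deterministic random generator (the hypothetical `toy_logits`) stands in for an LLM, the green list is seeded from a hypothetical secret `KEY` and the previous token, and detection uses the z-score test described for KGW (green-token count compared with the fraction `GAMMA` expected by chance). `VOCAB_SIZE`, `GAMMA`, `DELTA`, and `KEY` are illustrative values we chose, not settings from the paper.

```python
import math
import random

VOCAB_SIZE = 50   # toy vocabulary (real LLMs have tens of thousands of tokens)
GAMMA = 0.5       # assumed fraction of the vocabulary placed in the green list
DELTA = 2.0       # assumed bias added to green-token logits
KEY = 15485863    # hypothetical secret watermark key


def green_list(prev_token: int) -> set[int]:
    """Pseudo-random green list seeded by the secret key and the previous token."""
    rng = random.Random(KEY * 1_000_003 + prev_token)
    return set(rng.sample(range(VOCAB_SIZE), int(GAMMA * VOCAB_SIZE)))


def toy_logits(prev_token: int) -> list[float]:
    """Hypothetical stand-in for an LLM forward pass: fixed random logits per context token."""
    rng = random.Random(prev_token)
    return [rng.gauss(0.0, 1.0) for _ in range(VOCAB_SIZE)]


def sample(logits: list[float], rng: random.Random) -> int:
    """Softmax sampling; subtracting the max keeps exp() numerically stable."""
    top = max(logits)
    weights = [math.exp(x - top) for x in logits]
    return rng.choices(range(VOCAB_SIZE), weights=weights, k=1)[0]


def generate(length: int, watermark: bool, seed: int = 0, start: int = 0) -> list[int]:
    """Generate token ids; with watermark=True, green tokens get +DELTA on their logits."""
    rng = random.Random(seed)
    tokens = [start]
    for _ in range(length):
        logits = toy_logits(tokens[-1])
        if watermark:
            green = green_list(tokens[-1])
            logits = [x + DELTA if i in green else x for i, x in enumerate(logits)]
        tokens.append(sample(logits, rng))
    return tokens


def z_score(tokens: list[int]) -> float:
    """z = (green hits - GAMMA*T) / sqrt(T*GAMMA*(1-GAMMA)), T = number of scored tokens."""
    T = len(tokens) - 1
    hits = sum(tokens[t] in green_list(tokens[t - 1]) for t in range(1, len(tokens)))
    return (hits - GAMMA * T) / math.sqrt(T * GAMMA * (1 - GAMMA))


def detect(tokens: list[int], threshold: float = 4.0) -> bool:
    """Flag text as watermarked when the z-score exceeds the (assumed) threshold."""
    return z_score(tokens) > threshold


if __name__ == "__main__":
    wm = generate(200, watermark=True, seed=1)
    plain = generate(200, watermark=False, seed=1)
    print(f"watermarked z={z_score(wm):.1f} detected={detect(wm)}")
    print(f"plain       z={z_score(plain):.1f} detected={detect(plain)}")
```

Because the green list depends only on the key and the previous token, the detector needs the key and the text but not the model. Christ-family methods such as EXP-sampling would replace the logit bias with key-driven sampling and are not covered by this sketch.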

MARKLLM provides a unified framework that addresses the lack of standardization, uniformity, and code quality in how LLM watermarking algorithms are implemented. Its well-designed class structure makes it easy to invoke algorithms and switch between them. A visualization module covers both the KGW and Christ families, highlighting token preferences and correlations, respectively. The toolkit also includes 12 evaluation tools and two automated pipelines for assessing watermark detectability, robustness, and impact on text quality, and its flexible configurations support thorough, automated evaluation of watermarking algorithms across various metrics and attack scenarios.
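
The benefit of a shared class structure is that every algorithm exposes the same methods, so switching algorithms means changing only a name. The sketch below shows that general plugin-registry pattern under our own assumptions; `register`, `load`, `available`, `WatermarkAlgorithm`, `watermark_text`, `detect`, and `min_marks` are hypothetical names, not MarkLLM’s actual API. The single registered algorithm, `ZeroWidthMark`, is a deliberately naive toy (it inserts zero-width spaces) chosen only because it runs without a model; it is not one of the nine methods MarkLLM supports.

```python
from abc import ABC, abstractmethod

# Hypothetical plugin registry: maps an algorithm name to its class.
_REGISTRY: dict[str, type] = {}


def register(name: str):
    """Class decorator that makes an algorithm loadable by name."""
    def wrap(cls):
        _REGISTRY[name] = cls
        return cls
    return wrap


class WatermarkAlgorithm(ABC):
    """Common interface every algorithm implements (hypothetical method names)."""

    def __init__(self, **config):
        self.config = config

    @abstractmethod
    def watermark_text(self, text: str) -> str: ...

    @abstractmethod
    def detect(self, text: str) -> dict: ...


def available() -> list[str]:
    """Names of all registered algorithms."""
    return sorted(_REGISTRY)


def load(name: str, **config) -> WatermarkAlgorithm:
    """Instantiate an algorithm by name; switching algorithms is a one-word change."""
    if name not in _REGISTRY:
        raise ValueError(f"unknown algorithm {name!r}; available: {available()}")
    return _REGISTRY[name](**config)


@register("zero_width")
class ZeroWidthMark(WatermarkAlgorithm):
    """Naive toy: inserts U+200B after each space. Trivially removable; illustration only."""

    MARK = "\u200b"

    def watermark_text(self, text: str) -> str:
        return text.replace(" ", " " + self.MARK)

    def detect(self, text: str) -> dict:
        count = text.count(self.MARK)
        min_marks = self.config.get("min_marks", 1)
        return {"is_watermarked": count >= min_marks, "score": count}


if __name__ == "__main__":
    algo = load("zero_width", min_marks=2)
    marked = algo.watermark_text("the quick brown fox")
    print(algo.detect(marked))  # {'is_watermarked': True, 'score': 3}
```

Adding a new algorithm in this pattern means subclassing the interface and decorating it with `register`; evaluation code written against `watermark_text` and `detect` then works unchanged.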

Using MARKLLM, the researchers evaluated nine watermarking algorithms for detectability, robustness, and impact on text quality. They used the C4 dataset for general text generation, WMT16 for machine translation, and HumanEval for code generation, with OPT-1.3b and StarCoder as the language models. The assessments relied on dynamic threshold adjustment and a range of text-tampering attacks, and quality was measured with metrics including PPL (perplexity), log diversity, BLEU, pass@1, and GPT-4 Judge. The results showed high detection accuracy, with each algorithm displaying its own strengths and outcomes varying with the metric and attack. MARKLLM’s user-friendly design made these comprehensive evaluations straightforward, and the results offer useful insights for future research.
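
The article does not spell out how “dynamic threshold adjustment” works. One plausible reading, which we assume here, is that the evaluator does not fix the detection threshold in advance but picks it from the observed scores, for example the threshold that maximizes F1 over a labeled mix of watermarked and human-written texts. The sketch below implements that assumed rule; `confusion_at`, `metrics`, and `best_threshold` are hypothetical helpers, and the scores in the usage example are made-up illustrative numbers, not results from the paper.

```python
def confusion_at(threshold: float, wm_scores: list[float], human_scores: list[float]):
    """Confusion counts when a text is flagged as watermarked iff score >= threshold."""
    tp = sum(s >= threshold for s in wm_scores)
    fn = len(wm_scores) - tp
    fp = sum(s >= threshold for s in human_scores)
    tn = len(human_scores) - fp
    return tp, fp, tn, fn


def metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """True-positive rate, false-positive rate, and F1, guarding against division by zero."""
    tpr = tp / (tp + fn) if tp + fn else 0.0
    fpr = fp / (fp + tn) if fp + tn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = 2 * precision * tpr / (precision + tpr) if precision + tpr else 0.0
    return {"TPR": tpr, "FPR": fpr, "F1": f1}


def best_threshold(wm_scores: list[float], human_scores: list[float]):
    """Try every observed score as a threshold; keep the lowest one with the highest F1."""
    best = None
    for th in sorted(set(wm_scores) | set(human_scores)):
        m = metrics(*confusion_at(th, wm_scores, human_scores))
        if best is None or m["F1"] > best[1]["F1"]:
            best = (th, m)
    return best


if __name__ == "__main__":
    wm = [5.1, 4.2, 3.9, 6.0]      # illustrative detector scores on watermarked texts
    human = [0.3, -1.2, 1.1, 4.0]  # illustrative scores on human-written texts
    th, m = best_threshold(wm, human)
    print(th, m)
```

Choosing the threshold from the data this way compares algorithms at their own best operating points rather than at one fixed cutoff that might suit some score scales better than others; a target-FPR rule would be an equally reasonable alternative.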

In conclusion, MARKLLM is an open-source toolkit for LLM watermarking that offers flexible configurations for a range of algorithms, along with interfaces for text watermarking, detection, and visualization. It includes convenient evaluation tools and customizable pipelines for thorough assessments from multiple perspectives. It currently supports only a subset of methods and excludes recent approaches that embed watermarks in model parameters, although future contributions are expected to expand its coverage. Its visualization solutions are useful but could be more diverse. And while it covers the key evaluation aspects, some scenarios, such as retranslation and CWRA attacks, are not yet fully addressed. Developers and researchers are encouraged to contribute to make MARKLLM more robust and versatile.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

The post MARKLLM: An Open-Source Toolkit for LLM Watermarking appeared first on MarkTechPost.