Artificial intelligence (AI) has reshaped many fields through advanced models for natural language processing (NLP). NLP enables computers to understand, interpret, and respond to human language in useful ways. The field spans applications such as text generation, translation, and sentiment analysis, with significant impact on industries like healthcare, finance, and customer service. The evolution of NLP models has driven these advances, continually pushing the boundaries of what AI can achieve in understanding and generating human language.

Despite these advancements, developing models that can effectively handle complex multi-turn conversations remains a persistent challenge. Existing models often fail to maintain context and coherence over long interactions, leading to suboptimal performance in real-world applications. Maintaining a coherent conversation over multiple turns is crucial for applications like customer service bots, virtual assistants, and interactive learning platforms.

Current methods for improving AI conversation models include fine-tuning on diverse datasets and integrating reinforcement learning techniques. Popular models like GPT-4-Turbo and Claude-3-Opus have set performance benchmarks, yet they still have room to improve in handling intricate dialogues and maintaining consistency. These models often rely on large-scale datasets and complex algorithms to enhance their conversational abilities. Even so, maintaining context over long conversations remains a significant hurdle, which leaves room for further gains in dynamic and contextually rich interactions.

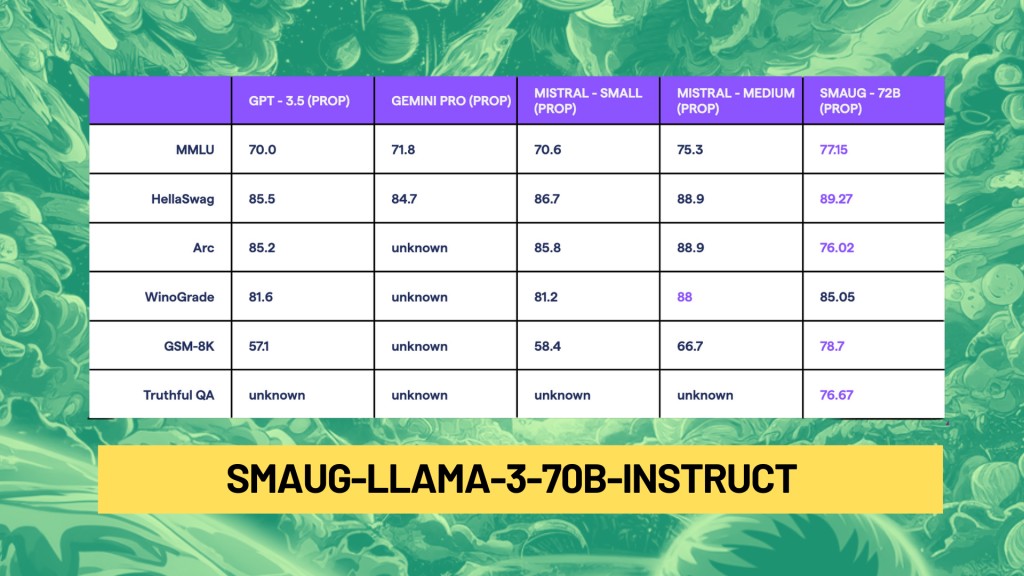

Researchers from Abacus.AI have introduced the Smaug-Llama-3-70B-Instruct model, which is claimed to be one of the best open-source models and a rival to GPT-4 Turbo. The new model aims to enhance performance in multi-turn conversations by leveraging a novel training recipe. Abacus.AI’s approach focuses on improving the model’s ability to understand and generate contextually relevant responses, surpassing previous models in the same category. Smaug-Llama-3-70B-Instruct builds on the Meta-Llama-3-70B-Instruct foundation, incorporating advancements that enable it to outperform its predecessors.

The Smaug-Llama-3-70B-Instruct model uses advanced techniques and new datasets to achieve superior performance. Researchers employed a specific training protocol emphasizing real-world conversational data, so that the model can handle diverse and complex interactions. The model integrates with popular frameworks like the transformers library and can be deployed for various text-generation tasks, where it is intended to produce accurate and contextually appropriate responses. The transformer architecture enables efficient processing of large datasets, contributing to the model’s ability to understand and generate detailed, nuanced conversational responses.
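As a rough illustration of the transformers integration described above, the following minimal sketch shows how a multi-turn conversation might be passed to a chat-capable text-generation pipeline. The repository id `abacusai/Smaug-Llama-3-70B-Instruct`, the helper names, and the generation settings are assumptions for illustration, not details taken from the article. Loading a 70B model also requires substantial GPU memory and the `accelerate` package for `device_map="auto"`.

```python
# Hypothetical sketch: the repo id and helper names are assumptions,
# not details confirmed by the article.
MODEL_ID = "abacusai/Smaug-Llama-3-70B-Instruct"  # assumed Hugging Face repo id


def build_messages(history, user_turn):
    """Return a new chat history with one more user turn appended."""
    return list(history) + [{"role": "user", "content": user_turn}]


def load_chat_pipeline(model_id=MODEL_ID):
    """Create a text-generation pipeline (needs transformers, torch, accelerate)."""
    # Imported lazily so build_messages works without transformers installed.
    from transformers import pipeline

    return pipeline(
        "text-generation",
        model=model_id,
        device_map="auto",    # spread weights across available devices
        torch_dtype="auto",   # use the dtype stored in the checkpoint
    )


def generate_reply(chat, messages, max_new_tokens=256):
    """Generate the next assistant turn for a list of chat messages."""
    outputs = chat(messages, max_new_tokens=max_new_tokens)
    # For chat-style input the pipeline returns the whole conversation;
    # the last message is the newly generated assistant reply.
    return outputs[0]["generated_text"][-1]["content"]
```

In use, one would call `load_chat_pipeline()` once, then alternate `build_messages` and `generate_reply`, appending each reply to the history as an `assistant` message so that earlier turns stay in context.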

The performance of the Smaug-Llama-3-70B-Instruct model is demonstrated through benchmarks such as MT-Bench and Arena Hard. On MT-Bench, the model scored 9.4 in the first turn and 9.0 in the second turn, for an average of 9.2, compared with the 9.2 and 9.18 reported for Llama-3 70B and GPT-4 Turbo, respectively; at this precision the margins are narrow. These scores indicate that the model maintains context and delivers coherent responses across both turns of the benchmark. The MT-Bench results, which correlate with human evaluations, highlight Smaug’s ability to handle simple prompts effectively.

However, real-world tasks require complex reasoning and planning, which MT-Bench does not fully address. Arena Hard, a new benchmark measuring an LLM’s ability to solve complex tasks, showed significant gains for Smaug over Llama-3, with Smaug scoring 56.7 compared to Llama-3’s 41.1. This improvement underscores the model’s capability to tackle more sophisticated and agentic tasks, reflecting its advanced understanding and processing of multi-turn interactions.
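To put the Arena Hard figures above in perspective, the small sketch below computes the absolute and relative gain from the two reported scores. The function name `score_gain` is hypothetical; only the scores 56.7 and 41.1 come from the article.

```python
def score_gain(new_score, base_score):
    """Return (absolute gain in points, relative gain in percent), rounded to 0.1."""
    absolute = new_score - base_score
    relative_pct = 100.0 * absolute / base_score
    return round(absolute, 1), round(relative_pct, 1)


# Arena Hard scores as reported in the article.
smaug_score = 56.7
llama3_score = 41.1

absolute, relative_pct = score_gain(smaug_score, llama3_score)
print(f"Absolute gain: {absolute} points, relative gain: {relative_pct}%")
```

By this arithmetic, the gap is 15.6 points, or roughly 38% over the Llama-3 score.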

In conclusion, Smaug-Llama-3-70B-Instruct by Abacus.AI addresses the challenges of maintaining context and coherence in multi-turn conversations. The research team has developed a model that improves on its base model’s benchmark performance and sets a reference point for future open-source development. The reported MT-Bench and Arena Hard scores highlight the model’s potential for applications requiring advanced conversational AI. This new model represents a promising advancement, paving the way for more sophisticated and reliable AI-driven communication tools.

The post Abacus AI Releases Smaug-Llama-3-70B-Instruct: The New Benchmark in Open-Source Conversational AI Rivaling GPT-4 Turbo appeared first on MarkTechPost.
