Machine learning models, which can contain billions of parameters, require sophisticated methods to finetune them efficiently. Researchers aim to improve the accuracy of these models while minimizing the computational resources needed. This matters for practical applications in domains such as natural language processing and artificial intelligence, where efficient resource utilization can significantly affect overall performance and feasibility.

A significant problem in finetuning large language models (LLMs) is the substantial GPU memory required, which makes the process expensive and resource-intensive. The challenge lies in developing efficient finetuning methods without compromising the model’s performance. This efficiency is particularly important because the models must adapt to new tasks while retaining their previously learned capabilities. Efficient finetuning methods allow large models to be used in diverse applications without prohibitive costs.

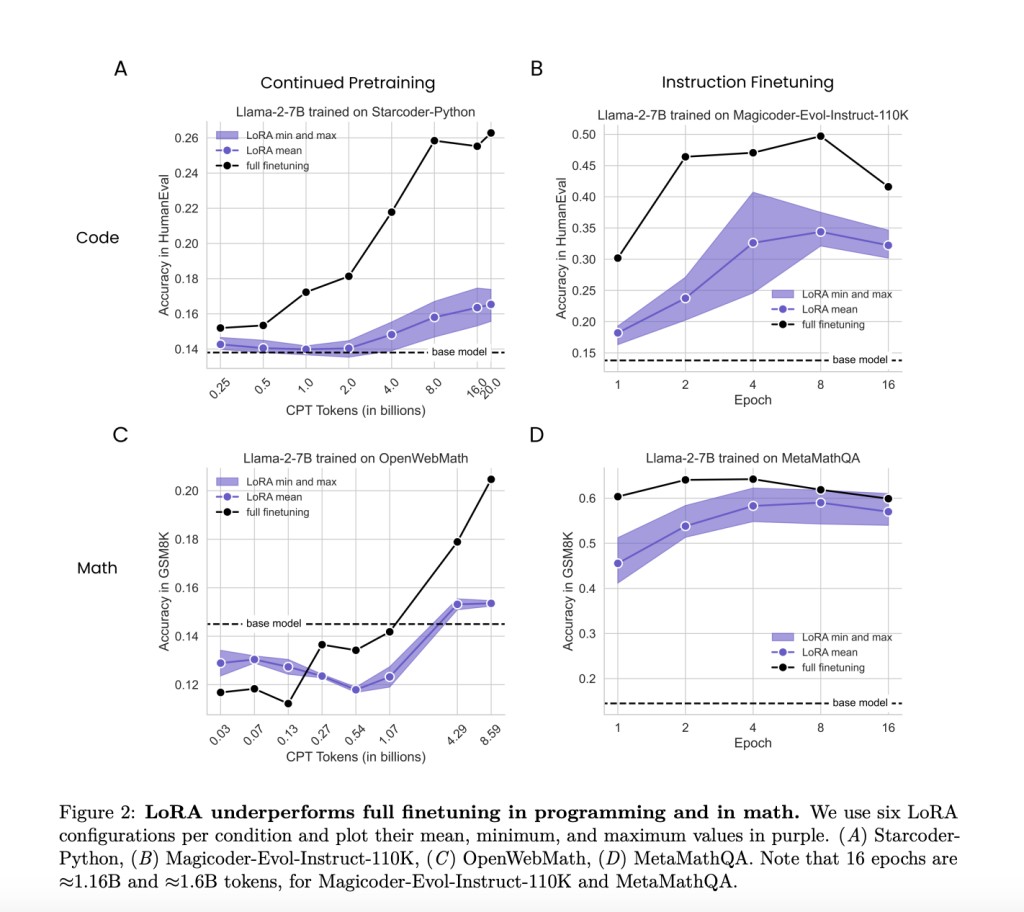

Researchers from Columbia University and Databricks Mosaic AI have explored various methods to address this issue, including full finetuning and parameter-efficient finetuning techniques like Low-Rank Adaptation (LoRA). Full finetuning involves adjusting all model parameters, which is computationally expensive. In contrast, LoRA aims to save memory by only modifying a small subset of parameters, thereby reducing the computational load. Despite its popularity, the effectiveness of LoRA compared to full finetuning has been a topic of debate, especially in challenging domains such as programming and mathematics, where precise performance improvements are critical.
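To make the memory argument concrete, the sketch below counts trainable parameters for a single weight matrix under full finetuning and under LoRA. It assumes the standard LoRA formulation, in which a frozen weight matrix of shape d_out × d_in is augmented by a trainable product B·A, with B of shape d_out × r and A of shape r × d_in. The 4096 × 4096 layer size and the function names are illustrative assumptions, not values taken from the study.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for a LoRA adapter: B (d_out x r) plus A (r x d_in)."""
    return rank * (d_out + d_in)


if __name__ == "__main__":
    d_out, d_in = 4096, 4096  # hypothetical layer size, chosen for illustration
    full = full_finetune_params(d_out, d_in)
    for rank in (16, 256):  # example LoRA ranks
        lora = lora_params(d_out, d_in, rank)
        print(f"rank={rank}: {lora:,} trainable vs {full:,} ({lora / full:.2%})")
```

Under these assumptions, the adapter's parameter count grows linearly with the rank, so a small rank trains a tiny fraction of the matrix while a large rank gives up part of the saving.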

The research compared the performance of LoRA and full finetuning across two target domains: programming and mathematics.

They considered instruction finetuning, involving approximately 100,000 prompt-response pairs, and continued pretraining with around 10 billion unstructured tokens. The comparison aimed to evaluate how well LoRA and full finetuning adapted to these specific domains, given the different data regimes and the complexity of the tasks. This comprehensive comparison provided a detailed understanding of the strengths and weaknesses of each method under various conditions.

The researchers found that LoRA generally underperformed full finetuning on programming and mathematics tasks. For example, in the programming domain, full finetuning achieved a peak HumanEval score of 0.263 at 20 billion tokens, while the best LoRA configuration reached only 0.175 at 16 billion tokens. Similarly, in the mathematics domain, full finetuning achieved a peak GSM8K score of 0.642 at 4 epochs, whereas the best LoRA configuration achieved 0.622 at the same point. Despite this underperformance, LoRA provided a beneficial form of regularization, which helped maintain the base model’s performance on tasks outside the target domain. This regularization effect was stronger than that of common techniques like weight decay and dropout, making LoRA advantageous when retaining base model performance is crucial.
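The size of the two gaps is easier to compare when expressed both as an absolute difference and as a fraction of the full-finetuning score. The short calculation below uses only the four peak scores quoted above; the `gap` helper and the dictionary layout are illustrative choices, and the programming peaks occur at different token counts, so this is a peak-to-peak comparison.

```python
from typing import Tuple

# Peak scores as quoted in the article.
scores = {
    "HumanEval (programming)": {"full": 0.263, "lora": 0.175},
    "GSM8K (mathematics)": {"full": 0.642, "lora": 0.622},
}


def gap(full: float, lora: float) -> Tuple[float, float]:
    """Return (absolute gap, LoRA score as a fraction of the full-finetuning score)."""
    return full - lora, lora / full


for name, s in scores.items():
    absolute, fraction = gap(s["full"], s["lora"])
    print(f"{name}: gap={absolute:.3f}, LoRA reaches {fraction:.1%} of full finetuning")
```

On these numbers, LoRA reaches roughly two thirds of the full-finetuning score on HumanEval but about 97% on GSM8K, so the shortfall is much larger in the programming domain.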

A detailed analysis showed that full finetuning produced weight perturbations whose rank was 10 to 100 times greater than the ranks typically used in LoRA configurations. For instance, full finetuning required ranks as high as 256, while LoRA configurations typically used ranks of 16 or 256. This significant difference in rank likely explains some of the performance gaps observed. The research also indicated that LoRA’s lower-rank perturbations helped maintain more diverse output generations than full finetuning, which often converged on a limited set of solutions. This diversity in output is beneficial in applications requiring varied and creative solutions.
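The rank constraint itself can be illustrated with a toy example. A LoRA update is the product of two thin matrices, B (d × r) and A (r × d), so its rank can never exceed r, whereas an unconstrained update has no such limit. The sketch below checks this on small random integer matrices using exact Gaussian elimination. The sizes, the random entries, and the helper names are assumptions for illustration only, and this is not the analysis method used in the study.

```python
from fractions import Fraction
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def matrix_rank(m):
    """Rank via Gaussian elimination over exact fractions (no rounding error)."""
    rows = [[Fraction(v) for v in row] for row in m]
    rank = 0
    for col in range(len(rows[0])):
        # Find a row at or below `rank` with a nonzero entry in this column.
        pivot = next((r for r in range(rank, len(rows)) if rows[r][col] != 0), None)
        if pivot is None:
            continue
        rows[rank], rows[pivot] = rows[pivot], rows[rank]
        # Eliminate this column from every row below the pivot row.
        for r in range(rank + 1, len(rows)):
            factor = rows[r][col] / rows[rank][col]
            rows[r] = [x - factor * y for x, y in zip(rows[r], rows[rank])]
        rank += 1
        if rank == len(rows):
            break
    return rank


def random_matrix(n_rows, n_cols, rng):
    """Matrix of small random integers (toy stand-in for learned weights)."""
    return [[rng.randint(-5, 5) for _ in range(n_cols)] for _ in range(n_rows)]


rng = random.Random(0)
d, r = 8, 2  # toy dimensions, far smaller than any real model layer
B = random_matrix(d, r, rng)
A = random_matrix(r, d, rng)
lora_update = matmul(B, A)              # d x d matrix, rank at most r
full_update = random_matrix(d, d, rng)  # unconstrained d x d update

print("LoRA-style update rank:", matrix_rank(lora_update))
print("Unconstrained update rank:", matrix_rank(full_update))
```

Whatever values B and A take, the first rank printed cannot exceed r, which is the structural limit that the comparison with full finetuning turns on.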

In conclusion, while LoRA is less effective than full finetuning in accuracy and sample efficiency, it offers significant advantages in regularization and memory efficiency. The study suggests that optimizing hyperparameters, such as learning rates and target modules, and understanding the trade-offs between learning and forgetting can enhance LoRA’s application to specific tasks. The research highlighted that although full finetuning generally performs better, LoRA’s ability to maintain the base model’s capabilities and generate diverse outputs makes it valuable in certain contexts. This research provides essential insights into balancing performance and computational efficiency in finetuning LLMs, offering a pathway for more sustainable and versatile AI development.

All credit for this research goes to the researchers of this project.

The post Researchers from Columbia University and Databricks Conducted a Comparative Study of LoRA and Full Finetuning in Large Language Models appeared first on MarkTechPost.
