Although recent multimodal foundation models are extensively utilized, they tend to segregate various modalities, typically employing specific encoders or decoders for each. This approach constrains their capacity to fuse information across modalities effectively and produce multimodal documents comprising diverse sequences of images and text. Consequently, there’s a limitation in their ability to seamlessly integrate different types of content within a single document.

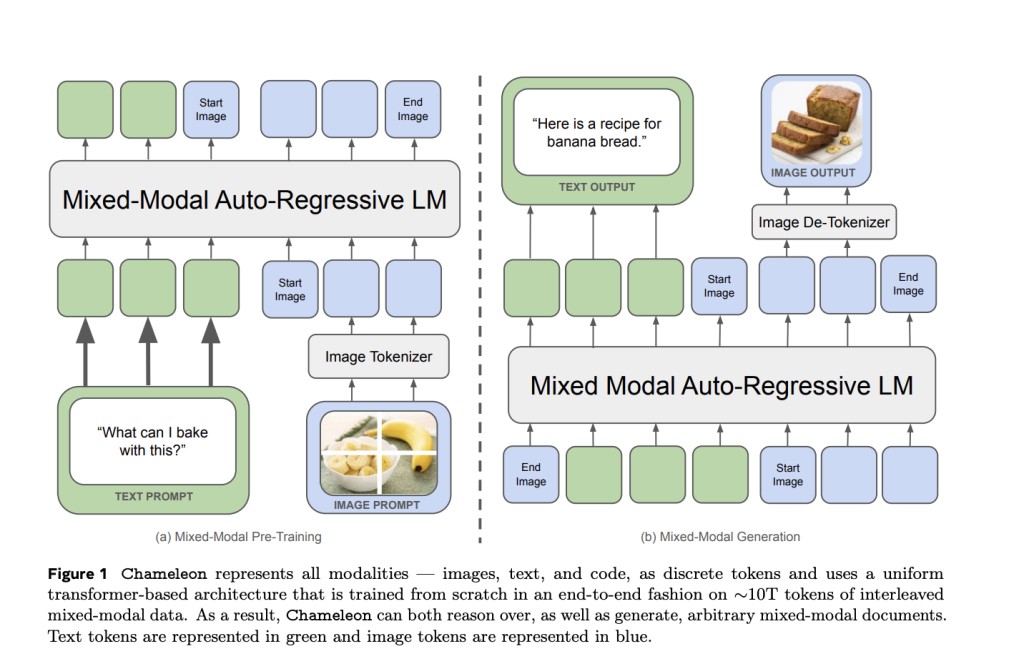

Meta researchers present Chameleon, a mixed-modal foundation model that facilitates generating and reasoning with interleaved textual and image sequences, enabling comprehensive multimodal document modeling. Unlike traditional models, Chameleon employs a unified architecture, treating both modalities equally by tokenizing images akin to text. This approach, termed early fusion, allows seamless reasoning across modalities but poses optimization challenges. To address these, the researchers propose architectural enhancements and training techniques. By adapting transformer architecture and finetuning strategies.

Researchers developed a novel image tokenizer, encoding 512 × 512 images into 1024 tokens from an 8192-codebook, focusing on licensed images and doubling face-containing images during pre-training. However, their tokenizer struggles with text-heavy image reconstruction. Also, they trained a BPE tokenizer with a 65,536-vocabulary, including image tokens, using the sentencepiece library, over a subset of training data. Chameleon addressed stability issues with QK-Norm, dropout, and z-loss regularization during training, facilitating successful training on Meta’s RSC. Inference streamlined processing for mixed-modal generation using PyTorch and xformers, supporting both streaming and non-streaming modes with token masking for conditional logic.

The alignment stage, fine-tunes on diverse datasets, including Text, Code, Visual Chat, and Safety, aiming to enhance model capabilities and safety. They curate high-quality images for Image Generation using an aesthetic classifier. Supervised Fine-Tuning (SFT) encompasses data balancing across modalities, utilizing a cosine learning rate schedule and a weight decay of 0.1. Each instance in SFT pairs prompts with corresponding answers, optimizing exclusively based on the latter. Dropout of 0.05 is applied, along with z-loss regularization. Images in prompts are resized with border padding, while those in answers are center-cropped for quality image generation.

Chameleon evaluates its text-only capabilities against state-of-the-art models, achieving competitive performance across various tasks like commonsense reasoning and math. It outperforms LLaMa-2 on many tasks, attributing gains to better pre-training and inclusion of code data. In image-to-text tasks, Chameleon excels in image captioning, matching or surpassing larger models like Flamingo-80B and IDEFICS-80B with fewer shots. In visual question answering (VQA), it approaches performance of top models, even though Llava-1.5 slightly outperforms VQA-v2. Chameleon’s versatility and efficiency make it competitive across different tasks, requiring fewer training examples and smaller model sizes.

To recapitulate, This study introduces Chameleon, a token-based model, achieves superior performance in vision-language tasks by integrating image and text tokens seamlessly. Its architecture enables joint reasoning over modalities, surpassing late-fusion models like Flamingo and IDEFICS in tasks like image captioning and visual question answering. Chameleon’s early-fusion approach introduces novel techniques for stable training, addressing previous scalability challenges. It unlocks new multimodal interaction possibilities, evident in its strong performance on mixed-modal open-ended QA benchmarks.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter. Join our Telegram Channel, Discord Channel, and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our 42k+ ML SubReddit

The post Meta AI Introduces Chameleon: A New Family of Early-Fusion Token-based Foundation Models that Set a New Bar for Multimodal Machine Learning appeared first on MarkTechPost.

Source: Read MoreÂ