The evaluation of artificial intelligence models, particularly large language models (LLMs), is a rapidly evolving research field. Researchers are developing more rigorous benchmarks to assess these models across a wide range of complex tasks. This work is essential for advancing AI technology because it reveals the strengths and weaknesses of different AI systems, and that understanding lets researchers make informed decisions about how to improve and refine the models.

One significant problem in evaluating LLMs is the inadequacy of existing benchmarks in fully capturing the models’ capabilities. Traditional benchmarks, like the original Massive Multitask Language Understanding (MMLU) dataset, often fail to provide a comprehensive assessment. These benchmarks typically include limited answer options and focus predominantly on knowledge-based questions that do not require extensive reasoning. Consequently, they fail to reflect the true problem-solving and reasoning skills of LLMs accurately. This gap underscores the need for more challenging and inclusive datasets that can better evaluate the diverse capabilities of these advanced AI systems.

Current methods for evaluating LLMs, such as the original MMLU dataset, provide some insights but have notable limitations. The original MMLU dataset includes only four answer options per question, which limits the complexity and reduces the challenge for the models. The questions are mostly knowledge-driven, so they do not require deep reasoning abilities crucial for comprehensive AI evaluation. These constraints result in an incomplete understanding of the models’ performance, highlighting the necessity for improved evaluation tools.

Researchers from TIGER-Lab have introduced the MMLU-Pro dataset to address these limitations. The new dataset is designed to provide a more rigorous and comprehensive benchmark for evaluating LLMs. MMLU-Pro increases the number of answer options from four to ten per question, making the evaluation more complex and realistic. It also includes more reasoning-focused questions, which addresses the shortcomings of the original MMLU dataset. This effort involves leading AI research labs and academic institutions, aiming to set a new standard in AI evaluation.

The construction of the MMLU-Pro dataset involved a meticulous process to ensure its robustness and effectiveness. Researchers began by filtering the original MMLU dataset to retain only the most challenging and relevant questions. They then augmented the number of answer options per question from four to ten using GPT-4, a state-of-the-art AI model. This augmentation process was not merely about adding more options; it involved generating plausible distractors that require discriminative reasoning to navigate. The dataset sources questions from high-quality STEM websites, theorem-based QA datasets, and college-level science exams. Each question underwent rigorous review by a panel of over ten experts to ensure accuracy, fairness, and complexity, making the MMLU-Pro a robust tool for benchmarking.

The MMLU-Pro dataset employs ten answer options per question, which lowers the chance that a random guess is correct and makes the evaluation considerably harder. By incorporating more college-level problems across various disciplines, MMLU-Pro aims to be a robust and comprehensive benchmark. Its scores are also less sensitive to variations in the prompt, which makes results more reliable. While 57% of the questions are sourced from the original MMLU, they have been filtered for higher difficulty and relevance. Each question and its options have been reviewed by over ten experts to minimize errors. Without chain-of-thought (CoT) prompting, the top-performing model, GPT-4o, achieves only a 53% score.
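
To make the guessing argument concrete, the short Python sketch below computes the accuracy a model would reach by picking an option uniformly at random. The function name `chance_accuracy` is illustrative and does not come from the MMLU-Pro release; the sketch assumes every question has exactly one correct option.

```python
from fractions import Fraction


def chance_accuracy(num_options: int) -> Fraction:
    """Expected accuracy of uniform random guessing.

    Assumes exactly one of the `num_options` choices is correct.
    `chance_accuracy` is a hypothetical helper, not part of any MMLU-Pro tooling.
    """
    if num_options < 1:
        raise ValueError("a question needs at least one answer option")
    return Fraction(1, num_options)


# Four options per question (original MMLU) versus ten (MMLU-Pro).
mmlu_chance = chance_accuracy(4)
mmlu_pro_chance = chance_accuracy(10)

print(f"Original MMLU guess baseline: {float(mmlu_chance):.0%}")
print(f"MMLU-Pro guess baseline:      {float(mmlu_pro_chance):.0%}")
```

Under these assumptions the guessing floor falls from one in four to one in ten, so a given score on MMLU-Pro is less likely to be inflated by lucky guesses.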

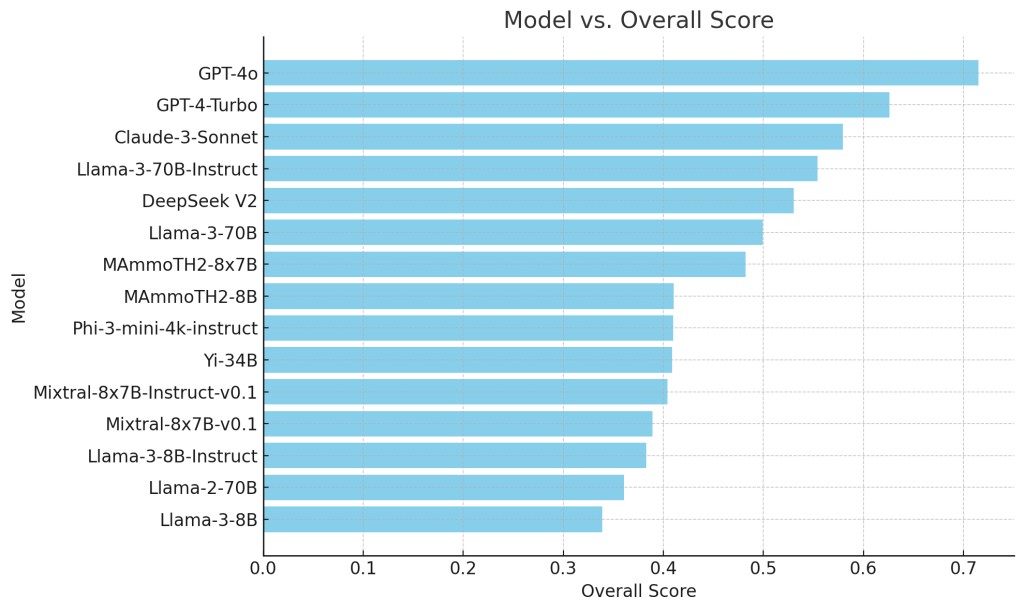

The performance of various AI models on the MMLU-Pro dataset was evaluated, revealing significant differences compared to the original MMLU scores. For example, GPT-4’s accuracy on MMLU-Pro was 71.49%, a notable decrease from its original MMLU score of 88.7%. This drop of 17.21 percentage points highlights the increased difficulty and robustness of the new dataset. Other models fell further: GPT-4-Turbo-0409 dropped from 86.4% to 62.58%, and Claude-3-Sonnet decreased from 81.5% to 57.93%. These results underscore the challenging nature of MMLU-Pro, which demands deeper reasoning and problem-solving skills from the models.
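
The arithmetic behind these comparisons can be reproduced with a few lines of Python. The sketch below uses only the scores quoted above; the helper names `point_drop` and `relative_drop` are illustrative, and the relative figure is an extra way of reading the same numbers rather than something the benchmark authors report.

```python
# (original MMLU accuracy, MMLU-Pro accuracy) in percent, as quoted in the article.
scores = {
    "GPT-4": (88.7, 71.49),
    "GPT-4-Turbo-0409": (86.4, 62.58),
    "Claude-3-Sonnet": (81.5, 57.93),
}


def point_drop(mmlu: float, mmlu_pro: float) -> float:
    """Absolute drop in percentage points (hypothetical helper)."""
    return round(mmlu - mmlu_pro, 2)


def relative_drop(mmlu: float, mmlu_pro: float) -> float:
    """Drop as a percentage of the original MMLU score (hypothetical helper)."""
    return round(100 * (mmlu - mmlu_pro) / mmlu, 1)


for model, (mmlu, mmlu_pro) in scores.items():
    print(
        f"{model}: {point_drop(mmlu, mmlu_pro)} points lower, "
        f"{relative_drop(mmlu, mmlu_pro)}% of the original score lost"
    )
```

Keeping the two measures separate avoids the ambiguity of saying a score "dropped by 17.21%": the difference between 88.7% and 71.49% is 17.21 percentage points, which is a larger share of the original score.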

In conclusion, the MMLU-Pro dataset marks a pivotal advancement in AI evaluation, offering a rigorous benchmark that challenges LLMs with complex, reasoning-focused questions. By increasing the number of answer options and incorporating diverse problem sets, MMLU-Pro provides a more accurate measure of AI capabilities. The notable performance drops observed in models like GPT-4 underscore the dataset’s effectiveness in highlighting areas for improvement. This comprehensive evaluation tool is essential for driving future AI advancements, enabling researchers to refine and enhance the performance of LLMs.

The post TIGER-Lab Introduces MMLU-Pro Dataset for Comprehensive Benchmarking of Large Language Models’ Capabilities and Performance appeared first on MarkTechPost.
