Named Entity Recognition (NER) is vital in natural language processing, with applications spanning medical coding, financial analysis, and legal document parsing. Custom models are typically created using transformer encoders pre-trained on self-supervised tasks like masked language modeling (MLM). However, recent years have seen the rise of large language models (LLMs) like GPT-3 and GPT-4, which can tackle NER tasks through well-crafted prompts but pose challenges due to high inference costs and potential privacy concerns.

The NuMind team proposes using LLMs to reduce the human annotation needed to create custom models. Rather than employing an LLM to annotate a single-domain dataset for a specific NER task, the idea is to have the LLM annotate a diverse, multi-domain dataset covering many NER problems. A smaller foundation model such as BERT is then further pre-trained on this annotated dataset, and the resulting model can be fine-tuned for any downstream NER task.

The team has released three NER models:

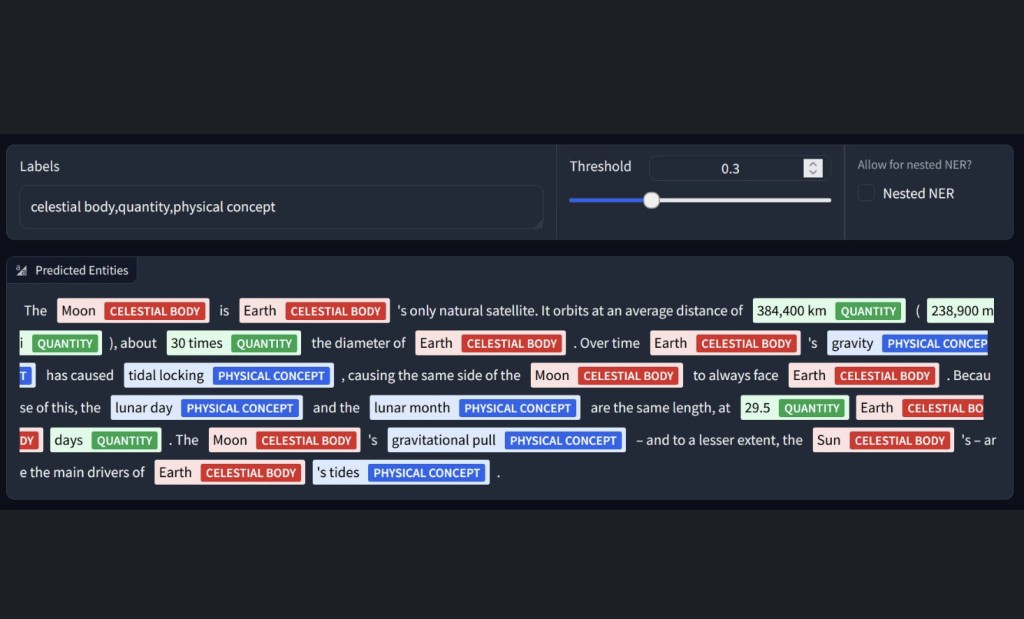

NuNER Zero: A zero-shot NER model that adopts the GLiNER (Generalist Model for Named Entity Recognition using Bidirectional Transformer) architecture and takes as input the concatenation of the entity types and the text. Unlike GLiNER, NuNER Zero is a token classifier, which allows it to detect arbitrarily long entities. It is trained on the NuNER v2.0 dataset, which merges subsets of Pile and C4 annotated by LLMs using NuNER’s procedure. NuNER Zero is reported to be the leading compact zero-shot NER model, with a +3.1% token-level F1-Score improvement over GLiNER-large-v2.1 on GLiNER’s benchmark.
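To illustrate why token classification places no cap on entity length, here is a minimal sketch of how per-token labels can be grouped into entities. It is not NuNER Zero's actual code: the function name `merge_token_labels`, the `"O"` label for non-entity tokens, and the example tokens and labels are all assumptions made for illustration.

```python
from typing import List, Tuple


def merge_token_labels(tokens: List[str], labels: List[str]) -> List[Tuple[str, str]]:
    """Group consecutive tokens that share the same non-"O" label into entities.

    Hypothetical helper: "O" marks tokens outside any entity (an assumption,
    not something taken from the NuNER Zero model card).
    """
    entities: List[Tuple[str, str]] = []
    current_tokens: List[str] = []
    current_label = None
    for token, label in zip(tokens, labels):
        # Extend the running entity while the label stays the same.
        if label == current_label and label != "O":
            current_tokens.append(token)
            continue
        # Label changed: flush the running entity, if there is one.
        if current_label not in (None, "O"):
            entities.append((" ".join(current_tokens), current_label))
        current_tokens, current_label = [token], label
    # Flush an entity that runs to the end of the text.
    if current_label not in (None, "O"):
        entities.append((" ".join(current_tokens), current_label))
    return entities


# Made-up example: one label per token, as a token classifier would emit.
tokens = "The International Committee of the Red Cross met in Geneva".split()
labels = ["O"] + ["organization"] * 6 + ["O", "O", "location"]

print(merge_token_labels(tokens, labels))
```

Because entities are built by extending a run of identically labeled tokens, nothing bounds their length. The trade-off in this simplified scheme is that two adjacent entities of the same type would be merged into one.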

NuNER Zero 4k: The long-context (4k tokens) version of NuNER Zero. It is generally less performant than NuNER Zero but can outperform it in applications where context size matters.

NuNER Zero-span: The span-prediction version of NuNER Zero. It performs slightly better than NuNER Zero but cannot detect entities longer than 12 tokens.
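The 12-token limit follows naturally from how span-based models typically work: they score a fixed set of candidate spans, each no wider than some maximum. The sketch below enumerates such candidates. It assumes NuNER Zero-span follows this general pattern; the function `candidate_spans` and the constant `MAX_SPAN_WIDTH` are hypothetical names, and only the value 12 comes from the article.

```python
from typing import List, Tuple

MAX_SPAN_WIDTH = 12  # limit stated for NuNER Zero-span


def candidate_spans(num_tokens: int, max_width: int = MAX_SPAN_WIDTH) -> List[Tuple[int, int]]:
    """Return every (start, end) token span, end exclusive, of width <= max_width."""
    return [
        (start, end)
        for start in range(num_tokens)
        for end in range(start + 1, min(start + max_width, num_tokens) + 1)
    ]


spans = candidate_spans(num_tokens=20)
print((0, 12) in spans)  # a 12-token span is a candidate
print((0, 13) in spans)  # a 13-token span is never scored
```

An entity wider than the maximum is never among the candidates, so a span-based model cannot predict it regardless of how confident it would be.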

The key features of these three models are:

NuNER Zero: Derived from NuNER; suited to inputs of moderate length.

NuNER Zero 4k: A long-context variant of NuNER Zero that performs better in scenarios where context size matters.

NuNER Zero-span: The span-prediction version of NuNER Zero; not suited to entities longer than 12 tokens.

In conclusion, NER is crucial in natural language processing, and custom models are typically built on transformer encoders pre-trained via MLM. LLMs like GPT-3 and GPT-4 can handle NER through prompting, but they come with high inference costs and potential privacy concerns. The NuMind team proposes using LLMs to reduce human annotation by having them annotate a multi-domain dataset on which a smaller model is pre-trained. They introduce three NER models: NuNER Zero, a compact zero-shot model; NuNER Zero 4k, which supports longer contexts; and NuNER Zero-span, a span-prediction version with slightly better performance that is limited to entities of at most 12 tokens.

Sources

https://huggingface.co/numind/NuNER_Zero-4k

https://huggingface.co/numind/NuNER_Zero

https://huggingface.co/numind/NuNER_Zero-span

https://arxiv.org/pdf/2402.15343

https://www.linkedin.com/posts/tomaarsen_numind-yc-s22-has-just-released-3-new-state-of-the-art-activity-7195863382783049729-kqko/?utm_source=share&utm_medium=member_ios

The post NuMind Releases Three SOTA NER Models that Outperform Similar-Sized Foundation Models in the Few-shot Regime and Competing with Much Larger LLMs appeared first on MarkTechPost.
