As applications grow to serve more users with sensitive data, implementing robust security controls becomes critically important. One way to enhance security and meet complex access requirements is through row-level security. Row-level security allows you to limit data access at the row level based on user attributes.

PostgreSQL is one of the most popular open source relational database engines. It provides support for row-level security through row-level security policies. These policies make sure users can only view or modify data they are explicitly authorized to. When using row-level security in multi-tenant applications, consideration must be given to a proper connection management strategy.

In PostgreSQL, each new database connection requires the creation of a new operating system process (a fork of the parent PostgreSQL process). As the number of connections increase, the overhead of this process creation can become a bottleneck and degrade performance. Improper connection management, particularly in elastically scaling compute environments, is one of the primary causes of performance issues when working with PostgreSQL databases. The RDS Data API removes the need to manage connections or deal with complex connection pooling logic. To learn more about the RDS Data API, see Introducing the Data API for Amazon Aurora Serverless v2 and Amazon Aurora provisioned clusters.

In this post, we show you how to implement row-level security on Amazon Aurora PostgreSQL-Compatible Edition using the RDS Data API and PostgreSQL features. By following along, you’ll understand how to build secure and scalable multi-tenant PostgreSQL architectures on AWS.

The patterns used in the post work for both Aurora PostgreSQL clusters with provisioned instances and Amazon Aurora Serverless v2 instances. For more details on the RDS Data API, see Using RDS Data API.

Solution overview

In the following sections, we provide sample code to set up and test row-level security, and use the RDS Data API. You can run the steps using a command line tool, such as psql, or on the Amazon RDS console using the query editor.

Prerequisites

To follow along with the steps in this post, you need the following resources:

An AWS account with AWS Identity and Access Management (IAM) permissions to create an Aurora PostgreSQL database.

An Aurora PostgreSQL instance (provisioned or Serverless v2) version 13.11 or higher. For the full list of supported versions, see Data API with Aurora PostgreSQL Serverless v2 and provisioned.

Optionally, the PostgreSQL psql command line tool. For installation instructions, see Connecting to a DB instance running the PostgreSQL database engine.

Python installed with Boto3 library.

Using the RDS Data API does not require direct access to the database, so you can run the Python script from your local development environment. The machine where psql is run requires connectivity to the Aurora cluster.

Create a shared tenant schema

Create a tenant table schema to implement row-level security. The tenant table is shared with multiple tenants and isolation must be enforced such that a given tenant is allowed to read or write only their own account_balance. Run the following commands to create and populate the table:

CREATE TABLE tenant ( tenant_id integer PRIMARY KEY, tenant_name text, account_balance numeric );

INSERT INTO tenant VALUES (1, ‘Tenant1’, 50000), (2, ‘Tenant2’, 60000), (3, ‘Tenant3’, 40000);

Create a tenant isolation policy

The schema used is part of a multi-tenant software as a service (SaaS) application. A policy is created against the tenant_id to enforce tenant isolation. The policy uses the current_setting() function to compare the supplied tenant_id with the ID set in the session. The policy is defined as follows:

CREATE POLICY tenant_policy ON tenant USING (tenant_id = current_setting(‘tenant.id’)::integer);

Enable row-level security

Next, enable the row-level security policy on the tenant table and any other table that you want to enforce tenant isolation:

ALTER TABLE tenant enable row level security;

Create the application user

The examples in this post use a single shared application user for all the tenants. When using this pattern, create an AWS Secrets Manager secret to store the login information for each user that will use the RDS Data API. For more details about alternative data access patterns, see Choose the right PostgreSQL data access pattern for your SaaS application. Create the application user with the following commands:

CREATE ROLE app_user with login password ‘<any-strong-password>’;

GRANT SELECT on tenant to app_user;

Test the row-level isolation

Finally, test the row-level isolation using the following query when logged in as the app user (these steps must be run using psql):

— Change the user to app_user

SET ROLE to app_user;

— Test without setting tenant.id variable

SELECT * FROM tenant;

ERROR: unrecognized configuration parameter “tenant.id”

— Set the current tenant.id to 1

SET tenant.id=1;

— Verify only rows with tenant_id = 1 is returned

SELECT * FROM tenant;

tenant_id | tenant_name | account_balance

———–+————-+—————–

1 | Tenant1 | 50000

(1 row)

Think of the row-level security policy as an automatic WHERE clause that gets applied to the SELECT statement at runtime. As a best practice, you should still explicitly include a WHERE clause in your SQL statement to filter to only that tenant’s data. This provides an additional layer of defense against misconfiguration.

Traditional connection management

Before exploring the RDS Data API, it’s important to understand traditional connection management approaches. Starting with the application, the data access layer needs to use a driver to connect to the database. This means you must include the driver in your application and keep it up to date. If you connect to the database via SSL, you need to bundle the certificates as part of the application to create the connection.

As you onboard multiple tenants, there can be many open connections to the database server. This results in opening and closing connections at a high rate, exhausting database memory and compute resources.

To prevent the overhead of opening and closing connections both on the database and the client, you typically introduce a client connection pool. This allows you to lazily instantiate a pool of connections of a size you specify and reuse those connections. This reduces the overhead of opening and closing new connections. However, modern applications, particularly web applications, have high concurrency and often auto-scale, adding additional compute capacity to meet changing user activity. If each of these compute units has a client connection pool, this quickly adds strain on the database.

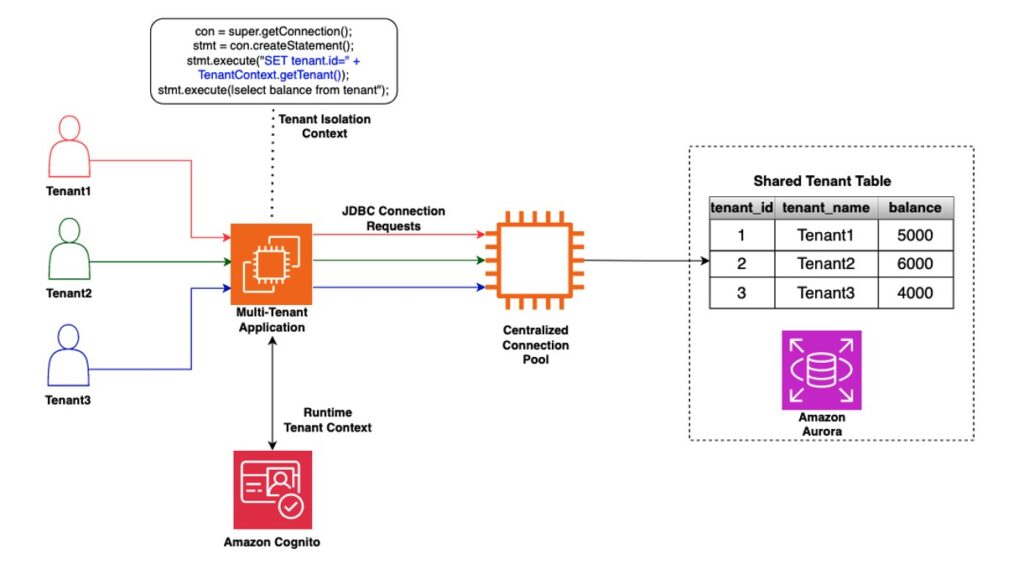

To reduce connection management overhead in distributed applications, a centralized connection pooling technology is used. Some popular options include Amazon RDS Proxy, pgBouncer or pgpool. The following diagram shows an example implementation.

The combination of application client pool and the centralized connection pooling is an effective database connection management strategy. One of the benefits of a centralized connection pooler is that you don’t have to make any changes to the application; your data access layer can continue to use the same connection logic transparently. It’s important to be aware of the following considerations with this approach:

It introduces a potential bottleneck or single point of failure

It requires monitoring and tuning of the client and centralized connection pool sizes as tenant numbers and activity scale

Network connectivity is needed from the application to the centralized connection pooler and from the connection pooler to the database

It has a fixed cost regardless of varying usage patterns and utilization

It requires database drivers to be included with the application

Using the RDS Data API

With the RDS Data API, applications don’t have to manage the pooling and sharing of connections. This removes the driver layer and remove the need to manage both client and centralized connection pools between the application and the database. Applications can use simple HTTP API calls to run SQL statements against an HTTP endpoint.

With the database schema configured for row-level security, it is queried securely through the RDS Data API, and the tenant isolation is enforced. The API allows you to run SQL statements without managing database connections. The following sample Python code passes in a user ID, sets it in the context, runs the query, and retrieves the filtered data:

import boto3

cluster_arn = ‘<cluster arn>’

secret_arn = ‘<secret arn>’

rdsData = boto3.client(‘rds-data’)

db_name = ‘postgres’

def get_tenant_id_from_context():

return 2;

tr = rdsData.begin_transaction(

resourceArn = cluster_arn,

secretArn = secret_arn,

database = db_name)

rdsData.execute_statement(resourceArn=cluster_arn,

secretArn=secret_arn,

database=db_name,

sql=’set tenant.id = {0}’.format(get_tenant_id_from_context()),

transactionId = tr[‘transactionId’])

response = rdsData.execute_statement(resourceArn=cluster_arn,

secretArn=secret_arn,

database=db_name,

sql=’select tenant_name from tenant’,

transactionId = tr[‘transactionId’])

cr = rdsData.commit_transaction(

resourceArn = cluster_arn,

secretArn = secret_arn,

transactionId = tr[‘transactionId’])

Because calls to execute_statement are independent, an explicit transaction is used to maintain the session state changes. If the transaction is not used, there is no guarantee these API calls will use the same connection, and the session state changes would be cleared. In the sample code, for brevity, the tenant_id attribute is hard-coded. In a production application, you would retrieve the tenant_id from the authentication token (usually a JWT token). For more details on this part of the process, see Building a Multi-Tenant SaaS Solution Using AWS Serverless Services. When the sample code is run, only the data for the tenant that is set in the context is returned:

Admin:~/environment $ python3 main.py

[[{‘stringValue’: ‘Tenant2’}]]

If the parameter value is changed, data is returned for a different tenant:

def get_tenant_id_from_context():

return 3;

[[{‘stringValue’: ‘Tenant3’}]]

Applications can continue to use the row-level security capability using the RDS Data API for multi-tenant data isolation. There will be no JDBC driver required because the application requests are simple HTTP requests that are stateless. The following diagram shows how multi-tenant applications are using HTTP APIs to interact with the database without the need for a proxy layer for connection pooling.

When using the RDS Data API as per the previous Python sample, you may prefer to avoid handling the explicit begin and commit of transactions in your application. An alternate way is to create a function to combine both the SET statement and the actual query to fetch tenant data. This approach of using a function allows you to run multiple statements together and helps you overcome the lack of multi-statement support with RDS Data API for implicit transaction handling:

CREATE OR REPLACE FUNCTION get_tenant_data(p_tenant_id integer)

RETURNS SETOF text AS

$func$

BEGIN

EXECUTE format(‘SET “tenant.id” = %s’, p_tenant_id);

RETURN QUERY

SELECT tenant_name

FROM tenant;

END

$func$ LANGUAGE plpgsql;

SET ROLE to app_user;

SELECT get_tenant_data(2);

tenant_id | tenant_name | account_balance

———–+————-+—————–

1 | Tenant2 | 60000

(1 row)

Now that the function has been created and tested to work, the following Python code is run to use the RDS Data API, invoke the function, and enforce the row-level security for tenant isolation:

import boto3

cluster_arn = ‘<cluster arn’

secret_arn = ‘<secret arn>’

rdsData = boto3.client(‘rds-data’)

db_name = ‘postgres’

def get_tenant_id_from_context():

return 2;

param1 = {‘name’:’id’, ‘value’:{‘longValue’: get_tenant_id_from_context()}}

paramSet = [param1]

response = rdsData.execute_statement(resourceArn=cluster_arn,

secretArn=secret_arn,

database=db_name,

sql=’select get_tenant_data(:id::integer)’,

parameters = paramSet)

print(response[‘records’])

RDS Data API cost considerations

The RDS Data API is charged per million requests. Each request is metered in 32 KB increments. In the following example of a multi-tenant system, each tenant has different access patterns, and therefore the cost per tenant for using the API can vary between different tenant profiles.

Tenant 1 has the following pattern:

Ten requests per second with less than 32 KB = 36,000 requests per hour x 730 hours in a month = 26.28 million requests per month.

Using the us-east-1 pricing at the time of writing ($0.35 per 1 million requests, up to 1 billion requests) = approximately $9.20 per month for tenant 1’s usage.

Tenant 2 has the following pattern:

Six requests per second greater than 32 KB in size but less than 64 KB (perhaps tenant 2 runs lots of verbose reports that have larger payloads) = 21,600 requests per hour x 730 hours in a month = 15.76 million requests per month.

This is metered as 31.52 million because each request consumes two 32 KB requests. This is equal to approximately $11.03 for tenant 2’s usage.

Because the cost per tenant usage can vary, tenant metering and consumption-based billing is an important aspect of your SaaS control plane. For full pricing details, see Amazon Aurora Pricing.

The RDS Data API has no limit on the number of concurrent queries that can be run. However, each query will consume database resources. Therefore, it’s important to make sure that rate-limiting controls are in place to maintain an equitable tenant experience. These rate-limiting controls can be implemented in the API, for example using Amazon API Gateway, to enforce a limit on the number of requests that can be sent by a tenant in a given period of time. Additional controls can be implemented at the data access layer to queue requests and process higher priority requests first. For more details, see Throttling a tiered, multi-tenant REST API at scale using API Gateway.

The following diagram shows an example implementation using API Gateway for rate limiting in a microservice. It also includes a dedicated metering microservice for capturing the RDS Data API execute_statement invocations and recording them in an Amazon DynamoDB database. These metering metrics can be used for monitoring the resource consumption per tenant and also for building the per-tenant cost profile.

Alternatively, you can log each API call to ExecuteStatement in AWS CloudTrail. You can use the CloudTrail logs to review the users and applications that are running queries. This metric along with other tenant metrics can be used to arrive at the per-tenant cost. The per-tenant cost is useful for the billing and chargeback of your tenants to their actual usage of resources. For more information, refer to Logging RDS Data API calls with AWS CloudTrail.

Clean up

To avoid incurring future charges, delete all the resources created in the prerequisites section.

Conclusion

In this post, we showed how you can implement row-level security on Aurora using PostgreSQL’s data access policies. The RDS Data API enables you to securely query the filtered data without having to manage connections or set up connection pools. You can extend this approach to build multi-tenant applications like SaaS platforms. Row-level security improves the security posture of a multi-tenant application and unlocks the ability to use shared databases for workloads with complex access requirements.

To learn more, see Using RDS Data API.

About the Authors

Josh Hart is a Principal Solutions Architect at Amazon Web Services (AWS). He works with ISV customers in the UK to help them build and modernize their SaaS applications on AWS.

Nihilson Gnanadason is a Senior Solutions Architect at Amazon Web Services (AWS) with a focus on databases and SaaS architectures. He works with ISVs in the UK to build, run, and scale their software products on AWS.

Source: Read More