AWS Database Migration Service (AWS DMS) is a managed migration and replication service that helps move your databases to AWS securely with minimal downtime and zero data loss. You can use this service for both homogeneous or heterogeneous migrations.

Data migration challenges can vary depending on the size of the data, complexity of the data structure, time constraints for migration, and availability of skilled resources. It sometimes requires a lot of time to estimate efforts, and then more time to convert and migrate the data. Data migration is typically part of an entire system migration.

AWS DMS can mitigate and address many of the potential challenges during a data migration. It supports the migration of most relational database management systems ,such as Oracle, PostgreSQL, MySQL, SQL Server, Sybase, and Db2. Migrating databases with large tables ranging from hundreds of gigabytes to terabytes can take time due to the amount of data that has to be migrated and the need for additional system resources to process the data.

In this post, we demonstrate how you can speed up database migrations by using AWS DMS parallel load options to make data loading more efficient for selected relational tables, views, and collections.

Solution overview

To speed up data migration and make it more efficient, we can use parallel load for selected relational tables, views, and collections. By default, AWS DMS accommodates 8 tables to be processed in parallel, up to a maximum of 49.

In this post, we focus on how to expedite the data load for a single table by using multiple parallel threads.

The following table summarizes our proposed AWS DMS options.

Use Case

AWS DMS Option

Migrate a subset of table data

Source filter

Migrate a complete table with partitions

Parallel load with partitions-auto

Migrate a table with partitions that contain subpartitions

Parallel load with subpartitions-auto

Migrate a table with selected partitions that contain subpartitions

Parallel load with partition-list and subpartitions-auto

Migrate a table with selected partitions

Parallel load setting with partitions-list

Migrate an unpartitioned table

Parallel load setting with the ranges option

The solution we discuss in this post works for all the supported databases; however, for this post, we demonstrate using Oracle Database.

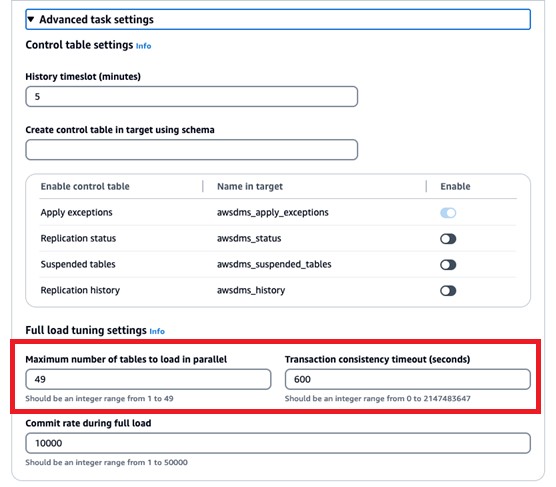

AWS DMS has a limit of 49 parallel processes for handling table partitions. This means that at any time it can process a maximum of 49 table partitions. You can manage this under the Advanced task settings section on the AWS DMS console.

Alternatively, you can use the MaxFullLoadSubTasks parameter under FullLoadSettings in JSON format.

In the following sections, we examine a use case of migrating a huge table with 1 tparaerabyte in size. We discuss the options, challenges, and recommendations while performing data migration using AWS DMS. These options are applicable only for full-load migrations.

Prerequisites

To get started with, you must have the following prerequisites:

An active AWS account

A source database and target database

An AWS DMS replication instance with the specified source and target endpoints

A table with 124 daily partitions, and each partition has 256 subpartitions

The below file contains an Oracle table definition for our use case.

Source filter

AWS DMS provides the filters option to limit the rows and break down huge tables into multiple portions by creating a WHERE clause. You can create multiple tasks using a WHERE clause, and these tasks work independently and can run concurrently.

For example, our table TEST_PART has 124 daily partitions, and each partition has 256 subpartitions. We need to find an efficient filter that we can use to break up the table. It’s always a good idea to break up a table by the same number of rows. COB_Date is the suitable column filter for the TEST_PART table as it divides the rows equally.

The table data migration status can be viewed from Table statistics as shown below:

The following screenshot shows an example of the table log from AWS DMS Task Logs.

The source filter selects a subset of the data from the source table, and only one sub-task is performing the load.

As of this writing, AWS DMS parallelism options combined with a source filter is not supported. Internally, if a source filter along with parallelism is provided, then parallelism will be performed and the source filter will be applied later. This will not yield the expected results when a source filter is applied.

To check this, let’s try using a source filter with a subpartition. In the following example, we select three daily partitions, and each partition has 256 subpartitions:

After running the full load, the logs shows that 49 subtasks are started and AWS DMS is scanning 31,744 segments (124 partitions, each with 256 subpartitions).

AWS DMS scans each subpartition, then it applies the source filter rule, which can be time-consuming when loading large tables.

We recommend using a source filter when you only need to migrate a subset of the data.

Parallel load with partitions-auto

The partitions-auto option can be useful when you have data in all partitions and want to migrate the complete table data. AWS DMS scans each segment and, in parallel, loads 49 segments based on the AWS DMS task setting maxfullloadsubtask. See the following code:

This option offers performance benefits because it uses auto partition and parallelism internally. In the following example, 49 threads work on 49 partitions, and it loads the data to the target

The following screenshot shows the migration logs.

We recommend using parallel load with partitions-auto when you need to migrate large table data that is partitioned at the source end.

Parallel load with subpartitions-auto

The subpartitions-auto option can be useful when you have a limited number of partitions and each partition as multiple subpartitions. This option is suitable when you want to migrate only selected subpartitions in parallel. In this use case, the AWS DMS parallel load setting will load subpartitions in parallel. See the following code:

AWS DMS scans each subpartition (31,744) and loads 49 segments in parallel based on our task setting provided in maxfullloadsubtask.

The following screenshots show our logs and table statistics.

We recommend using parallel load with subpartitions-auto when you need to migrate large table data that has limited partitions and each partition has huge subpartitions at the source end.

Parallel load with partition-list and subpartitions-auto

You can use partition-list and subpartitions-auto to migrate specified partitions data that have huge subpartitions. This option is suitable when you want to migrate only the selected partitions that have multiple subpartitions and only migrate those selected partitions in parallel. In this use case, the AWS DMS parallel load setting will load subpartitions in parallel. AWS DMS applies parallel threads at the subpartition level.

Use the following query to find the subpartition names:

Use the output of this query under the table mapping subpartitions section in the following code snippet. The following code contains 256 subpartitions based on our use case:

The following screenshots show our logs and table statistics.

We recommend using parallel load with partition-list and subpartitions-auto when you need to migrate large table data with selected partitions that have multiple subpartitions at the source end.

Table parallel load setting with partitions-list

You can use the partitions-list option to migrate only the selected partitions from a table that contains a huge number of partitions. This option is suitable when you want to migrate only selected partitions that have multiple partitions and only migrate those selected partitions in parallel. Here, each parallel thread works on an individual partition. See the following code:

In this example, we migrate 5 partitions based on the partition-list provided in the sample table containing 124 partitions. Only five threads are working on the table.

The following screenshots show our logs and table statistics.

We recommend using parallel load with partition-list when you need to migrate specific partitions from the table at the source end. You can use a source filter with partition-list, but AWS DMS won’t apply parallel threads on the table.

Table parallel load setting with the ranges option

You can use the ranges option if the table is not partitioned and you want to use parallel threads. If the table has fewer partitions and a huge amount of data, the ranges option is better than other AWS DMS parallel options. Based on the multiple test cases and results obtained, we observed that the ranges option is more beneficial than other parallelism options for loading data using AWS DMS because the querying on metadata tables took a long time while using parallelism.

Identify the right column that splits the table data equally. In the following example, we use the ID column from the table Test_Part, which has a primary key that has been identified for splitting data from the table equally.

Query to identify the ranges :

The query uses the following parameters:

ID – This represents the column name.

ntile(x) – x represents the number of equal ranges. In our example, it’s 10.

y – This represents the total number of rows in the table.

The following screenshot shows our output.

With this query, we are able to divide the table into 10 equal partitions and use parallel load with the ranges option:

The following screenshots show our logs and table statistics.

We recommend using the ranges option if the table is not partitioned and you want to use parallel threads. If the table has fewer partitions or a lot of data, the ranges option is better than other AWS DMS parallel options.

MongoDB only supports the auto segmentation and range segmentation options of a parallel full load. Amazon DocumentDB (with MongoDB compatibility) only supports the auto segmentation and range segmentation options of a parallel full load using the maxfulllloadsubtasks parameter.

Conclusion

In this post, we discussed AWS DMS best practices for moving large tables with table parallelism settings under full load, which helps address common challenges faced while loading data using AWS DMS. AWS DMS offers different parallelism options; you need to choose your task settings based on the volume of the table data, partitions, subpartitions, and filters. The options we discussed in this post are also applicable for LOB data types; however, you should consider the limitations of AWS DMS while handling the LOB data load.

For more information about source and target engine limitations, refer to Sources for data migration and Targets for data migration, respectively. For more information about AWS DMS and task parameters, refer to Specifying task settings for AWS Database Migration Service tasks.

About the Authors

Bhavani Akundi is a Senior Lead Database consultant at AWS Professional Services. She helps AWS customers migrate and modernize their databases and applications to AWS. Outside of work, Bhavani likes spending time with family and visiting different places and listening to melodious songs.

Nagarjuna Paladugula is a Senior Cloud Support Engineer at AWS Professional Services, specialized in Oracle, Amazon RDS for Oracle, and AWS DMS services. He has over 19 years’ experience on different database technologies, and uses his experience to offer guidance and technical support to customers to migrate their databases to the AWS Cloud. Outside of work, Nagarjuna likes traveling, watching movies and web series, and running.

Source: Read More