Mixture-of-experts (MoE) architectures use sparse activation to enable the scaling of model sizes while preserving high training and inference efficiency. However, despite this efficient scaling, training the router network creates the challenge of optimizing a non-differentiable, discrete objective. Recently, an MoE architecture called SMEAR was introduced, which is fully differentiable and merges experts softly in the parameter space. SMEAR is very efficient, but its effectiveness has been shown only in small-scale fine-tuning experiments on downstream classification tasks.
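To make the idea of merging experts in the parameter space concrete, here is a minimal NumPy sketch. It is an illustration under stated assumptions, not the SMEAR or Lory implementation: the function name `soft_merged_ffn`, the two-matrix ReLU feed-forward layout, and all shapes are hypothetical. The router produces a probability for each expert, the expert weight matrices are averaged with those probabilities, and the input passes once through the single merged expert. Nothing is selected with a discrete top-k step, so every operation is differentiable.

```python
import numpy as np

def softmax(x):
    # Numerically stable softmax over a 1-D vector of logits.
    z = x - np.max(x)
    e = np.exp(z)
    return e / e.sum()

def soft_merged_ffn(x, router_w, experts_w1, experts_w2):
    """Hypothetical soft-merging layer (illustrative only).

    x:          (d,)       routing input and layer input
    router_w:   (d, E)     router projection
    experts_w1: (E, d, h)  first FFN matrix of each expert
    experts_w2: (E, h, d)  second FFN matrix of each expert
    """
    probs = softmax(x @ router_w)                   # (E,) routing weights
    w1 = np.tensordot(probs, experts_w1, axes=1)    # (d, h) weighted average of experts
    w2 = np.tensordot(probs, experts_w2, axes=1)    # (h, d)
    hidden = np.maximum(x @ w1, 0.0)                # ReLU, applied once to the merged expert
    return hidden @ w2, probs
```

The cost of the forward pass matches a single expert plus the weighted average of the parameters, which is why merging per token would be expensive and merging per example or per segment is attractive.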

Sparsely activated MoE models have emerged as a useful method to scale up model sizes efficiently. The sparse MoE architecture has been adapted into transformer models to achieve better performance on machine translation. Traditional MoE models are trained to route input data to expert modules, resulting in a non-differentiable, discrete decision-learning problem. Further, these existing models are trained with top-1 or top-2 routing strategies together with a designed load-balancing objective. Training MoE models is complicated and can suffer from training instability, expert under-specialization, and inefficient training.

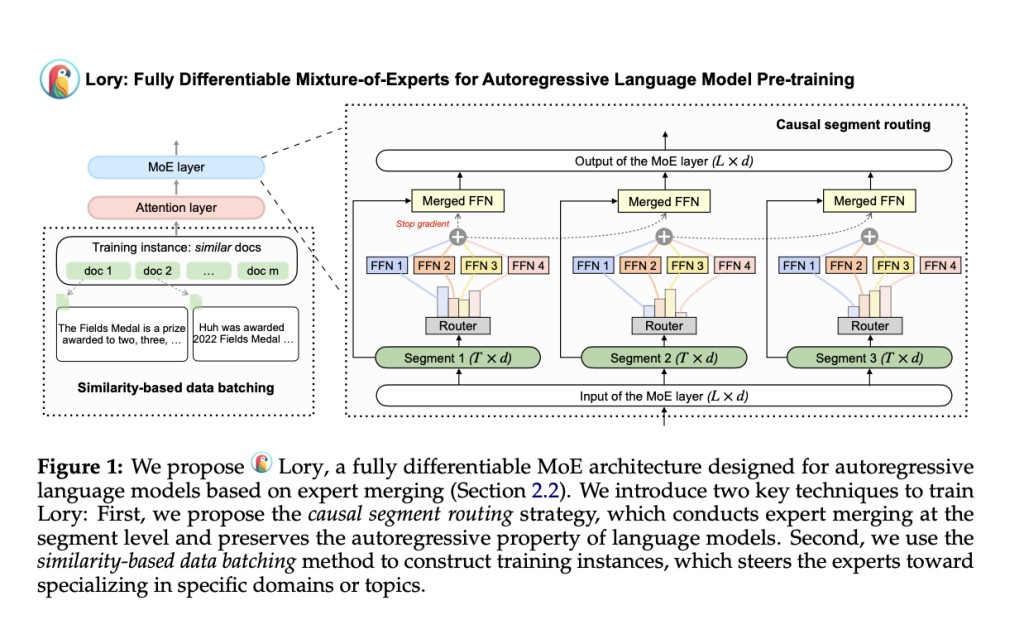

Researchers from Princeton University and Meta AI introduced Lory, a method to scale fully differentiable MoE architectures to autoregressive language model pre-training. Lory consists of two main techniques: (a) a causal segment routing strategy that keeps expert merging operations efficient while maintaining the autoregressive nature of language models (LMs), and (b) a similarity-based data batching method that supports expert specialization by grouping similar documents during training. Also, despite using segment-level routing, Lory models achieve competitive performance compared with state-of-the-art MoE models that use token-level routing.
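Technique (b) can be pictured as reordering the corpus so that neighbouring documents are similar before they are concatenated into training sequences. The sketch below is one simple way to do that, a greedy nearest-neighbour chain over document embeddings. It is an assumption made for illustration and not the authors' batching procedure: `order_by_similarity` is a hypothetical helper, and it assumes each document already has a non-zero embedding vector.

```python
import numpy as np

def order_by_similarity(doc_vecs, start=0):
    """Greedy chain (illustrative): repeatedly append the unvisited document
    whose embedding is most cosine-similar to the last one in the chain."""
    vecs = np.asarray(doc_vecs, dtype=float)
    unit = vecs / np.linalg.norm(vecs, axis=1, keepdims=True)  # assumes non-zero vectors
    sim = unit @ unit.T                                         # pairwise cosine similarity
    order = [start]
    remaining = set(range(len(vecs))) - {start}
    while remaining:
        last = order[-1]
        nxt = max(remaining, key=lambda j: sim[last, j])
        order.append(nxt)
        remaining.remove(nxt)
    return order

# Two rough "topics": documents 0 and 2 point one way, 1 and 3 the other.
docs = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [0.1, 0.9]]
print(order_by_similarity(docs))  # [0, 2, 3, 1]
```

Concatenating documents in this order means consecutive segments tend to share a topic, so the routing signal taken from one segment is informative about the next.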

Causal segment routing, the first technique, splits a sequence of input tokens into smaller segments of fixed length. The preceding segment is used to compute the router’s weights and build the merged expert for the subsequent segment. During inference, the segment-level routing decision is made using the prompt. Segment-level routing alone can lead to insufficient specialization of experts, because the text data for pre-training language models usually concatenates random sets of documents. So, the second technique, similarity-based data batching for MoE training, overcomes this challenge by grouping similar documents to create sequential segments. Training LMs on data batched this way makes the training of expert routing more effective.
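The routing step described above can be sketched in a few lines of NumPy. This is a simplified illustration, not Lory's code: `causal_segment_routing` is a hypothetical name, each expert is reduced to a single weight matrix, the router input is assumed to be the mean hidden state of the previous segment, and the first segment (which has no predecessor) falls back to uniform weights as a placeholder choice.

```python
import numpy as np

def softmax(x):
    # Numerically stable softmax over a 1-D vector of logits.
    z = x - np.max(x)
    e = np.exp(z)
    return e / e.sum()

def causal_segment_routing(hidden, router_w, experts_w, seg_len):
    """Illustrative sketch of segment-level routing with merged experts.

    hidden:    (T, d)         token representations for one sequence
    router_w:  (d, E)         router projection
    experts_w: (E, d, d_out)  one weight matrix per expert (simplification)
    seg_len:   fixed segment length
    """
    T, _ = hidden.shape
    E, _, d_out = experts_w.shape
    out = np.zeros((T, d_out))
    weights = []
    for start in range(0, T, seg_len):
        if start == 0:
            # Assumption: no previous segment exists, so use uniform weights.
            probs = np.full(E, 1.0 / E)
        else:
            # Route using only the previous segment, so no future token is seen.
            prev = hidden[start - seg_len:start]
            probs = softmax(prev.mean(axis=0) @ router_w)
        merged = np.tensordot(probs, experts_w, axes=1)  # (d, d_out) merged expert
        out[start:start + seg_len] = hidden[start:start + seg_len] @ merged
        weights.append(probs)
    return out, weights
```

Because the merged expert for a segment depends only on earlier tokens, changing a later segment leaves the routing weights and outputs of earlier segments unchanged, which is the property that keeps the model autoregressive. The merge also happens once per segment rather than once per token, which is where the efficiency comes from.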

Lory shows strong results in several areas:

Training efficiency and convergence: Lory achieves an equivalent loss level with less than half of the training tokens for 0.3B and 1.5B models, indicating better performance with the same training compute.

Language modeling: The proposed MoE models outperform the dense baseline in all domains, achieving lower perplexity. For example, compared to the 0.3B dense model, the 0.3B/32E models achieve a relative improvement of 13.9% on Books.

Downstream tasks: The 0.3B/32E model achieves an average performance increase of +3.7% in common sense reasoning, +3.3% in reading comprehension, +1.5% in closed-book QA, and +11.1% in text classification.

In conclusion, Princeton University and Meta AI researchers proposed Lory, a fully differentiable MoE model designed for autoregressive language model pre-training. Lory consists of two main techniques: a causal segment routing strategy and a similarity-based data batching method. The proposed method outperforms its dense counterpart on language modeling and downstream tasks, and the trained experts are highly specialized and capable of capturing domain-level information. Future work includes scaling up Lory and integrating token-level and segment-level routing by developing efficient decoding methods for Lory.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Researchers from Princeton and Meta AI Introduce ‘Lory’: A Fully-Differentiable MoE Model Designed for Autoregressive Language Model Pre-Training appeared first on MarkTechPost.
