Multimodal large language models (MLLMs) represent a cutting-edge intersection of language processing and computer vision, tasked with understanding and generating responses that consider both text and imagery. These models, evolving from their predecessors that handled either text or images, are now capable of tasks that require an integrated approach, such as describing photographs, answering questions about video content, or even assisting visually impaired users in navigating their environment.

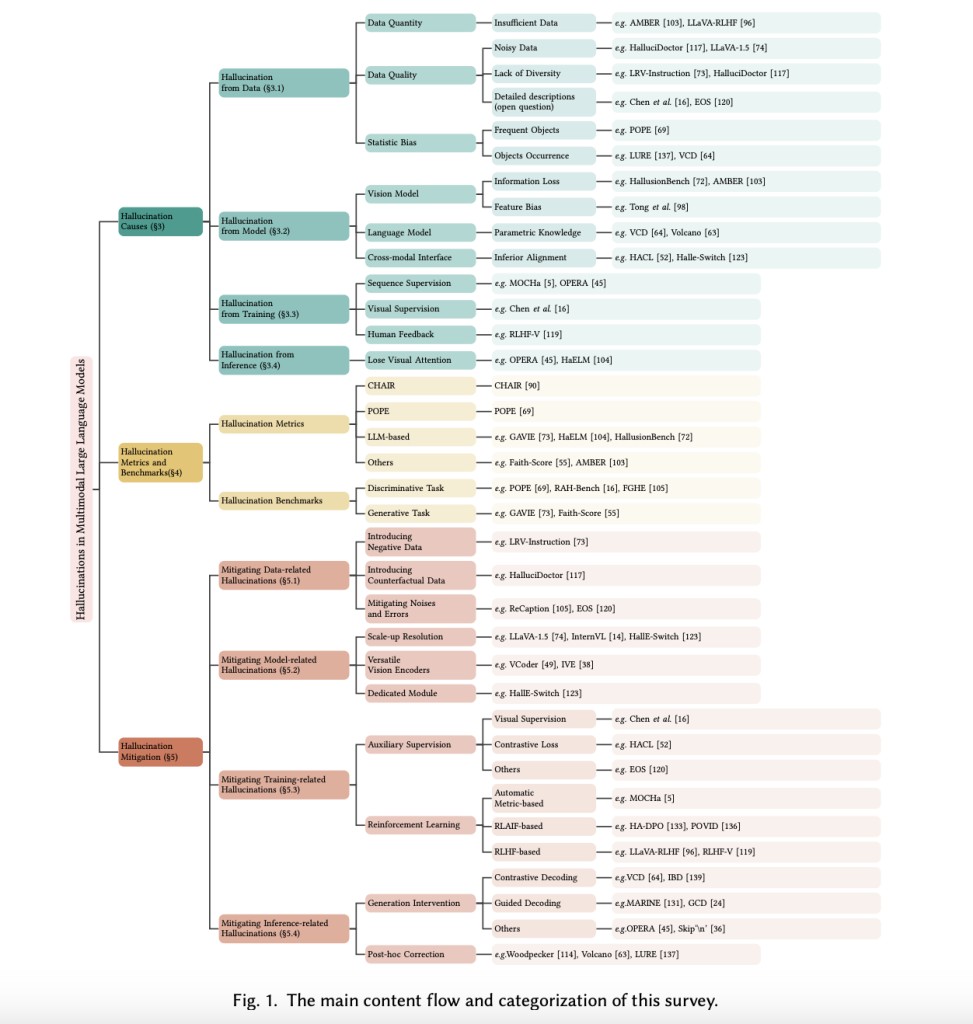

A pressing issue these advanced models face is known as ‘hallucination.’ This term describes instances where MLLMs generate responses that seem plausible but are factually incorrect or not grounded in the visual content they are supposed to analyze. Such inaccuracies can undermine trust in AI applications, especially in critical areas like medical image analysis or surveillance systems, where precision is paramount.

Efforts to address these inaccuracies have traditionally focused on refining the models through sophisticated training regimes built on vast annotated image and text datasets. Despite these efforts, the problem persists, largely because of the inherent difficulty of teaching machines to interpret and correlate multimodal data accurately. For instance, a model might describe elements that are not present in a photograph, misinterpret the actions in a scene, or fail to recognize the context of the visual input.

Researchers from the National University of Singapore, Amazon Prime Video, and AWS Shanghai AI Lab have surveyed methodologies to reduce hallucinations. One of the approaches studied adjusts the standard training paradigm by introducing novel alignment techniques that strengthen the model’s ability to tie specific visual details to accurate textual descriptions. This method also involves a critical evaluation of data quality, focusing on the diversity and representativeness of the training sets to prevent the common data biases that lead to hallucinations.

Quantitative improvements in several key performance metrics underscore the efficacy of the studied approaches. For instance, in benchmark tests involving image caption generation, the refined models showed a 30% reduction in hallucination incidents compared to their predecessors. Their accuracy in answering visual questions improved by 25%, reflecting a better grasp of how visual and textual information relate.
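To make a figure like a "reduction in hallucination incidents" concrete, here is a minimal Python sketch of one way such a number could be computed. The article does not say how incidents were counted, so everything here is an assumption for illustration: the function names `hallucination_rate` and `relative_reduction` are hypothetical, the object lists are made-up toy data, and the measure (share of mentioned objects that are absent from the image annotations) is a simple stand-in rather than the metric used in the survey.

```python
def hallucination_rate(mentioned, present):
    """Fraction of mentioned objects that are absent from the image annotations.

    Assumed, simplified measure: an object counts as hallucinated if the
    caption mentions it but the annotations do not list it.
    """
    mentioned = set(mentioned)
    if not mentioned:
        return 0.0
    return len(mentioned - set(present)) / len(mentioned)


def relative_reduction(before, after):
    """Relative drop from `before` to `after`, as a fraction of `before`."""
    if before == 0:
        raise ValueError("baseline rate must be non-zero")
    return (before - after) / before


# Toy, made-up data: objects annotated in one image, and objects named
# in captions from a hypothetical baseline model and refined model.
present = {"dog", "ball", "grass"}
baseline_caption_objects = ["dog", "ball", "frisbee", "child"]
refined_caption_objects = ["dog", "ball", "grass", "frisbee"]

baseline = hallucination_rate(baseline_caption_objects, present)
refined = hallucination_rate(refined_caption_objects, present)
reduction = relative_reduction(baseline, refined)

print(f"baseline rate: {baseline:.2f}")
print(f"refined rate:  {refined:.2f}")
print(f"relative reduction: {reduction:.0%}")
```

In this toy case the baseline caption names two absent objects out of four and the refined caption names one out of four, so the relative reduction is 50%. A real evaluation would average over many images and would need a reliable way to extract mentioned objects from free-form captions.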

In conclusion, the review examines the significant challenge of hallucination in multimodal large language models, which has been a stumbling block in realizing fully reliable AI systems. The proposed solutions not only advance the technical capabilities of MLLMs but also broaden their applicability across various sectors, promising a future where AI can be trusted to interpret and interact with the visual world accurately. This body of work charts a course for future developments in the field and serves as a reference point for ongoing improvements in AI’s multimodal comprehension.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post A Survey Report on New Strategies to Mitigate Hallucination in Multimodal Large Language Models appeared first on MarkTechPost.
