The discipline of computational mathematics continuously seeks methods to bolster the reasoning capabilities of large language models (LLMs). These models play a pivotal role in diverse applications ranging from data analysis to artificial intelligence, where precision in mathematical problem-solving is crucial. Enhancing these models’ ability to handle complex calculations and reasoning autonomously is paramount to advancing technological and scientific research.

One crucial challenge in this domain is the frequent logical and numerical errors LLMs make when tackling multi-step mathematical problems. Traditional approaches often integrate code interpreters to handle numerical calculations. However, such methods typically fall short when it comes to correcting the logical errors that emerge during step-by-step problem-solving.

Existing research in computational mathematics includes prompting frameworks such as Chain of Thought (CoT) and Program of Thought (PoT); PoT, like Program-Aided Language models (PAL), hands computation to an external code interpreter. The ReAct framework, DeepSeekMath, and MARIO likewise integrate coding environments to improve mathematical reasoning accuracy. Moreover, supervised fine-tuned models like MAmmoTH and MathCoder rely on annotated datasets to refine LLM capabilities, focusing on precise problem-solving. These approaches, however, often involve high costs and substantial manual dataset preparation.
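To make the code-interpreter idea concrete, here is a minimal Python sketch of the Program of Thought pattern: the model writes a short program instead of doing the arithmetic in prose, and an interpreter executes it. The question, the `generated_program` string, and the `run_program` helper are all hypothetical illustrations, not output or code from any of the systems named above.

```python
import math

# Hypothetical model output for the question:
# "A rectangle is 7.5 cm by 12 cm. How long is its diagonal?"
# In a PoT-style setup the LLM would emit this program as text.
generated_program = """
width = 7.5
height = 12
answer = math.sqrt(width ** 2 + height ** 2)
"""

def run_program(source: str) -> float:
    """Run generated code in a small namespace and return its `answer`.

    Assumption: the program stores its result in a variable named `answer`.
    Emptying `__builtins__` only limits accidents; it is NOT a secure sandbox.
    """
    namespace = {"math": math, "__builtins__": {}}
    exec(source, namespace)
    return namespace["answer"]

result = run_program(generated_program)
print(round(result, 4))
```

The interpreter guarantees the arithmetic is right, but it cannot tell whether the program models the question correctly, which is the logical-error gap described above.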

Researchers from Alibaba Group have introduced a novel approach named AlphaMath that leverages Monte Carlo Tree Search (MCTS) to automate the generation and refinement of training data for LLMs in mathematical reasoning. The method eliminates the need for manual data annotation, a common bottleneck in traditional model training, by combining pre-trained LLMs with algorithmic enhancements to autonomously produce and improve training inputs.

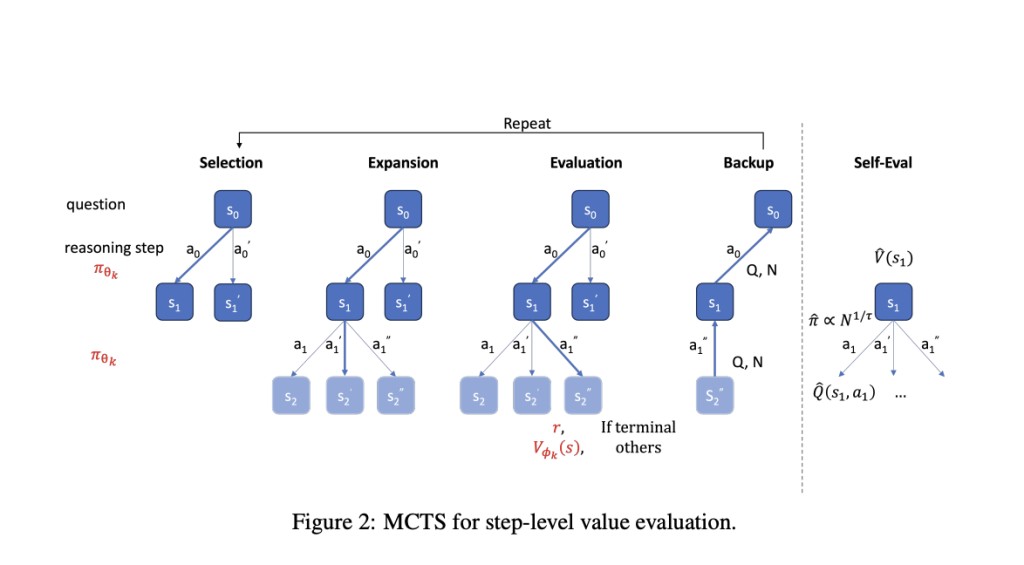

The methodology of AlphaMath hinges on integrating MCTS with a policy model and a value model. Initially, these models use a dataset comprising only questions and their final answers, avoiding detailed solution paths. The MCTS algorithm iteratively develops and evaluates potential solution paths, refining them based on the estimated values from the value model. This continuous process not only generates high-quality training data but also optimizes the model’s problem-solving strategies. The training and evaluation are conducted using the MATH dataset, renowned for its complexity, thereby testing the models’ proficiency under challenging conditions.
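The search loop described above can be sketched on a toy problem. The following Python example is an illustration under stated assumptions, not AlphaMath's implementation: the "question" is a small arithmetic puzzle, `policy_model` is a uniform stand-in for the LLM policy, `value_model` is a hand-written heuristic standing in for the learned value model, and the PUCT selection rule and all constants are choices made for this sketch.

```python
import math

# Toy "question": starting from 1, reach 14 in at most 4 steps using +3 or *2.
# Only the final answer (14) is known, mirroring question/answer-only data.
ACTIONS = {"+3": lambda x: x + 3, "*2": lambda x: x * 2}
START, TARGET, MAX_STEPS = 1, 14, 4

class Node:
    def __init__(self, value, depth, prior, parent=None):
        self.value, self.depth = value, depth
        self.prior, self.parent = prior, parent
        self.children = {}   # action name -> Node
        self.visits = 0
        self.total = 0.0     # sum of values backed up through this node

    def q(self):
        return self.total / self.visits if self.visits else 0.0

    def is_terminal(self):
        return self.value == TARGET or self.depth == MAX_STEPS

def policy_model(node):
    """Stand-in for the LLM policy: a uniform prior over candidate next steps."""
    return {action: 1.0 / len(ACTIONS) for action in ACTIONS}

def value_model(node):
    """Stand-in for the value model: an estimate in [-1, 0], higher when closer."""
    return -min(1.0, abs(TARGET - node.value) / TARGET)

def select_child(node, c_puct=1.5):
    """PUCT rule: trade off the running value estimate against the prior."""
    def score(child):
        explore = c_puct * child.prior * math.sqrt(node.visits) / (1 + child.visits)
        return child.q() + explore
    return max(node.children.values(), key=score)

def simulate(root):
    node = root
    while node.children:                      # 1. selection
        node = select_child(node)
    if node.is_terminal():                    # 2a. check against the known answer
        value = 1.0 if node.value == TARGET else -1.0
    else:                                     # 2b. expansion + value estimate
        for action, prior in policy_model(node).items():
            child_value = ACTIONS[action](node.value)
            node.children[action] = Node(child_value, node.depth + 1, prior, node)
        value = value_model(node)
    while node is not None:                   # 3. backup along the path
        node.visits += 1
        node.total += value
        node = node.parent

def best_path(root):
    """Follow the most-visited child at each level."""
    path, node = [], root
    while node.children:
        action, node = max(node.children.items(), key=lambda kv: kv[1].visits)
        path.append(action)
    return path, node.value

root = Node(START, depth=0, prior=1.0)
for _ in range(200):
    simulate(root)

path, final = best_path(root)
print(path, final)
```

In this sketch the only supervision is the comparison with `TARGET` at terminal nodes; every intermediate step is scored by the value estimate and the statistics backed up through the tree. Paths that end at the correct answer, together with the values accumulated along them, are the kind of signal that could be reused as training data without hand-written solutions.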

Applying MCTS in AlphaMath has yielded significant improvements in the model’s performance on the MATH dataset. Specifically, the enhanced models are reported to reach a solution accuracy exceeding 90% on complex problem sets, an increase over the previously recorded baseline accuracy. These results indicate a substantial advance in the model’s ability to solve intricate mathematical problems autonomously and with few errors. They also support the case that MCTS integration can reduce the need for manual data annotation while maintaining high accuracy and reliability in mathematical reasoning tasks.

To summarize, the research by Alibaba Group introduces a novel approach, AlphaMath, that uses MCTS to enhance large language models’ capabilities in mathematical reasoning. By automating the generation of training data and refining solution paths without manual annotation, this methodology significantly improves model accuracy on complex mathematical problems, as evidenced by its performance on the MATH dataset. This advancement not only reduces the reliance on costly human intervention but also sets a new standard for efficiency and scalability in the development of intelligent computational models.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post This AI Paper by Alibaba Group Introduces AlphaMath: Automating Mathematical Reasoning with Monte Carlo Tree Search appeared first on MarkTechPost.
